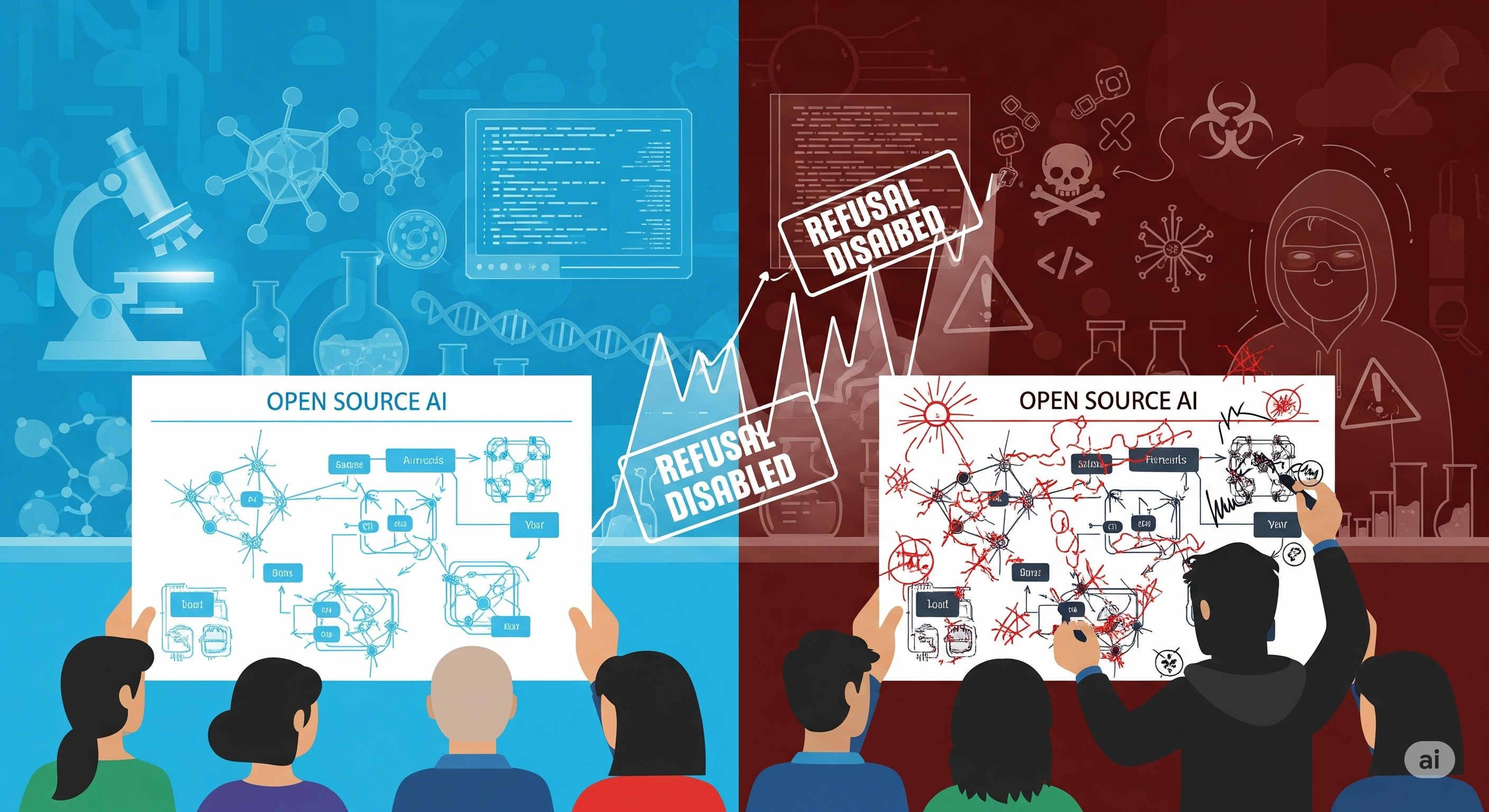

Open-Source, Open Risk? Testing the Limits of Malicious Fine-Tuning

Before OpenAI released its open-weight model gpt-oss, it did something rare: its researchers pretended to be bad actors. This wasn’t an ethical lapse. It was a safety strategy. The team simulated worst-case misuse by fine-tuning gpt-oss to maximize its dangerous capabilities in biology and cybersecurity. They called this process Malicious Fine-Tuning (MFT). And the results offer something the AI safety debate sorely lacks: empirical grounding. ...