The Sims Get Smart? Why LLM-Driven Social Simulations Need a Reality Check

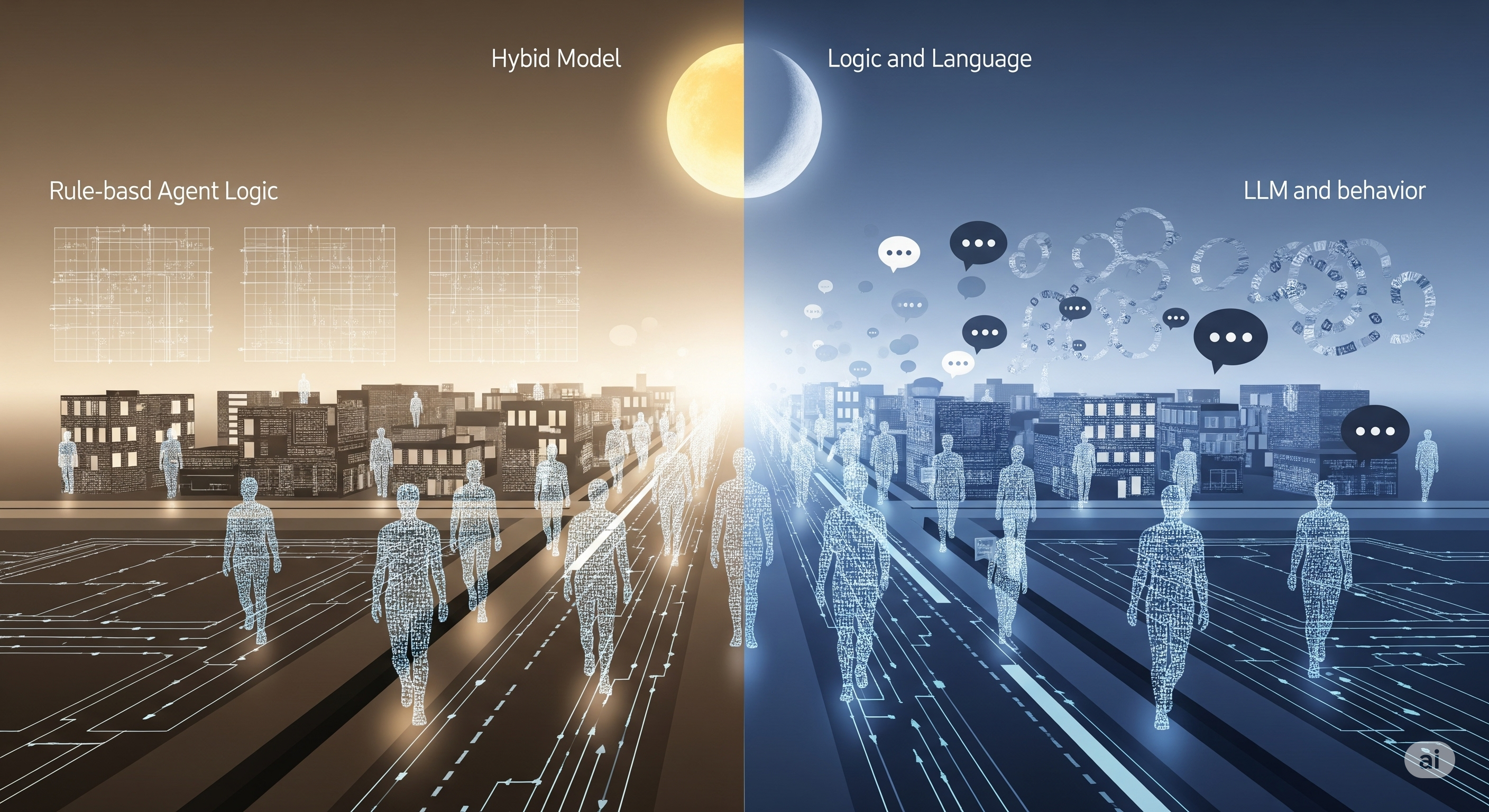

Social simulations are entering their uncanny valley. Built on generative agents powered by Large Language Models (LLMs), recent frameworks like Smallville, AgentSociety, and SocioVerse simulate thousands of lifelike agents forming friendships, spreading rumors, and planning parties. But do these simulations reflect real social processes, or do they merely replay the statistical shadows of the internet?

When Simulacra Speak Fluently

LLMs have demonstrated striking abilities to mimic human behaviors. GPT-4 has passed Theory-of-Mind (ToM) tests at levels comparable to 6- to 7-year-olds. In narrative contexts, it can detect sarcasm, understand indirect requests, and generate empathetic replies. But all of this arises not from embodied cognition or real-world goals; it's just next-token prediction trained on massive corpora. ...