Judo, Not Armor: Strategic Deflection as a New Defense Against LLM Jailbreaks

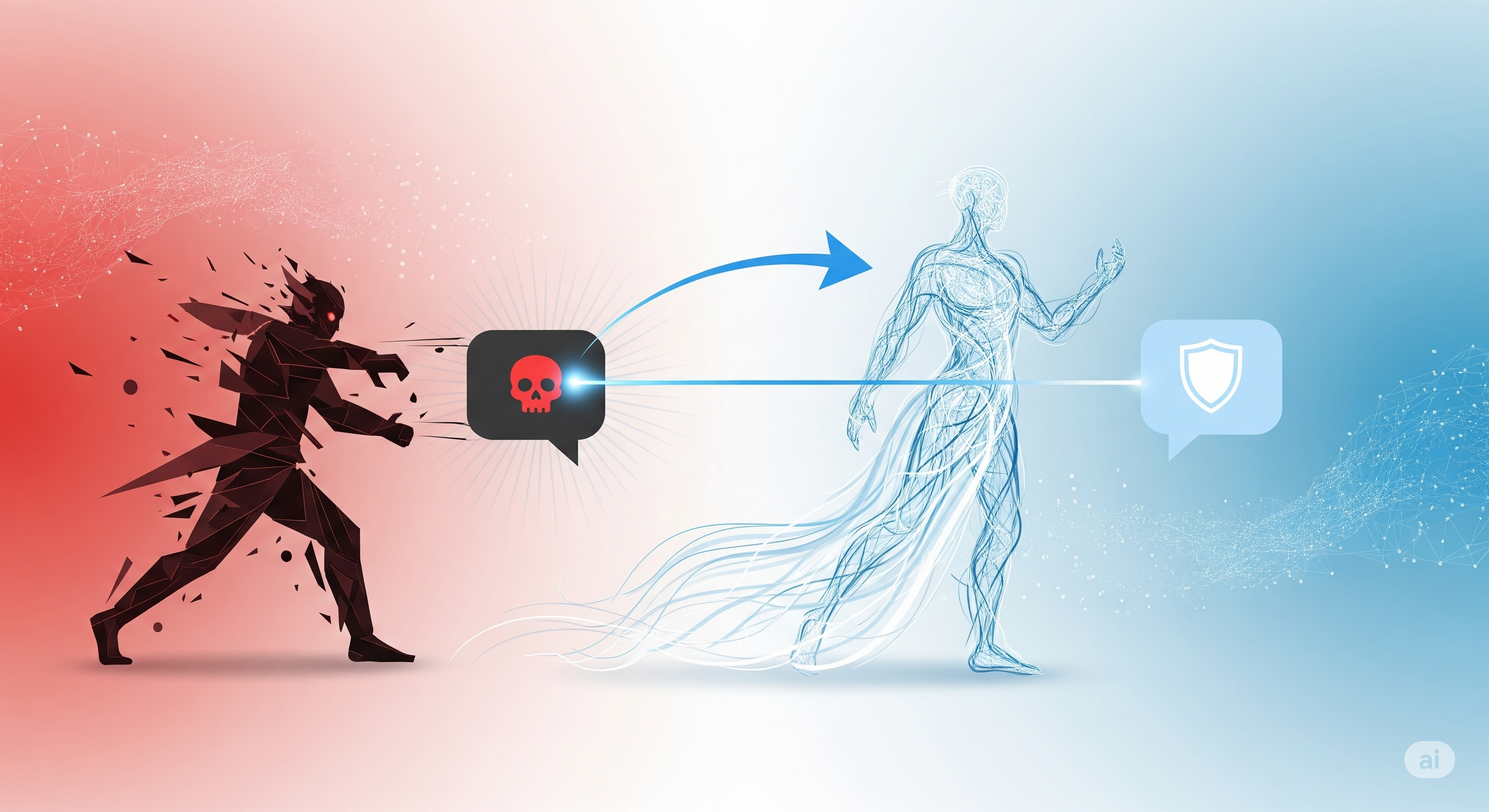

Large language models have come a long way in learning to say “no.” When asked for instructions for illegal acts or harmful behavior, modern LLMs are generally aligned to refuse. But a new class of attacks, logit manipulation, sidesteps this safety net entirely. Rather than tricking the model through prompts, the attacker intervenes after the prompt is processed and modifies token probabilities during generation. This paper introduces Strategic Deflection (SDeflection), a defense that doesn’t rely on refusal at all. Instead, it teaches the model to pivot: providing a safe, semantically adjacent answer that appears cooperative but never fulfills the malicious intent. Think of it not as a shield but as judo: redirecting the force of the attack instead of resisting it head-on. ...
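To make "modifying token probabilities during generation" concrete, here is a minimal toy sketch of a logit bias applied at a single decoding step. Everything in it is an assumption made for illustration: the four-token vocabulary, the logit values, the bias size, and the helper names `softmax` and `apply_logit_bias` are hypothetical and are not taken from the paper.

```python
import math


def softmax(logits):
    """Convert a {token: logit} dict into a {token: probability} dict."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(val - peak) for tok, val in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def apply_logit_bias(logits, bias):
    """Add a per-token offset to the raw logits (tokens not in `bias` are unchanged)."""
    return {tok: val + bias.get(tok, 0.0) for tok, val in logits.items()}


# Hypothetical next-token logits for an aligned model about to refuse.
logits = {"Sorry": 4.0, "I": 2.0, "Sure": 1.0, "Here": 0.5}

# Hypothetical intervention: push down the token that would open a refusal.
bias = {"Sorry": -10.0}

before = softmax(logits)
after = softmax(apply_logit_bias(logits, bias))

top_before = max(before, key=before.get)
top_after = max(after, key=after.get)

print("most likely token before bias:", top_before)
print("most likely token after bias: ", top_after)
```

The prompt never changes in this sketch; only the distribution over the next token does. That is why a defense built solely on emitting a refusal can be bypassed at this level, and why the paper instead trains the model to produce a safe, semantically adjacent answer.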