Stepwise Think-Critique: Teaching LLMs to Doubt Themselves (Productively)

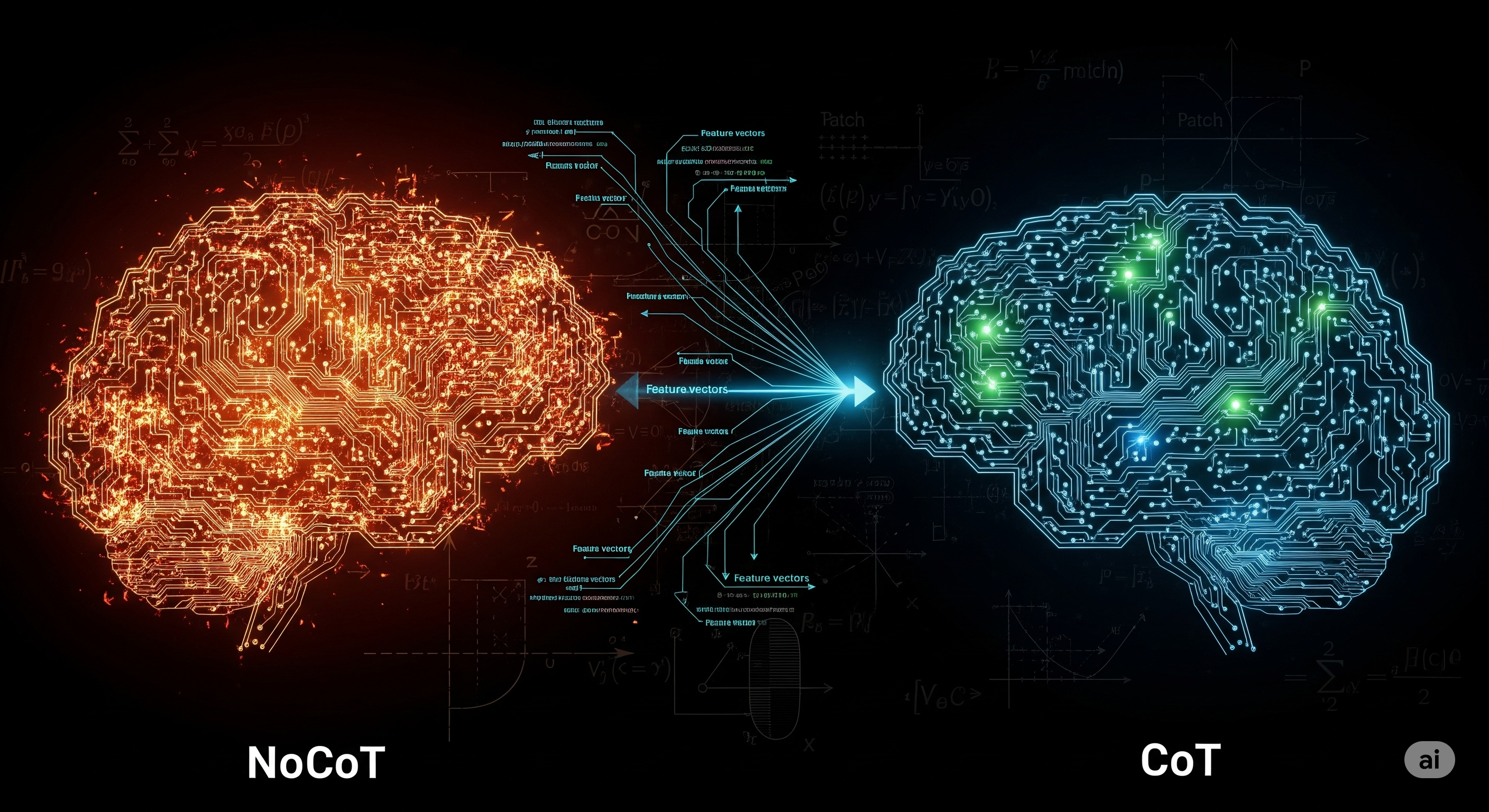

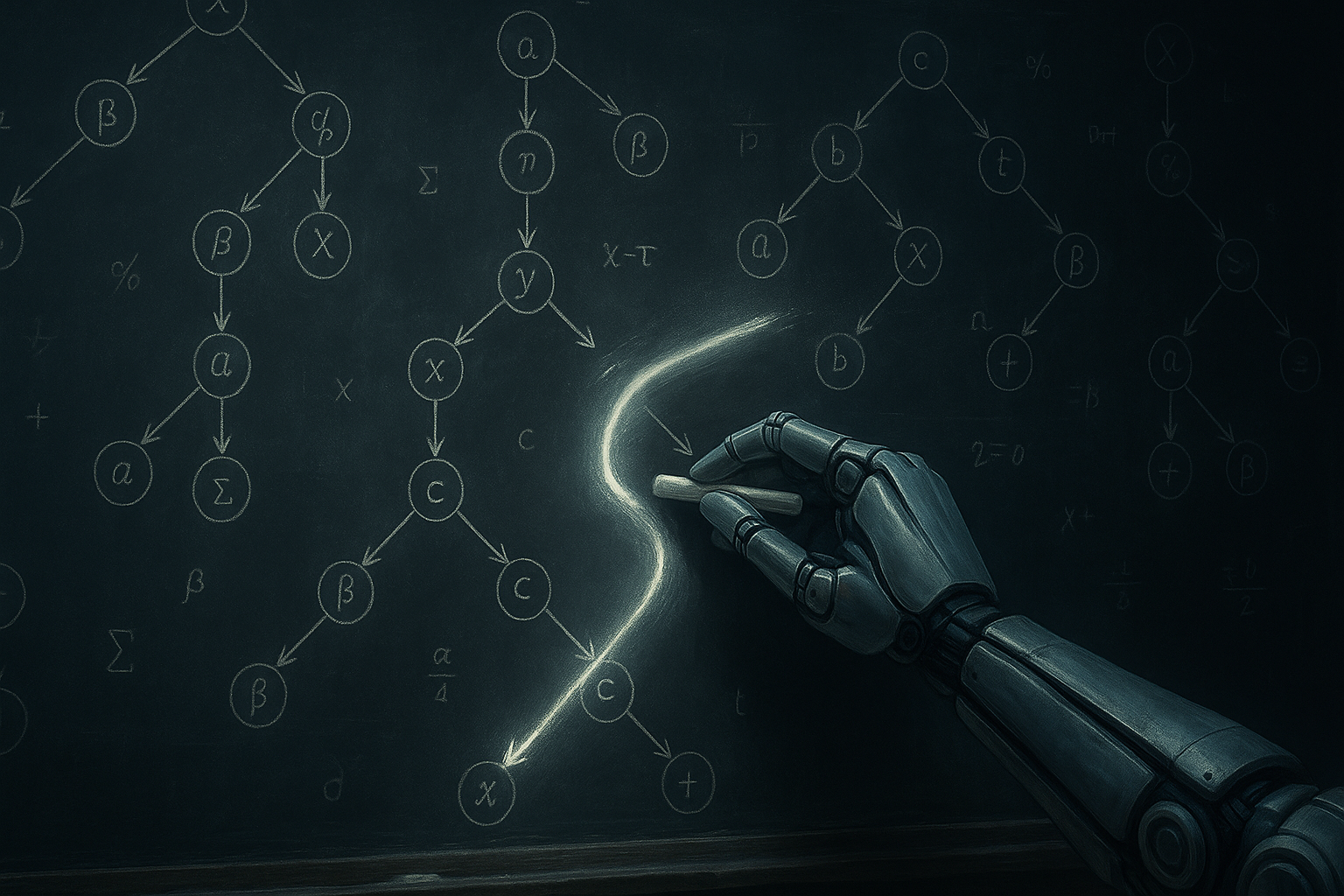

Opening: Why this matters now

Large Language Models have learned how to think out loud. What they still struggle with is knowing when that thinking is wrong while it is happening. In high‑stakes domains like mathematics, finance, or policy automation, delayed error detection is not a feature; it is a liability.

Most modern reasoning pipelines still follow an awkward split: first generate reasoning, then verify it, often with a separate model. Humans do not work this way. We reason and judge simultaneously. This paper asks a simple but uncomfortable question: what if LLMs were trained to do the same? ...