Raising the Bar: Why AI Competitions Are the New Benchmark Battleground

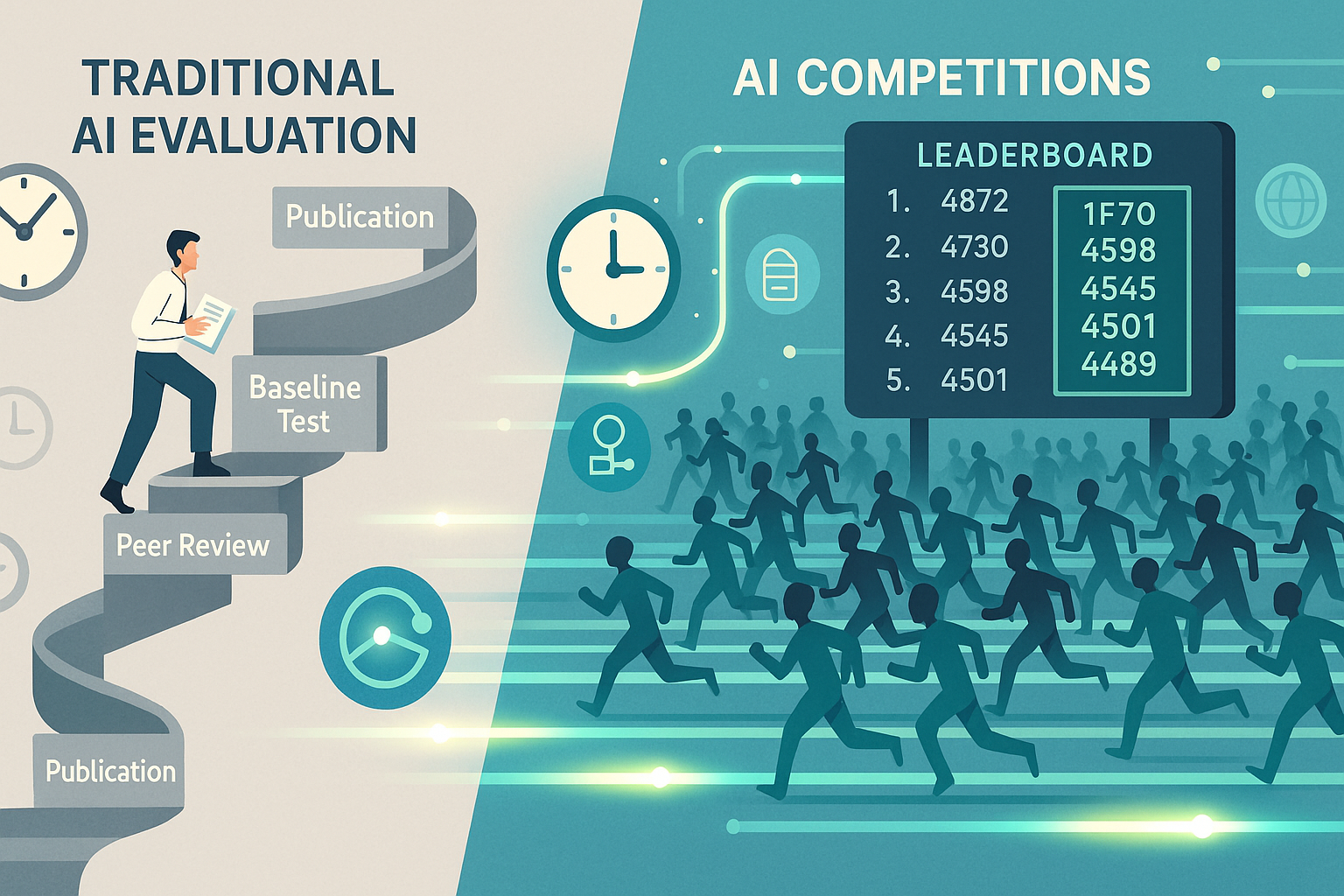

In the rapidly evolving landscape of Generative AI (GenAI), we’ve long relied on static benchmarks (standardized datasets and evaluations) to gauge model performance. But what if the foundation we’re building our trust on is shaky? Static benchmarks often rely on the IID (independent and identically distributed) assumption, under which training and test examples are drawn independently from the same statistical distribution. In such a setting, a model that achieves high accuracy might simply be interpolating between patterns it has already seen rather than truly generalizing. For example, in language modeling, a model might “memorize” dataset-specific templates without capturing transferable reasoning patterns. ...
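To make the IID point concrete, here is a minimal toy sketch, not a real benchmark. Everything in it is an illustrative assumption: the parity task, the hypothetical `MemorizingModel` class, and the two input ranges. The "model" only stores the examples it has seen, so it scores well on a test set drawn from the training range and drops to roughly chance on inputs from a different range.

```python
import random
from collections import Counter


def true_label(x: int) -> int:
    """Toy ground-truth rule (an assumption for this sketch): 1 if even, 0 if odd."""
    return 1 if x % 2 == 0 else 0


class MemorizingModel:
    """Hypothetical 'model' that stores exact training examples and nothing else."""

    def fit(self, xs, ys):
        self.table = dict(zip(xs, ys))
        # Inputs never seen in training fall back to the most common training label.
        self.fallback = Counter(ys).most_common(1)[0][0]
        return self

    def predict(self, x: int) -> int:
        return self.table.get(x, self.fallback)


def accuracy(model, xs) -> float:
    """Fraction of inputs whose prediction matches the ground-truth rule."""
    return sum(model.predict(x) == true_label(x) for x in xs) / len(xs)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Training data: 80 distinct integers from the range 0..99.
train_xs = rng.sample(range(100), 80)
model = MemorizingModel().fit(train_xs, [true_label(x) for x in train_xs])

# IID test set: drawn from the same range as the training data.
iid_test = [rng.randrange(100) for _ in range(1000)]
# Shifted test set: same rule, but inputs from a range never seen in training.
shifted_test = [rng.randrange(1000, 2000) for _ in range(1000)]

iid_acc = accuracy(model, iid_test)
shifted_acc = accuracy(model, shifted_test)
print(f"IID accuracy:     {iid_acc:.2f}")
print(f"Shifted accuracy: {shifted_acc:.2f}")
```

The rule being tested is identical in both test sets; only the input distribution changes. A high score on the IID split therefore says little about whether the parity rule itself was learned.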