Thinking Isn’t Free: Why Chain-of-Thought Hits a Hard Wall

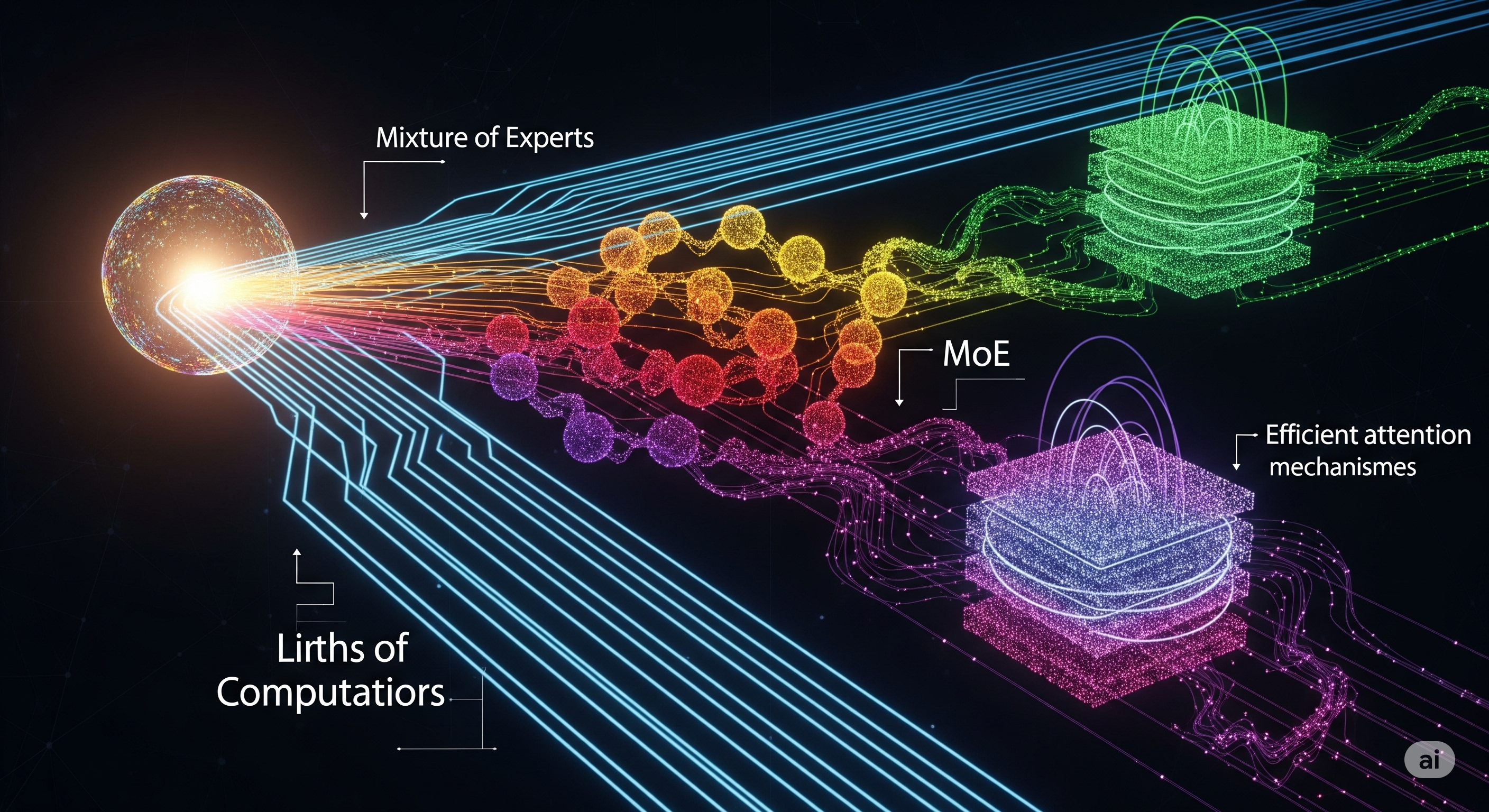

Opening: Why this matters now

Inference-time reasoning has quietly become the dominant performance lever for frontier language models. When benchmarks get hard, we don't retrain; we let models think longer. More tokens, more scratchpad, more compute. The industry narrative is simple: reasoning scales, so accuracy scales. This paper asks an uncomfortable question: how long must a model think, at minimum, as problems grow? And the answer, grounded in theory rather than vibes, is not encouraging. ...