Razor Burn: Why LLMs Nick Themselves on Induction and Abduction

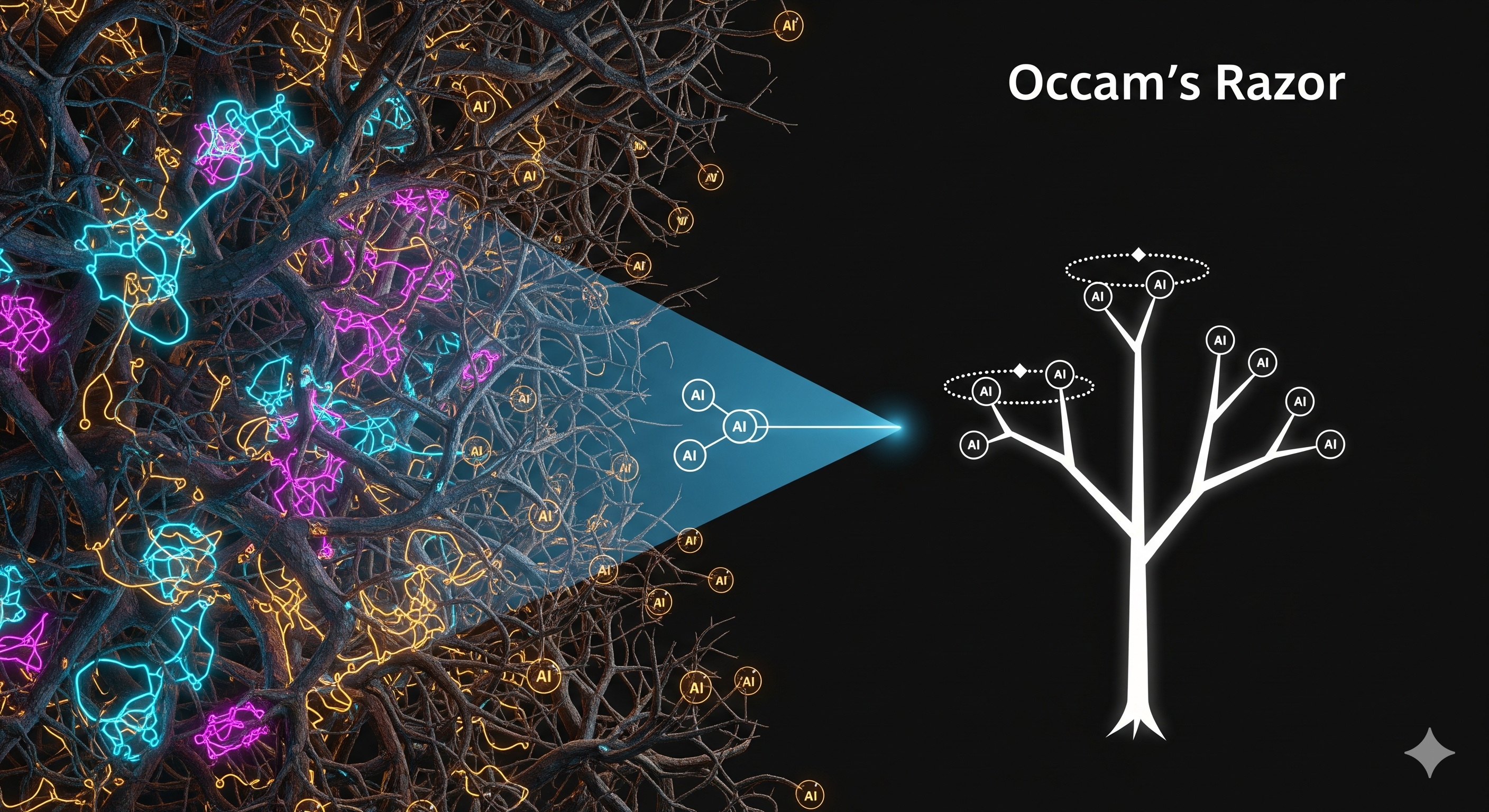

TL;DR A new synthetic benchmark (INABHYD) tests inductive and abductive reasoning under Occam’s Razor. LLMs handle toy cases but falter as ontologies deepen or when multiple hypotheses are needed. Even when models “explain” observations, they often pick needlessly complex or trivial hypotheses—precisely the opposite of what scientific discovery and root-cause analysis require. The Big Idea Most reasoning work on LLMs obsesses over deduction (step-by-step proofs). But the real world demands induction (generalize rules) and abduction (best explanation). The paper introduces INABHYD, a programmable benchmark that builds fictional ontology trees (concepts, properties, subtype links) and hides some axioms. The model sees an incomplete world + observations, and must propose hypotheses that both explain all observations and do so parsimoniously (Occam’s Razor). The authors score: ...