What LLMs Remember—and Why: Unpacking the Entropy-Memorization Law

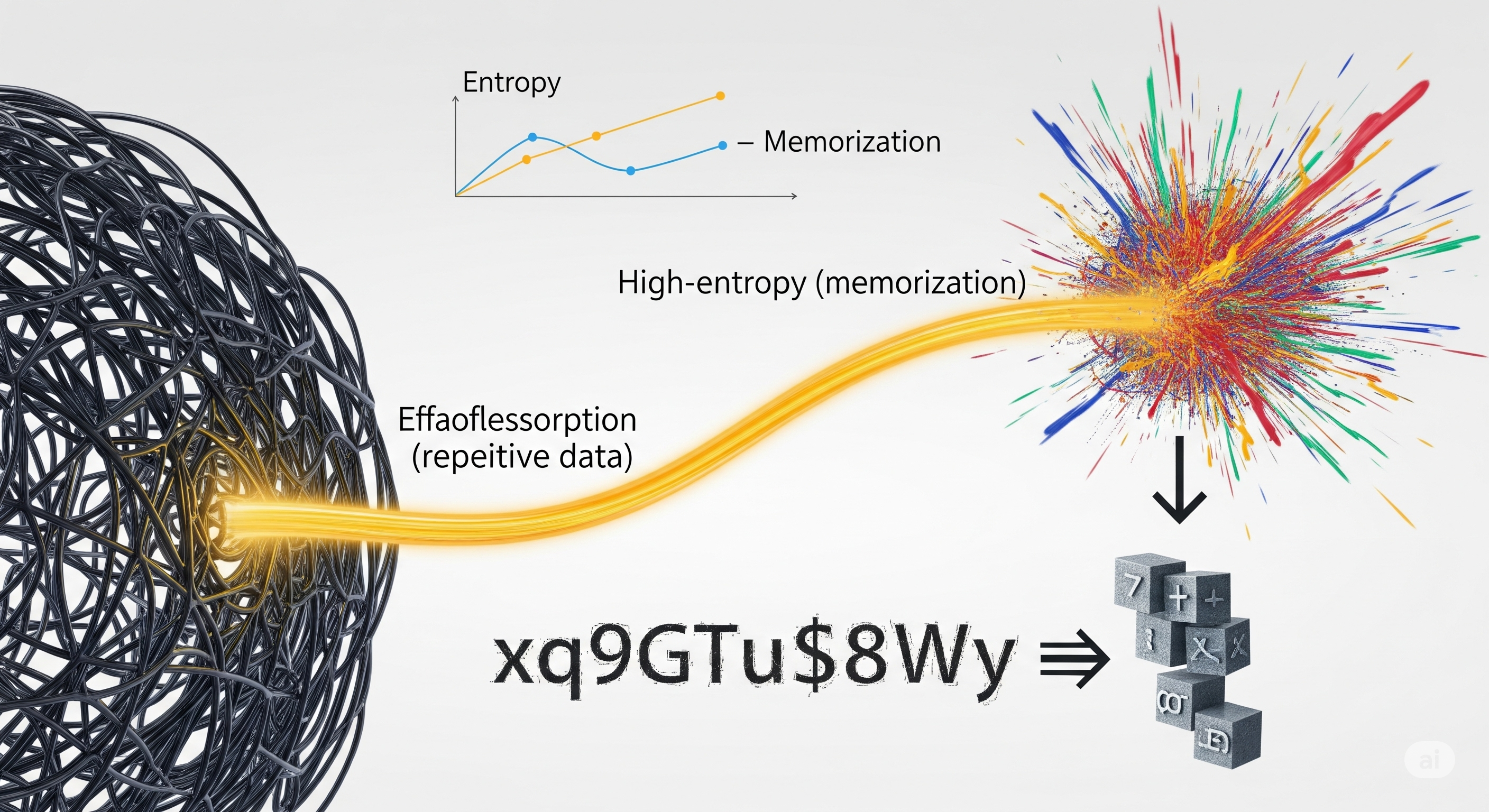

The best kind of privacy leak is the one you can measure. A recent paper by Huang et al. introduces a deceptively simple but powerful principle—the Entropy-Memorization Law—that allows us to do just that. It claims that the entropy of a text sequence is strongly correlated with how easily it’s memorized by a large language model (LLM). But don’t mistake this for just another alignment paper. This law has concrete implications for how we audit models, design prompts, and build privacy-aware systems. Here’s why it matters. ...