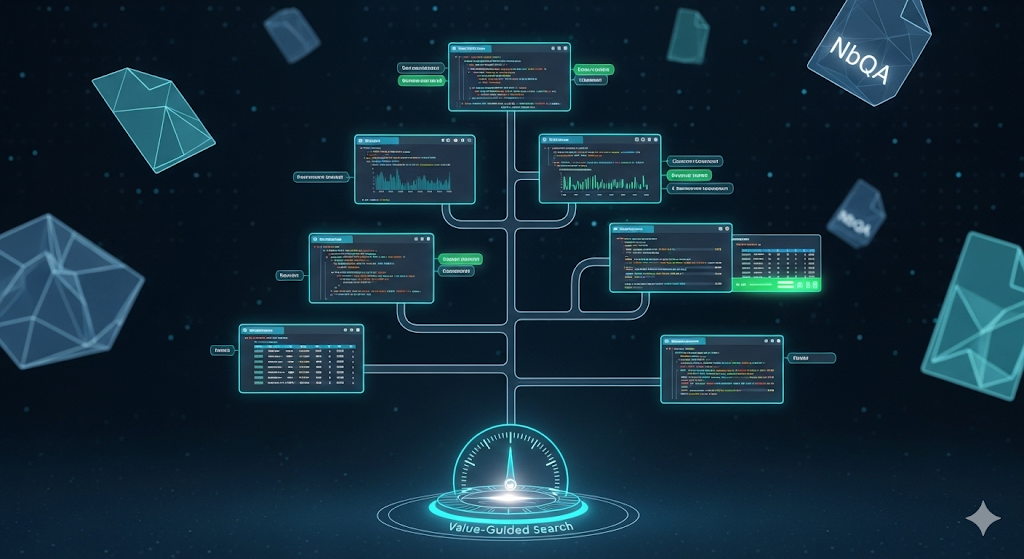

TL;DR

Why this paper matters: It shows that how you search matters more than how big your model is for multi-step, tool-using analytics. With a notebook-grounded dataset (NbQA) and value-guided search, mid-size open models rival GPT-4o-based agents on a leading data-analysis benchmark.

What’s new: (1) NbQA, a large corpus of real Jupyter tasks with executable multi-step solutions; (2) JUPITER, a planner that treats analysis as a tree search over “thought → code → output” steps, guided by a learned value model.

Why you should care (operator’s view): This blueprint turns flaky “Code Interpreter”-style sessions into repeatable playbooks: fewer dead ends, more auditable steps, and better generalization without paying for the biggest model.

The core idea: analytics as a search tree

Most LLM data-analysis failures come from branching mistakes: choosing the wrong intermediate step, compounding errors, and wasting tool calls. JUPITER reframes the whole exercise as search over notebook states. Each node is a concrete state: accumulated thoughts, code, and execution outputs. The system expands only a few promising branches and prunes the rest using a value model trained on successful and failed trajectories.
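To make the search framing concrete, here is a minimal sketch of value-guided expansion over "thought → code → output" states. It is a plain best-first search with per-node pruning, not necessarily the algorithm the paper uses. Every name in it (`Step`, `value_guided_search`, `propose`, `value`, `is_solved`, `beam_width`) is hypothetical, and the toy arithmetic task and hand-written scoring function stand in for real notebook execution and for a learned value model.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Step:
    """One notebook step: the reasoning, the code, and what running it produced."""
    thought: str
    code: str
    output: str


# A search node is the full history of steps taken so far.
State = Tuple[Step, ...]


def value_guided_search(
    root: State,
    propose: Callable[[State], Iterable[Step]],
    value: Callable[[State], float],
    is_solved: Callable[[State], bool],
    beam_width: int = 2,
    max_expansions: int = 50,
) -> Optional[State]:
    """Best-first search that keeps only the top `beam_width` children per node."""
    tie_breaker = itertools.count()  # ensures heapq never has to compare states
    frontier = [(-value(root), next(tie_breaker), root)]
    for _ in range(max_expansions):
        if not frontier:
            return None
        _, _, state = heapq.heappop(frontier)  # highest-value state first
        if is_solved(state):
            return state
        children = [state + (step,) for step in propose(state)]
        children.sort(key=value, reverse=True)
        for child in children[:beam_width]:  # prune the low-value branches
            heapq.heappush(frontier, (-value(child), next(tie_breaker), child))
    return None


# --- Toy stand-ins (assumptions, not the paper's components) ---
TARGET = 10


def current_total(state: State) -> int:
    # The "execution output" of the last step; the empty notebook starts at 1.
    return int(state[-1].output) if state else 1


def propose(state: State) -> Iterable[Step]:
    # Stand-in for an LLM proposing candidate next steps and a kernel running them.
    x = current_total(state)
    candidates = [
        ("add three", "x + 3", x + 3),
        ("double it", "x * 2", x * 2),
        ("subtract one", "x - 1", x - 1),
    ]
    for thought, code, result in candidates:
        yield Step(thought, code, str(result))


def value(state: State) -> float:
    # Stand-in for a learned value model: closer to the target scores higher.
    return -abs(TARGET - current_total(state))


def is_solved(state: State) -> bool:
    return current_total(state) == TARGET


solution = value_guided_search((), propose, value, is_solved)
if solution is not None:
    for step in solution:
        print(f"{step.thought}: {step.code} -> {step.output}")
```

The returned state is the full step history, which is what makes a run auditable: each entry records the thought, the code, and the output that led to the answer. In a real system, `propose` would call a model and execute code in a notebook kernel, and `value` would be a trained scorer rather than a distance heuristic.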

...