Routing the Brain: Why Smarter LLM Orchestration Beats Bigger Models

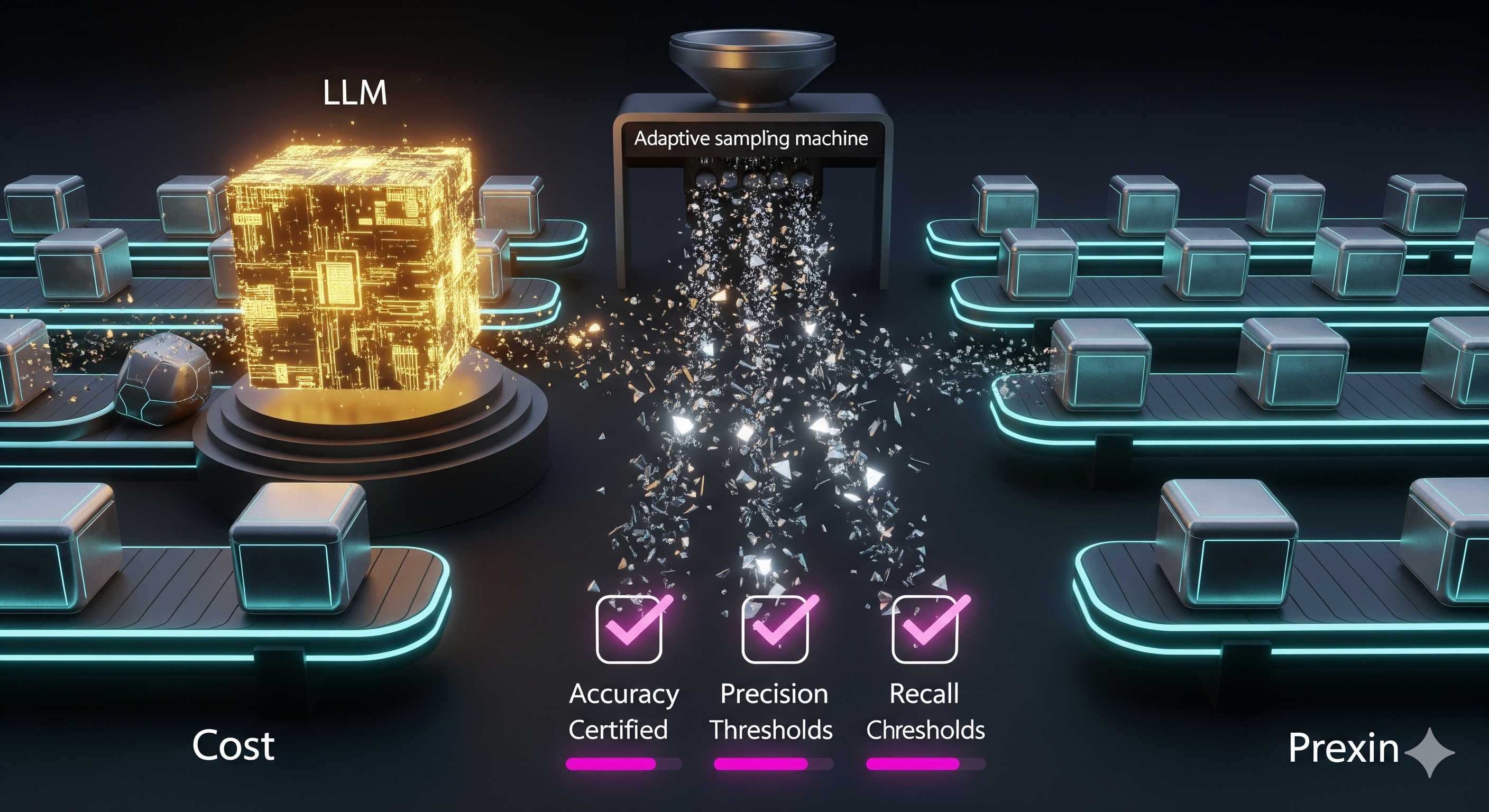

Opening: Why this matters now

As large language models quietly slide from novelty to infrastructure, a less glamorous question has become existential: who pays the inference bill? Agentic systems amplify the problem. A single task is no longer a single prompt; it is a chain of reasoning steps, retries, tool calls, and evaluations. Multiply that by production scale, and cost becomes the bottleneck long before intelligence does. ...