Edge Cases Matter: Teaching Drones to See the Small Stuff

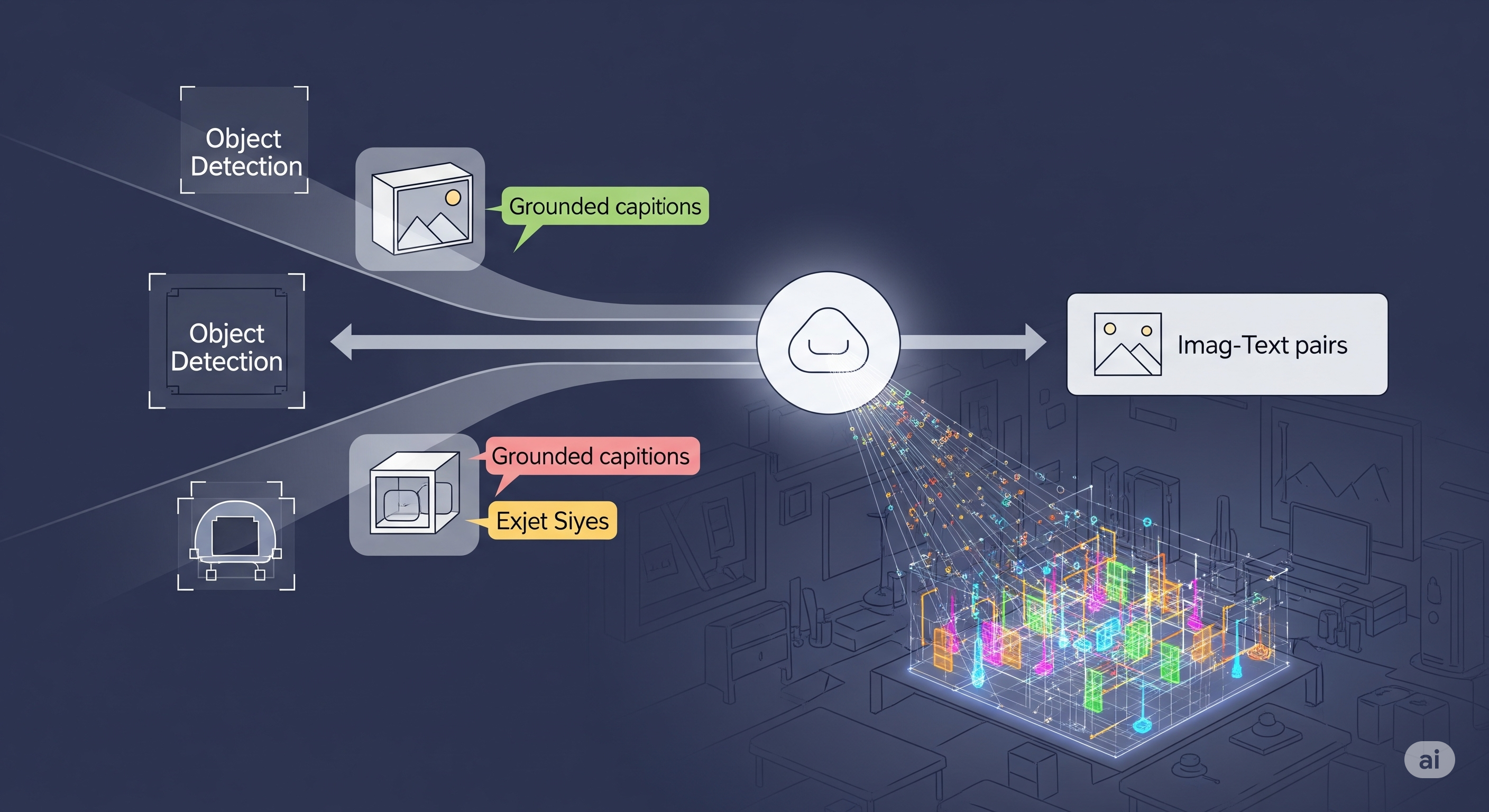

Opening: Why this matters now

Drones have learned to fly cheaply, see broadly, and deploy everywhere. What they still struggle with is something far less glamorous: noticing small things that actually matter. In aerial imagery, most targets of interest (vehicles, pedestrians, infrastructure details) occupy only a handful of pixels. Worse, they arrive blurred, partially occluded, and embedded in visually noisy backgrounds. Traditional object detectors, even highly optimized YOLO variants, are structurally biased toward medium and large objects. Small objects are the first casualties of depth, pooling, and aggressive downsampling. ...