Opening: Why this matters now

The fintech industry is an alphabet soup of acronyms and compliance clauses. For a large language model (LLM), it’s a minefield of misunderstood abbreviations, half-specified processes, and siloed documentation that lives in SharePoint purgatory. Yet financial institutions are under pressure to make sense of their internal knowledge securely, locally, and accurately.

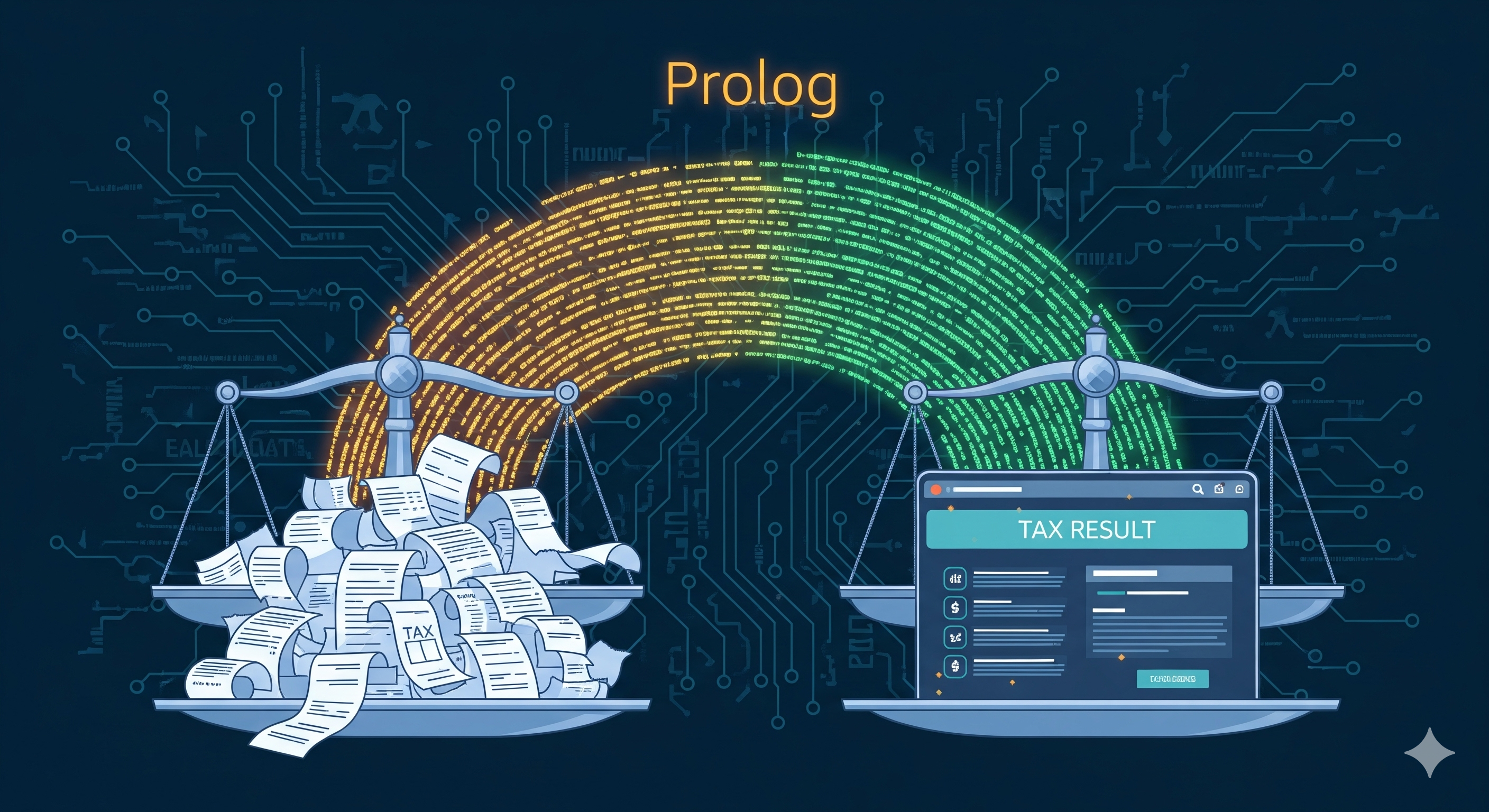

Retrieval-Augmented Generation (RAG), the method of grounding LLM outputs in retrieved context, has emerged as the go-to approach. But as Mastercard’s recent research shows, standard RAG pipelines choke on the reality of enterprise fintech: fragmented data, undefined acronyms, and role-based access control. The paper, *Retrieval-Augmented Generation for Fintech: Agentic Design and Evaluation*, proposes a modular, multi-agent redesign that turns RAG from a passive retriever into an active, reasoning system.

...