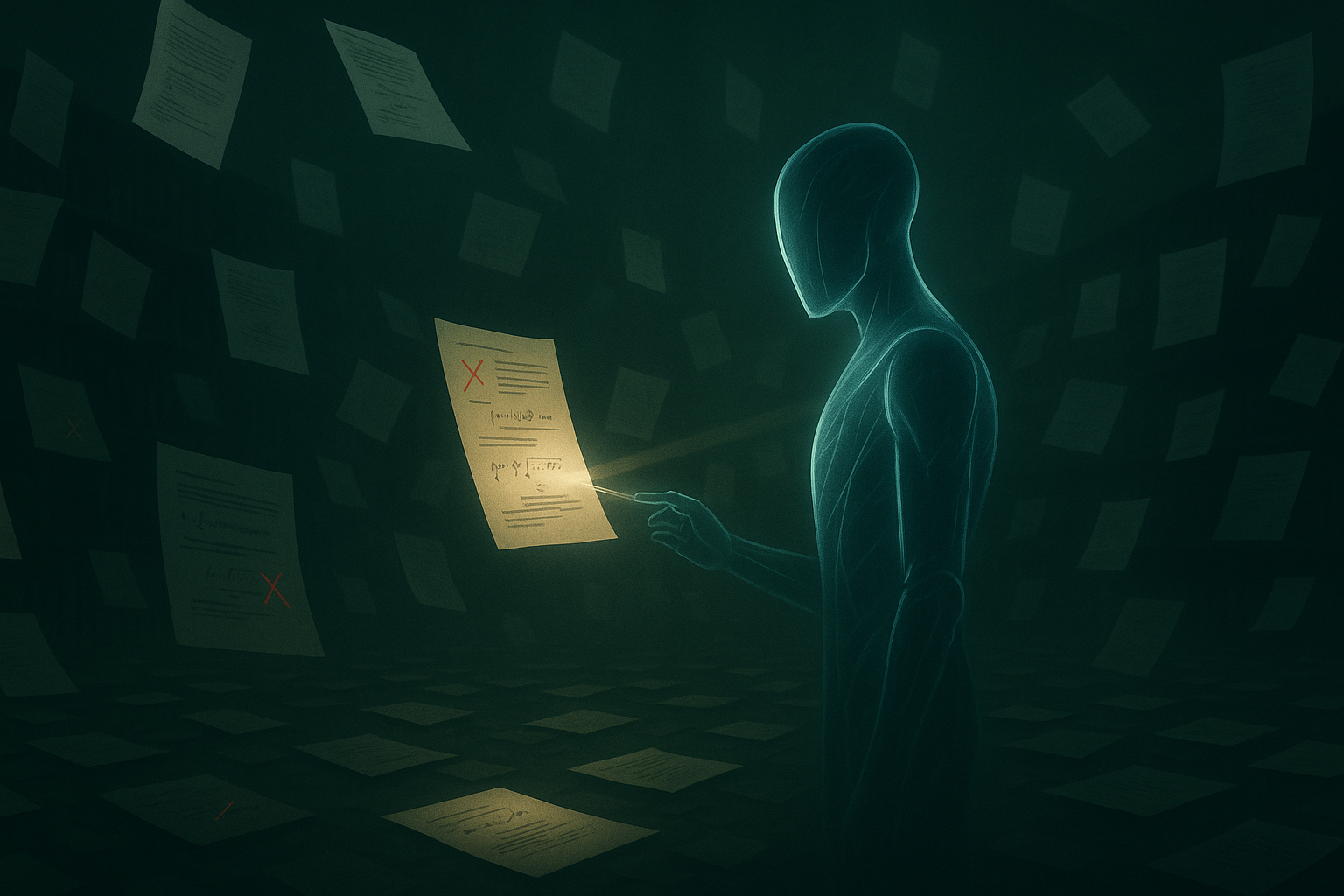

Error 404: Peer Review Not Found — How LLMs Are Quietly Rewriting Scientific Quality Control

Opening: Why this matters now

The AI research ecosystem is sprinting, not strolling. Submissions to ICLR alone ballooned from 1,013 in 2018 to nearly 20,000 in 2026, a growth curve that would make even the wildest crypto bull market blush. Yet the peer‑review system evaluating these papers… did not scale. The inevitable happened: errors slipped through, and then multiplied. ...
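For a rough sense of scale, the arithmetic behind that curve is below. It treats "nearly 20,000" as exactly 20,000, which is an assumption, so the results are slight overestimates. It also assumes eight years of compounding between the 2018 and 2026 conferences:

$$
\frac{20{,}000}{1{,}013} \approx 19.7,
\qquad
\text{CAGR} = \left(\frac{20{,}000}{1{,}013}\right)^{1/8} - 1 \approx 0.45
$$

That works out to roughly a twentyfold increase, or about 45% more submissions every year, compounded. A reviewer pool growing at anything like a normal academic pace cannot keep up with that.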