Hook, Line, and Import: How RAG Lets Attackers Snare Your Code

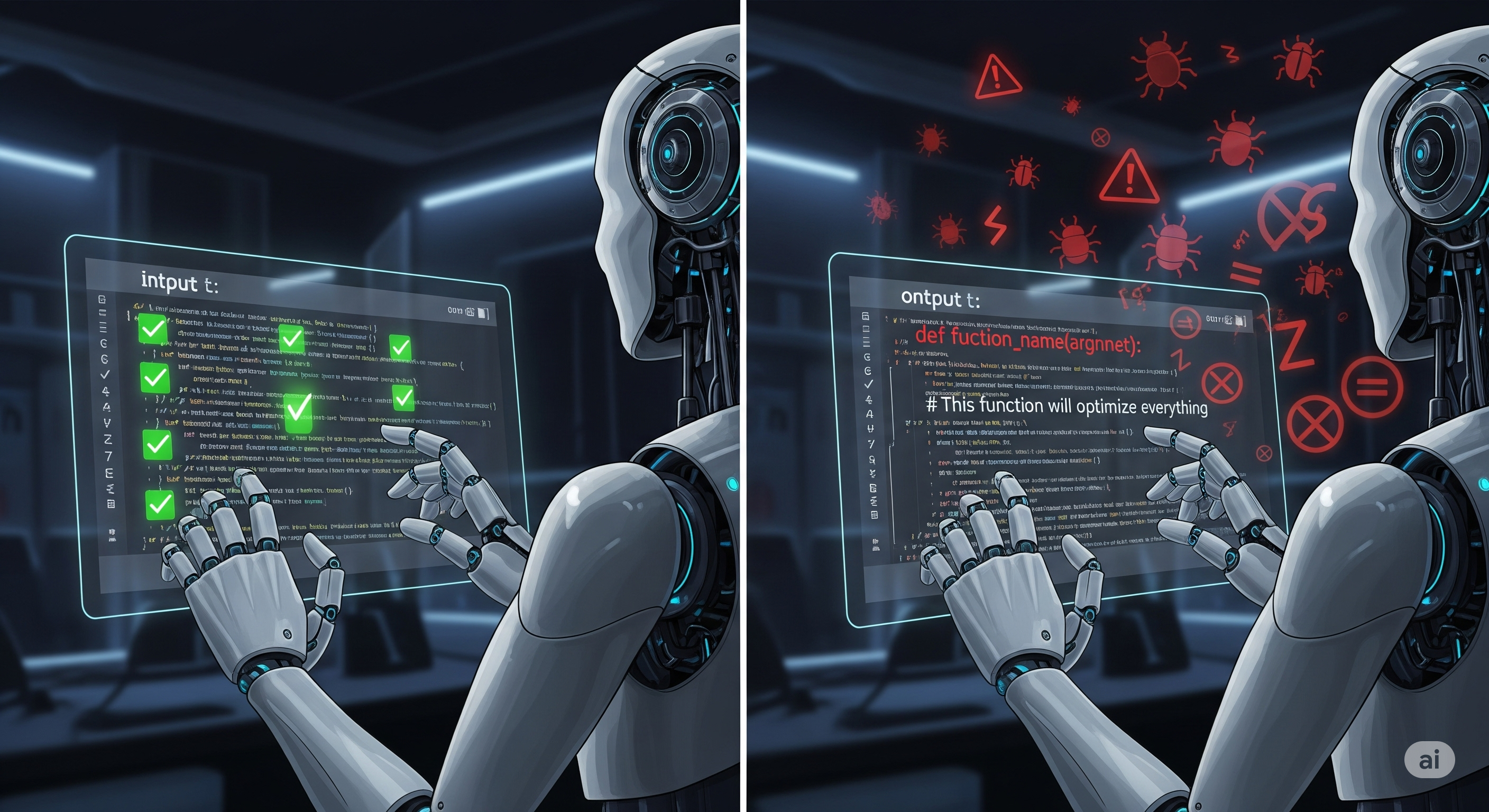

LLM code assistants are now the default pair‑programmer. Many teams tried to make them safer by bolting on RAG, feeding official docs to keep generations on the rails. ImportSnare shows that the very doc pipeline we trusted can be weaponized to push malicious dependencies into your imports. Below, I unpack how the attack works, why it generalizes across languages, and what leaders should change this week vs. this quarter.

The core idea in one sentence

Attackers seed your doc corpus with retrieval‑friendly snippets and LLM‑friendly suggestions so that, when your assistant writes code, it confidently imports a look‑alike package (e.g., pandas_v2, matplotlib_safe) that you then dutifully install. ...
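To make the look‑alike import problem concrete, here is a minimal sketch of a pre‑install check on assistant‑generated Python. It is not part of ImportSnare or any particular tool. The `APPROVED` allowlist, the function names, and the "approved name plus underscore suffix" heuristic are all assumptions chosen for illustration; a real team would more plausibly derive the allowlist from its lockfile or internal package index.

```python
import ast
import sys

# Hypothetical allowlist of approved third-party top-level modules (assumption).
APPROVED = {"pandas", "numpy", "matplotlib", "requests"}

# Standard-library module names; sys.stdlib_module_names exists on Python 3.10+,
# so fall back to an empty set on older interpreters.
STDLIB = getattr(sys, "stdlib_module_names", frozenset())


def top_level_imports(source):
    """Return the top-level module names imported anywhere in `source`."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are skipped.
            names.add(node.module.split(".")[0])
    return names


def flag_suspicious(source, approved=APPROVED):
    """Map each unapproved, non-stdlib import to the approved name it mimics.

    The value is None when the import is merely unknown rather than a
    look-alike of an approved package.
    """
    findings = {}
    for name in sorted(top_level_imports(source) - set(approved) - set(STDLIB)):
        # Simple heuristic (assumption): an approved name followed by "_suffix".
        lookalike = next(
            (ok for ok in sorted(approved) if name.startswith(ok + "_")), None
        )
        findings[name] = lookalike
    return findings


generated = (
    "import pandas_v2 as pd\n"
    "from matplotlib_safe import pyplot as plt\n"
    "import numpy as np\n"
)
report = flag_suspicious(generated)
for module, mimics in report.items():
    print(f"review before installing: {module} (resembles: {mimics})")
```

The check parses the code rather than grepping it, so aliased imports such as `import pandas_v2 as pd` are still caught. It only inspects names; it says nothing about whether an unfamiliar package is actually malicious, so a flagged import is a prompt for human review, not a verdict.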