From Text to Motion: How Manimator Turns Dense Papers into Dynamic Learning

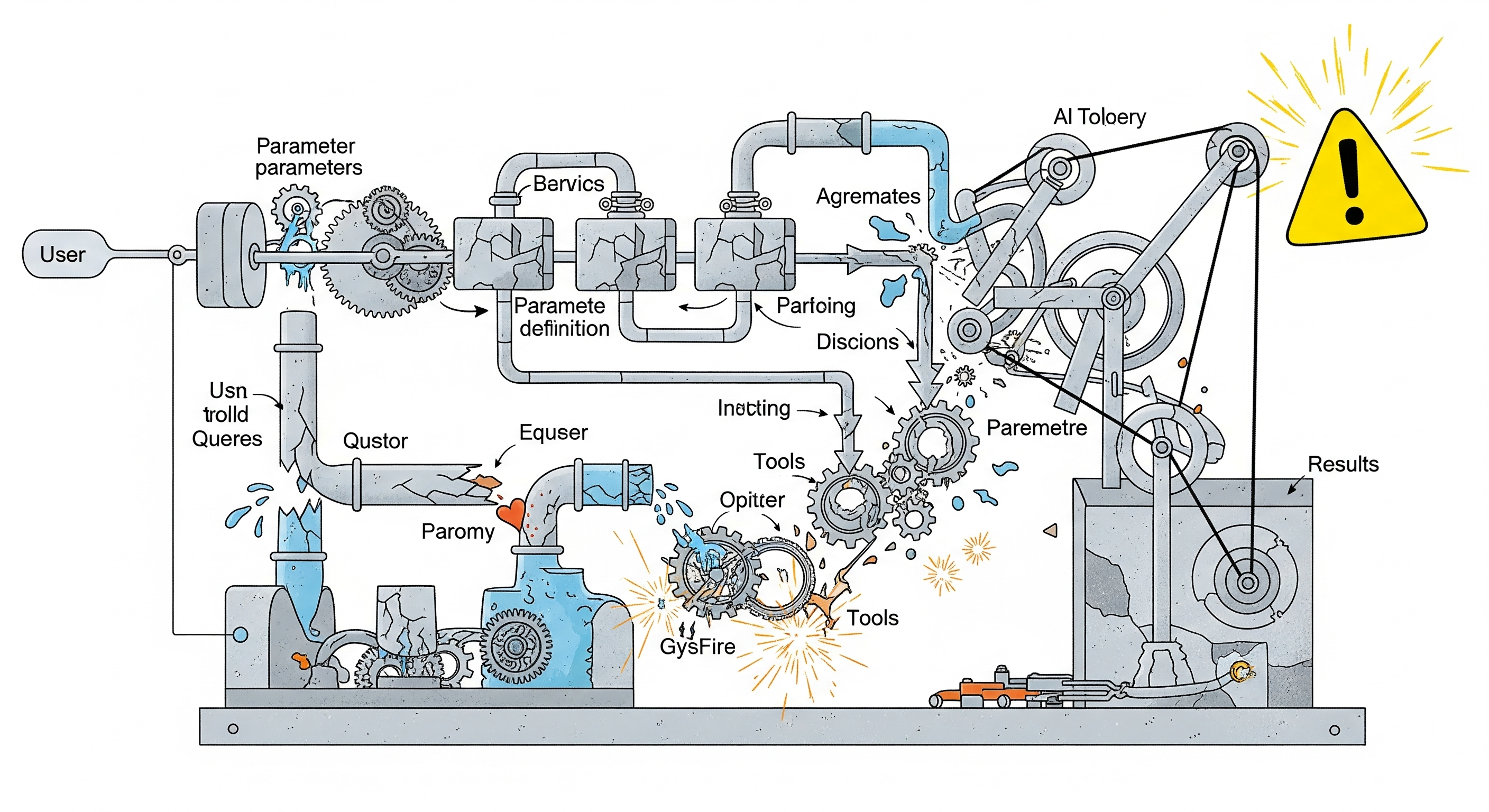

Scientific communication has always suffered from the tyranny of static text. Even the most revolutionary ideas are too often entombed in dense LaTeX or buried in 30-page PDFs, which makes comprehension an uphill battle. But what if your next paper, or internal training doc, could explain itself through animation? Enter Manimator, a new system that uses Large Language Models (LLMs) to transform research papers and STEM concepts into animated videos rendered with the Manim engine. Think of it as a pipeline from paragraph to pedagogical movie, one that requires no coding or animation skills from the user. ...