When Data Comes in Boxes: Why Hierarchies Beat Sample Hoarding

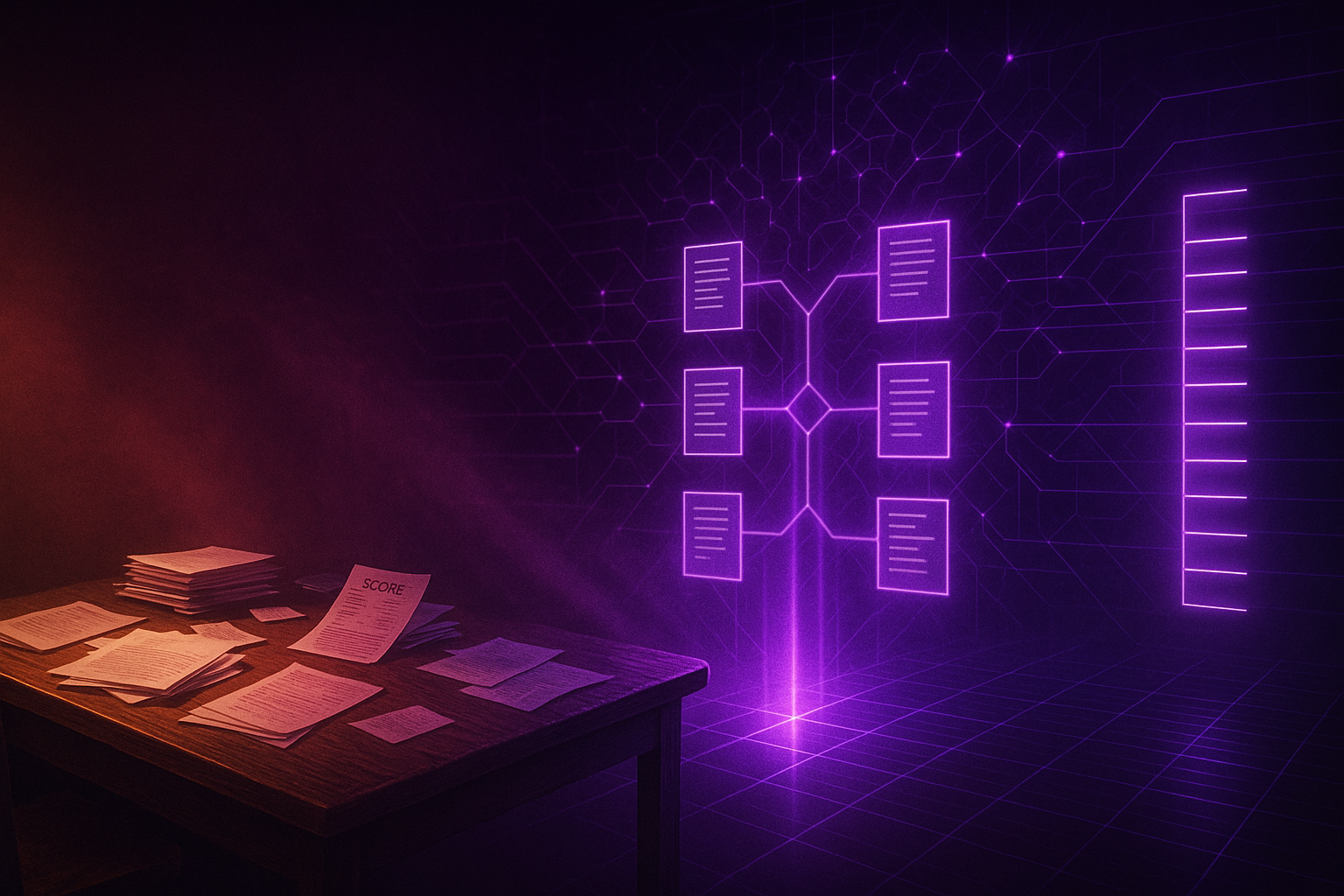

Opening: Why this matters now

Modern machine learning has a data problem that money can’t easily solve: abundance without discernment. Models are no longer starved for samples; they are overwhelmed by datasets (entire repositories, institutional archives, and web-scale collections), most of which are irrelevant, redundant, or quietly harmful. Yet the industry still behaves as if data arrives as loose grains of sand. In practice, data arrives in boxes: datasets bundled by source, license, domain, and institutional origin. Selecting the right boxes is now the binding constraint. ...