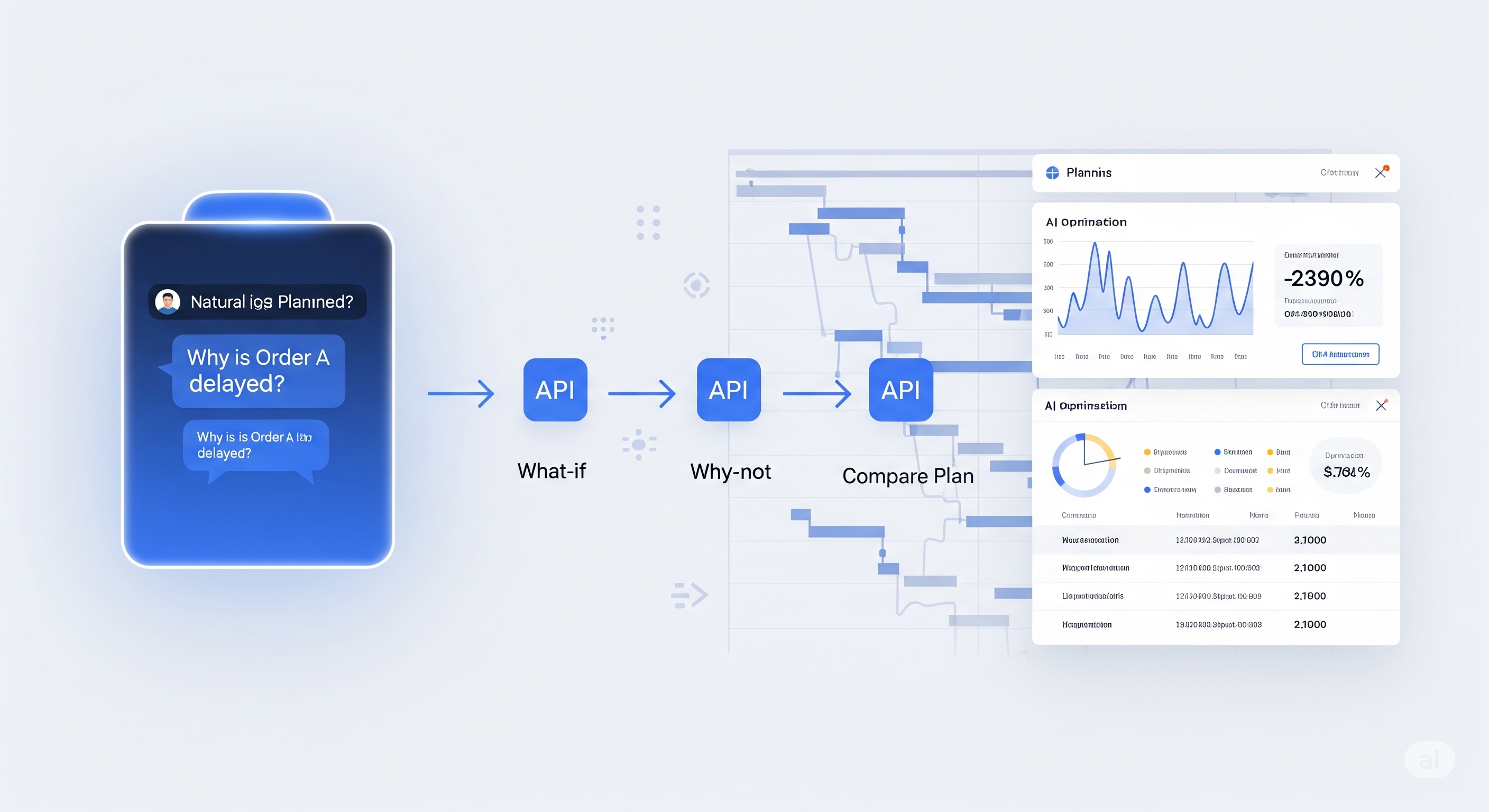

Planners, Meet Your Smart Sidekick

Imagine asking, “Why wasn’t Order A scheduled for production yesterday?” and getting not just an answer but a causal breakdown, an alternative plan, and a visual comparison, all without involving your operations research (OR) consultant. That’s what SMARTAPS delivers. Built by Huawei researchers, SMARTAPS is a tool-augmented LLM interface for interacting with Advanced Planning Systems (APS) in natural language. It doesn’t try to replace optimization solvers; it makes them accessible. In doing so, it redefines how planners interact with complex decision-making models. ...
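The paragraph above does not describe SMARTAPS's internals, so the following is only a rough illustration of the general tool-augmented pattern it names. In this pattern, a language model decides which tool to call, and the tool, not the model, does the planning work. Everything in the sketch is hypothetical. The `Order` type is invented for the example. The greedy `plan_day` routine is a toy stand-in for a real optimization solver. `explain_unscheduled` is a made-up explanation tool. The keyword-based `route` function stands in for the LLM's tool-call decision.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Order:
    name: str
    due_day: int  # earlier due day = considered first in this toy plan
    hours: int    # production hours the order needs


def plan_day(orders: List[Order], capacity_hours: int) -> Tuple[List[str], Dict[str, int]]:
    """Toy stand-in for a solver: fill one day's capacity by earliest due date.

    Returns the scheduled order names and, for each skipped order,
    how many hours were still free when it was considered.
    """
    scheduled: List[str] = []
    skipped: Dict[str, int] = {}
    used = 0
    for order in sorted(orders, key=lambda o: (o.due_day, o.name)):
        if used + order.hours <= capacity_hours:
            scheduled.append(order.name)
            used += order.hours
        else:
            skipped[order.name] = capacity_hours - used
    return scheduled, skipped


def explain_unscheduled(order_name: str, orders: List[Order], capacity_hours: int) -> str:
    """Hypothetical tool: explain why an order was left out of the day's plan."""
    scheduled, skipped = plan_day(orders, capacity_hours)
    if order_name not in skipped:
        return f"{order_name} was scheduled."
    needed = next(o.hours for o in orders if o.name == order_name)
    return (f"{order_name} needed {needed}h but only {skipped[order_name]}h of capacity "
            f"remained; the day went to {', '.join(scheduled)}.")


# Registry of tools the (stubbed) language model may call.
TOOLS: Dict[str, Callable[..., str]] = {"explain_unscheduled": explain_unscheduled}


def route(question: str) -> Tuple[str, Dict[str, str]]:
    """Stub for the LLM: map a question to a tool name and arguments.

    A real tool-augmented system would have the model emit this tool call;
    here a simple keyword rule plays that role.
    """
    words = question.rstrip("?").split()
    if words and words[0].lower() == "why" and "Order" in words[:-1]:
        idx = words.index("Order")
        return "explain_unscheduled", {"order_name": f"Order {words[idx + 1]}"}
    raise ValueError("no matching tool for this question")


def answer(question: str, orders: List[Order], capacity_hours: int) -> str:
    """Route the question to a tool and return the tool's explanation."""
    tool_name, args = route(question)
    return TOOLS[tool_name](orders=orders, capacity_hours=capacity_hours, **args)
```

For example, with `orders = [Order("Order A", 3, 5), Order("Order B", 1, 4), Order("Order C", 2, 3)]` and 8 hours of capacity, `answer("Why wasn't Order A scheduled for production yesterday?", orders, 8)` returns an explanation that Order A needed 5h but only 1h remained after Order B and Order C. The design point is the division of labor: the routing layer only chooses a tool and fills in its arguments, while the planning logic and the numbers in the explanation come from the deterministic planning code.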