Thinking Twice: Why Making AI Argue With Itself Actually Works

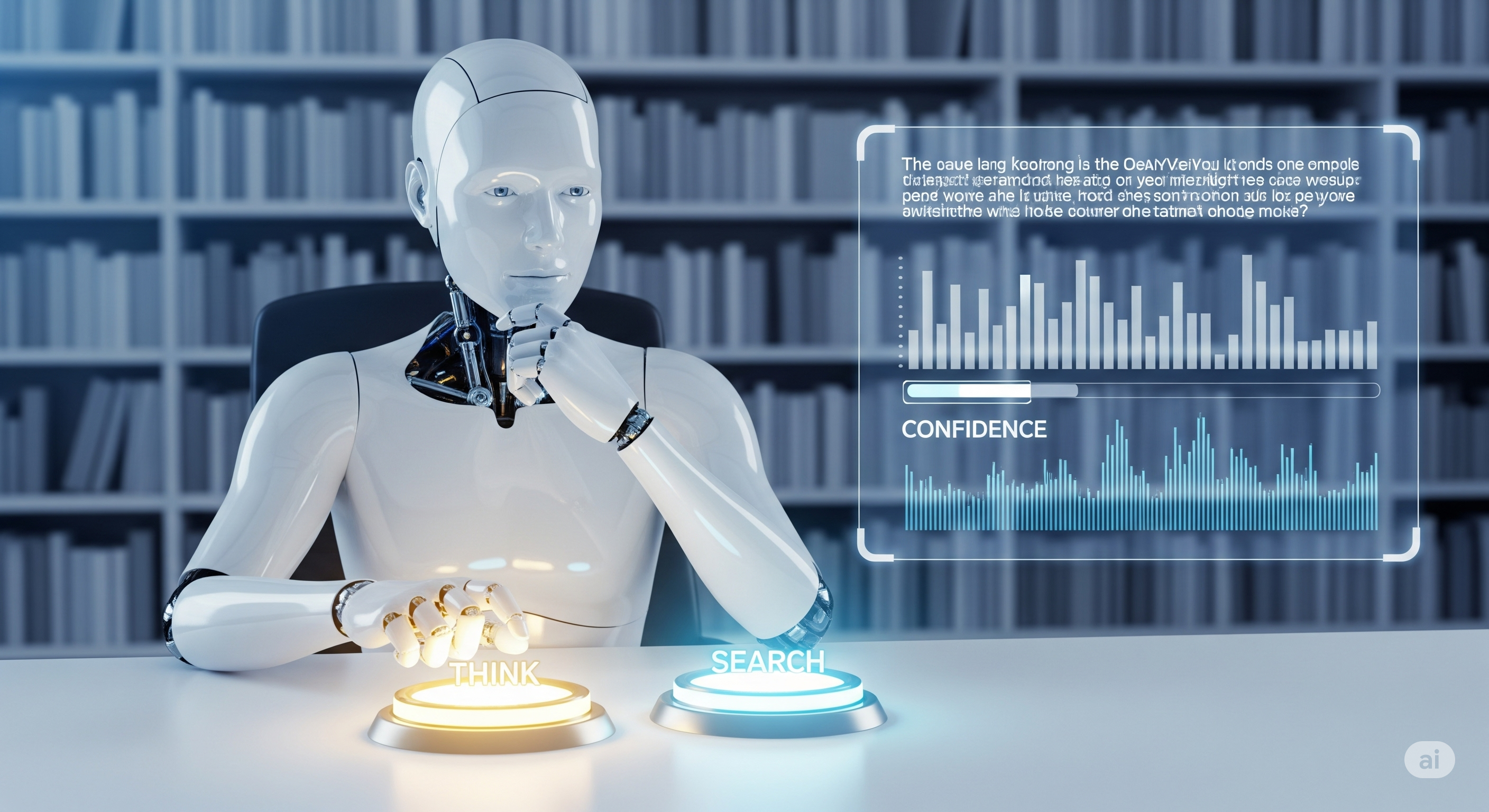

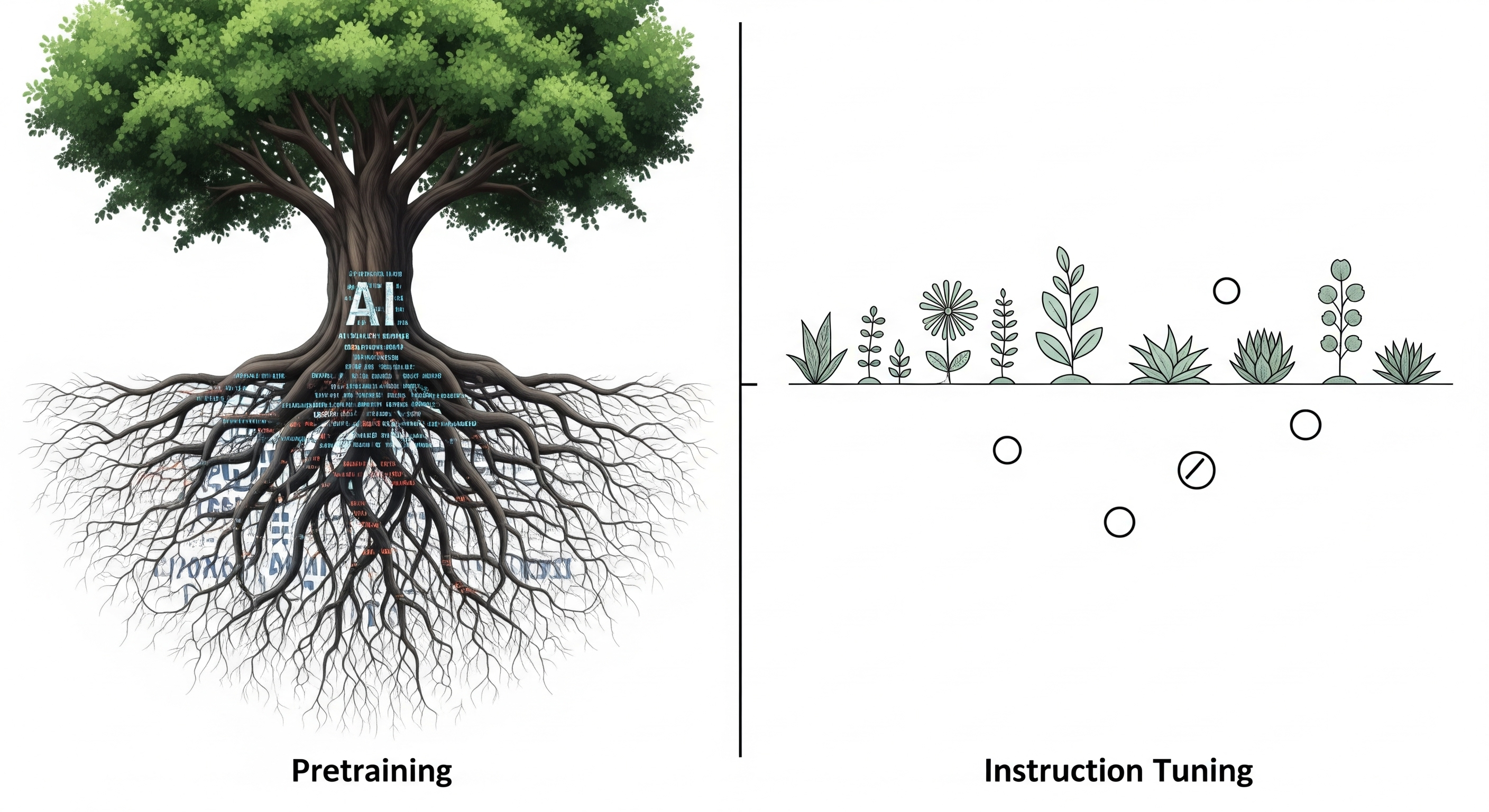

Opening: Why this matters now

Multimodal large language models (MLLMs) are everywhere: vision-language assistants, document analyzers, agents that claim to see, read, and reason simultaneously. Yet anyone who has deployed them seriously knows an awkward truth: they often say confident nonsense, especially when images are involved. The paper behind this article tackles an uncomfortable but fundamental question: what if the problem isn’t a lack of data or scale, but a mismatch between how models generate answers and how they understand them? The proposed fix is surprisingly philosophical: let the model contradict itself, on purpose. ...