Guardrails Before Gas: Secure Plan‑Then‑Execute Agents for Real Work

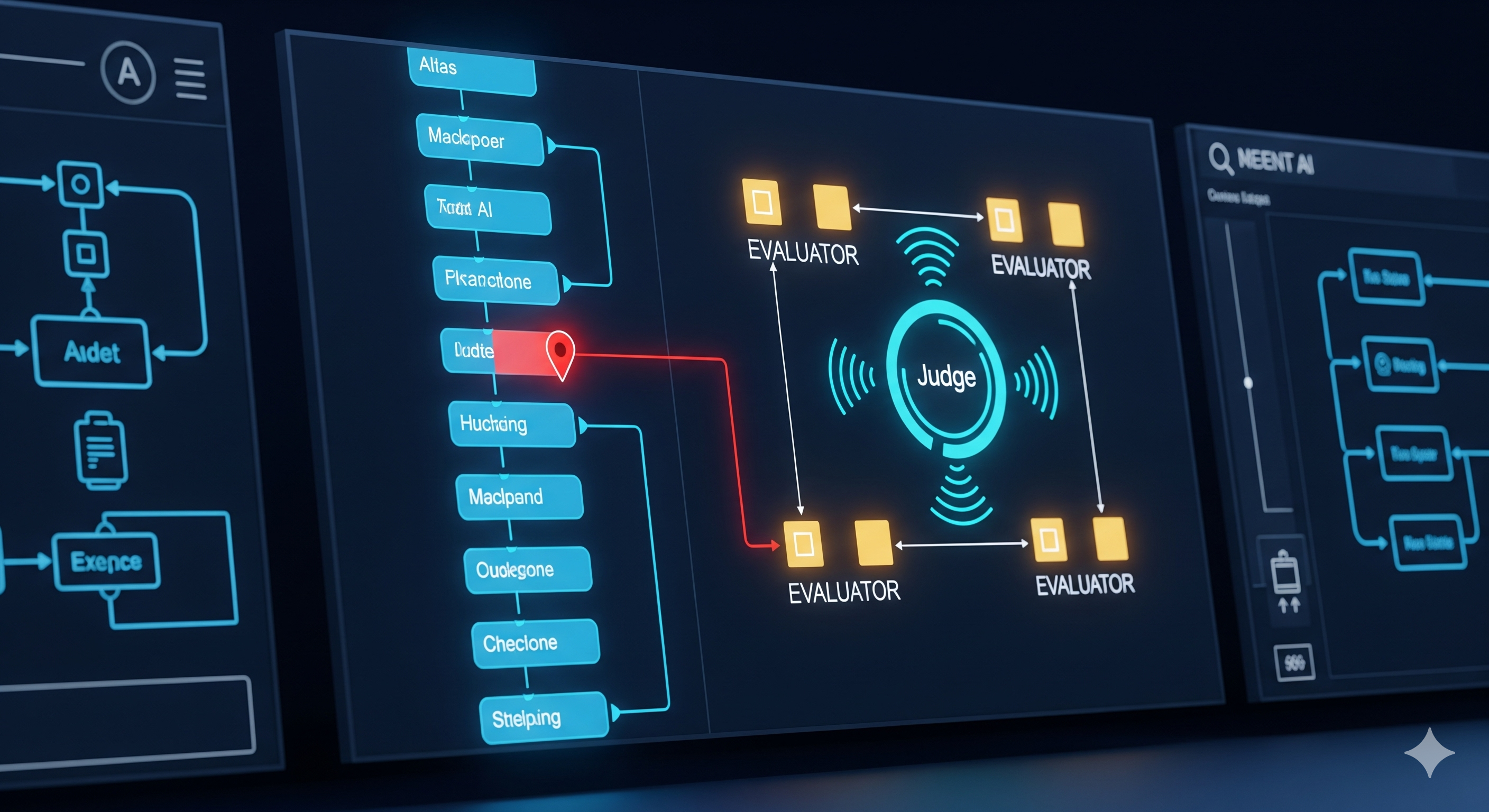

TL;DR Plan‑then‑Execute (P‑t‑E) agents separate strategy from action: a Planner writes a machine‑readable plan; an Executor carries it out. This simple split dramatically improves predictability, cost control, and—crucially—security. Hardened correctly (least‑privilege tools, sandboxed code, human sign‑offs), P‑t‑E becomes an enterprise‑grade pattern rather than a lab demo. Why today’s agents need a spine, not vibes Reactive patterns like ReAct feel nimble because they “think, act, observe, repeat.” But that short‑horizon loop is exactly what makes them fragile in production: they meander, retry the same failing step, and are easy to hijack by indirect prompt injection embedded in web pages or PDFs. P‑t‑E locks the control‑flow before the agent ingests untrusted data. The plan becomes an auditable artifact and the execution stage can be cheap, parallel, and tightly permissioned. ...