Fast Minds, Cheap Thinking: How Predictive Routing Cuts LLM Reasoning Costs

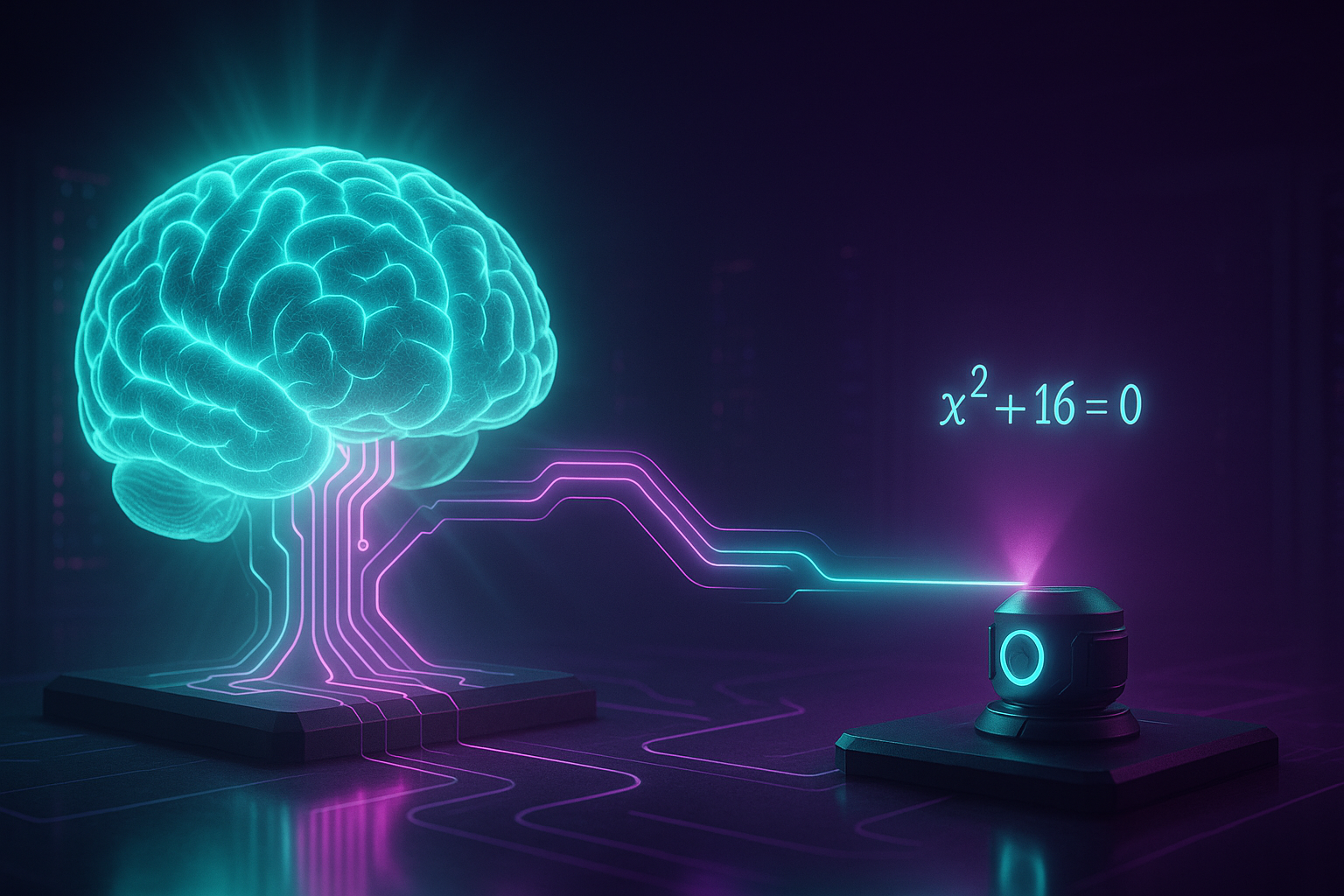

Opening: Why this matters now

Large reasoning models like GPT-5 and s1.1-32B can solve Olympiad-level problems, but they are computational gluttons. Running them for every query, from basic arithmetic to abstract algebra, is like sending a rocket to fetch groceries. As reasoning models become mainstream in enterprise automation, the question is no longer “Can it reason?” but “Should it reason this hard?” ...