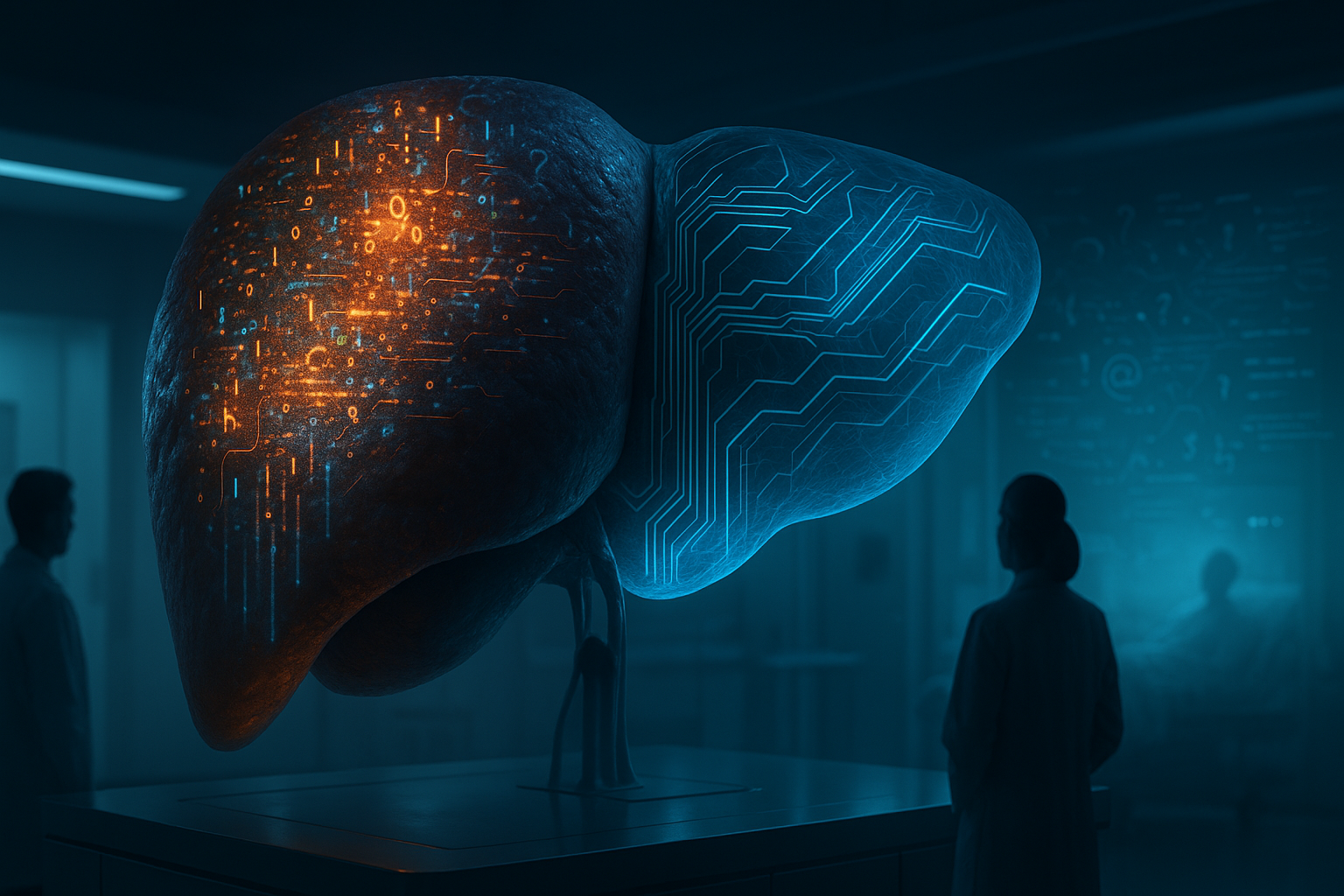

When LLMs Get Fatty Liver: Diagnosing AI-MASLD in Clinical AI

Opening: Why this matters now

AI keeps passing medical exams, acing board-style questions, and politely explaining pathophysiology on demand. Naturally, someone always asks the dangerous follow-up: So… can we let it talk to patients now? This paper answers that question with clinical bluntness: not without supervision, and certainly not without consequences. When large language models (LLMs) are exposed to raw, unstructured patient narratives (the kind doctors hear every day), their performance degrades in a very specific, pathological way. The authors call it AI-MASLD: AI–Metabolic Dysfunction–Associated Steatotic Liver Disease. ...