Opening — Why this matters now

AI keeps passing medical exams, acing board-style questions, and politely explaining pathophysiology on demand. Naturally, someone always asks the dangerous follow-up: So… can we let it talk to patients now?

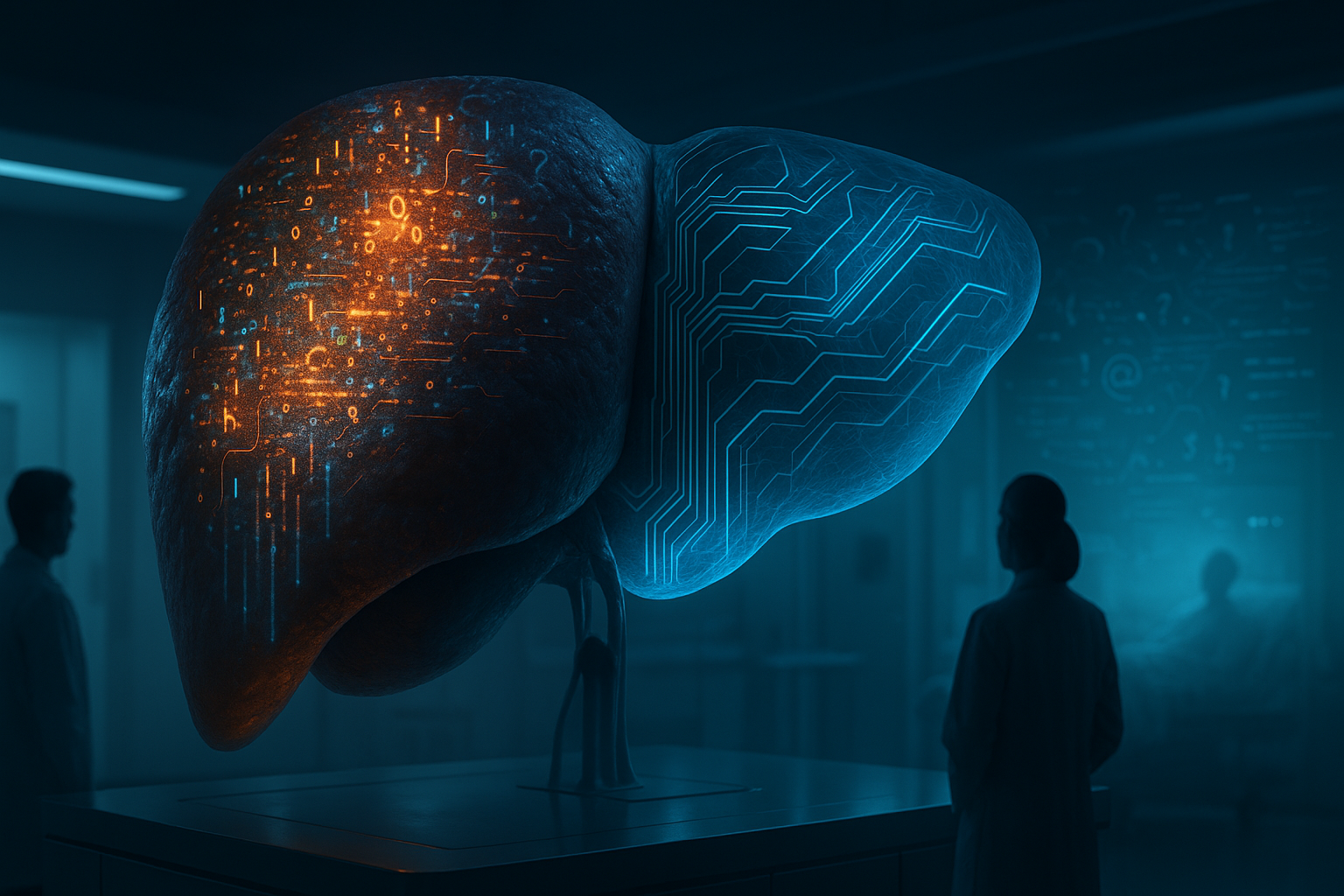

This paper answers that question with clinical bluntness: not without supervision, and certainly not without consequences. When large language models (LLMs) are exposed to raw, unstructured patient narratives—the kind doctors hear every day—their performance degrades in a very specific, pathological way. The authors call it AI-MASLD: AI–Metabolic Dysfunction–Associated Steatotic Liver Disease.

It’s not a joke metaphor. It’s an operational warning.

Background — From textbook medicine to bedside chaos

Most medical AI benchmarks quietly cheat. They evaluate models on:

- Exam-style multiple choice questions

- Cleaned electronic health records

- Doctor-written case summaries

In other words, these are secondary interpretations that clinicians have already filtered. Real patients don’t speak like that. They ramble. They contradict themselves. They mix fear, irrelevant life details, folk beliefs, and half-remembered diagnoses into a single breathless paragraph.

Human doctors are trained—over years—to metabolize this chaos. LLMs are not.

The paper reframes this mismatch using a medical analogy:

Just as the liver fails under chronic metabolic overload, LLMs exhibit functional decline when overwhelmed by high-noise clinical narratives.

Hence: AI-MASLD.

Analysis — What the paper actually did

The study stress-tested four mainstream LLMs:

- GPT-4o

- Gemini 2.5

- DeepSeek 3.1

- Qwen3-Max

Each model faced 20 clinically designed “medical probes”, scored double-blind by senior clinicians. The probes simulated real consultation challenges across five dimensions:

- Noise filtering

- Priority triage

- Contradiction detection

- Fact–emotion separation

- Timeline reconstruction

Lower scores meant better performance. No images. No lab data. Just language—raw and messy.

In short: this wasn’t a trivia test. It was a bedside stress test.
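To make the scoring concrete, here is a minimal Python sketch of how penalty-style totals could be aggregated. It rests on assumptions the article does not confirm: that each probe is assigned to one of the five dimensions, and that each probe carries a 0 to 4 penalty (20 probes with an 80-point maximum is consistent with that scale but does not prove it). The names `total_score`, `per_dimension`, and `MAX_PENALTY_PER_PROBE` are hypothetical, and the example scores are invented.

```python
from collections import defaultdict

# The five dimensions named in the article.
DIMENSIONS = (
    "noise_filtering",
    "priority_triage",
    "contradiction_detection",
    "fact_emotion_separation",
    "timeline_reconstruction",
)

# Assumption: 20 probes with a maximum total of 80 suggests (but does not
# confirm) a 0-4 penalty per probe. Adjust if the paper's rubric differs.
MAX_PENALTY_PER_PROBE = 4


def validate(probe_scores):
    """Check each (dimension, penalty) pair against the assumed rubric."""
    for dimension, penalty in probe_scores:
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension!r}")
        if not 0 <= penalty <= MAX_PENALTY_PER_PROBE:
            raise ValueError(f"penalty out of range: {penalty}")


def total_score(probe_scores):
    """Sum per-probe penalties; a lower total means better performance."""
    validate(probe_scores)
    return sum(penalty for _, penalty in probe_scores)


def per_dimension(probe_scores):
    """Break the total down by dimension to show where a model struggles."""
    validate(probe_scores)
    totals = defaultdict(int)
    for dimension, penalty in probe_scores:
        totals[dimension] += penalty
    return dict(totals)


# Hypothetical scores for three probes (not data from the paper).
example = [
    ("noise_filtering", 3),
    ("priority_triage", 0),
    ("noise_filtering", 2),
]
print(total_score(example))    # 5
print(per_dimension(example))  # {'noise_filtering': 5, 'priority_triage': 0}
```

A single total hides where a model fails; the per-dimension breakdown is what surfaces model-specific weak spots like the ones in the findings.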

Findings — The pathology report

Overall scores (lower is better)

| Model | Total Score (out of 80) |
|---|---|
| Qwen3-Max | 16 |
| DeepSeek 3.1 | 23 |
| GPT-4o | 27 |
| Gemini 2.5 | 32 |

All models showed impairment. None were “healthy.”
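The ranking follows directly from sorting the reported totals in ascending order. A short sketch, using only the four numbers from the table (the function name `leaderboard` is illustrative), also expresses each total as a share of the 80-point maximum:

```python
# Totals as reported in the table above (out of 80; lower is better).
REPORTED_TOTALS = {
    "Qwen3-Max": 16,
    "DeepSeek 3.1": 23,
    "GPT-4o": 27,
    "Gemini 2.5": 32,
}
MAX_TOTAL = 80


def leaderboard(totals, max_total=MAX_TOTAL):
    """Return (model, total, share of maximum penalty) tuples, best first."""
    ranked = sorted(totals.items(), key=lambda item: item[1])
    return [(model, score, score / max_total) for model, score in ranked]


for model, score, share in leaderboard(REPORTED_TOTALS):
    print(f"{model:<13} {score:>2}/{MAX_TOTAL}  {share:.1%} of maximum penalty")
```

Even the best performer, Qwen3-Max, accrues 20% of the maximum possible penalty, and Gemini 2.5's total is exactly twice Qwen3-Max's. How much clinical risk a single point represents depends on the paper's rubric, which this sketch does not model.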

Universal weak spot: Noise filtering

Every model struggled when irrelevant details piled up. Under extreme noise, most models collapsed entirely, hallucinating symptoms or missing core signals.

This is the core symptom of AI-MASLD: information steatosis—too much fat, too little signal.

The most dangerous failure: Priority triage

In a simulated scenario of deep vein thrombosis (DVT) progressing to pulmonary embolism, GPT-4o failed to prioritize the lethal risk, focusing instead on more obvious but less dangerous symptoms. That’s not a minor error. That’s a patient safety incident.
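One way to make a triage failure measurable is to treat it as a ranking problem: did the model put the most time-critical concern first? The sketch below assumes a hypothetical urgency map; `URGENCY`, `most_urgent`, and `is_triage_miss` are illustrative names, and the ranks are neither a clinical scale nor the paper's scoring method.

```python
# Hypothetical urgency ranks for illustration only; this is not a clinical scale
# and not the paper's rubric. Higher means more time-critical.
URGENCY = {
    "pulmonary embolism": 3,
    "deep vein thrombosis": 2,
    "leg swelling": 1,
}


def most_urgent(concerns, urgency=URGENCY):
    """Pick the highest-urgency concern present in the case (unknown terms rank 0)."""
    return max(concerns, key=lambda c: urgency.get(c, 0))


def is_triage_miss(concerns, model_priorities, urgency=URGENCY):
    """True if the model's first-ranked concern is not the most urgent one present."""
    if not model_priorities:
        return True
    return model_priorities[0] != most_urgent(concerns, urgency)


case = ["leg swelling", "deep vein thrombosis", "pulmonary embolism"]
print(is_triage_miss(case, ["leg swelling", "deep vein thrombosis"]))        # True
print(is_triage_miss(case, ["pulmonary embolism", "deep vein thrombosis"]))  # False
```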

Model-specific traits

| Dimension | Who did best | Who failed hardest |
|---|---|---|
| Noise filtering | None (all poor) | Gemini 2.5 |
| Priority triage | Qwen3-Max / DeepSeek 3.1 | GPT-4o (critical miss) |
| Contradiction detection | DeepSeek 3.1 | GPT-4o |
| Fact–emotion separation | Qwen3-Max | Gemini 2.5 |
| Timeline reconstruction | All models (mostly) | DeepSeek 3.1 (verbosity) |

The uncomfortable takeaway: general intelligence rankings do not predict clinical reliability.

Failure modes — The anatomy of AI-MASLD

The paper identifies three recurring pathological patterns:

1. Catastrophic failure

Complete misjudgment in high-risk scenarios. Rare—but lethal when it happens.

2. Information steatosis

Correct facts buried under redundancy, emotional echoing, and unnecessary explanation. Doctors must re-filter everything. Efficiency collapses.

3. Hallucinated symptoms

Under noise stress, models invented symptoms not present in the narrative. Not malicious—just metabolically overloaded.
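A crude first-pass screen for this failure mode is to compare the symptoms a model reports against the symptoms the patient actually mentioned. This is a minimal sketch, assuming a hand-built symptom vocabulary and plain keyword matching; `hallucinated_symptoms` is a hypothetical helper rather than the paper's method, and the narrative is invented.

```python
import re


def mentioned(terms, text):
    """Return the terms that appear in `text` as whole words or phrases (case-insensitive)."""
    return {
        term
        for term in terms
        if re.search(r"\b" + re.escape(term) + r"\b", text, flags=re.IGNORECASE)
    }


def hallucinated_symptoms(narrative, model_summary, vocabulary):
    """Symptoms the model reports that never appear in the patient's own words.

    Naive keyword matching: it ignores negation ("no fever"), synonyms and
    misspellings, so it can only flag candidates for a clinician to review.
    """
    return mentioned(vocabulary, model_summary) - mentioned(vocabulary, narrative)


narrative = (
    "I've had leg swelling since the long flight, my aunt says it's bad "
    "circulation, and honestly I'm scared because of work stress."
)
summary = "Patient reports leg swelling, fever and chest pain."
vocab = {"leg swelling", "fever", "chest pain", "cough"}
print(sorted(hallucinated_symptoms(narrative, summary, vocab)))  # ['chest pain', 'fever']
```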

Implications — What this means for healthcare AI

The exam myth is officially dead

Passing USMLE-style tests says almost nothing about bedside competence. Clinical reasoning is not retrieval. It’s prioritization under ambiguity.

Human doctors remain information gatekeepers

Until AI can metabolize raw narratives safely, its role must remain assistive, not autonomous—especially in triage, emergency, and primary care settings.

Model selection must change

Healthcare buyers should stop asking:

“Which model is smartest?”

And start asking:

“Which model degrades least under noise?”

That’s a very different leaderboard.
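Answering that question requires measuring degradation, not raw accuracy. The sketch below assumes a hypothetical setup the article does not describe: each probe is scored twice, once on a cleaned, clinician-style version of the case and once on the raw narrative, and models are ranked by their mean penalty increase. `ProbeResult`, `degradation_leaderboard`, and all scores are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ProbeResult:
    model: str
    clean_penalty: float  # penalty on a cleaned, clinician-style version of the case
    noisy_penalty: float  # penalty on the raw patient narrative (lower is better)


def degradation_leaderboard(results):
    """Rank models by mean penalty increase from clean to noisy input, smallest first."""
    deltas = defaultdict(list)
    for r in results:
        deltas[r.model].append(r.noisy_penalty - r.clean_penalty)
    mean_delta = {model: sum(d) / len(d) for model, d in deltas.items()}
    return sorted(mean_delta.items(), key=lambda item: item[1])


# Hypothetical models and scores, chosen to show that the two leaderboards can disagree.
results = [
    ProbeResult("model_a", clean_penalty=2, noisy_penalty=3),
    ProbeResult("model_a", clean_penalty=1, noisy_penalty=2),
    ProbeResult("model_b", clean_penalty=0, noisy_penalty=3),
    ProbeResult("model_b", clean_penalty=0, noisy_penalty=2),
]
print(degradation_leaderboard(results))  # [('model_a', 1.0), ('model_b', 2.5)]
```

In this toy data, `model_b` wins on clean input (zero penalty) but degrades more once noise is added, which is exactly the gap an exam-style leaderboard would hide.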

Conclusion — You can’t cure what you won’t diagnose

AI-MASLD isn’t a branding gimmick. It’s a structural diagnosis.

LLMs don’t fail in medicine because they lack knowledge. They fail because they cannot yet metabolize reality—the messy, emotional, contradictory way patients actually speak.

Until that changes, deploying LLMs directly to patients without strict human oversight isn’t innovation. It’s negligence.

Cognaptus: Automate the Present, Incubate the Future.