TL;DR

- Why this paper matters: It shows that how you search matters more than how big your model is for multi‑step, tool‑using analytics. With a notebook‑grounded dataset (NbQA) and value‑guided search, mid‑size open models rival GPT‑4o–based agents on a leading data‑analysis benchmark.

- What’s new: (1) NbQA, a large corpus of real Jupyter tasks with executable multi‑step solutions; (2) JUPITER, a planner that treats analysis as a tree search over “thought → code → output” steps, guided by a learned value model.

- Why you should care (operator’s view): This blueprint turns flaky “Code Interpreter”-style sessions into repeatable playbooks—fewer dead ends, more auditable steps, and better generalization without paying for the biggest model.

The core idea: analytics as a search tree

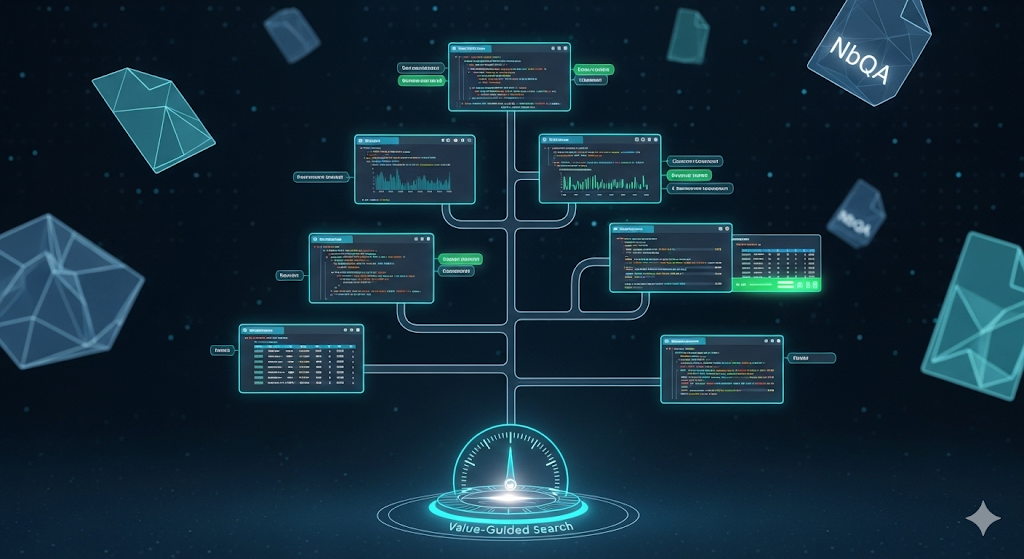

Most LLM data‑analysis failures come from branching mistakes: choosing the wrong intermediate step, compounding errors, and wasting tool calls. JUPITER reframes the whole exercise as search over notebook states. Each node is a concrete state—accumulated thoughts, code, and execution outputs. The system expands only a few promising branches and prunes the rest using a value model trained on successful and failed trajectories.

Why value beats exploration here

In open‑ended analysis, the valid solution space is sparse: most branches break or lead nowhere. Instead of exploring broadly, JUPITER learns which partial states are valuable and then doubles down on them. In practice, disabling the exploration term of the tree search and trusting the value head concentrates effort on the few branches that actually lead to answers.

Mental model: Think AlphaZero, but instead of board positions you have notebook cells; instead of material advantage you track “is this partial analysis state on a path that typically ends well?”
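To make "disabling the exploration term" concrete, here is a minimal sketch that assumes a standard UCT‑style selection score. The function name `selection_score`, the constant `c`, and the toy numbers are illustrative assumptions, not the paper's exact formula.

```python
import math


def selection_score(mean_value, parent_visits, child_visits, c=0.0):
    """UCT-style score (assumed form): value estimate plus an optional exploration bonus."""
    if c == 0.0:
        # Exploration disabled: rank children by predicted value alone.
        return mean_value
    bonus = c * math.sqrt(math.log(parent_visits + 1) / (child_visits + 1))
    return mean_value + bonus


# Hypothetical children of one notebook state: (predicted value, visit count).
children = {"load_csv": (0.82, 5), "guess_column": (0.35, 1)}
parent_visits = 6


def pick(c):
    return max(
        children,
        key=lambda name: selection_score(children[name][0], parent_visits, children[name][1], c),
    )


pick_value_only = pick(c=0.0)    # trusts the value head
pick_with_bonus = pick(c=1.5)    # the rarely visited branch gets a large bonus
```

With these toy numbers, the value‑only rule keeps working on `load_csv`, while the exploration bonus pulls the search toward the low‑value, rarely visited `guess_column` branch. That is the kind of wasted tool call the section describes.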

NbQA: the missing dataset for real tool use

Synthetic ReAct traces are easy to produce but drift from reality. NbQA mines 1.6M notebooks and 3.2M data files from GitHub, filters out low‑value cases (toy datasets, trivial row counts), and standardizes evaluable tasks with strict output formats. Crucially, many samples retain fully interactive data dependencies, so trajectories can be executed end‑to‑end. That enables two things product teams need:

- Supervised fine‑tuning on realistic “task ↔ multi‑step solution” pairs.

- Trajectory collection for value‑model training via search.

What’s actually inside NbQA (operator’s snapshot)

- Task coverage: summary stats, distribution/normality, correlation, outlier rules, preprocessing, feature engineering, classical ML, and viz.

- Evaluation discipline: numeric answers must be directly extractable from code outputs; answers are normalized (precision/ranges) for fairness and automation.

- Diversity controls: repository‑level caps; dedup by data‑file hashes; removal of GPU/NN notebooks to keep tasks interpretable and reproducible.
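The evaluation discipline above can be sketched as a small normalize‑then‑compare check. This is an assumed illustration of the idea, not NbQA's actual scorer: the helper names, the two‑decimal default, and the rounding mode are all hypothetical choices.

```python
from decimal import Decimal, InvalidOperation, ROUND_HALF_UP


def normalize_numeric(raw, places=2):
    """Strip whitespace and thousands separators, then round to a fixed precision."""
    cleaned = raw.strip().replace(",", "")
    quantum = Decimal(10) ** -places  # e.g. Decimal('0.01') for places=2
    return str(Decimal(cleaned).quantize(quantum, rounding=ROUND_HALF_UP))


def answers_match(predicted, reference, places=2):
    """Compare numerically when both sides parse; otherwise fall back to text."""
    try:
        return normalize_numeric(predicted, places) == normalize_numeric(reference, places)
    except InvalidOperation:
        # Non-numeric answers (labels, column names): case-insensitive match.
        return predicted.strip().lower() == reference.strip().lower()


example = normalize_numeric(" 1,234.567 ")
```

Fixing the precision up front is what lets an automated grader accept `3.14159` against a reference of `3.14` without a human judging "close enough" case by case.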

How JUPITER plans (without the hand‑wavy agent stack)

Loop:

- Pick a notebook state (root = the question + empty context).

- Sample a few thought → code candidates.

- Execute each snippet in a real kernel; record the output.

- Score the resulting child states using a value head; back‑propagate scores; repeat.

- Accumulate candidate answers; choose by vote or highest value.
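The loop above can be sketched in a few dozen lines. This is a deliberately simplified, greedy best‑first variant: it skips back‑propagation, uses Python's `exec` as a stand‑in for a real kernel, and replaces the LLM and the learned value head with the hypothetical stubs `toy_propose` and `toy_value`. Every name here is an assumption for illustration.

```python
import contextlib
import io
from dataclasses import dataclass


@dataclass(eq=False)
class Node:
    cells: list        # accumulated (thought, code, output) triples
    namespace: dict    # variables defined by the executed cells
    value: float = 0.0


def run_cell(code, namespace):
    """Stand-in for a real kernel: exec the snippet and capture stdout."""
    ns = dict(namespace)  # shallow copy so sibling branches do not share bindings
    buf = io.StringIO()
    try:
        with contextlib.redirect_stdout(buf):
            exec(code, ns)
    except Exception as exc:
        return f"ERROR: {type(exc).__name__}: {exc}", ns
    return buf.getvalue().strip(), ns


def search(question, propose, value_fn, budget=6, min_value=0.1):
    frontier = [Node(cells=[], namespace={})]  # root: question + empty context
    answers = []
    for _ in range(budget):
        if not frontier:
            break
        node = max(frontier, key=lambda n: n.value)  # no exploration bonus
        frontier.remove(node)
        for thought, code, is_final in propose(question, node):
            output, ns = run_cell(code, node.namespace)
            child = Node(node.cells + [(thought, code, output)], ns)
            child.value = value_fn(question, child)
            if child.value < min_value:
                continue  # prune low-value states, e.g. failed executions
            if is_final:
                answers.append((output, child.value))
            else:
                frontier.append(child)
    return answers


def toy_propose(question, node):
    """Hypothetical stub for the LLM: returns (thought, code, is_final) candidates."""
    if not node.cells:
        return [("load the data", "data = [2, 4, 9]", False),
                ("load a missing variable", "data = missing_table", False)]
    return [("compute the mean", "print(sum(data) / len(data))", True),
            ("compute the max", "print(max(data))", True)]


def toy_value(question, node):
    """Hypothetical stub for the value head: errors score 0, on-topic steps score high."""
    thought, _code, output = node.cells[-1]
    if output.startswith("ERROR"):
        return 0.0
    return 0.9 if "mean" in thought else 0.5


answers = search("What is the mean of the data?", toy_propose, toy_value)
best = max(answers, key=lambda a: a[1])[0]
```

In this toy run the broken load is pruned immediately, both final candidates are collected, and the highest‑value one is returned. A production version would swap in a sandboxed kernel, a real policy model, and a trained value head.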

A compact comparison of inference strategies

| Strategy | What it does | Typical failure | When to use |
|---|---|---|---|
| Majority voting | Sample multiple independent runs; take the mode | Duplicates the same wrong path | Quick confidence boost on narrow tasks |
| ReAct | Interleave thoughts and actions; linear path | One early mistake cascades | Short tool chains, low branching |
| JUPITER (value‑guided) | Search over many partial paths; prefer states with high predicted value | Needs a value head trained from real trajectories | Multi‑step tool use; noisy, sparse solution space |
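The difference between the first and last rows shows up at answer‑selection time. The sketch below uses made‑up candidates and scores, purely to illustrate how the two rules can disagree. It is not data from the paper.

```python
from collections import Counter


def majority_vote(candidates):
    """Pick the most frequent answer, ignoring value scores."""
    counts = Counter(answer for answer, _value in candidates)
    return counts.most_common(1)[0][0]


def highest_value(candidates):
    """Pick the answer whose final state has the highest predicted value."""
    return max(candidates, key=lambda c: c[1])[0]


# Hypothetical (answer, predicted value) pairs: three runs repeat one shaky path.
candidates = [("12.5", 0.30), ("12.5", 0.35), ("12.5", 0.25), ("14.0", 0.95)]

by_vote = majority_vote(candidates)
by_value = highest_value(candidates)
```

Here the vote returns `12.5` because the same low‑value path was sampled three times, while the value‑based rule returns `14.0`. Which rule is right depends on how well calibrated the value head is, so it is worth logging both.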

Why this matters for businesses adopting AI analytics

- Reliability without overkill: Mid‑size open models + value‑guided search can meet or beat larger closed agents on multi‑step analysis. That’s cost‑control with auditability.

- Observability by design: Because every node is a runnable cell with captured outputs, you get a forensic trail for compliance and post‑mortems.

- Better out‑of‑distribution (OOD) behavior: A learned notion of “promising partial states” transfers across task formats (not just Q&A), yielding higher odds of at least one correct candidate even off‑distribution.

Limitations & open questions (read: where to push next)

- Kernel sandboxes & data governance: Real orgs have messy data perimeters. How do you log, hash, and permission each artifact as the tree grows? (We’d pair JUPITER with strict dataset manifests + row‑level provenance.)

- Long‑horizon budgets: Value‑guided search helps, but budgets still bind. Dynamic budgeting (spend more when value spikes) could raise ROI.

- Metric shaping: Current value heads optimize “eventual correctness.” Many business analyses value explainability or reusable assets (figures/tables). Multi‑objective value heads are due.

- Human‑in‑the‑loop breakpoints: Where should analysts intervene—on state selection, code edits, or tolerance rules? UI affordances matter.

An adoption playbook you can run this quarter

Scope: Convert your ad‑hoc “Ask the AI to crunch this CSV” flow into a search‑first loop.

- Curate tasks from past notebooks: extract 200–500 tasks with strict answer formats; keep data files alongside.

- Fine‑tune (SFT) a 7–14B model on your internal set using a ReAct‑style schema. Freeze the base; LoRA adapters on the attention layers are usually sufficient.

- Collect trajectories: Run bounded MCTS on 100+ tasks to log both successes and failures; train a small value head.

- Deploy inference: Disable exploration; set a modest step budget; prefer the highest‑value candidate over pure majority vote.

- Governance: Store every node’s code, outputs, hashes, and data manifest for audit. Fail closed on any PII/PHI boundary breach.
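For the governance step, one possible shape for a per‑node audit record is a content‑addressed entry that chains to its parent. The field names and the `audit_record` helper are assumptions for illustration. Adapt them to whatever manifest format you already keep.

```python
import hashlib
import json


def sha256_hex(data):
    """Hex SHA-256 digest of a bytes payload."""
    return hashlib.sha256(data).hexdigest()


def audit_record(parent_id, code, output, data_manifest):
    """Build a tamper-evident record for one search node (hypothetical schema)."""
    body = {
        "parent_id": parent_id,            # None for the root node
        "code": code,
        "code_sha256": sha256_hex(code.encode("utf-8")),
        "output_sha256": sha256_hex(output.encode("utf-8")),
        "data_manifest": data_manifest,    # {file name: content hash}
    }
    # Canonical JSON so the same content always yields the same node id.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return {"node_id": sha256_hex(canonical), **body}


manifest = {"sales.csv": sha256_hex(b"region,amount\nEU,10\n")}
root = audit_record(None, "rows = 1", "", manifest)
child = audit_record(root["node_id"], "print(rows)", "1", manifest)
```

Because each node id covers its parent's id, changing any earlier cell or data file changes every downstream id, which makes silent edits to a trajectory easy to detect in a post‑mortem.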

KPIs to watch

- Solved‑within‑budget rate (per task type)

- Average node expansions per solution (efficiency)

- Human edit distance (lines changed before acceptance)

- Reproducibility rate (seeded re‑runs)
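The first three KPIs can be computed from simple run logs. The `RunLog` fields and the line‑based edit measure below are assumptions about what you would record, not a prescribed schema.

```python
import difflib
from dataclasses import dataclass
from statistics import mean


@dataclass
class RunLog:
    task_type: str
    solved: bool
    expansions: int   # node expansions used by the search
    budget: int       # expansions allowed for this task


def solved_within_budget_rate(runs):
    """Share of runs per task type that were solved without exceeding the budget."""
    by_type = {}
    for run in runs:
        ok = run.solved and run.expansions <= run.budget
        by_type.setdefault(run.task_type, []).append(ok)
    return {task: sum(flags) / len(flags) for task, flags in by_type.items()}


def avg_expansions_per_solution(runs):
    """Mean node expansions over solved runs only."""
    solved = [run.expansions for run in runs if run.solved]
    return mean(solved) if solved else float("nan")


def lines_changed(generated, accepted):
    """Rough human edit distance: lines touched between generated and accepted code."""
    a, b = generated.splitlines(), accepted.splitlines()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    return sum(max(i2 - i1, j2 - j1)
               for tag, i1, i2, j1, j2 in matcher.get_opcodes() if tag != "equal")


runs = [
    RunLog("correlation", True, 8, 12),
    RunLog("correlation", False, 12, 12),
    RunLog("outliers", True, 4, 12),
]
rates = solved_within_budget_rate(runs)
efficiency = avg_expansions_per_solution(runs)
edits = lines_changed("a = 1\nb = 2\nc = 3", "a = 1\nb = 20\nc = 3")
```

Reproducibility rate follows the same pattern: re‑run each solved task with a fixed seed and count how often the normalized answer matches the original.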

What this means for Cognaptus clients

This blueprint fits directly into our Agentic Analytics offering: we already maintain notebook sandboxes, job logs, and data manifests. The immediate win is lower cost per solved task and higher trust in outputs without chasing the largest frontier model.

Cognaptus: Automate the Present, Incubate the Future.