TL;DR

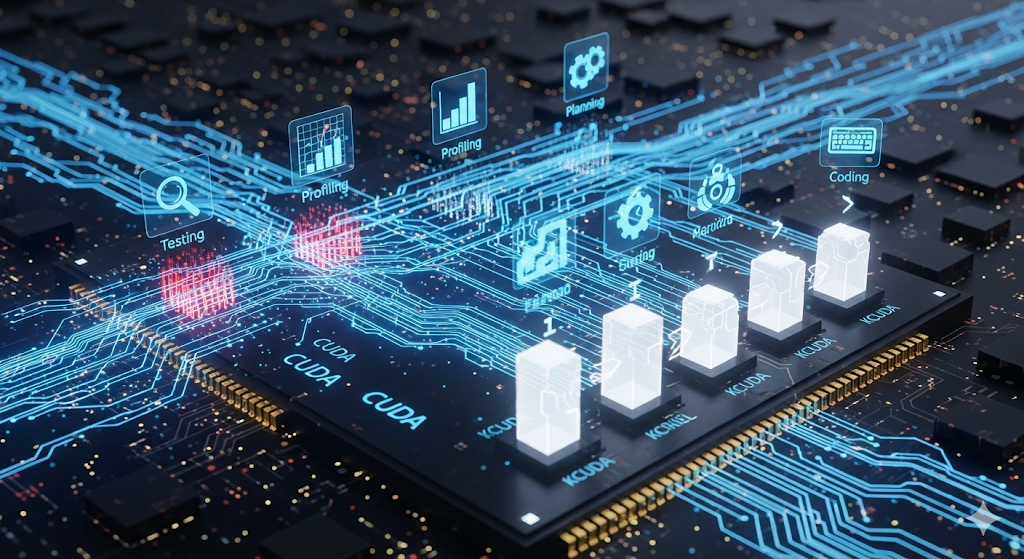

Astra is a multi‑agent LLM system that optimizes existing CUDA kernels instead of generating them from PyTorch. On three production‑relevant SGLang kernels, it delivered 1.32× average speedup (up to 1.46×) without fine‑tuning—just structured zero‑shot prompting. The win isn’t a single trick; it’s a division of labor: testing, profiling, planning, and coding each handled by a specialized agent that iterates toward faster, still‑correct kernels.

Why this matters for business readers

GPU efficiency is the new gross margin. If your serving stack pushes trillions of tokens per day, a 25–46% kernel‑level speedup (the range measured below) compounds into:

- Lower unit cost per 1K tokens (fewer GPU‑hours for the same traffic)

- Higher throughput on the same fleet (delay capex)

- Lower energy per request (sustainability + cost)

Most orgs already run kernels that are “good enough,” not peak. Astra shows a practical path to unlock latent performance without a ground‑up compiler effort.

What Astra changes

Unlike prior work (e.g., translating PyTorch modules → CUDA), Astra starts from your existing CUDA and applies an agentic optimization loop:

- Testing Agent builds a robust suite of shape/value cases and validates equivalence.

- Profiling Agent times candidates on representative shapes (post‑warmup) and reports deltas.

- Planning Agent decides what to try next, balancing correctness with the hottest bottlenecks.

- Coding Agent rewrites kernels accordingly.

This decomposed loop avoids a classic failure mode: a single agent over‑fits to bad tests or misleading profiles. In head‑to‑head trials at equal iteration budgets, Astra’s multi‑agent setup reached a 1.32× average speedup, versus 1.08× for a single‑agent baseline.
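To make the division of labor concrete, here is a minimal Python sketch of such a loop. It is an illustration, not Astra's implementation: `optimize`, `Candidate`, and the callables standing in for the four agents are hypothetical names, and in a real system each callable would wrap an LLM call plus a build-and-run step.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    source: str      # kernel source text (CUDA in the real setting)
    time_us: float   # time reported by the profiling step


def optimize(
    baseline_source: str,
    build_tests: Callable[[str], List[object]],        # Testing Agent: create cases
    is_correct: Callable[[str, List[object]], bool],   # Testing Agent: check equivalence
    profile: Callable[[str], float],                   # Profiling Agent: time in microseconds
    plan: Callable[[str, float, List[str]], str],      # Planning Agent: next suggestion
    rewrite: Callable[[str, str], str],                # Coding Agent: apply suggestion
    iterations: int = 5,
) -> Candidate:
    """Hypothetical optimization loop: keep a rewrite only if it is correct and faster."""
    tests = build_tests(baseline_source)
    best = Candidate(baseline_source, profile(baseline_source))
    history: List[str] = []
    for _ in range(iterations):
        suggestion = plan(best.source, best.time_us, history)
        candidate_source = rewrite(best.source, suggestion)
        history.append(suggestion)
        if not is_correct(candidate_source, tests):
            continue  # incorrect rewrites are never timed or kept
        candidate_time = profile(candidate_source)
        if candidate_time < best.time_us:
            best = Candidate(candidate_source, candidate_time)
    return best
```

The structural point is the ordering: a rewrite is timed only after it passes the test suite, and it replaces the current best only if it is measurably faster.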

What actually got faster? (Concrete tricks that generalize)

Astra’s gains came from classic HPC patterns that LLMs, when properly scaffolded, can re‑discover and apply consistently:

- Hoist loop‑invariants: Move expensive math (exp/divide/normalize) out of inner loops.

- Warp‑level reductions: Prefer `__shfl_down_sync` register shuffles, finalize in shared memory.

- Vectorized memory ops: Use `__half2` loads/stores to double effective bandwidth on FP16.

- Fast‑math intrinsics: Replace divides with reciprocal×multiply; use `__expf`, fused mul/add.

These are not model‑specific hacks—they’re portable ideas you can expect across attention, norms, and activation fusions.
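For readers who have not met the warp-reduction pattern, the sketch below simulates it on the CPU in plain Python. It is not GPU code and not Astra's output: `shfl_down`, `warp_reduce_sum`, and `block_reduce_sum` are hypothetical helper names, and a Python list stands in for the 32 lanes of a warp. What it shows is the data movement: each step halves the offset, every lane adds the value held by the lane `offset` positions above it, and after five steps lane 0 holds the warp's total.

```python
WARP_SIZE = 32  # lanes per warp on NVIDIA GPUs


def shfl_down(values, delta):
    """Simulate a shuffle-down: lane i reads the value held by lane i + delta.

    Lanes whose source would fall outside the warp keep their own value,
    mirroring how the CUDA intrinsic behaves for out-of-range sources.
    """
    n = len(values)
    return [values[i + delta] if i + delta < n else values[i] for i in range(n)]


def warp_reduce_sum(lane_values):
    """Tree reduction over one warp; the total ends up in lane 0."""
    vals = list(lane_values)
    assert len(vals) == WARP_SIZE
    offset = WARP_SIZE // 2
    while offset > 0:
        shifted = shfl_down(vals, offset)
        vals = [a + b for a, b in zip(vals, shifted)]
        offset //= 2
    return vals[0]  # only lane 0 is guaranteed to hold the full sum


def block_reduce_sum(block_values):
    """Reduce each warp in registers, then combine the per-warp partials.

    The `shared` list stands in for a small shared-memory staging buffer;
    on a GPU the first warp would typically reduce it with the same pattern.
    Assumes the block size is a multiple of WARP_SIZE.
    """
    shared = [
        warp_reduce_sum(block_values[w:w + WARP_SIZE])
        for w in range(0, len(block_values), WARP_SIZE)
    ]
    return sum(shared)
```

The appeal on real hardware is that the five shuffle steps stay in registers, so shared memory is touched once per warp rather than once per thread.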

Results at a glance

| Kernel | Computation (informal) | LoC (Base→Opt) | Time µs (Base→Opt) | Speedup |
|---|---|---|---|---|
| merge_attn_states_lse | Weighted merge of two value blocks with stabilized log‑sum‑exp | 124 → 232 | 31.4 → 24.9 | 1.26× |
| fused_add_rmsnorm | Residual add + RMSNorm + scale | 108 → 163 | 41.3 → 33.1 | 1.25× |
| silu_and_mul | SiLU(x) ⊙ g (FP16‑friendly) | 99 → 157 | 20.1 → 13.8 | 1.46× |
| Average | — | 110 → 184 | 30.9 → 23.9 | 1.32× |

Note: Shapes drawn from LLaMA‑class configs; warm‑ups run before timing. Speedups were shape‑robust, not over‑tuned to a single case.
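The arithmetic behind the table is worth a quick check, because "speedup" and "time saved" are different numbers. The short script below recomputes both from the table's timings. The variable names are ours, and the only inputs are the six microsecond figures above.

```python
# (baseline_us, optimized_us) per kernel, copied from the results table.
timings_us = {
    "merge_attn_states_lse": (31.4, 24.9),
    "fused_add_rmsnorm": (41.3, 33.1),
    "silu_and_mul": (20.1, 13.8),
}

# Speedup is baseline time divided by optimized time.
speedups = {name: base / opt for name, (base, opt) in timings_us.items()}

# The headline average is the arithmetic mean of the per-kernel speedups.
mean_speedup = sum(speedups.values()) / len(speedups)

# Fraction of kernel time removed, which is what maps to GPU-hours saved.
time_saved = {name: 1.0 - opt / base for name, (base, opt) in timings_us.items()}

for name in timings_us:
    print(f"{name}: {speedups[name]:.2f}x speedup, {time_saved[name]:.0%} less time")
print(f"mean speedup: {mean_speedup:.2f}x")
```

The distinction matters for budgeting: the fraction of kernel time removed is always smaller than the speedup percentage, and it only converts into GPU-hours for the share of end-to-end time these kernels occupy.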

Where single‑agent systems stumble

Single agents must juggle four skills (testing, profiling, planning, coding). That invites coupled errors—e.g., weak test generation → misleading profiles → harmful rewrites. In Astra’s study, the most complex kernel slowed down under a single‑agent setup due to unrepresentative test inputs. Specializing agents de‑couples these errors and raises the floor on result quality.

Integration realities (and what to do now)

Astra’s current workflow required manual pre‑ and post‑processing to lift kernels out of SGLang and patch optimized versions back. That’s normal in production frameworks with deep dependencies. A practical adoption plan:

Week 1–2: Pilot

- Pick 2–3 hot kernels (fusion ops, attention mixes, norms) with clear perf ceilings.

- Create stand‑alone harnesses (inputs, correctness checks, warm‑ups, Nsight templates).

- Run a multi‑agent loop for 5–10 iterations per kernel.
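A stand-alone harness does not need to be elaborate. Below is a minimal timing sketch in Python, assuming the kernel is exposed as a zero-argument callable. `time_callable` is a hypothetical helper, and the default counts simply mirror the 20 warm-ups and 100 repetitions used as an example in the checklist later in this piece.

```python
import statistics
import time


def time_callable(fn, warmups=20, reps=100):
    """Time `fn` after discarding warm-up runs; returns microsecond statistics.

    For a GPU kernel, `fn` must block until the device has finished
    (launches are asynchronous), otherwise only launch overhead is measured.
    """
    for _ in range(warmups):
        fn()  # warm-up runs: populate caches, trigger lazy initialization

    samples_us = []
    for _ in range(reps):
        start = time.perf_counter()
        fn()
        samples_us.append((time.perf_counter() - start) * 1e6)

    return {
        "median_us": statistics.median(samples_us),
        "min_us": min(samples_us),
        "reps": reps,
    }
```

Reporting the median alongside the minimum is a deliberate choice: the minimum approximates the best case, while the median is less sensitive to the occasional noisy run.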

Week 3–4: Productize

- Build a small kernel registry with A/B switches and shape telemetry.

- Add canary deploys and rollback on correctness drift.

- Track cost/throughput/latency and p95/p99 at the service boundary, not just µs in microbenchmarks.
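As a rough picture of what such a registry might look like, here is a small Python sketch. Everything in it is hypothetical (`KernelRegistry`, `KernelEntry`, the method names, and the tolerance default), and kernels are modeled as plain functions over lists of floats rather than real GPU launches.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Kernel = Callable[[List[float]], List[float]]


@dataclass
class KernelEntry:
    baseline: Kernel
    optimized: Kernel
    use_optimized: bool = False  # the A/B switch
    shape_counts: Dict[Tuple[int, ...], int] = field(default_factory=dict)


class KernelRegistry:
    """Hypothetical registry: A/B switch, shape telemetry, canary with rollback."""

    def __init__(self) -> None:
        self._entries: Dict[str, KernelEntry] = {}

    def register(self, name: str, baseline: Kernel, optimized: Kernel) -> None:
        self._entries[name] = KernelEntry(baseline, optimized)

    def set_optimized(self, name: str, enabled: bool) -> None:
        self._entries[name].use_optimized = enabled

    def call(self, name: str, xs: List[float]) -> List[float]:
        entry = self._entries[name]
        shape = (len(xs),)  # record which input shapes real traffic uses
        entry.shape_counts[shape] = entry.shape_counts.get(shape, 0) + 1
        impl = entry.optimized if entry.use_optimized else entry.baseline
        return impl(xs)

    def canary(self, name: str, xs: List[float], tol: float = 1e-3) -> bool:
        """Run both variants on the same input; roll back if the outputs drift."""
        entry = self._entries[name]
        want, got = entry.baseline(xs), entry.optimized(xs)
        ok = len(want) == len(got) and all(
            math.isclose(g, w, rel_tol=tol, abs_tol=tol) for g, w in zip(got, want)
        )
        if not ok:
            entry.use_optimized = False  # automatic rollback to the baseline
        return ok

    def shape_telemetry(self, name: str) -> Dict[Tuple[int, ...], int]:
        return dict(self._entries[name].shape_counts)
```

Keeping the baseline registered next to the optimized variant is what makes rollback cheap: disabling a kernel is a flag flip, not a redeploy.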

Quarterly: Scale

- Template the agent loop for your common kernel archetypes.

- Maintain a shape corpus derived from real traffic; refresh monthly.

- Automate patching for target frameworks (vLLM, PyTorch custom ops, Triton where applicable).

A quick buyer’s guide for CTOs

If you’re choosing among code‑gen agents or compiler stacks for inference cost control:

- Use multi‑agent LLMs when you have existing CUDA you can legally modify, want near‑term gains, and can invest in a correctness + profiling harness.

- Use compiler stacks (TVM/Triton/MLIR) when you need broader op coverage, cross‑hardware portability, or shape‑specialized autotuning at scale.

- Hybrid: Let compilers set a strong baseline, then point agents at the 5–10 kernels dominating your bill.

Checklist: make your kernels agent‑ready

- Minimal, dependency‑light kernel harness per op

- Deterministic test vectors + tolerances (FP16/TF32 aware)

- Warm‑up & repetition policy (e.g., 20 warm‑ups, 100 reps)

- Nsight Compute recipes for roofline, memory transactions, warp efficiency

- Shape corpus sampled from production traffic

- A/B gating + rollback hooks
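The "deterministic test vectors + tolerances" item is the one teams most often leave vague, so here is a minimal Python sketch of what it can mean in practice. It is an illustration under stated assumptions: the tolerance values are placeholders to tune per kernel, the helper names are hypothetical, and the reference is a plain elementwise SiLU(x) · g rather than any framework's actual kernel signature.

```python
import math
import random

# Illustrative (rtol, atol) pairs per precision; assumed values, tune per kernel.
TOLERANCES = {"fp32": (1e-5, 1e-6), "tf32": (1e-3, 1e-4), "fp16": (1e-2, 1e-3)}


def make_vectors(sizes, seed=0):
    """Deterministic inputs: the same seed always yields the same (x, g) pairs."""
    rng = random.Random(seed)
    return {
        n: ([rng.uniform(-4, 4) for _ in range(n)],
            [rng.uniform(-4, 4) for _ in range(n)])
        for n in sizes
    }


def silu_and_mul_reference(x, g):
    """Elementwise SiLU(x) * g, where SiLU(x) = x / (1 + exp(-x))."""
    return [xi / (1.0 + math.exp(-xi)) * gi for xi, gi in zip(x, g)]


def matches(actual, expected, dtype):
    """Elementwise check: |actual - expected| <= atol + rtol * |expected|."""
    rtol, atol = TOLERANCES[dtype]
    return len(actual) == len(expected) and all(
        abs(a - e) <= atol + rtol * abs(e) for a, e in zip(actual, expected)
    )


def validate(candidate, reference, sizes, dtype, seed=0):
    """Compare a candidate against the reference on every size in the corpus."""
    vectors = make_vectors(sizes, seed)
    return all(
        matches(candidate(x, g), reference(x, g), dtype) for x, g in vectors.values()
    )
```

A candidate that swaps the divide for a reciprocal-multiply should pass this check, while one that drops the sigmoid entirely should fail on the first vector. Fixing the seed means a failure seen in CI can be reproduced exactly on a developer machine.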

The bigger picture

We’ve argued in prior Cognaptus pieces that agentic specialization beats monolithic prompts for hard engineering problems. Astra validates that stance in GPU land: give models focused roles, guardrails, and real feedback, and they’ll re‑discover decades of HPC wisdom at industrial speed.

Cognaptus: Automate the Present, Incubate the Future