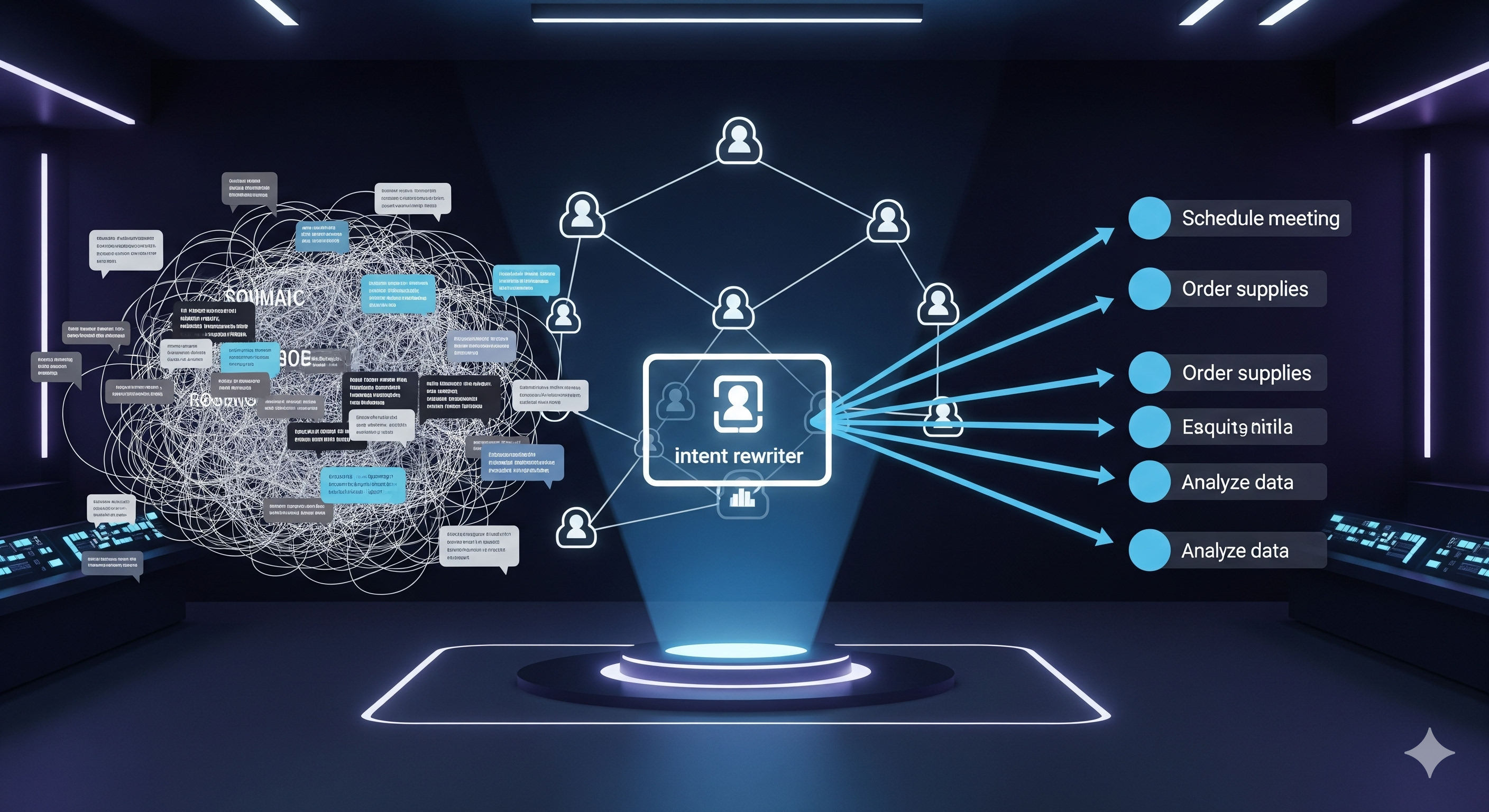

When assistants coordinate multiple tools or agents, the biggest unforced error is planning off the raw chat log. RECAP (REwriting Conversations for Agent Planning) argues—and empirically shows—that a slim “intent rewriter” sitting between the dialogue and the planner yields better, cleaner plans, especially in the messy realities of ambiguity, intent drift, and mixed goals. The headline: rewriting the conversation into a concise, up‑to‑date intent beats throwing the whole transcript at your planner.

What RECAP actually builds

- A benchmark of 750 vetted user–agent dialogues spanning cooking, programming, health, travel, and restaurants, explicitly crafted to stress real‑world pitfalls such as shifted intent, noisy input, underspecification, and multi‑intent requests (not just slot‑filling toys).

- A plan evaluator that judges which of two plans better satisfies the user’s most recent goals, with rubrics for latest intent, non‑fabrication, granularity, completeness, and ordering. Plans are compared structurally (node/edge counts, graph‑edit distance) and semantically (BERTScore); preference is measured by human annotators and by an LLM‑as‑judge.

- Three rewriter families: a Dummy (the raw dialogue, passed through unchanged), a Basic (a generic summary), and an Advanced prompt that explicitly extracts the latest, unambiguous intent(s). In head‑to‑head human preference tests, Advanced wins across most challenges (especially Shifted and Multi‑intent) while avoiding over‑specification when the user is vague.
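The structural side of the plan comparison can be sketched in a few lines. This is a simplification of ours, not RECAP's evaluator: it assumes every plan step has a unique label, so steps are matched by name, and it counts the nodes and edges that must be deleted or inserted to turn one plan into the other. That count is the cost of one valid edit path, so it is an upper bound on unit‑cost graph‑edit distance rather than exact GED. `Plan`, `structural_diff`, and the step names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A plan DAG: unique step labels plus (before, after) dependency edges."""
    nodes: frozenset
    edges: frozenset


def structural_diff(a: Plan, b: Plan) -> dict:
    """Compare plan `a` to plan `b`, matching steps by label."""
    node_edits = len(a.nodes ^ b.nodes)  # steps present in only one plan
    edge_edits = len(a.edges ^ b.edges)  # dependencies present in only one plan
    return {
        "node_delta": len(b.nodes) - len(a.nodes),
        "edge_delta": len(b.edges) - len(a.edges),
        # Cost of deleting/inserting the unmatched nodes and edges: a valid
        # edit path, hence an upper bound on unit-cost graph-edit distance.
        "edit_upper_bound": node_edits + edge_edits,
    }


# Illustrative plans: the user switched the hotel from Paris to Rome mid-chat.
from_raw_chat = Plan(
    nodes=frozenset({"search_flights", "book_hotel_paris", "book_hotel_rome", "summarize"}),
    edges=frozenset({
        ("search_flights", "book_hotel_paris"),
        ("search_flights", "book_hotel_rome"),
        ("book_hotel_paris", "summarize"),
        ("book_hotel_rome", "summarize"),
    }),
)
from_rewrite = Plan(
    nodes=frozenset({"search_flights", "book_hotel_rome", "summarize"}),
    edges=frozenset({("search_flights", "book_hotel_rome"), ("book_hotel_rome", "summarize")}),
)

print(structural_diff(from_raw_chat, from_rewrite))
# {'node_delta': -1, 'edge_delta': -2, 'edit_upper_bound': 3}
```

For exact GED on small graphs, NetworkX provides `graph_edit_distance`, but exact GED is expensive in general, which is why a label‑matched bound is a practical default for logging.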

The results you can hang a roadmap on

- Advanced rewriter vs. others: human win‑rates favor Advanced across challenges; ties dominate only when the original intent is already crystal clear. Translation: rewriting adds the most value when conversations are long or the user changes their mind.

- LLM‑as‑Judge works, if fine‑tuned. A fine‑tuned GPT‑4.1 evaluator beats zero‑shot baselines (Acc 65%, F1 0.65 on the test set), enabling scalable plan‑preference scoring without hiring an annotation army.

- DPO‑trained rewriters beat prompts. Training the rewriter with Direct Preference Optimization (DPO) on human plan preferences (DPO:human) further improves plan quality and outperforms Advanced, particularly in Shifted and Multi‑intent scenarios; DPO trained on LLM‑judge labels is competitive but less consistent.
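Reporting numbers like "Acc 65%, F1 0.65" for your own judge only requires human and judge verdicts on the same plan pairs. Below is a minimal sketch, assuming each verdict is a label such as "A" or "B" (which plan is preferred) and that F1 is macro‑averaged over labels. We have not confirmed which averaging RECAP uses or how it treats ties, and `accuracy_and_macro_f1` is a hypothetical helper.

```python
def accuracy_and_macro_f1(human: list, judge: list) -> tuple:
    """Agreement between judge verdicts and human verdicts on the same plan pairs.

    F1 is computed per label, then averaged without weighting (macro-F1)."""
    if not human or len(human) != len(judge):
        raise ValueError("need two non-empty verdict lists of equal length")
    pairs = list(zip(human, judge))
    accuracy = sum(h == j for h, j in pairs) / len(pairs)

    f1_scores = []
    for label in sorted(set(human) | set(judge)):
        tp = sum(h == label and j == label for h, j in pairs)
        fp = sum(h != label and j == label for h, j in pairs)
        fn = sum(h == label and j != label for h, j in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1_scores.append(f1)
    return accuracy, sum(f1_scores) / len(f1_scores)


# Made-up verdicts on four plan pairs, for illustration only.
acc, f1 = accuracy_and_macro_f1(["A", "A", "B", "B"], ["A", "B", "B", "B"])
print(f"accuracy={acc:.2f}, macro_f1={f1:.2f}")  # accuracy=0.75, macro_f1=0.73
```

Macro averaging keeps a judge that always answers "B" from looking good on a skewed test set, which is the main reason to report F1 next to accuracy.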

Why this matters for Cognaptus‑style agent stacks

Cognaptus builds agentic workflows for ops, analysis, and content generation. RECAP’s lesson is operational: separate “chat understanding” from “plan making.” The rewriter becomes a thin interface with a fixed contract:

Dialogue (messy) → Intent Rewrite (concise, latest, multi‑goal aware) → Planner DAG (tools & dependencies)

That single boundary improves four things our clients care about:

- Plan correctness: use the latest goals; drop obsolete turns.

- Plan efficiency: cut fabricated steps; parallelize what’s independent.

- Cost control: smaller context to the planner, fewer retries.

- Observability: rewrites are short artifacts you can log, inspect, and A/B test.
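"Parallelize what’s independent" becomes concrete once the plan is a DAG: steps with no pending dependencies can run together. A minimal sketch using level‑by‑level topological sorting (Kahn's algorithm); `parallel_waves` and the step names are hypothetical, and a production scheduler would also cap concurrency and handle failures.

```python
from collections import defaultdict


def parallel_waves(nodes, edges):
    """Group plan steps into waves; steps in the same wave have no pending
    dependencies and can run concurrently (Kahn's topological sort, by level)."""
    indegree = {n: 0 for n in nodes}
    children = defaultdict(list)
    for before, after in edges:
        if before not in indegree or after not in indegree:
            raise ValueError(f"edge {before!r} -> {after!r} uses an unknown step")
        children[before].append(after)
        indegree[after] += 1

    waves, scheduled = [], 0
    wave = sorted(n for n, d in indegree.items() if d == 0)
    while wave:
        waves.append(wave)
        scheduled += len(wave)
        ready = []
        for step in wave:
            for child in children[step]:
                indegree[child] -= 1
                if indegree[child] == 0:
                    ready.append(child)
        wave = sorted(ready)
    if scheduled != len(indegree):
        raise ValueError("plan contains a dependency cycle")
    return waves


# Two intertwined asks, split into independent tracks by the rewriter.
steps = ["find_recipe", "list_ingredients", "reproduce_bug", "patch_code"]
deps = [("find_recipe", "list_ingredients"), ("reproduce_bug", "patch_code")]
print(parallel_waves(steps, deps))
# [['find_recipe', 'reproduce_bug'], ['list_ingredients', 'patch_code']]
```

Run one at a time, these four steps take four rounds; in waves they take two. The gain only materializes if the rewriter has actually separated the intents, which is why the split happens before planning.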

A simple blueprint to ship this next sprint

1) Slot in a Rewriter micro‑service

- Input: full dialogue trajectory (user & assistant turns)

- Output: JSON with `{ intents: [...], assumptions: [...], scope: {in, out}, recency_cutoff: <turn-id> }`

- Rule: the rewrite must reflect the latest explicit or strongly implied user goals; log discarded intents.
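Because the planner only sees the rewrite, it pays to validate that JSON before planning. A minimal sketch, assuming quoted JSON keys, `scope` as an object with `in`/`out` string lists, and `recency_cutoff` as a 0‑based turn index; `IntentRewrite` and `parse_rewrite` are hypothetical names, not part of RECAP.

```python
import json
from dataclasses import dataclass


@dataclass
class IntentRewrite:
    intents: list
    assumptions: list
    scope_in: list
    scope_out: list
    recency_cutoff: int  # assumed: 0-based index of a dialogue turn


def _str_list(value, name):
    if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
        raise ValueError(f"{name} must be a list of strings")
    return value


def parse_rewrite(raw: str, num_turns: int) -> IntentRewrite:
    """Reject rewriter output that breaks the contract before it reaches the planner."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("rewrite must be a JSON object")
    scope = data.get("scope")
    if not isinstance(scope, dict):
        raise ValueError("scope must be an object with 'in' and 'out' lists")
    cutoff = data.get("recency_cutoff")
    if isinstance(cutoff, bool) or not isinstance(cutoff, int) or not 0 <= cutoff < num_turns:
        raise ValueError("recency_cutoff must be a turn index inside the dialogue")
    intents = _str_list(data.get("intents"), "intents")
    if not intents:
        raise ValueError("at least one intent is required")
    return IntentRewrite(
        intents=intents,
        assumptions=_str_list(data.get("assumptions"), "assumptions"),
        scope_in=_str_list(scope.get("in"), "scope.in"),
        scope_out=_str_list(scope.get("out"), "scope.out"),
        recency_cutoff=cutoff,
    )


example = json.dumps({
    "intents": ["Book a hotel in Rome for May 3-5"],
    "assumptions": ["Budget from turn 2 still applies"],
    "scope": {"in": ["hotel booking"], "out": ["flights"]},
    "recency_cutoff": 6,
})
rewrite = parse_rewrite(example, num_turns=8)
print(rewrite.intents, rewrite.recency_cutoff)  # ['Book a hotel in Rome for May 3-5'] 6
```

Failing loudly at this boundary turns a malformed rewrite into a cheap retry instead of a wrong plan.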

2) Train for your domain

- Start with an Advanced‑style prompt as a zero‑shot baseline.

- Collect pairwise comparisons of the plans your planner produces from competing rewrites; fine‑tune the rewriter with DPO on human preferences, prioritizing the most ambiguous cases. This mirrors DPO:human, which outperformed the prompt‑based rewriters in RECAP.
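One detail is easy to miss: annotators judge *plans*, but DPO trains the *rewriter*, so each plan verdict has to be mapped back to the pair of rewrites that produced those plans. Below is a sketch of that mapping plus the standard per‑pair DPO objective for reference. `PlanComparison`, `to_dpo_pairs`, and `dpo_loss` are hypothetical names; the `prompt`/`chosen`/`rejected` keys follow the layout that preference‑tuning libraries such as Hugging Face TRL accept, and `beta=0.1` is only an illustrative default.

```python
import math
from dataclasses import dataclass


@dataclass
class PlanComparison:
    dialogue: str   # full dialogue trajectory (the rewriter's input)
    rewrite_a: str
    rewrite_b: str
    preferred: str  # "a", "b", or "tie": whose downstream plan the annotator preferred


def to_dpo_pairs(comparisons):
    """Turn plan-level preferences into rewrite-level (prompt, chosen, rejected) pairs."""
    pairs = []
    for c in comparisons:
        if c.preferred == "tie":
            continue  # a tie carries no preference signal
        if c.preferred == "a":
            chosen, rejected = c.rewrite_a, c.rewrite_b
        elif c.preferred == "b":
            chosen, rejected = c.rewrite_b, c.rewrite_a
        else:
            raise ValueError(f"unknown verdict {c.preferred!r}")
        pairs.append({"prompt": c.dialogue, "chosen": chosen, "rejected": rejected})
    return pairs


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one pair, from sequence log-probs under the policy and the
    frozen reference model: -log sigmoid(beta * (chosen margin - rejected margin))."""
    m = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(m)) == softplus(-m), written in a numerically stable form.
    return max(-m, 0.0) + math.log1p(math.exp(-abs(m)))


pairs = to_dpo_pairs([
    PlanComparison("dialogue transcript", "Book Rome hotel, May 3-5", "Book Paris hotel", "a"),
    PlanComparison("dialogue transcript", "Plan a trip", "Plan a trip somewhere", "tie"),
])
print(len(pairs), pairs[0]["chosen"])  # 1 Book Rome hotel, May 3-5
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931 (policy == reference)
```

When the policy matches the reference, the loss is log 2; it falls as the rewriter assigns relatively more probability to the rewrite whose plan humans preferred.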

3) Measure what matters

Track: (a) human/LLM plan preference; (b) node/edge deltas and graph‑edit distance (GED); (c) execution metrics (tool errors, cycle time, token cost). RECAP’s metric mix is a strong starting point.
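For (a), pairwise verdicts are most informative when broken down by conversation challenge, since RECAP reports its clearest wins in Shifted and Multi‑intent cases and mostly ties when intent is already clear. A minimal tally, assuming each verdict is recorded as win/tie/loss for the candidate rewriter against a baseline; `win_rates` and the sample verdicts are illustrative, not RECAP data.

```python
from collections import Counter, defaultdict

OUTCOMES = ("win", "tie", "loss")


def win_rates(verdicts):
    """Aggregate pairwise verdicts into per-challenge win/tie/loss rates.

    `verdicts` holds (challenge, outcome) pairs, each outcome seen from the
    candidate rewriter's side against a baseline."""
    counts = defaultdict(Counter)
    for challenge, outcome in verdicts:
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome {outcome!r}")
        counts[challenge][outcome] += 1
    rates = {}
    for challenge, tally in counts.items():
        total = sum(tally.values())
        rates[challenge] = {o: tally[o] / total for o in OUTCOMES}
    return rates


# Made-up verdicts, for illustration only.
verdicts = [
    ("Shifted", "win"), ("Shifted", "win"), ("Shifted", "tie"), ("Shifted", "loss"),
    ("Underspecified", "tie"), ("Underspecified", "win"),
]
for challenge, r in win_rates(verdicts).items():
    print(challenge, r)
# Shifted {'win': 0.5, 'tie': 0.25, 'loss': 0.25}
# Underspecified {'win': 0.5, 'tie': 0.5, 'loss': 0.0}
```

Keeping ties as their own bucket matters: a rewriter that only ties on clear requests but wins on drifting ones is exactly the profile worth shipping.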

4) Guardrails

- Underspecified requests: the rewriter must not invent details; prefer emitting clarification tasks. (RECAP shows that over‑specification hurts here.)

- Intent drift: the rewriter must record the recency cutoff (the turn from which goals are current) so stale asks don’t leak into the plan.

- Multi‑intent: force distinct tracks in the DAG; mark soft vs. hard dependencies.
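The drift and multi‑intent guardrails can be enforced mechanically once the rewriter tags each intent with the turn where it was last stated and the goal it belongs to. A sketch under those assumptions: the `turn` and `track` tags, `Intent`, and both helpers are hypothetical rather than RECAP's format, and soft versus hard dependencies are not modeled here.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("rewriter")


@dataclass(frozen=True)
class Intent:
    text: str
    turn: int   # assumed: last turn in which the user stated or confirmed this goal
    track: str  # assumed: which independent goal this intent belongs to


def apply_recency_cutoff(intents, cutoff):
    """Keep goals stated at or after the cutoff turn; log the rest as discarded."""
    kept, discarded = [], []
    for intent in intents:
        (kept if intent.turn >= cutoff else discarded).append(intent)
    for intent in discarded:
        log.info("discarded stale intent %r (turn %d < cutoff %d)", intent.text, intent.turn, cutoff)
    return kept, discarded


def group_into_tracks(intents):
    """One track per independent goal, so the planner builds separate sub-DAGs."""
    tracks = {}
    for intent in intents:
        tracks.setdefault(intent.track, []).append(intent.text)
    return tracks


history = [
    Intent("Book a hotel in Paris", turn=2, track="travel"),
    Intent("Book a hotel in Rome", turn=6, track="travel"),
    Intent("Find a vegetarian dinner spot", turn=7, track="restaurants"),
]
kept, discarded = apply_recency_cutoff(history, cutoff=5)
print(group_into_tracks(kept))
# {'travel': ['Book a hotel in Rome'], 'restaurants': ['Find a vegetarian dinner spot']}
```

The discarded list doubles as the audit log the blueprint asks for: when a plan looks wrong, you can check whether a still‑valid goal was cut by the cutoff.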

Quick table: When to rewrite (and how)

| Conversation symptom | Rewriter action | Planner effect |
|---|---|---|
| User reverses decision mid‑thread | Keep only post‑reversal goals; mark earlier intents as obsolete | Removes wasted nodes; avoids stale edges |
| Vague ask (“make it nice”) | Extract constraints + emit Clarify X/Y/Z sub‑tasks | Prevents hallucinated steps |
| Two asks intertwined | Split into intent A, intent B with parallelizable sub‑DAGs | Improves throughput |
| Long chit‑chat + asides | Strip noise; anchor to last explicit instruction | Cuts token cost, reduces fabrications |

A brief comparison with other benchmarks

Earlier datasets (e.g., the MultiWOZ family and IN3) taught us about slot‑filling and clarification, but they show limited sensitivity to rewrite quality. RECAP increases realism and conversation length, making planners visibly diverge when given different inputs, exactly the stress we see in production agents.

The takeaway

Rewriting is not a nicety; it’s infrastructure. If your agent stack plans from raw chat, you’re paying a tax in errors and tokens. Adopt a rewriter, evaluate plans with human/LLM preference, and—once you have data—fine‑tune the rewriter with DPO. That’s the shortest path to sturdier plans and happier users.

Cognaptus: Automate the Present, Incubate the Future.