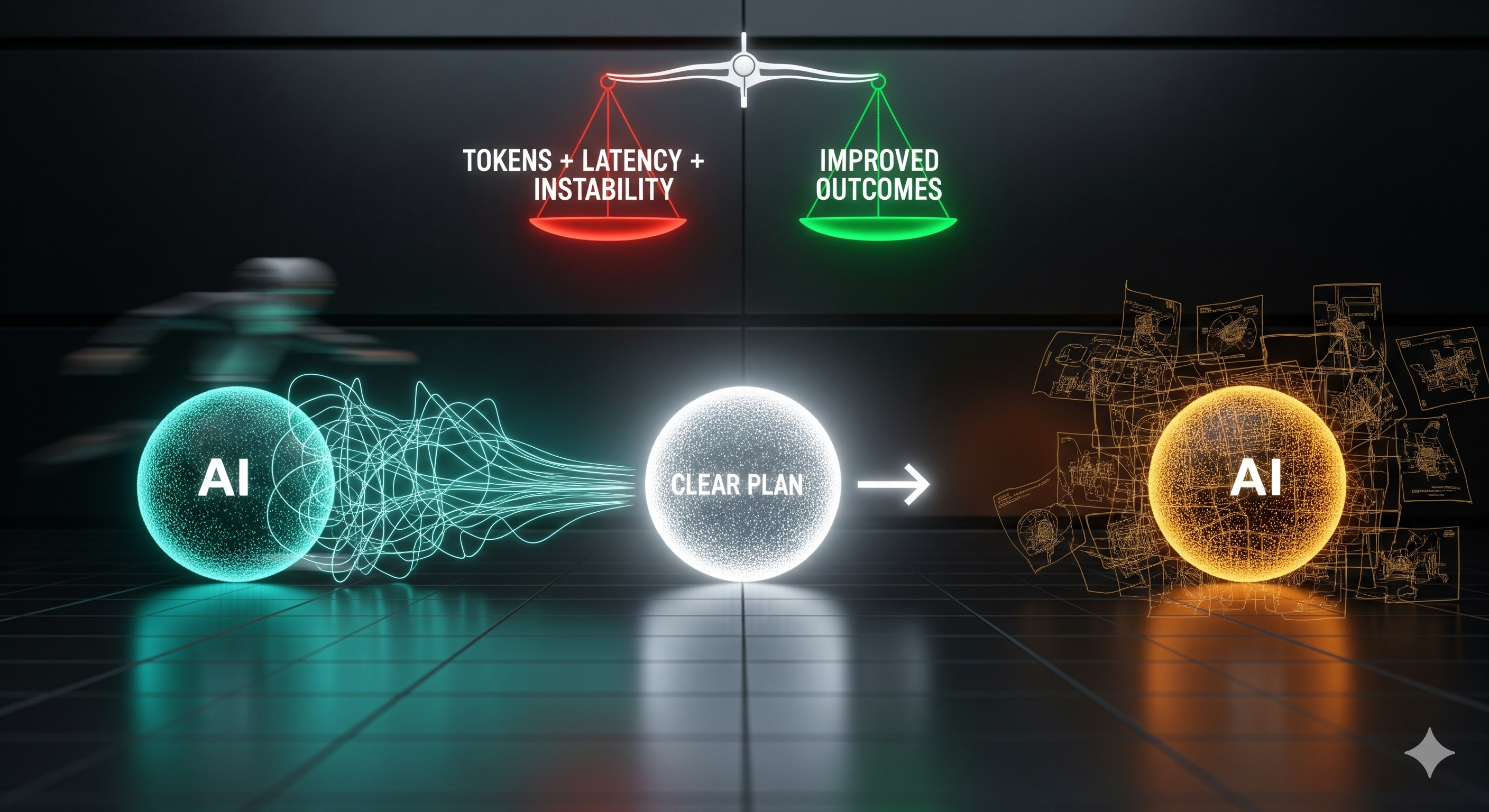

When do you really need a plan? In agentic AI, the answer isn’t “always” (ReAct‑style reasoning at every step) or “never” (greedy next‑action). It’s sometimes—and knowing when is the whole game. A new paper shows that agents that learn to allocate test‑time compute dynamically—planning only when the expected benefit outweighs the cost—beat both extremes on long‑horizon tasks.

Why this matters for operators

Most enterprise deployments of LLM agents are killed by one of two problems:

- Over‑thinking: ballooning tokens, latency, and brittle behavior from incessant replanning.

- Under‑thinking: cheap but shortsighted actions that stall on long tasks.

Dynamic planning addresses both by teaching the agent a meta‑skill: decide when to deliberate.

The core idea in one graphic (described)

Imagine a three‑way split inside a single LLM output:

- a decision to plan or not,

- a plan (optional), and

- the action.

If the model starts its output with a <plan> block, it’s choosing to allocate extra compute now; otherwise it goes straight to the action. No extra modules—just one policy with an output protocol.
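
Here is a minimal Python sketch of this protocol. It assumes that a plan block, when present, comes first and that everything after it is the action. `AgentStep` and `split_output` are illustrative names, not the paper's code.

```python
import re
from dataclasses import dataclass
from typing import Optional

# An optional leading <plan>...</plan> block, followed by the action text.
_PLAN_RE = re.compile(r"^\s*<plan>(?P<plan>.*?)</plan>\s*(?P<action>.*)$", re.DOTALL)


@dataclass
class AgentStep:
    planned: bool        # did the policy choose to spend extra compute on a plan?
    plan: Optional[str]  # plan text, or None when planning was skipped
    action: str          # the action the environment should execute


def split_output(text: str) -> AgentStep:
    """Split one LLM output into (plan decision, optional plan, action)."""
    match = _PLAN_RE.match(text)
    if match:
        return AgentStep(True, match.group("plan").strip(), match.group("action").strip())
    # No leading plan block: the whole output is the action.
    return AgentStep(False, None, text.strip())


step = split_output("<plan>collect wood, then craft a table</plan> move_left")
print(step.planned, step.action)  # True move_left
```

An output with no leading `<plan>` block, such as `"noop"`, parses as `planned=False` with the whole string as the action.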

A simple cost–benefit model

Dynamic planning says: plan only when Planning Advantage > Planning Cost.

- Planning Advantage ≈ expected improvement in future return if we generate a new plan (vs continuing the old one).

- Planning Cost has three parts: token cost (explicit), latency cost (context‑dependent), and instability cost (implicit, because frequent replanning can cause oscillations and backtracking).

Practical takeaway: even if you don't put a price on latency, you still pay for instability. It shows up as lower task reward and worse throughput.
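
The rule reduces to a single comparison. This sketch assumes you already have numeric estimates for the advantage and the three cost terms. That is a simplification: in the approach described here, the agent learns when to plan through training and does not compute these estimates explicitly. `PlanningCost` and `should_plan` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PlanningCost:
    tokens: float       # explicit: expected plan length times price per token
    latency: float      # context-dependent: 0.0 when wall-clock time is not priced
    instability: float  # implicit: expected loss from oscillation or backtracking after a replan

    def total(self) -> float:
        return self.tokens + self.latency + self.instability


def should_plan(planning_advantage: float, cost: PlanningCost) -> bool:
    """Plan only when the expected gain from a fresh plan exceeds its total cost.

    planning_advantage: estimated future return with a new plan minus the
    estimated return from continuing the current one.
    """
    return planning_advantage > cost.total()
```

For example, take an advantage of 0.3 against costs of 0.05 (tokens) + 0 (latency, unpriced) + 0.2 (instability). Total cost is 0.25, so the rule favors planning. Raise the instability estimate to 0.3 and total cost becomes 0.35, so the same advantage no longer justifies a new plan.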

What the experiments show

Two environments:

- POGS (Partially‑Observable Graph Search): isolates exploration and replanning under uncertainty.

- Crafter (Minecraft‑like survival): long‑horizon, multi‑objective.

Zero‑shot prompting already reveals a Goldilocks curve: with plan‑every‑K prompting, performance peaks at an intermediate K (e.g., K ≈ 4 in Crafter). Always‑plan underperforms despite higher token spend, and never‑plan also lags.

Two‑stage training makes this robust:

- SFT priming on trajectories that sometimes plan (diverse planning prompts; plan‑every‑K with K∈[2,12]).

- RL (PPO) to learn when to plan and how to follow/refresh plans.

Results:

- SFT with plans > SFT without plans (better imitation and less drift from the base model).

- SFT+RL (dynamic) learns to plan, execute, and replan sparingly → more sample‑efficient progression.

- After RL, human‑steered plans can lift agents beyond their solo capability (e.g., completing tough Crafter objectives).

Cheat‑sheet: choosing a planning policy

| Policy style | Token cost | Latency | Behavior | Where it shines | Where it fails |
|---|---|---|---|---|---|
| Never plan | Low | Low | Greedy, myopic | Short tasks, low uncertainty | Stalls on long horizons; brittle to surprises |
| Always plan | High | High | Over‑deliberation; oscillations | Toy demos, tiny action spaces | Throughput hit; unstable on real tasks |
| Plan‑every‑K | Medium | Medium | Predictable cadence | Bounded budgets; batch ops | Wrong K across contexts; still wasteful |
| Dynamic (learned) | Adaptive | Adaptive | Plans only when advantage > cost | Long‑horizon, partial observability, cost‑sensitive ops | Needs SFT+RL; requires reward signals |
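
The three fixed rows of the table can be written as schedules. Plan rate then serves as a rough proxy for the token-cost column: plan tokens ≈ plan rate × steps × average plan length. The dynamic row is not a schedule, since its decision depends on state and is learned, so this sketch leaves it out. All names are hypothetical.

```python
from typing import Callable

# A fixed schedule maps a step index to "plan at this step?".
Schedule = Callable[[int], bool]


def never_plan(step: int) -> bool:
    return False


def always_plan(step: int) -> bool:
    return True


def plan_every_k(k: int) -> Schedule:
    """Plan at step 0 and then every k steps."""
    if k < 1:
        raise ValueError("k must be >= 1")
    return lambda step: step % k == 0


def plan_rate(schedule: Schedule, horizon: int) -> float:
    """Fraction of steps on which the schedule emits a plan."""
    return sum(schedule(t) for t in range(horizon)) / horizon


for name, sched in [("never", never_plan), ("every-4", plan_every_k(4)), ("always", always_plan)]:
    print(name, plan_rate(sched, 100))
```

Over a 100-step horizon, `plan_every_k(4)` plans on 25% of steps, between never-plan (0%) and always-plan (100%).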

Implementation playbook (Cognaptus view)

1) Output protocol

- Adopt a strict interface: an optional `<plan>…</plan>` block followed by exactly one action.

- Enforce parsing and fallbacks server‑side.
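
Here is one way to enforce the protocol server-side. The sketch makes three assumptions: a toy action grammar (a lowercase verb with at most one argument), a safe `noop` fallback action, and a policy of keeping the previous plan whenever an output is rejected. `enforce_protocol`, `ACTION_RE`, and `FALLBACK_ACTION` are hypothetical. Substitute your environment's real action grammar.

```python
import re
from typing import Optional, Tuple

PLAN_RE = re.compile(r"^\s*<plan>(.*?)</plan>(.*)$", re.DOTALL)
# Hypothetical action grammar: a lowercase verb plus at most one argument, e.g. "move left".
ACTION_RE = re.compile(r"[a-z_]+( [a-z0-9_]+)?")
FALLBACK_ACTION = "noop"  # assumption: the environment exposes a safe no-op action


def enforce_protocol(raw: str, previous_plan: Optional[str]) -> Tuple[Optional[str], str, bool]:
    """Validate one model output; return (active_plan, action, accepted)."""
    match = PLAN_RE.match(raw)
    new_plan, rest = (match.group(1).strip(), match.group(2)) if match else (None, raw)
    # Exactly one non-empty line must remain after the plan, and it must fit the grammar.
    lines = [ln.strip() for ln in rest.splitlines() if ln.strip()]
    if len(lines) != 1 or not ACTION_RE.fullmatch(lines[0]):
        # Fallback: reject the output, keep following the previous plan, take the safe action.
        return previous_plan, FALLBACK_ACTION, False
    # A fresh non-empty plan replaces the old one; otherwise the old plan stays active.
    return (new_plan or previous_plan), lines[0], True
```

If you log the `accepted` flag, you get a cheap protocol-violation rate to watch next to your other agent metrics.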

2) Data priming

- Generate trajectories that sometimes plan (vary K and planning styles: subgoal, milestone, info‑gathering, efficiency, etc.).

- Keep actions identical across variants so you can isolate the value of explicit plans during SFT.
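
Here is a sketch of data priming under stated assumptions. `write_plan` is a hypothetical plan writer, for example a teacher model that summarizes the upcoming actions in a given style. K is drawn uniformly from [2, 12], and the first variant is a no-plan control. Every variant keeps the same action sequence, so any SFT difference can be attributed to the plans.

```python
import random
from typing import Callable, List, Tuple

PLAN_STYLES = ["subgoal", "milestone", "info-gathering", "efficiency"]


def prime_variants(actions: List[str],
                   write_plan: Callable[[str, List[str]], str],
                   n_variants: int = 4,
                   k_range: Tuple[int, int] = (2, 12),
                   seed: int = 0) -> List[List[str]]:
    """Build sometimes-plan SFT targets for one trajectory.

    Variant 0 is a no-plan control. Every other variant plans every K steps,
    with K drawn from k_range (inclusive) and a random plan style.
    All variants share the exact same action sequence.
    """
    rng = random.Random(seed)
    variants = [list(actions)]  # control: identical actions, no plans
    for _ in range(n_variants):
        k = rng.randint(*k_range)  # randint includes both endpoints
        style = rng.choice(PLAN_STYLES)
        targets = []
        for t, action in enumerate(actions):
            if t % k == 0:
                # The plan describes the next k actions in the chosen style.
                plan = write_plan(style, actions[t:t + k])
                targets.append(f"<plan>{plan}</plan> {action}")
            else:
                targets.append(action)
        variants.append(targets)
    return variants
```

Each variant is a list of per-step training targets in the `<plan>…</plan>` + action format. The planned variants always open with a plan, because step 0 is divisible by every K.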

3) RL objective

- Reward = task return − λ·(plan_token_count). Latency can be priced if wall‑clock matters; instability is naturally penalized via reward.

- PPO is sufficient if your wrapper is robust and the action grammar is strict.
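
Here is the reward above as a sketch, where `lam` is λ. The latency term (`mu`, `latency_s`) is an optional, hypothetical addition for cases where wall-clock time matters, and it defaults to zero. Instability gets no explicit term, because oscillation and backtracking already lower the task return.

```python
from typing import Sequence


def shaped_reward(task_return: float,
                  plan_token_counts: Sequence[int],
                  lam: float = 1e-4,
                  latency_s: float = 0.0,
                  mu: float = 0.0) -> float:
    """Episode reward: task return - lam * total plan tokens - mu * latency.

    The latency term is optional and off by default (mu = 0). Instability has no
    explicit term: oscillation and backtracking already show up as lower task_return.
    """
    return task_return - lam * sum(plan_token_counts) - mu * latency_s
```

For instance, a task return of 10 with two plans of 200 and 300 tokens at λ = 1e-3 gives 10 − 0.5 = 9.5.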

4) Guardrails for stability

- Plan drift is real: high‑level plans last longer; low‑level plans go stale fast. Encourage abstraction in the plan schema.

- Rate‑limit replanning in early training (e.g., minimum step distance between plans) and anneal.

- Add plan‑following checks: penalize “plan–action mismatch” to reduce thrash.
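
The last two guardrails can be sketched as follows. The minimum gap starts at a hypothetical 8 steps and decays linearly to zero over a hypothetical 10,000 training steps. The mismatch penalty is a crude unigram check that assumes the plan has already been parsed into a list of action names. Neither value comes from the paper.

```python
from typing import List


class ReplanGate:
    """Minimum gap between plans, annealed linearly to zero during training."""

    def __init__(self, min_gap_start: int = 8, anneal_steps: int = 10_000):
        self.min_gap_start = min_gap_start  # hypothetical starting gap (environment steps)
        self.anneal_steps = anneal_steps    # hypothetical annealing length (training steps)

    def min_gap(self, train_step: int) -> int:
        remaining = max(0.0, 1.0 - train_step / self.anneal_steps)
        return round(self.min_gap_start * remaining)

    def allow(self, train_step: int, steps_since_last_plan: int) -> bool:
        return steps_since_last_plan >= self.min_gap(train_step)


def mismatch_penalty(plan_actions: List[str], executed: List[str], weight: float = 0.1) -> float:
    """Penalty proportional to the share of executed actions the plan never mentioned."""
    if not executed:
        return 0.0
    planned = set(plan_actions)
    off_plan = sum(1 for a in executed if a not in planned)
    return weight * off_plan / len(executed)
```

Halfway through annealing, the gate requires a 4-step gap; once annealing ends, it allows replanning at any step. The mismatch penalty would be subtracted from the step reward.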

5) Ops & observability

Track these operational KPIs per environment:

- Plan rate (% of steps with a `<plan>` block)

- Tokens per achievement / per task minute

- Backtracking ratio (revisits / unique states)

- Plan coherence score (n‑gram or semantic overlap between plan and executed actions)

- Outcome density (achievements per 100 steps)
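
Here is a sketch that computes four of these KPIs from a logged episode. It assumes each step records a hashable state key, an optional plan, its generated token count, and whether it unlocked an achievement. The `Step` record and `kpis` function are hypothetical. The plan coherence score is omitted because it depends on how plans are parsed.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Step:
    state: str           # hashable state key (e.g. a graph node, or a coarse game-state key)
    plan: Optional[str]  # plan text if this step emitted one, else None
    tokens: int          # tokens generated at this step
    achievement: bool    # did this step unlock an achievement or objective?


def kpis(episode: List[Step], task_minutes: float) -> Dict[str, float]:
    """Compute per-episode KPIs; assumes a non-empty episode and task_minutes > 0."""
    n = len(episode)
    tokens = sum(s.tokens for s in episode)
    achievements = sum(s.achievement for s in episode)
    unique_states = len({s.state for s in episode})
    revisits = n - unique_states  # visits to a state already seen in this episode
    return {
        "plan_rate": sum(s.plan is not None for s in episode) / n,
        "tokens_per_achievement": tokens / achievements if achievements else float("inf"),
        "tokens_per_task_minute": tokens / task_minutes,
        "backtracking_ratio": revisits / unique_states,
        "outcome_density": 100 * achievements / n,  # achievements per 100 steps
    }
```

Consider a four-step episode that plans once, revisits one state, and unlocks two achievements with 80 tokens over 2 minutes. It yields a plan rate of 0.25, 40 tokens per achievement, 40 tokens per minute, a backtracking ratio of 1/3, and an outcome density of 50.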

Business impact: where dynamic planning pays

- Customer ops agents: escalate to plan when the ticket deviates from known paths; otherwise act from SOPs. Outcome: lower handle time and fewer loops.

- RPA + LLM hybrids: plan only at branch points (schema changes, permission errors), keeping happy‑path execution cheap.

- Research copilots: allocate deep reasoning to ambiguous steps (conflicting evidence), skim on routine fact‑gathering.

Rule of thumb: if your long‑horizon task has pockets of uncertainty, dynamic planning will usually beat any fixed cadence at the same or lower spend.

Limitations you should anticipate

- Scaling behavior is an open question: larger models may shift the Goldilocks point.

- Late‑game skills (survival, resource economy) can bottleneck even with good planning—don’t expect planning to fix missing competencies.

- Requires a wrapper that can score rewards or proxy outcomes; purely offline enterprise tasks may need weak‑signal rewards (e.g., checklist completion, SLA hits).

What to try this week

- Wrap your agent with the `<plan> … </plan>` protocol.

- Produce a small SFT set (≈1k trajectories) with varied sometimes‑plan behavior.

- Add a simple token‑cost penalty (λ ≈ 1e‑4 to 1e‑3 per token) and run a short PPO training pass.

- Ship dashboards for plan‑rate, backtracking, and tokens/achievement.

- A/B against plan‑every‑K and never‑plan baselines at equal token budgets.

If your agent thinks all the time, it overfits to thinking. If it never thinks, it overfits to luck. Teach it to know the difference.

Cognaptus: Automate the Present, Incubate the Future