The Big Idea

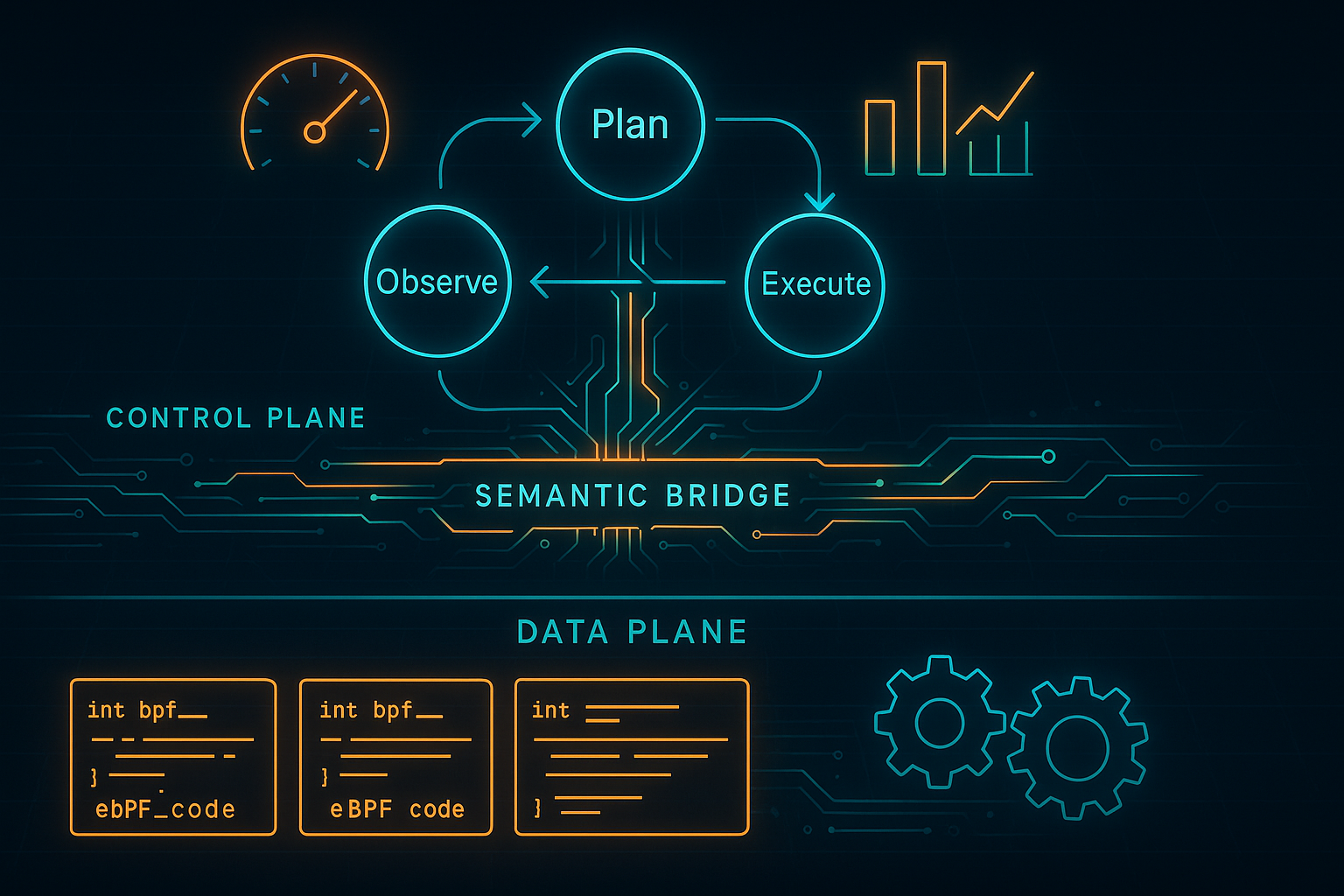

Operating systems have always struggled with a silent mismatch: the kernel’s scheduler doesn’t know what your application actually wants. SchedCP proposes a clean solution—turn scheduling into a semantic control plane. AI agents reason about what a workload needs; the system safely handles how to observe and act via eBPF-based schedulers. This division keeps LLMs out of the hot path while letting them generate and refine policies that actually fit the job.

In short: decouple reasoning from execution, then give agents a verified toolchain to ship real schedulers.

Why This Matters for Business Readers

- Direct performance wins translate to lower compute bills and faster jobs (CI/CD, analytics, media, ML).

- Platform teams get a repeatable way to encode scheduling know‑how without kernel gurus on call.

- Risk is contained: static/dynamic verification, canary rollout, and circuit breakers keep production safe.

Architecture at a Glance

SchedCP acts like an API layer for OS optimization, exposed to agents via the Model Context Protocol (MCP). On top sits sched‑agent, a multi‑agent loop of Observe → Plan → Execute → Learn.

What SchedCP Provides (System Side)

| Service | Purpose | What it actually gives you |
|---|---|---|
| Workload Analysis Engine | Cost‑aware observability | Summaries → profiling via perf/top → attachable eBPF probes; feedback metrics post‑deploy |
| Scheduler Policy Repository | Reuse & composition | Vector DB of eBPF schedulers with metadata, semantic search, and promotion hooks |
| Execution Verifier | Safety & correctness | Kernel eBPF verification plus scheduler‑specific static checks, then micro‑VM dynamic tests, signed tokens, and canaried rollouts |
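
The Execution Verifier's staged gating can be pictured as a short pipeline in which each stage must pass before the next one runs. The sketch below is illustrative only: the stage order follows the table, but `Candidate`, `run_pipeline`, the stub checks, and the 5 ms latency budget are hypothetical stand-ins, not SchedCP's actual API or thresholds.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Candidate:
    """Hypothetical description of a scheduler policy awaiting deployment."""
    name: str
    passes_kernel_verifier: bool
    has_starvation_risk: bool
    microvm_p99_ms: float
    log: List[str] = field(default_factory=list)


# Each stage returns True on pass. Real stages would call the kernel eBPF
# verifier, static analysers, and a micro-VM harness; these are stubs.
def kernel_verify(c: Candidate) -> bool:
    return c.passes_kernel_verifier


def static_checks(c: Candidate) -> bool:
    return not c.has_starvation_risk


def dynamic_test(c: Candidate, p99_budget_ms: float = 5.0) -> bool:
    # The 5 ms budget is an assumed value chosen for illustration.
    return c.microvm_p99_ms <= p99_budget_ms


STAGES: List[Tuple[str, Callable[[Candidate], bool]]] = [
    ("kernel-verifier", kernel_verify),
    ("static-checks", static_checks),
    ("microvm-test", dynamic_test),
]


def run_pipeline(c: Candidate) -> bool:
    """Run stages in order and stop at the first failure."""
    for stage_name, check in STAGES:
        ok = check(c)
        c.log.append(f"{stage_name}:{'pass' if ok else 'fail'}")
        if not ok:
            return False
    c.log.append("eligible-for-canary")
    return True
```

Ordering the cheap checks first means a policy that fails static analysis never consumes micro‑VM time, which is one plausible reason to stage verification this way.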

What sched‑agent Does (AI Side)

| Agent | Role | Typical output |
|---|---|---|
| Observation | Build a workload profile | Natural‑language summary + quantified targets |
| Planning | Match/compose policy | Parameterized choice, patches, or new primitives |
| Execution | Validate & deploy | Code artifacts → verifier → gated rollout |
| Learning | Close the loop | Update repository with wins/fail modes; iterate configs |
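
As a rough mental model, the four roles can be wired into a simple loop. This is a minimal sketch under stated assumptions: the function names, the policy names, and the measured values in `execute` are all invented placeholders, and a real agent would call an LLM and the SchedCP services rather than these stubs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Profile:
    summary: str        # natural-language style label for the workload
    target_metric: str  # quantity the loop tries to minimise


def observe(raw: Dict[str, float]) -> Profile:
    # Hypothetical heuristic: more runnable tasks than CPUs => throughput-bound.
    kind = "throughput-bound" if raw["runnable_tasks"] > raw["cpus"] else "latency-bound"
    return Profile(summary=kind, target_metric="makespan_s")


def plan(profile: Profile, tried: List[str]) -> str:
    # Pick the first untried policy for this profile (names are made up).
    options = {
        "throughput-bound": ["batch_friendly", "longest_job_first"],
        "latency-bound": ["low_latency"],
    }[profile.summary]
    for name in options:
        if name not in tried:
            return name
    return options[-1]


def execute(policy: str) -> float:
    # Stand-in for verify + deploy + measure; values are placeholders, not data.
    placeholder_results = {
        "batch_friendly": 95.0,
        "longest_job_first": 80.0,
        "low_latency": 110.0,
    }
    return placeholder_results[policy]


def learn(history: Dict[str, float], policy: str, result: float) -> None:
    # Record the outcome so later planning rounds can avoid repeats.
    history[policy] = result


def run_loop(raw: Dict[str, float], iterations: int = 3) -> str:
    history: Dict[str, float] = {}
    profile = observe(raw)
    for _ in range(iterations):
        policy = plan(profile, list(history))
        learn(history, policy, execute(policy))
    # Return the policy with the lowest recorded metric.
    return min(history, key=history.get)
```

Calling `run_loop({"runnable_tasks": 64, "cpus": 8})` walks through two candidate policies and returns the better one, which mirrors the iterate-then-keep-the-winner behaviour described in the table.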

Design principle that makes this work: LLMs generate policy in the control plane; the scheduler runs natively with zero LLM inference overhead in the data plane.

What “Good” Looks Like (Evidence from the Paper)

- Kernel build: starting from the default Linux EEVDF (Earliest Eligible Virtual Deadline First) scheduler as the baseline, AI‑tuned schedulers reached a total 1.79× speedup after 3 iterations.

- schbench (latency & throughput): the first policy choice missed the mark; iterative refinement then delivered 2.11× better P99 latency and 1.60× higher throughput.

- Batch workloads: agents synthesized a custom Longest‑Job‑First eBPF scheduler (not just a param tweak), cutting end‑to‑end time by ~20% on average.

- Operational cost: naive “agent writes a scheduler from scratch” took ~33 min and ~$6. With SchedCP tooling, generation dropped to ~2.5 min and ~$0.5, roughly 13× faster and 12× cheaper by these rounded figures.
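
To see why a Longest‑Job‑First ordering can shorten a batch's end‑to‑end time, consider a toy model of greedy list scheduling across a fixed number of workers. This sketch is not the eBPF scheduler from the paper: it is a user‑space simulation with made‑up job durations, and `makespan` and `longest_job_first` are names introduced here for illustration.

```python
import heapq
from typing import List


def makespan(jobs: List[float], workers: int) -> float:
    """Greedy list scheduling: each job goes to the worker that frees up first."""
    free_at = [0.0] * workers
    heapq.heapify(free_at)
    for duration in jobs:
        start = heapq.heappop(free_at)
        heapq.heappush(free_at, start + duration)
    return max(free_at)


def longest_job_first(jobs: List[float]) -> List[float]:
    """Order jobs so the longest ones are dispatched first."""
    return sorted(jobs, reverse=True)


# Made-up batch: six short jobs queued ahead of one long job.
jobs = [1.0] * 6 + [6.0]
arrival_order = makespan(jobs, workers=3)
ljf_order = makespan(longest_job_first(jobs), workers=3)
print(arrival_order, ljf_order)  # prints "8.0 6.0"
```

In arrival order the long job starts last and drags the finish time out; dispatching it first lets the short jobs fill the other workers in parallel. The size of the gain depends entirely on the job mix, so these toy numbers say nothing about the paper's measured results.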

Why Not Just RL?

Pretrained RL tuners often need per‑workload/host retraining and can’t synthesize new logic (like LJF). Here, semantic retrieval + code synthesis lets agents jump straight to domain‑appropriate strategies, with verification enforcing safety properties like fairness and liveness.

Practical Takeaways

- Treat scheduling as product surface area. Move from opaque knobs to a governed library of workload‑labeled policies.

- Make iteration cheap. Bake verification, canarying, and feedback into the loop so ops can say “yes” more often.

- Exploit retrieval before generation. Let agents mine a policy repo first; compose only when necessary.
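
The "retrieval before generation" takeaway can be sketched as a similarity lookup with a fallback. Everything here is an assumption made for illustration: the bag‑of‑words `embed` function stands in for a real embedding model, `REPO` holds two invented entries, and the 0.5 threshold is arbitrary.

```python
import math
from collections import Counter
from typing import Dict, Optional, Tuple


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real repository would use learned vectors.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical repository entries: policy name -> workload description.
REPO: Dict[str, str] = {
    "batch_ljf": "batch jobs long running throughput parallel build",
    "interactive_lowlat": "interactive latency sensitive short requests",
}


def retrieve_or_generate(workload: str, threshold: float = 0.5) -> Tuple[str, Optional[str]]:
    """Reuse the closest stored policy if it is similar enough, else generate."""
    query = embed(workload)
    best_name: Optional[str] = None
    best_score = 0.0
    for name, description in REPO.items():
        score = cosine(query, embed(description))
        if score > best_score:
            best_name, best_score = name, score
    if best_name is not None and best_score >= threshold:
        return ("reuse", best_name)
    return ("generate", None)
```

A query such as `"parallel build batch jobs throughput"` matches the stored batch policy and is reused, while an unrelated description falls through to generation. Tuning the threshold trades reuse rate against the risk of applying a poorly matched policy.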

Where This Could Go Next

The same control‑plane pattern applies to cache policies, DVFS, network queuing, sysctl portfolios—and especially to cross‑component co‑optimization (CPU ↔ memory ↔ I/O ↔ power). Expect a wave of application‑aware OS features that are learned once and reused many times.

A Concrete Example (CI/CD)

Suppose your fleet loses 20–40% of build minutes to scheduling inefficiency across spiky dependency graphs. An agent observes the available parallelism, selects or composes a throughput‑oriented scheduler, validates it in a micro‑VM, and canaries it during work hours behind a circuit breaker. The result: faster pipelines, happier engineers, and lower cloud bills, all without editing the kernel or teaching DevOps eBPF.
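
The canary‑plus‑circuit‑breaker step in this scenario might look like the sketch below. It assumes a single monitored metric (build time in seconds) and a rule that trips after three consecutive over‑budget samples; the class, the function, and both parameters are hypothetical choices, not something specified by SchedCP.

```python
from typing import Iterable


class CircuitBreaker:
    """Trips after `max_breaches` consecutive samples exceed the budget."""

    def __init__(self, budget_s: float, max_breaches: int = 3) -> None:
        self.budget_s = budget_s
        self.max_breaches = max_breaches
        self.consecutive = 0
        self.tripped = False

    def record(self, build_time_s: float) -> None:
        if self.tripped:
            return
        # Count consecutive breaches; any in-budget sample resets the count.
        if build_time_s > self.budget_s:
            self.consecutive += 1
        else:
            self.consecutive = 0
        if self.consecutive >= self.max_breaches:
            self.tripped = True


def canary(samples: Iterable[float], breaker: CircuitBreaker) -> str:
    """Feed canary measurements to the breaker; roll back as soon as it trips."""
    for build_time_s in samples:
        breaker.record(build_time_s)
        if breaker.tripped:
            return "rollback"
    return "promote"
```

Requiring consecutive breaches rather than a single bad sample keeps one noisy build from reverting an otherwise healthy policy, at the cost of reacting a little later to a genuine regression.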

Bottom line: SchedCP shows that the shortest path to an agentic OS is not “bigger LLMs,” it’s better interfaces for them—with safety built in and iteration priced to scale.

Cognaptus: Automate the Present, Incubate the Future