When multi‑turn chats stretch past a dozen turns, users lose the thread: which requests are satisfied, which are ignored, and which have drifted? OnGoal (UIST’25) proposes a pragmatic fix: treat goals as first‑class objects in the chat UI, then visualize how well each model response addresses them over time. It’s less “chat history” and more goal telemetry.

What OnGoal actually builds

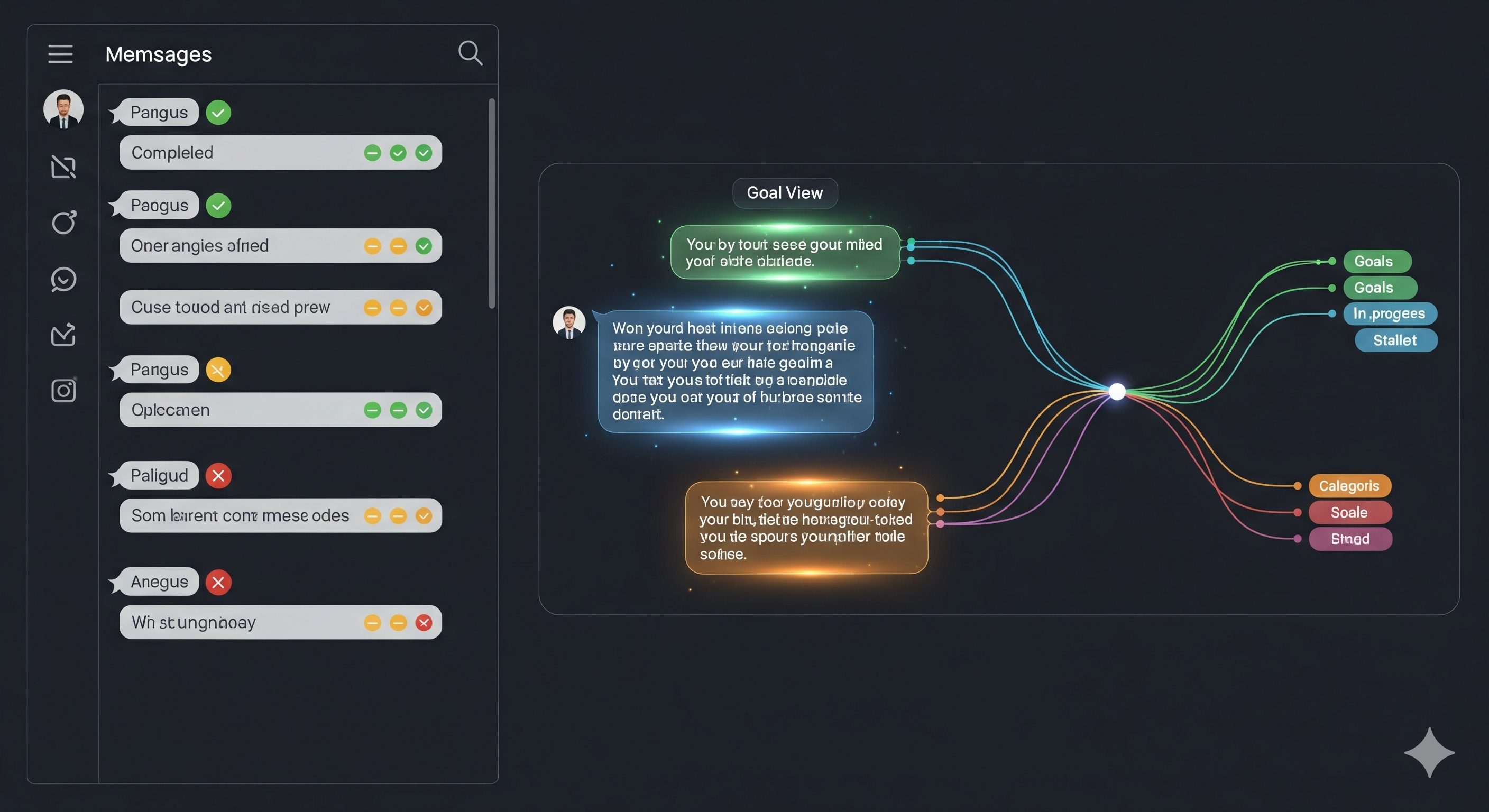

OnGoal augments a familiar linear chat with three concrete layers of structure:

- Inline goal glyphs under each message (user → inferred goals; assistant → evaluations: confirm / ignore / contradict), with one‑click rationales and evidence snippets pulled from the assistant’s text.

- A right‑side Progress Panel with three tabs: Goals (lock/complete/restore goals), Timeline (Sankey‑style infer→merge→evaluate history per turn), and Events (a verbose audit log).

- An Individual Goal view that filters the chat to messages involving one goal, overlaying text‑level highlights: key phrases, most‑similar sentences (repetition), and unique sentences (novelty).
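
To make the repetition/novelty highlights concrete, here is a minimal sketch that tags each sentence of a response by its best match against earlier responses. It is not the paper's method: the token-overlap (Jaccard) score, the thresholds, and every function name are assumptions for illustration, and a real system would more likely use embeddings.

```python
import re

def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., !, or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def word_set(sentence: str) -> set[str]:
    """Lowercased word tokens; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9']+", sentence.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two token sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def label_sentences(current: str, earlier: list[str],
                    repeat_at: float = 0.6, novel_below: float = 0.2):
    """Tag each sentence of `current` as repeated, novel, or neutral,
    based on its best match among sentences from earlier responses.
    Both thresholds are hypothetical and would need tuning."""
    prior = [word_set(s) for msg in earlier for s in split_sentences(msg)]
    labelled = []
    for sentence in split_sentences(current):
        words = word_set(sentence)
        best = max((jaccard(words, p) for p in prior), default=0.0)
        if best >= repeat_at:
            tag = "repeated"
        elif best < novel_below:
            tag = "novel"
        else:
            tag = "neutral"
        labelled.append((sentence, tag))
    return labelled
```

Calling `label_sentences(latest_reply, previous_replies)` yields `(sentence, tag)` pairs that a UI could map to highlight colors.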

Pipeline, not magic

OnGoal separates the model you’re chatting with from a three‑stage “goal pipeline” that runs in the background (implemented with GPT‑4o in the paper):

| Stage | What it does | Example output | Why it matters |
|---|---|---|---|
| Infer | Parse user turns into goal units (question / request / offer / suggestion). | “Use a formal tone”; “Explain the rationale.” | Makes intent explicit without burdening the user to pre‑structure prompts. |
| Merge | Combine, replace, or keep goals across turns (handles conflicts). | Merge “use humor” + “kid‑friendly” → one composite goal; replace outdated goal. | Prevents goal sprawl and durable contradictions. |
| Evaluate | For each assistant message, tag every active goal as confirm / ignore / contradict and extract supporting spans. | “Confirm: cites 3 sources.” (quotes the lines) | Turns vague impressions into inspectable evidence. |
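
The Merge stage is the easiest one to get wrong, so here is a rough sketch of applying combine / replace / keep decisions to the active goal list. It assumes the analysis model has already made those decisions; `MergeOp`, its fields, and `apply_merge` are hypothetical names, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class MergeOp:
    """One merge decision, assumed to come from the analysis model."""
    kind: str                        # "combine", "replace", or "keep"
    new_goal: str                    # goal inferred from the latest user turn
    old_goal: Optional[str] = None   # existing goal it interacts with, if any
    combined: Optional[str] = None   # composite text, required for "combine"

def apply_merge(active: list[str], ops: list[MergeOp]) -> list[str]:
    """Return the updated goal list without mutating `active`."""
    goals = list(active)
    for op in ops:
        if op.kind in ("keep", "replace"):
            result = op.new_goal
        elif op.kind == "combine":
            if op.combined is None:
                raise ValueError("combine needs the composite goal text")
            result = op.combined
        else:
            raise ValueError(f"unknown merge operation: {op.kind!r}")

        if op.kind != "keep" and op.old_goal in goals:
            # Swap in place so the goal keeps its position in the list.
            goals[goals.index(op.old_goal)] = result
        elif result not in goals:
            goals.append(result)
    return goals

updated = apply_merge(
    ["use humor"],
    [
        MergeOp("combine", "kid-friendly", "use humor", "use kid-friendly humor"),
        MergeOp("keep", "cite 3 sources"),
    ],
)
```

Keeping the operations as explicit records (rather than silently rewriting the list) is also what makes a per-turn timeline or audit log possible.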

What the study shows (beyond vibes)

A 20‑participant writing task compared OnGoal vs a baseline chat without goal tracking. Outcomes worth copying:

- Users shifted time from raw reading toward evaluation/review activity—then spent even more time reviewing during validation (post‑task), suggesting the visuals invite deeper, reflective quality checks rather than skimming.

- Participants self‑reported lower mental demand and effort, with equal or higher confidence in judging whether goals were met.

- Prompting behaviors diversified: users experimented more (higher variability in goal status across the conversation), using explanations and highlights to target precise fixes rather than repeating monolithic prompts.

- Caveat: participants rated the evaluation stage less accurate than infer/merge. Explanations helped, but the rubrics for confirm/ignore/contradict need domain‑tuning.

Why this matters for builders

Most “AI chat” UIs leave it to the user to remember tacit goals and audit responses manually. OnGoal’s design pattern moves that cognitive load into the interface. For enterprise adoption, the ROI should show up as:

- Fewer resets (less “let me start a new chat” churn) ⇒ better session completion rates.

- Faster convergence to acceptable drafts/specs ⇒ shorter, measurable time‑to‑task.

- Explainable progress for managers/compliance ⇒ inspectable goal trails and evidence spans.

What to copy into your product next sprint

1) Elevate goals into objects.

- Schema: {id, text, type, status, evidence[], history[]}.

- Types: {question, request, offer, suggestion} are enough to start.
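
As a starting point, the schema above could look like this in Python. The field names and the four types come from the list above; the `status` values, the shape of `history` entries, and the `record` helper are my assumptions.

```python
from dataclasses import dataclass, field, asdict
from enum import Enum

class GoalType(str, Enum):
    QUESTION = "question"
    REQUEST = "request"
    OFFER = "offer"
    SUGGESTION = "suggestion"

@dataclass
class Goal:
    id: str
    text: str
    type: GoalType
    status: str = "active"  # assumed values: active / locked / completed
    evidence: list[str] = field(default_factory=list)  # spans quoted from replies
    history: list[dict] = field(default_factory=list)  # append-only event records

    def record(self, turn: int, event: str, span: str = "") -> None:
        """Log an event for this goal and keep any supporting span."""
        self.history.append({"turn": turn, "event": event})
        if span:
            self.evidence.append(span)

goal = Goal(id="g1", text="Use a formal tone", type=GoalType.REQUEST)
goal.record(turn=2, event="confirm", span="We respectfully submit")
payload = asdict(goal)  # plain dict, ready to serialize for the UI
```

Mixing `str` into the enum keeps the type comparable to its plain string value, which simplifies passing goals between the pipeline and the front end.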

2) Add opinionated visual scaffolding.

- Inline glyphs w/ tooltips → fast scanning.

- Side panel w/ (a) goal list controls, (b) Sankey timeline, (c) event log.

- Goal‑filtered view with highlights: key phrases, near‑duplicate sentences, and outliers.

3) Separate the “goal pipeline” from the “chat model.”

- Keep the analysis LLM swappable (policy‑ or cost‑tier‑specific) and run it on user turns (infer/merge) and assistant turns (evaluate).
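
One way to keep the analysis model swappable is to hide it behind a small interface and route turns by role. This is a sketch under assumptions: `GoalAnalyzer`, `KeywordAnalyzer`, and `GoalPipeline` are hypothetical names, and the keyword matcher is a toy stand-in for an LLM-backed analyzer.

```python
from typing import Protocol

class GoalAnalyzer(Protocol):
    """Anything with these three methods can back the pipeline."""
    def infer(self, user_text: str) -> list[str]: ...
    def merge(self, active: list[str], inferred: list[str]) -> list[str]: ...
    def evaluate(self, goals: list[str], assistant_text: str) -> dict[str, str]: ...

class KeywordAnalyzer:
    """Toy analyzer: goals are ';'-separated phrases, matched literally."""
    def infer(self, user_text: str) -> list[str]:
        return [part.strip() for part in user_text.split(";") if part.strip()]

    def merge(self, active: list[str], inferred: list[str]) -> list[str]:
        return active + [g for g in inferred if g not in active]

    def evaluate(self, goals: list[str], assistant_text: str) -> dict[str, str]:
        text = assistant_text.lower()
        return {g: "confirm" if g.lower() in text else "ignore" for g in goals}

class GoalPipeline:
    """Runs beside the chat model; it never generates the reply itself."""
    def __init__(self, analyzer: GoalAnalyzer) -> None:
        self.analyzer = analyzer
        self.goals: list[str] = []

    def on_turn(self, role: str, text: str) -> dict[str, str]:
        if role == "user":       # user turns: infer, then merge
            inferred = self.analyzer.infer(text)
            self.goals = self.analyzer.merge(self.goals, inferred)
            return {}
        if role == "assistant":  # assistant turns: evaluate active goals
            return self.analyzer.evaluate(self.goals, text)
        raise ValueError(f"unexpected role: {role!r}")

pipeline = GoalPipeline(KeywordAnalyzer())
pipeline.on_turn("user", "formal tone; three sources")
verdicts = pipeline.on_turn("assistant", "Here is a draft in a formal tone.")
```

Swapping tiers then means passing a different analyzer to `GoalPipeline`; the chat model and the UI do not change.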

4) Ship guardrails early.

- Allow users to lock critical goals, complete resolved ones, and restore accidentally merged ones.

- Show contradictions with red spans and suggested follow‑ups (auto‑prompt stubs).
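
The lock / complete / restore controls can be sketched as a small store that the merge stage has to go through. The semantics here are my reading, not the paper's specification: a locked goal cannot be merged away, a completed goal is skipped during evaluation, and merged goals are archived so they can be restored. `GoalStore` and its method names are hypothetical.

```python
class GoalStore:
    def __init__(self) -> None:
        # Live goals: id -> {"text", "status", "locked"}
        self.goals: dict[str, dict] = {}
        # Goals absorbed by merges, kept so the user can bring them back.
        self.archive: dict[str, dict] = {}

    def add(self, gid: str, text: str) -> None:
        self.goals[gid] = {"text": text, "status": "active", "locked": False}

    def lock(self, gid: str) -> None:
        self.goals[gid]["locked"] = True

    def complete(self, gid: str) -> None:
        self.goals[gid]["status"] = "completed"

    def merge_away(self, gid: str) -> bool:
        """Called by the merge stage; refuses to touch locked goals."""
        if self.goals[gid]["locked"]:
            return False
        self.archive[gid] = self.goals.pop(gid)
        return True

    def restore(self, gid: str) -> None:
        """Undo an unwanted merge by moving the goal back to the live set."""
        self.goals[gid] = self.archive.pop(gid)

    def to_evaluate(self) -> list[str]:
        """Ids of goals the evaluate stage should still check."""
        return [gid for gid, g in self.goals.items() if g["status"] == "active"]
```

Routing every merge through `merge_away` means the user's lock always wins over the model's merge decision.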

5) Treat evaluation like a model—measure & tune it.

- Start with heuristic rubrics (regex + embedding similarity + LLM judge) and track disagreement rate between the evaluator and user clicks.

- Provide “Why did you grade this way?” explanations with linked evidence.
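
Tracking the disagreement rate can start very small. The sketch below assumes each user click produces a label (confirm / ignore / contradict) for a (message, goal) pair, and compares it with the evaluator's label for the same pair; the data layout and function names are assumptions.

```python
from collections import Counter

Key = tuple[str, str]  # (message_id, goal_id)

def disagreement_rate(evaluator: dict[Key, str], user: dict[Key, str]) -> float:
    """Share of user-reviewed pairs where the user's label differs
    from the evaluator's. Pairs the user never touched are ignored."""
    reviewed = [k for k in user if k in evaluator]
    if not reviewed:
        return 0.0
    return sum(evaluator[k] != user[k] for k in reviewed) / len(reviewed)

def confusions(evaluator: dict[Key, str], user: dict[Key, str]) -> Counter:
    """Count (evaluator_label, user_label) pairs that disagree,
    which shows where the rubric needs tuning."""
    return Counter(
        (evaluator[k], user[k])
        for k in user
        if k in evaluator and evaluator[k] != user[k]
    )

evaluator_labels = {
    ("m1", "g1"): "confirm",
    ("m1", "g2"): "ignore",
    ("m2", "g1"): "contradict",
    ("m2", "g2"): "confirm",
    ("m3", "g1"): "confirm",   # never reviewed by the user
}
user_labels = {
    ("m1", "g1"): "confirm",
    ("m1", "g2"): "confirm",   # user overrides "ignore"
    ("m2", "g1"): "contradict",
    ("m2", "g2"): "confirm",
}
rate = disagreement_rate(evaluator_labels, user_labels)
```

One caveat: users tend to click when something looks wrong, so this rate is computed over a biased sample and probably overstates the evaluator's true error rate.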

Where I’d push this further

- Local vs global goals: Add paragraph‑scoped goals and scoping sliders (“apply to: whole draft / section / selection”).

- Learning loop: Fine‑tune evaluators on user overrides; maintain per‑workspace rubrics (legal, support, marketing).

- Outcome metrics: Tie goal graphs to business KPIs (resolution time, revision count, compliance hits) so “goal‑truthfulness” maps to cost/time saved.

- Multi‑agent routing: If a goal is repeatedly contradicted, route to a specialized tool/agent and annotate the detour in the timeline.
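
For the routing idea, a rough sketch of the trigger: escalate once a goal's most recent evaluations are all "contradict". Treating "repeatedly" as a consecutive streak, the threshold of 2, and the `specialist-agent` name are all assumptions.

```python
from typing import Optional

def trailing_contradictions(verdicts: list[str]) -> int:
    """Length of the run of 'contradict' at the end of the verdict list."""
    count = 0
    for verdict in reversed(verdicts):
        if verdict != "contradict":
            break
        count += 1
    return count

def route(goal_id: str, verdicts: list[str], threshold: int = 2,
          specialist: str = "specialist-agent") -> Optional[dict]:
    """Return a timeline annotation if the goal should be rerouted,
    or None if the regular chat model should keep handling it."""
    streak = trailing_contradictions(verdicts)
    if streak < threshold:
        return None
    return {
        "goal": goal_id,
        "event": "rerouted",
        "to": specialist,
        "after_contradictions": streak,
    }
```

Returning the annotation as data, rather than performing the handoff inside `route`, keeps the detour visible in the timeline.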

Bottom line

OnGoal isn’t another prompt‑engineering trick; it’s a UI contract that treats goals as the primary unit of work. The result is a chat that behaves like a progress dashboard—lighter to use, easier to audit, and better at steering the model back on track.

—

Cognaptus: Automate the Present, Incubate the Future