TL;DR

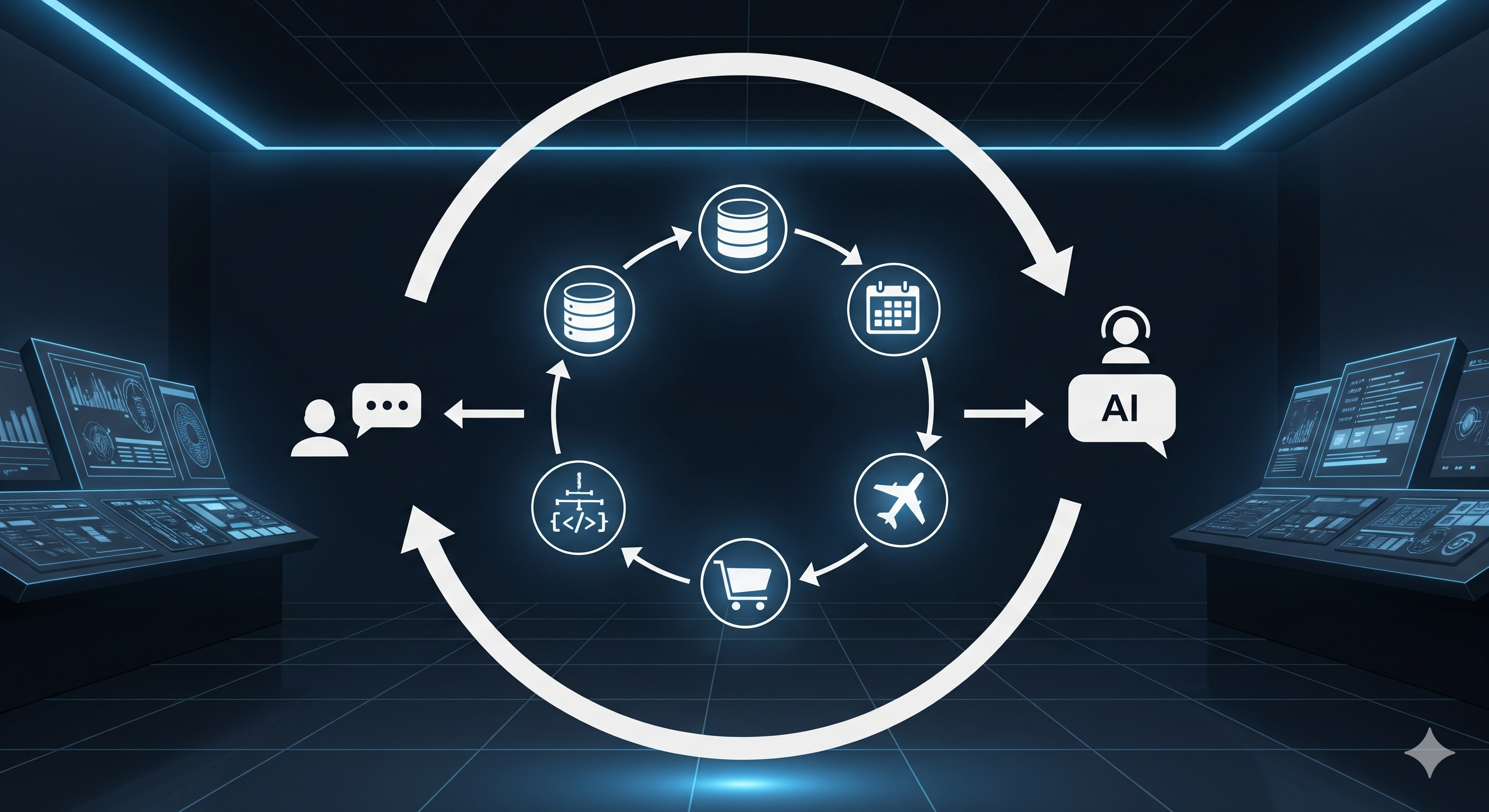

Most “tool‑using” LLMs still practice in sterile gyms. MUA‑RL moves training into the messy real world by adding an LLM‑simulated user inside the RL rollout, wiring the agent to call actual tools and rewarding it only when the end task is truly done. The result: smaller open models close in on or beat bigger names on multi‑turn benchmarks, while learning crisper, policy‑compliant dialogue habits.

Why this matters now

Enterprises don’t want chatty copilots; they want agents that finish jobs: modify an order under policy, update a ticket with verified fields, push a fix to a repo, or reconcile an invoice, often across several conversational turns and multiple tools. Supervised fine‑tuning on synthetic traces helps, but it often overfits to static scripts and misses the live back‑and‑forth where users change their minds, add constraints, or misunderstand policy.

MUA‑RL addresses exactly that: it trains agents in conversation, not just for conversation.

What MUA‑RL changes

Core idea: Combine a lightweight SFT cold‑start with Group Relative Policy Optimization (GRPO) on multi‑turn rollouts that include:

- a simulated user (LLM) that responds dynamically,

- real tool execution (via MCP servers or faithful simulators),

- a binary, outcome‑only reward (1 if the task is verifiably completed under policy; 0 otherwise).
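The rollout described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not MUA‑RL's actual training code: `run_rollout`, `Action`, `Message`, the scripted agent and user, and the `cancel_order` tool are all hypothetical stand‑ins for an LLM policy, an LLM user simulator, and an MCP tool server. It also assumes that every agent step (a reply or a tool call) counts toward the turn cap.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Message:
    role: str  # "user", "assistant", or "tool"
    content: str


@dataclass
class Action:
    # Hypothetical agent output: either a reply to the user or a single tool call.
    text: str = ""
    tool: Optional[str] = None
    args: dict = field(default_factory=dict)


def run_rollout(
    agent: Callable[[List[Message]], Action],
    user: Callable[[Optional[str]], Optional[str]],
    tools: Dict[str, Callable[..., object]],
    verify: Callable[[], bool],
    max_turns: int = 30,
) -> Tuple[List[Message], float]:
    """Roll out one multi-turn episode; return the transcript and a binary reward."""
    history = [Message("user", user(None))]  # the simulated user opens the dialogue
    for _ in range(max_turns):  # each agent step (reply or tool call) uses one turn
        action = agent(history)
        if action.tool is not None:
            # Execute the tool for real and feed its output back into the context.
            result = tools[action.tool](**action.args)
            history.append(Message("assistant", f"{action.tool}({action.args})"))
            history.append(Message("tool", str(result)))
            continue
        history.append(Message("assistant", action.text))
        reply = user(action.text)
        if reply is None:  # the simulated user ends the conversation
            break
        history.append(Message("user", reply))
    # Outcome-only reward: 1.0 if the task is verifiably complete, else 0.0.
    return history, (1.0 if verify() else 0.0)


# --- Toy usage: scripted stand-ins for the LLM agent, LLM user, and tool server ---
orders = {"A1": "pending"}


def cancel_order(order_id: str) -> dict:
    orders[order_id] = "cancelled"  # the side effect the verifier will check
    return {"order_id": order_id, "status": orders[order_id]}


user_replies = iter(["Please cancel order A1.", "Yes, confirm.", None])


def scripted_user(_agent_msg: Optional[str]) -> Optional[str]:
    return next(user_replies)


def scripted_agent(history: List[Message]) -> Action:
    last = history[-1]
    if last.role == "user" and "confirm" in last.content.lower():
        return Action(tool="cancel_order", args={"order_id": "A1"})
    if last.role == "tool":
        return Action(text="Order A1 is cancelled.")
    return Action(text="Should I cancel order A1? Please confirm.")


transcript, reward = run_rollout(
    scripted_agent, scripted_user, {"cancel_order": cancel_order},
    verify=lambda: orders["A1"] == "cancelled",
)
print(len(transcript), reward)  # 6 1.0
```

The scripted agent asks for confirmation before the state‑changing call, which is the kind of policy‑gated behavior the binary reward is meant to reinforce once a real policy replaces the script.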

Design choices that matter for practitioners:

- Outcome‑only reward → encourages exploration and avoids “format hacking.”

- Loss masking for tool outputs & user messages → the model learns how to act and converse, not to parrot tool text.

- General‑purpose tool pruning emerges: during RL, calls to generic tools such as Think, Calculate, and Transfer to human decline as the policy learns shorter, more reliable paths.

- Cap turns (e.g., 30) to keep cost bounded while preserving multi‑turn realism.
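Loss masking can be illustrated with a small, framework‑agnostic sketch. It assumes the rollout has already been tokenized into (role, token ids) segments; `build_loss_mask` and `masked_mean` are hypothetical helpers, and in a real trainer the mask would multiply the per‑token policy‑gradient term rather than a toy loss list.

```python
from typing import List, Sequence, Tuple


def build_loss_mask(segments: Sequence[Tuple[str, List[int]]]) -> Tuple[List[int], List[int]]:
    """Flatten (role, token_ids) segments and mark which tokens receive gradient.

    Only assistant tokens (the agent's own replies and tool-call text) get mask 1;
    system, user, and tool-output tokens stay in context but get mask 0.
    """
    tokens: List[int] = []
    mask: List[int] = []
    for role, ids in segments:
        tokens.extend(ids)
        mask.extend([1 if role == "assistant" else 0] * len(ids))
    return tokens, mask


def masked_mean(per_token_loss: Sequence[float], mask: Sequence[int]) -> float:
    """Average the loss over unmasked (assistant) tokens only."""
    total = sum(loss * m for loss, m in zip(per_token_loss, mask))
    count = sum(mask)
    return total / count if count else 0.0


segments = [
    ("system", [1, 2]),
    ("user", [3, 4, 5]),
    ("assistant", [6, 7]),  # e.g. a tool call emitted by the agent
    ("tool", [8, 9, 10]),   # tool output: visible in context, not trained on
    ("assistant", [11]),
]
tokens, mask = build_loss_mask(segments)
print(mask)  # [0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1]
print(masked_mean([float(i) for i in range(len(tokens))], mask))  # 7.0
```

Because tool and user tokens contribute nothing to the loss, the policy is never rewarded for reproducing text it did not generate.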

Evidence: how much better?

Across multi‑turn tool‑use benchmarks (TAU1 and TAU2, BFCL‑V3 Multi‑Turn, ACEBench Agent), the MUA‑RL models (8B, 14B, 32B) consistently improve over their non‑thinking and cold‑start baselines. Highlights:

- TAU2 Retail/Airline: MUA‑RL‑32B hits 67.3 / 45.4, competitive with or above larger open models in non‑thinking mode.

- TAU2 Telecom (dual‑control): MUA‑RL‑14B reaches 33.4%, with a Task Completion Rate (TCR) of 54.3%, signaling stronger partial‑completion behavior.

- BFCL‑V3 Multi‑Turn: MUA‑RL‑32B achieves 28.4% overall, materially ahead of its base model and its cold‑start checkpoint.

- ACEBench Agent: MUA‑RL‑32B scores 82.5, trailing only GPT‑4.1 in the comparison.

Training curves show accuracy rising without responses simply growing longer: the gains come from better interaction structure, not verbosity.

What’s novel vs prior “tool‑RL” work?

| Theme | Typical Prior Approach | MUA‑RL’s Twist | Why it matters |
|---|---|---|---|
| User in the loop | Scripted or absent | LLM user simulator inside rollouts | Captures miscommunication, clarifications, policy gating |
| Tool execution | Stubs or offline traces | Real MCP servers or faithful LLM tool simulators | Verifiable side effects; less mismatch at deployment |
| Reward shaping | Step‑wise/format bonuses | Binary success (task completed) | Simpler, harder to game; focuses on business outcomes |
| Optimization | PPO/actor‑critic variants | GRPO with ref‑policy KL | Sample‑efficient; stable without a value net |
| Over‑talking | Often encouraged | Loss‑masking & turn caps | Teaches concise, policy‑compliant exchanges |
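To see why GRPO needs no value network, here is a sketch of the group‑relative advantage and the per‑token KL estimator from the original GRPO formulation. Whether MUA‑RL uses exactly these normalization details (population vs. sample standard deviation, the epsilon) is an assumption; `group_advantages` and `kl_penalty` are hypothetical names.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence


def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Group-relative advantage: normalize each rollout's reward against the other
    rollouts sampled for the same task. No learned value network is needed."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations differ on this detail
    return [(r - mu) / (sigma + eps) for r in rewards]


def kl_penalty(logp: float, ref_logp: float) -> float:
    """Per-token estimator of KL(pi_theta || pi_ref) in the form used by GRPO:
    pi_ref/pi_theta - log(pi_ref/pi_theta) - 1, which is always >= 0."""
    log_ratio = ref_logp - logp
    return math.exp(log_ratio) - log_ratio - 1.0


# Four rollouts of one task with binary outcome rewards.
print([round(a, 3) for a in group_advantages([1, 0, 0, 1])])  # [1.0, -1.0, -1.0, 1.0]
# If every rollout succeeds (or fails), the group carries no learning signal.
print(group_advantages([1, 1, 1, 1]))  # [0.0, 0.0, 0.0, 0.0]
print(kl_penalty(-1.2, -1.2))  # 0.0
```

With binary rewards, the baseline is simply the group's success rate, so successful rollouts are pushed up and failed ones pushed down relative to their siblings; the KL term keeps the policy from drifting far from the reference model.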

For operators: a deployment playbook

If you run support, ops, or fintech workflows, here’s how to adapt the idea now:

- Expose your tools through an MCP‑like server (idempotent operations, typed inputs/outputs, deterministic test paths).

- Author strict policies as system prompts (authentication, confirmation before state changes, one tool call per step, handoff rules).

- Synthesize cold‑start traces for each domain (success + near‑miss), then dual‑verify them (LLM grader + human spot‑checks).

- Roll out RL with a user simulator that can: (a) omit key info, (b) change requests mid‑stream, (c) insist on policy‑violating actions.

- Reward only completed tasks, measured by verifiers over real tool effects (DB diffs, API receipts). Avoid format‑level rewards.

- Track operational metrics: task completion, average turns, human‑handoff rate, tool‑call mix, policy violations, and latency.

- Gate to production via shadow traffic and dual‑control sandboxes (esp. in regulated domains). Keep turn caps; rate‑limit expensive tools.
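The "reward only completed tasks" step can be made concrete with a state‑diff verifier. This sketch assumes the tool backend can snapshot its state as a key/value dict before and after an episode and that a policy checker reports a violation count; `db_diff`, `verify_outcome`, and the `order:*` keys are hypothetical.

```python
from typing import Any, Dict, Tuple

Diff = Dict[str, Tuple[Any, Any]]


def db_diff(before: Dict[str, Any], after: Dict[str, Any]) -> Diff:
    """Map each key whose value changed, appeared, or disappeared to (old, new).
    Missing keys show up as None in this sketch."""
    keys = set(before) | set(after)
    return {k: (before.get(k), after.get(k)) for k in keys if before.get(k) != after.get(k)}


def verify_outcome(before: Dict[str, Any], after: Dict[str, Any],
                   expected: Diff, policy_violations: int = 0) -> int:
    """Binary reward: 1 only if the real state change equals the expected change
    exactly (no missing and no extra side effects) and no violation was logged."""
    if policy_violations > 0:
        return 0
    return 1 if db_diff(before, after) == expected else 0


before = {"order:A1": "pending", "order:B2": "shipped"}
expected = {"order:A1": ("pending", "cancelled")}

print(verify_outcome(before, {"order:A1": "cancelled", "order:B2": "shipped"}, expected))    # 1
print(verify_outcome(before, {"order:A1": "cancelled", "order:B2": "cancelled"}, expected))  # 0: extra side effect
print(verify_outcome(before, before, expected))                                              # 0: nothing changed
```

Comparing the whole diff, rather than only the target field, is a deliberate choice here: an agent that completes the task but also mutates an unrelated record earns nothing.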

Where it helps first

- Commerce support: order changes/returns under policy; fewer escalations; resilient to messy user inputs.

- Travel & logistics: multi‑entity rebookings with constraints (inventory, fare rules, blackout dates).

- Telecom/fin‑ops back‑office: dual‑control tasks where both user and agent act (plan swaps, KYC state corrections).

- DevOps & ITSM: ticket triage + compliant changes with reversible tool actions.

Caveats & open questions

- Simulated users ≠ real users. Expect a sim‑to‑real gap; use frequent A/B tests on production‑like cohorts.

- Binary reward brittleness. It’s clean, but consider gated partial‑credit when tasks decompose into objective sub‑checks.

- Safety & audit. Keep immutable tool logs, signed result receipts, and policy diff trails for audits.

- Cost control. Multi‑turn RL is compute‑intensive; prioritize high‑leverage domains and reuse reference policies across tasks.
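One way to read "gated partial credit" is sketched below. The gate names, the `partial_cap` value, and `gated_partial_reward` itself are illustrative assumptions, not something MUA‑RL prescribes. Gates encode non‑negotiable policy conditions, so partial credit can never reward a policy breach.

```python
from typing import Dict


def gated_partial_reward(sub_checks: Dict[str, bool], gates: Dict[str, bool],
                         partial_cap: float = 0.5) -> float:
    """Reward with objective sub-checks behind hard gates.

    - Any failed gate (e.g. user not authenticated, policy violated) gives 0.0.
    - All sub-checks passing gives 1.0, the same value as the binary reward.
    - Otherwise credit is partial_cap times the fraction of sub-checks passed,
      so partial progress always stays below full completion.
    """
    if not sub_checks:
        raise ValueError("need at least one objective sub-check")
    if not all(gates.values()):
        return 0.0
    passed = sum(1 for ok in sub_checks.values() if ok)
    if passed == len(sub_checks):
        return 1.0
    return partial_cap * passed / len(sub_checks)


gates = {"authenticated": True, "no_policy_violation": True}
print(gated_partial_reward({"address_updated": True, "refund_issued": False}, gates))  # 0.25
print(gated_partial_reward({"address_updated": True, "refund_issued": True}, gates))   # 1.0
print(gated_partial_reward({"address_updated": True, "refund_issued": True},
                           {"authenticated": False}))                                  # 0.0
```

Keeping full completion at 1.0 preserves the outcome‑only signal while giving the policy some gradient on long tasks where complete success is rare early in training.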

The Cognaptus take

MUA‑RL’s big idea is cultural, not just technical: optimize for finished work. By training agents inside the same conversational/tool loop they’ll face in production, and paying them only for real completions, you get behaviors that generalize: authenticate first, ask just enough, confirm before mutating state, and minimize detours.

If you’re piloting agentic automation in 2025, outcome‑only RL on multi‑turn rollouts should be on your shortlist.

Cognaptus: Automate the Present, Incubate the Future