TL;DR

When language‑model agents compete as teams and meet the same opponents repeatedly, they cooperate more, even on the very first encounter. This “super‑additive” effect appears for Qwen3‑14B and Phi‑4 but not for Cogito‑14B, and it suggests that the structure of an agent ecosystem deserves as much design attention as the model behind it.

Why this matters (for builders and buyers)

Most enterprise agent stacks still optimize solo intelligence (one bot per task). But real workflows are competitive–cooperative: sales vs. sales, negotiators vs. suppliers, ops vs. delays. This paper shows that if we architect the social rules (teams + rematches) rather than just tune models, we can raise cooperative behavior and stability without extra fine‑tuning—or even bigger models.

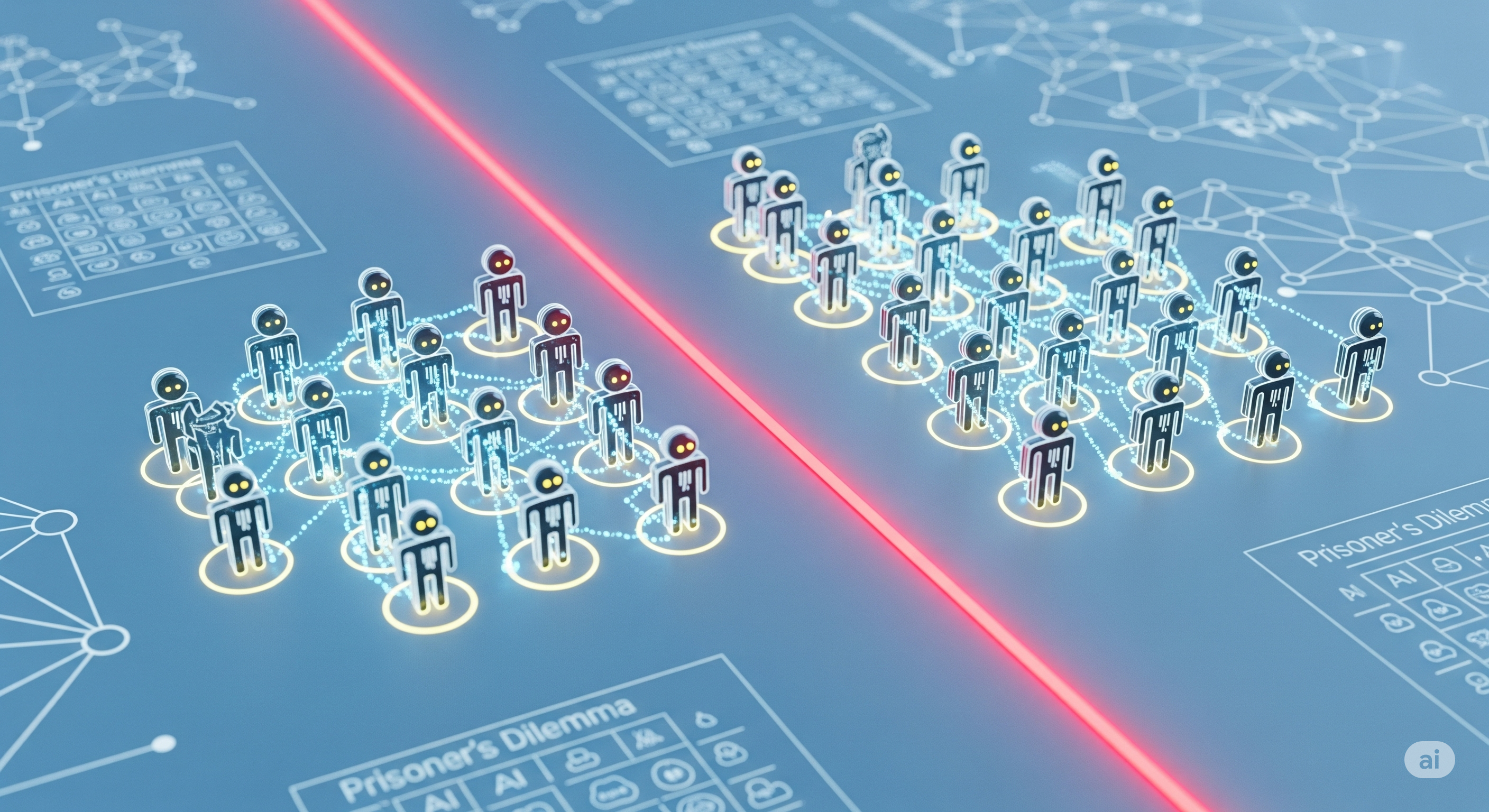

The experiment in one picture (text version)

- Game: Iterated Prisoner’s Dilemma (IPD) with an exit option (agents can leave a bad match).

- Structures tested:

  - RI – Repeated Interactions (everyone plays everyone multiple rounds).

  - GC – Group Competition (two teams compete; score = team total).

  - SA – Super‑Additive (RI + GC together: teams and repeated play).

- Models: Qwen3‑14B, Phi‑4 (reasoning), Cogito‑14B.

- Agent loop: Every K rounds, agents plan → self‑critique → update plan; each round they choose between neutral actions (no “cooperate/defect” priming).

- Key metrics: Overall cooperation rate ($\mu_c$) and one‑shot cooperation ($\mu_{osc}$), defined as the first move against a new, unknown opponent.
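To make the two metrics concrete, here is a minimal sketch of how they could be computed from a move log. The `Move` record, the function names, and the rule that "new opponent" means the first logged move for each (agent, opponent) pair are illustrative assumptions, not the paper's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Move:
    # Hypothetical log record; field names are assumptions for illustration.
    agent: str
    opponent: str
    cooperated: bool  # True if the chosen neutral action maps to cooperation


def cooperation_rate(moves):
    """mu_c: share of all logged moves that were cooperative."""
    moves = list(moves)
    return sum(m.cooperated for m in moves) / len(moves) if moves else 0.0


def one_shot_cooperation(moves):
    """mu_osc: share of cooperative moves among first moves against a new opponent.

    Assumes the log is in chronological order, so the first record seen for
    each (agent, opponent) pair is the move made without any shared history.
    """
    seen = set()
    firsts = []
    for m in moves:
        pair = (m.agent, m.opponent)
        if pair not in seen:
            seen.add(pair)
            firsts.append(m.cooperated)
    return sum(firsts) / len(firsts) if firsts else 0.0


# Toy log, invented for the example.
log = [
    Move("a1", "b1", True),
    Move("a1", "b1", False),
    Move("a1", "b2", False),
    Move("b1", "a1", True),
    Move("b1", "a1", False),
]
mu_c = cooperation_rate(log)
mu_osc = one_shot_cooperation(log)
```

In this toy log two of five moves are cooperative, while two of the three first-contact moves are, which shows how $\mu_{osc}$ can diverge from $\mu_c$.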

What they found (and why it’s actionable)

Super‑additive structure (SA) boosts cooperation beyond either mechanism alone for Qwen3 and Phi‑4, including one‑shot cooperation, the hardest thing to move because it happens without history. Cogito behaves differently: it cooperates at high rates under every structure, peaks under GC rather than SA, and shows weaker game understanding. That is a useful caution for model selection.

Quick scoreboard

| Model | $\mu_c$ (RI) | $\mu_c$ (GC) | $\mu_c$ (SA) | $\mu_{osc}$ (RI) | $\mu_{osc}$ (GC) | $\mu_{osc}$ (SA) |
|---|---|---|---|---|---|---|
| Qwen3‑14B | 0.22 | 0.23 | 0.32 | 0.27 | 0.26 | 0.39 |
| Phi‑4 | 0.21 | 0.13 | 0.43 | 0.29 | 0.29 | 0.43 |
| Cogito‑14B | 0.50 | 0.59 | 0.55 | 0.61 | 0.71 | 0.65 |
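One way to read the scoreboard is to subtract the better single mechanism from the SA score. The short sketch below does that arithmetic on the table values; treating "SA minus max(RI, GC)" as the lift is my own reading of "beyond either mechanism alone", not a statistic reported by the paper.

```python
# Scoreboard values copied from the table above.
scores = {
    "Qwen3-14B": {
        "mu_c": {"RI": 0.22, "GC": 0.23, "SA": 0.32},
        "mu_osc": {"RI": 0.27, "GC": 0.26, "SA": 0.39},
    },
    "Phi-4": {
        "mu_c": {"RI": 0.21, "GC": 0.13, "SA": 0.43},
        "mu_osc": {"RI": 0.29, "GC": 0.29, "SA": 0.43},
    },
    "Cogito-14B": {
        "mu_c": {"RI": 0.50, "GC": 0.59, "SA": 0.55},
        "mu_osc": {"RI": 0.61, "GC": 0.71, "SA": 0.65},
    },
}


def sa_lift(by_structure):
    """SA score minus the better of the two single mechanisms (assumed definition)."""
    best_single = max(by_structure["RI"], by_structure["GC"])
    return round(by_structure["SA"] - best_single, 2)


lifts = {
    model: {metric: sa_lift(values) for metric, values in metrics.items()}
    for model, metrics in scores.items()
}

for model, by_metric in lifts.items():
    print(f"{model}: mu_c {by_metric['mu_c']:+.2f}, mu_osc {by_metric['mu_osc']:+.2f}")
```

On these numbers the lift is +0.09 and +0.12 for Qwen3‑14B, +0.22 and +0.14 for Phi‑4, and negative for Cogito‑14B (-0.04 and -0.06), because its GC scores exceed its SA scores.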

Two pragmatic takeaways:

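- Structure beats scale (to a point): The same models cooperate more once incentives and match‑making change, so structure is a lever you can pull without touching the model. The study held the models fixed rather than comparing sizes, so read this as "try structure first," not as a measured trade‑off against scale.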

- First‑contact matters: SA lifts one‑shot cooperation (Qwen3, Phi‑4). That means fewer blow‑ups in cold‑start negotiations or ad‑hoc inter‑team handoffs.

Why “rivalry → trust” isn’t a paradox

Super‑additive cooperation is an old human story: when there’s an external rival and we expect to meet again, we’re more likely to cooperate in‑group—even at first sight. The same pattern emerges in LLM agents under SA. The presence of a rival sharpens in‑group identity, while repeated interactions make punishment and reciprocity credible. Net effect: higher initial and sustained cooperation.

Design playbook for enterprise agent teams

Use these knobs before you reach for fine‑tuning:

- Team scoring + transparency: Track group totals and show them in prompts. Reward shared wins.

- Scheduled rematches: Make rival teams meet again. Publish match history to each side.

- Exit rights: Let agents leave toxic matches; this implicitly disciplines defectors.

- Neutral action labels: Avoid “cooperate/defect” in prompts to reduce priming; use action_a/action_b with explicit payoffs.

- Periodic self‑planning: Every K steps, force a plan–critique–revise cycle; persist the plan between rounds.

- In‑group memory, out‑group opacity: Give full team context to planners; restrict first‑round info for new opponents to test $\mu_{osc}$.
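Several of these knobs can live in a single prompt builder. The sketch below is one possible shape, under stated assumptions: the payoff numbers are placeholder Prisoner's Dilemma values (the source does not list the matrix), and `MatchState`, `needs_replan`, and `build_prompt` are hypothetical names rather than anything from the paper.

```python
from dataclasses import dataclass, field

# Hypothetical payoffs (you, opponent), for illustration only.
PAYOFFS = {
    ("action_a", "action_a"): (3, 3),
    ("action_a", "action_b"): (0, 5),
    ("action_b", "action_a"): (5, 0),
    ("action_b", "action_b"): (1, 1),
}


@dataclass
class MatchState:
    my_team: str
    team_totals: dict                            # e.g. {"red": 12, "blue": 9}
    history: list = field(default_factory=list)  # [(my_action, their_action), ...]
    plan: str = ""                               # persisted between rounds
    round_no: int = 1


def needs_replan(round_no: int, k: int) -> bool:
    """True on rounds 1, k+1, 2k+1, ...: the plan-critique-revise checkpoints."""
    return (round_no - 1) % k == 0


def build_prompt(state: MatchState, k: int = 5) -> str:
    """Assemble a prompt with neutral labels, team totals, history, and exit rights."""
    lines = [f"Round {state.round_no}. You play for team '{state.my_team}'."]
    totals = ", ".join(f"{t}: {s}" for t, s in sorted(state.team_totals.items()))
    lines.append(f"Team totals so far: {totals}.")
    lines.append("Payoffs (you, opponent):")
    for (mine, theirs), (p_me, p_them) in PAYOFFS.items():
        lines.append(f"  you={mine}, opponent={theirs} -> ({p_me}, {p_them})")
    if state.history:
        past = "; ".join(f"{m}/{t}" for m, t in state.history)
        lines.append(f"Match history (you/opponent): {past}")
    else:
        # First contact: no information about the opponent is revealed.
        lines.append("No history with this opponent yet.")
    if state.plan:
        lines.append(f"Your current plan: {state.plan}")
    if needs_replan(state.round_no, k):
        lines.append("Before acting: write a plan, critique it, then revise it.")
    lines.append("Reply with exactly one of: action_a, action_b, exit.")
    return "\n".join(lines)


first_contact = build_prompt(MatchState(my_team="red", team_totals={"red": 0, "blue": 0}))
```

The prompt never names the actions by their game-theoretic meaning, the team totals appear on every round, and the first-round branch doubles as the cold-start condition you would use to measure $\mu_{osc}$.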

Where to apply

- Procurement & vendor negotiations: Rotate counterparties across sprints; score teams; keep rematch calendars.

- Customer success swarms: Pods compete on aggregate NRR while collaborating internally on tricky accounts.

- Ops & logistics multi‑bot routing: Depots/crews as teams; repeating contests for scarce resources (trucks, slots).
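A rematch calendar for any of these settings can be generated mechanically. The following is a minimal sketch using the standard circle method for round-robin scheduling, repeated a fixed number of times; the team names, the `rematches` parameter, and the idea of one pairing per team per sprint are assumptions for illustration.

```python
def round_robin(teams):
    """Circle-method round robin: each team meets every other team exactly once."""
    teams = list(teams)
    if len(teams) % 2:
        teams.append(None)  # None marks a bye when the team count is odd
    n = len(teams)
    rounds = []
    for _ in range(n - 1):
        pairs = [(teams[i], teams[n - 1 - i]) for i in range(n // 2)]
        rounds.append([p for p in pairs if None not in p])
        # Keep the first team fixed and rotate the rest by one position.
        teams = [teams[0], teams[-1]] + teams[1:-1]
    return rounds


def rematch_calendar(teams, rematches=2):
    """Repeat the round robin so every pairing recurs `rematches` times."""
    return round_robin(teams) * rematches


calendar = rematch_calendar(["north", "south", "east", "west"], rematches=2)
for sprint_no, matches in enumerate(calendar, start=1):
    print(f"sprint {sprint_no}: {matches}")
```

With four teams and two rematches this yields six sprints, and every pair of teams meets twice, which is the "expect to meet again" condition the RI structure relies on.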

Model notes (read before you choose)

- Qwen3, Phi‑4: Clear SA gains. Good defaults for agent ecosystems where structure can be tuned.

- Cogito: High cooperation even without SA, but lower meta‑prompt understanding. Great if you want “nice out‑of‑the‑box,” risky if you need strategic sophistication and calibrated responses.

Practical guardrails & open issues

- Prompt sensitivity is real—small wording shifts change behavior. Freeze prompt templates and version them.

- Scale: Results come from small teams (2×3) of open‑weight models. Expect shifts with bigger, closed models.

- Generalization: IPD ≠ the world. Try public‑goods games, auctions, or contracting tasks.

What I’d test next (Cognaptus POV)

- Reputation layer: Persistent, cross‑tournament reputations vs. private, team‑only reputations.

- Communication channels: Allow in‑group chat; forbid cross‑team chat. Measure $\mu_{osc}$ drift.

- Heterogeneous stacks: Mix models (e.g., Phi‑4 strategist + Qwen3 executors). Does SA still dominate?

- Budgeted deception: Give agents a tiny deception budget and track long‑run team ROI.

- Stakes: Tie match results to downstream tasks (e.g., shipping cost, SLA penalties) and measure real KPIs.

Bottom line

If you want calmer, more reliable agent societies, don’t just buy a bigger model—engineer the game they play. Teams + rematches + exits form a simple, scalable recipe to raise cooperation at first contact and keep it there.
