What changes when AI stops waiting for prompts and starts sharing goals? Short answer: your entire learning stack, from pedagogy to performance reviews.

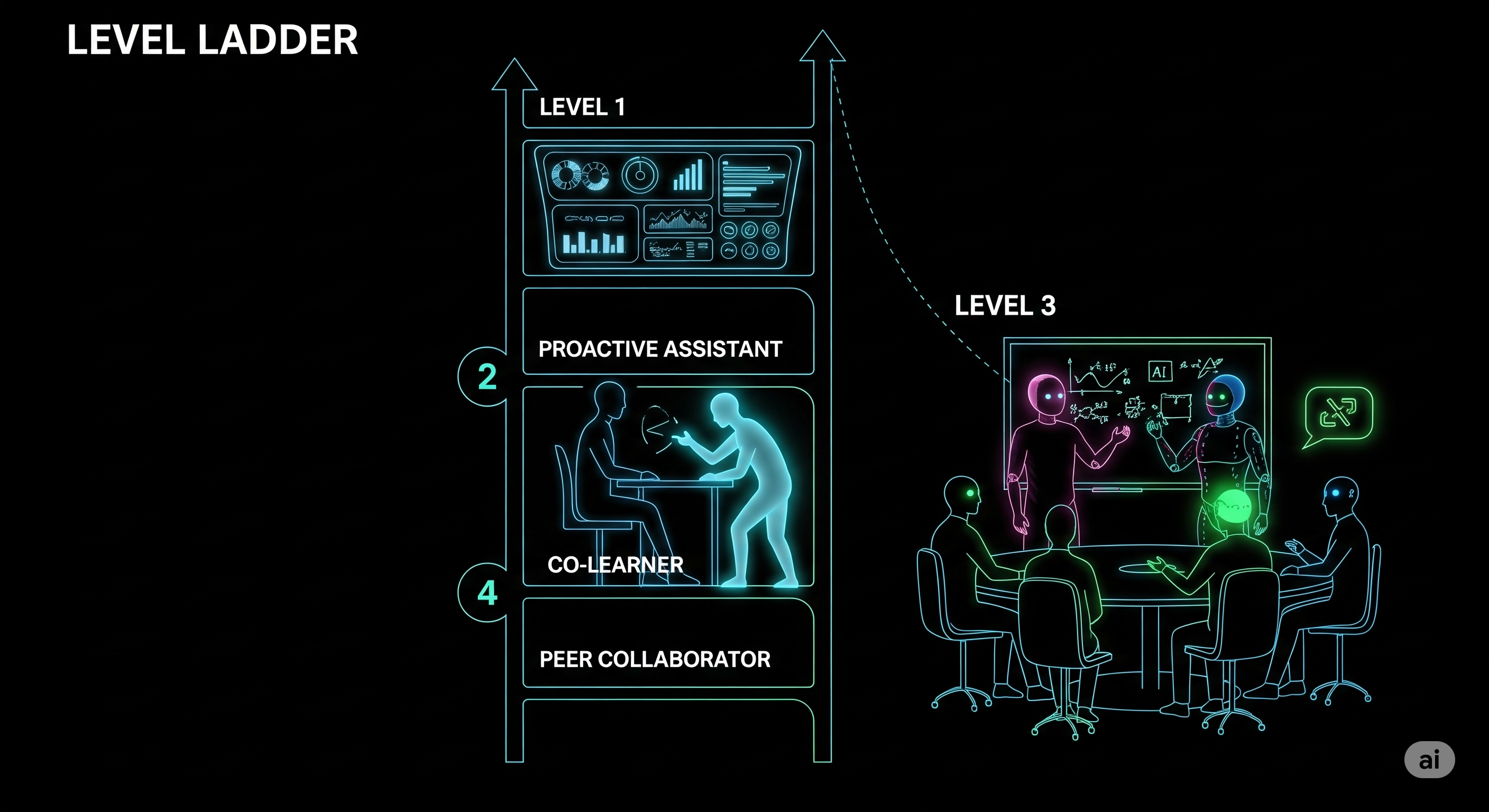

Most commentary on “AI in learning” stops at content generation and chatbot tutors. A new conceptual model, APCP (Adaptive instrument → Proactive assistant → Co‑learner → Peer collaborator), goes further: it treats AI as a socio‑cognitive teammate. That framing matters for businesses building capability academies, compliance programs, or AI‑augmented teams. Below, I unpack APCP in plain business terms, show concrete patterns you can pilot next quarter, and flag the governance traps to avoid.

The executive translation of APCP

| APCP level | AI’s behavior | Human role | Best‑fit enterprise scenarios | KPIs to watch |
|---|---|---|---|---|
| L1: Adaptive Instrument | Reactive; executes precise tasks on request | Operator/analyst | Just‑in‑time job aids, code/data tooling, SOP assistants | Cycle time, error rate, CSAT |
| L2: Proactive Assistant | Surfaces issues and opportunities without being asked | Strategist/reviewer | Writing/coding “linting,” QA prompts in audits, safety/compliance nudges | Defect catch‑rate, rework %, interruption sentiment |
| L3: Co‑Learner | Takes a slice of the task; explains its uncertainty; learns from you | Collaborator/mentor | Cross‑functional design sprints, post‑mortems, scenario planning | Novelty/quality of options, argumentative depth, transfer to new tasks |
| L4: Peer Collaborator | Stable persona and role (e.g., skeptic); negotiates goals; injects productive friction | Facilitator/teammate | Leadership labs, simulated ethics boards, red‑team/blue‑team drills | Disagreement quality, decision robustness, skill gains over time |

Why the ladder beats “AI tutor vs. no AI”: each rung changes who plans, who critiques, and who decides. That shift, not model size, is what drives (or destroys) learning and performance.

Playbook: ship one pilot per level in 90 days

Treat each level as a design constraint, not a vanity maturity metric.

L1: Adaptive Instrument (Weeks 1–3). Pattern: “Promptable SOP.” For every high‑variance task (e.g., month‑end narratives, customer responses), bind your SOPs to an AI that executes only on request and logs every transformation. Guardrails: deterministic prompts, change control on SOPs, immutable logs. Success signal: analysts report less copy‑paste and fewer spreadsheet errors.
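A minimal sketch of the “Promptable SOP” pattern, assuming each SOP is stored as a versioned prompt template and the model is any callable that maps a prompt string to text. The names `TransformLog` and `PromptableSop` are hypothetical. “Immutable” is approximated here with a hash chain: entries can still be edited in memory, but an edit is detectable by `verify()`. A production system would also need write‑once storage.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TransformLog:
    """Hypothetical append-only log; each entry's hash covers the previous hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        payload = prev_hash + json.dumps(record, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else "genesis"
        digest = self._digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry returns False."""
        prev_hash = "genesis"
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


@dataclass(frozen=True)
class PromptableSop:
    """Hypothetical binding of one SOP version to a fixed prompt template."""
    sop_id: str
    version: str
    template: str  # str.format placeholders, filled only from caller inputs

    def run(self, user: str, inputs: dict,
            model: Callable[[str], str], log: TransformLog) -> str:
        # Runs only when a human calls it: no background or proactive behavior.
        prompt = self.template.format(**inputs)
        output = model(prompt)
        log.append({"sop": f"{self.sop_id}@{self.version}", "user": user,
                    "prompt": prompt, "output": output})
        return output


# Usage with a stand-in "model" that just upper-cases the prompt.
log = TransformLog()
sop = PromptableSop("month-end-narrative", "v3",
                    "Explain the variance in {account}: {delta}")
sop.run("analyst-7", {"account": "COGS", "delta": "+4.2%"}, str.upper, log)
print(log.verify())  # True
```

Pinning the template and version in each log record is what makes the prompt “deterministic” in the change‑control sense: the same SOP version always sends the same instructions, even if model output still varies.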

L2: Proactive Assistant (Weeks 2–5). Pattern: “Nudge with veto.” While you draft an audit memo or PR FAQ, the AI flags fallacies, missing counter‑evidence, or off‑policy phrasing, but nothing changes until a human accepts or rejects each flag. Guardrails: an interruption budget (max X prompts/hour), rationale links, one‑click snooze. Success signal: a higher defect catch‑rate without “nag fatigue.”
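The “nudge with veto” guardrails can be sketched as a small gate in front of the assistant. This is a minimal sketch under stated assumptions: `NudgeGate`, `Nudge`, and `decide` are hypothetical names, the budget is a sliding one‑hour window, and timestamps (in seconds) are passed in explicitly so the logic is easy to test. The text leaves the budget as “max X prompts/hour,” so `max_per_window` remains your choice.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Nudge:
    message: str
    rationale_url: str              # every flag links to why it was raised
    decision: Optional[str] = None  # "accept" or "reject"; set only by a human


class NudgeGate:
    """Hypothetical gate enforcing an interruption budget and one-click snooze."""

    def __init__(self, max_per_window: int, window_s: float = 3600.0):
        self.max_per_window = max_per_window
        self.window_s = window_s
        self.shown: deque = deque()  # timestamps of nudges shown in the window
        self.snoozed_until = 0.0

    def snooze(self, now: float, seconds: float) -> None:
        self.snoozed_until = now + seconds

    def try_show(self, now: float) -> bool:
        """Return True if a nudge may interrupt the user at time `now`."""
        if now < self.snoozed_until:
            return False
        # Forget nudges that have aged out of the sliding window.
        while self.shown and now - self.shown[0] >= self.window_s:
            self.shown.popleft()
        if len(self.shown) >= self.max_per_window:
            return False  # budget spent: hold or drop the nudge
        self.shown.append(now)
        return True


def decide(nudge: Nudge, accept: bool) -> Nudge:
    """The veto: nothing is applied until a human records accept or reject."""
    nudge.decision = "accept" if accept else "reject"
    return nudge


gate = NudgeGate(max_per_window=2)
print([gate.try_show(t) for t in (0, 10, 20)])  # [True, True, False]
```

A sliding window avoids the burst that a fixed “top of the hour” reset allows, where a user could get a full budget of nudges at 10:59 and another at 11:00.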

L3: Co‑Learner (Weeks 4–8). Pattern: “Two‑track work.” Humans ideate while the AI mines the internal corpus and external sources to stress‑test ideas and propose variants. The team then reconvenes for joint synthesis, with the AI exposing its confidence and any missing data. Guardrails: an explanation‑first UI, uncertainty ranges, source provenance, and a “teach‑back” step in which the team corrects the AI and the system stores the delta. Success signal: option quality improves, not just quantity, and rationales cite diverse sources.
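The teach‑back step needs somewhere to store what the team changed. A minimal sketch, assuming corrections are captured as before/after text per topic: the delta is computed with Python’s standard `difflib.unified_diff`, and recent correction notes are replayed as context for the next session. `TeachBackStore` and its methods are hypothetical, and how lessons reach the model (prompt context, retrieval, fine‑tuning) is a design choice the pattern leaves open.

```python
from __future__ import annotations

import difflib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Correction:
    topic: str
    ai_text: str
    team_text: str
    note: str       # the lesson in one line, written by the team
    logged_at: str  # ISO-8601 UTC timestamp

    def delta(self) -> list[str]:
        """Line-level diff between the AI's version and the team's correction."""
        return list(difflib.unified_diff(
            self.ai_text.splitlines(), self.team_text.splitlines(),
            fromfile="ai", tofile="team", lineterm=""))


class TeachBackStore:
    """Hypothetical store that keeps team corrections per topic."""

    def __init__(self) -> None:
        self._by_topic: dict[str, list[Correction]] = {}

    def record(self, topic: str, ai_text: str, team_text: str,
               note: str) -> Correction | None:
        if ai_text == team_text:
            return None  # nothing was corrected, so nothing to learn
        c = Correction(topic, ai_text, team_text, note,
                       datetime.now(timezone.utc).isoformat())
        self._by_topic.setdefault(topic, []).append(c)
        return c

    def context_for(self, topic: str, limit: int = 3) -> str:
        """Most recent lessons for a topic, ready to prepend to the next prompt."""
        recent = self._by_topic.get(topic, [])[-limit:]
        return "\n".join(f"- {c.note}" for c in recent)
```

Keeping the diff alongside a human‑written note matters: the diff shows exactly what changed, while the note is the generalizable lesson that gets replayed.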

L4: Peer Collaborator (Weeks 6–12). Pattern: “Constructive dissenter.” In leadership training or risk committees, instantiate an AI persona (e.g., Skeptic, Steward, Customer Advocate) that argues from a coherent epistemic stance and creates productive friction. Guardrails: disclose the AI’s identity; calibrate its allowed error rate so debates stay real; rotate roles; debrief with human‑only reflection. Success signal: better disagreement hygiene and more resilient decisions under stress tests.
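One way to make the dissenter guardrails concrete is to treat each persona as configuration rather than free‑form prompting. The sketch below is illustrative only: the `Persona` fields, the round‑robin rotation, and the `[AI: ...]` disclosure label are assumptions, not a prescribed format, and the generated system prompt would still be sent to whatever model you use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str
    stance: str                # the coherent epistemic position it argues from
    does_not: tuple[str, ...]  # explicit out-of-scope actions (role clarity)


# Hypothetical persona library; stances paraphrase the roles named in the text.
PERSONAS = (
    Persona("Skeptic", "Challenge assumptions and ask what evidence would change the plan.",
            ("cast votes", "approve decisions")),
    Persona("Steward", "Weigh long-term obligations, risk, and who bears the cost.",
            ("cast votes", "approve decisions")),
    Persona("Customer Advocate", "Argue from the customer's experience and trust.",
            ("cast votes", "approve decisions")),
)


def persona_for_session(session_index: int) -> Persona:
    """Rotate roles round-robin so no single stance becomes the house view."""
    return PERSONAS[session_index % len(PERSONAS)]


def system_prompt(p: Persona) -> str:
    limits = "; ".join(p.does_not)
    return (f"You are an AI participant playing the {p.name}. {p.stance} "
            f"State that you are an AI if anyone asks. You never: {limits}.")


def disclose(p: Persona, text: str) -> str:
    """Label every contribution so humans always know it came from the AI."""
    return f"[AI: {p.name}] {text}"


print(disclose(persona_for_session(0), "What would make this plan fail in Q3?"))
# [AI: Skeptic] What would make this plan fail in Q3?
```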

Designing for functional collaboration (not metaphysical magic)

A hard truth: your AI doesn’t need “true” consciousness to be a good teammate. It needs to behave like one. That means instrumenting four boring but decisive capabilities:

- Turn‑taking & timing. Late or spammy interjections tank trust. Enforce interruption budgets and context windows per task phase.

- Role clarity. Give the agent a job title and an explicit list of things it does not do. Ambiguous AI is worse than no AI.

- Explanations, not vibes. Every suggestion ships with: claim → evidence → uncertainty → alternatives.

- Teachability. Make human corrections first‑class: log them, generalize them, and show users that yesterday’s coaching persists today.
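The “claim → evidence → uncertainty → alternatives” rule is easiest to enforce as a schema that the agent’s output must satisfy before it reaches a user. A minimal sketch, assuming uncertainty is expressed as a 0–1 confidence score and evidence as source identifiers; the `Suggestion` type and its validation rules are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    """Hypothetical schema: a suggestion cannot be built without its explanation."""
    claim: str
    evidence: tuple[str, ...]      # source IDs or citations backing the claim
    confidence: float              # 0.0-1.0, the agent's stated certainty
    alternatives: tuple[str, ...]  # other reasonable options

    def __post_init__(self) -> None:
        # Reject incomplete suggestions at construction time.
        if not self.claim.strip():
            raise ValueError("missing claim")
        if not self.evidence:
            raise ValueError("no evidence: this is a vibe, not a suggestion")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        if not self.alternatives:
            raise ValueError("offer at least one alternative")

    def render(self) -> str:
        return "\n".join([
            f"Claim: {self.claim}",
            f"Evidence: {'; '.join(self.evidence)}",
            f"Uncertainty: {self.confidence:.0%} confidence",
            f"Alternatives: {'; '.join(self.alternatives)}",
        ])


s = Suggestion("Flag clause 4.2 for legal review",
               ("policy-118", "audit-2023-07"), 0.7,
               ("Accept as written", "Ask the vendor to clarify"))
print(s.render())
```

Validating at construction means a malformed suggestion fails loudly in the pipeline instead of quietly reaching the user without its evidence.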

Governance that actually fits APCP

- Accountability map. For every pilot, specify who is answerable for what across L1–L4. At L2+, the human is always the final authority, and the system must make this obvious in both the UI and the audit logs.

- Bias & viewpoint hygiene. L3–L4 agents should be plural: run at least one counter‑stance agent to reduce monoculture reasoning.

- Over‑reliance controls. Add scheduled unplugged steps (human‑only drafts or debates) and measure performance without the agent.

- Privacy & provenance. L3–L4 almost always touch sensitive corpora. Enforce tiered access, inline citations, and redaction policies by default.
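The governance checklist above can be encoded as a pre‑launch lint over each pilot’s configuration. A minimal sketch: the `Pilot` fields and the specific thresholds (one counter‑stance agent, one unplugged step, a declared data tier for L3–L4) are assumptions drawn from the bullets, not a standard, and `governance_gaps` is a hypothetical helper.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    L1 = 1  # Adaptive instrument
    L2 = 2  # Proactive assistant
    L3 = 3  # Co-learner
    L4 = 4  # Peer collaborator


@dataclass(frozen=True)
class Pilot:
    """Hypothetical pilot config; field names are illustrative."""
    name: str
    level: Level
    accountable_owner: str      # named human answerable for outcomes
    final_authority: str        # "human" or "agent"
    counter_stance_agents: int  # agents arguing an opposing view
    unplugged_steps: int        # scheduled human-only drafts or debates
    data_tier: str = ""         # e.g. "internal", "restricted"; "" if undeclared


def governance_gaps(p: Pilot) -> list[str]:
    """Return unmet checks; an empty list means the pilot may launch."""
    gaps: list[str] = []
    if not p.accountable_owner:
        gaps.append("accountability: name an owner")
    if p.level >= Level.L2 and p.final_authority != "human":
        gaps.append("accountability: human must hold final authority at L2+")
    if p.level >= Level.L3 and p.counter_stance_agents < 1:
        gaps.append("viewpoint hygiene: add a counter-stance agent")
    if p.unplugged_steps < 1:
        gaps.append("over-reliance: schedule an unplugged step")
    if p.level >= Level.L3 and not p.data_tier:
        gaps.append("privacy: declare a data access tier")
    return gaps
```

Using `IntEnum` lets level thresholds read naturally (`level >= Level.L3`), which keeps the rules close to how the bullets phrase them.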

What this means for L&D and capability academies

- Curriculum shifts from content to choreography. Instructors become learning architects—designing when the AI is an instrument vs. an interlocutor.

- Assessment shifts from answers to interaction quality. Track disagreement quality, self‑explanation richness, and transfer when the AI is absent.

- Tooling shifts from chatbots to teamware. You need session memory, persona libraries, veto workflows, and teachable moments captured as institutional knowledge.

A minimal KPI bundle (copy/paste for your PRD)

- Effectiveness: defect catch‑rate (L2), option novelty/quality (L3), decision robustness under perturbation (L4).

- Efficiency: cycle time delta (L1–L2), rework %.

- Human factors: interruption sentiment, trust calibration (over/under‑reliance), perceived contribution.

- Governance: provenance coverage %, correction adoption latency, disclosure compliance.
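If you want these KPIs computed the same way across pilots, pin down the formulas early. The definitions below are one reasonable reading, assumed rather than specified by the bundle: catch‑rate counts defects caught before release against all known defects, cycle‑time delta compares medians, and adoption latency is the median hours from a correction being logged to its first reuse. All function names are hypothetical.

```python
from __future__ import annotations

from statistics import median

# Hypothetical KPI helpers; every definition here is an assumption.


def _share(part: float, whole: float) -> float:
    """Safe ratio: returns 0.0 when there is nothing to measure."""
    return part / whole if whole else 0.0


def defect_catch_rate(caught_before_release: int, escaped: int) -> float:
    # Effectiveness (L2): share of all known defects the review stage caught.
    return _share(caught_before_release, caught_before_release + escaped)


def rework_pct(reworked_items: int, delivered_items: int) -> float:
    # Efficiency: percentage of delivered items that needed rework.
    return _share(100 * reworked_items, delivered_items)


def cycle_time_delta(baseline_hours: list[float], pilot_hours: list[float]) -> float:
    # Efficiency (L1-L2): relative change in median cycle time; negative = faster.
    base = median(baseline_hours)
    return (median(pilot_hours) - base) / base


def provenance_coverage_pct(claims_with_source: int, total_claims: int) -> float:
    # Governance: percentage of AI claims that carry a citation.
    return _share(100 * claims_with_source, total_claims)


def correction_adoption_latency(events: list[tuple[float, float]]) -> float:
    # Governance: median hours over (logged_at, first_reused_at) pairs.
    # Raises statistics.StatisticsError if no correction has been reused yet.
    return median(reused - logged for logged, reused in events)


print(defect_catch_rate(18, 2))  # 0.9
```

Medians are used for time‑based metrics because a few stalled tasks or long‑ignored corrections would otherwise dominate a mean.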

The takeaway

Stop asking whether AI can “replace the tutor.” Start asking which APCP level a task deserves, and design the human‑AI choreography accordingly. The payoff isn’t just faster content; it’s stronger teams that argue, explain, and decide better.

Cognaptus: Automate the Present, Incubate the Future.