The Market-Moving Puzzle of Fedspeak

When the U.S. Federal Reserve speaks, markets move. But the Fed’s public language—often called Fedspeak—is deliberately nuanced, shaping expectations without making explicit commitments. Misinterpreting it can cost billions, whether in trading desks’ misaligned bets or policymakers’ mistimed responses.

Even top-performing LLMs like GPT-4 can classify central bank stances (hawkish, dovish, neutral), but they do so without explaining their reasoning or flagging when they might be wrong. In high-stakes finance, that’s a liability.

The New Approach: Domain Reasoning + Uncertainty Awareness

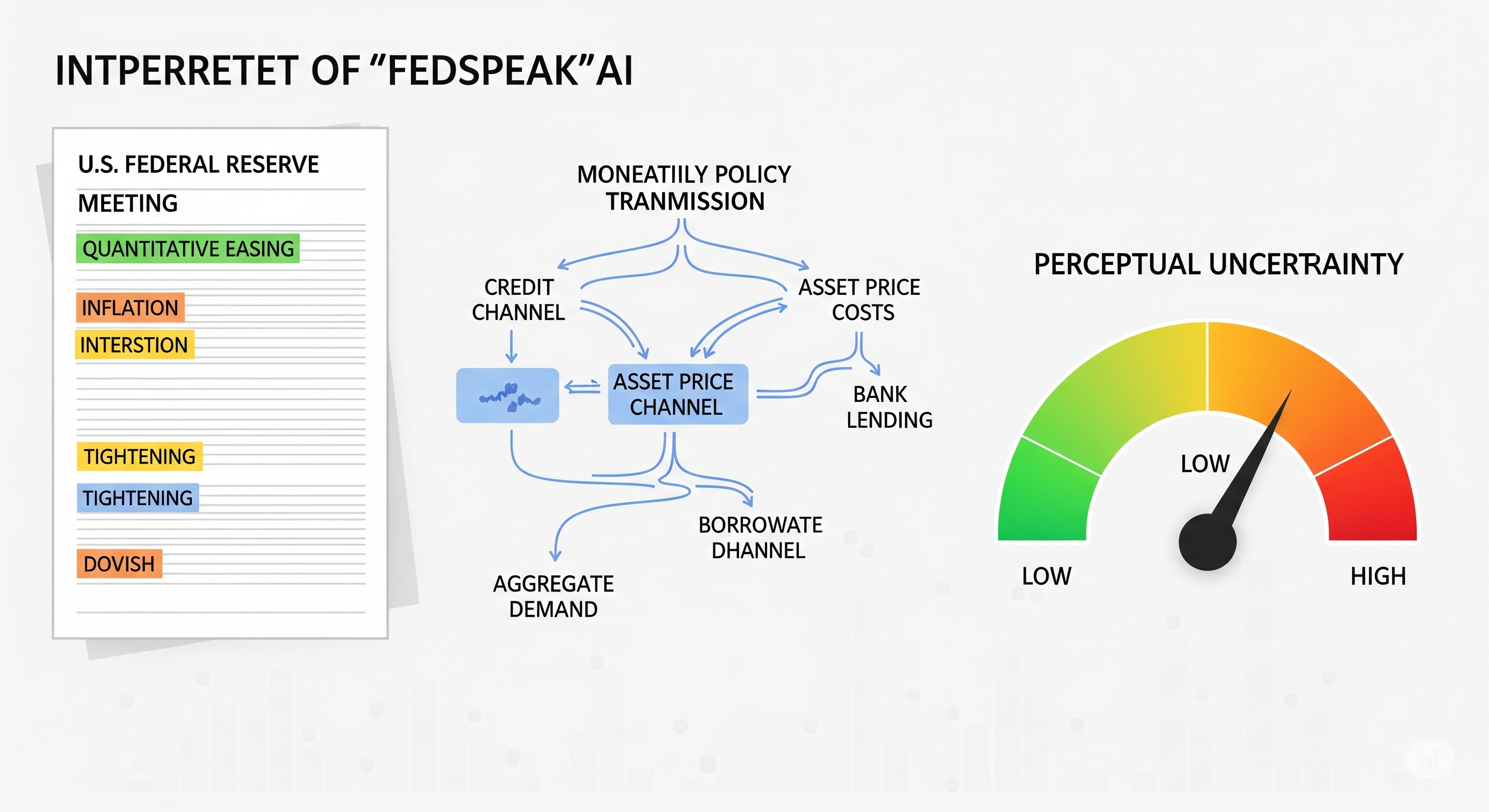

A recent study proposes an LLM-based, uncertainty-aware framework that not only predicts the Fed’s stance but also explains the path it took to get there—and knows when it’s not confident.

1. Embedding Monetary Policy Transmission Paths

Instead of feeding the raw text to a model, the system:

- Extracts economic entities (e.g., employment, inflation, credit growth)

- Maps causal and conditional relations between them

- Places these within the monetary policy transmission mechanism (credit, asset price, aggregate demand channels)

- Generates a structured reasoning chain from observed economic conditions to recommended policy stance

Example:

Economic activity strengthened → Credit channel expansion → Wealth effects → Contractionary policy advice

This mirrors how human analysts think, giving the model a knowledge-rich context.
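To make the idea of a structured reasoning chain concrete, here is a minimal sketch of how one transmission path might be represented. The `TransmissionPath` class, its field names, and the `CHANNELS` identifiers are hypothetical illustrations, not the study's actual data model; only the three channel names and the example chain come from the description above.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical identifiers for the three channels named in the text.
CHANNELS = ("credit", "asset_price", "aggregate_demand")


@dataclass
class TransmissionPath:
    """One reasoning chain from an observed condition to a policy stance."""
    observation: str                                  # observed economic condition
    channel: str                                      # one of CHANNELS
    steps: List[str] = field(default_factory=list)    # intermediate effects, in order
    advice: str = "neutral"                           # e.g. "contractionary"

    def __post_init__(self) -> None:
        # Reject channels outside the assumed transmission mechanism.
        if self.channel not in CHANNELS:
            raise ValueError(f"unknown channel: {self.channel}")

    def render(self) -> str:
        """Flatten the chain into the arrow notation used in the example."""
        nodes = [self.observation, *self.steps,
                 f"{self.advice.capitalize()} policy advice"]
        return " → ".join(nodes)


path = TransmissionPath(
    observation="Economic activity strengthened",
    channel="credit",
    steps=["Credit channel expansion", "Wealth effects"],
    advice="contractionary",
)
rendered = path.render()
print(rendered)
```

A rendered chain like this is the kind of knowledge-rich context that could be placed in the prompt alongside the raw statement, and it doubles as an audit trail for the final label.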

2. Measuring Perceptual Uncertainty (PU)

The model quantifies how sure it is about its prediction using:

- Cognitive Risk (CR): Missing domain knowledge

- Environmental Ambiguity (EA): Input uncertainty from vague or conflicting statements

- PU = CR × EA

If PU is high, the model switches from an aggressive decoding strategy to a conservative one, avoiding overconfident mistakes.
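The switch described above can be sketched in a few lines. Everything beyond the formula PU = CR × EA is an assumption made for illustration: that CR and EA are scaled to [0, 1], the `threshold` value, and what "aggressive" and "conservative" decoding mean in terms of temperature and top-p are not specified in this summary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecodingConfig:
    temperature: float
    top_p: float


# Hypothetical settings: the source does not define the two strategies.
AGGRESSIVE = DecodingConfig(temperature=0.7, top_p=0.95)
CONSERVATIVE = DecodingConfig(temperature=0.0, top_p=1.0)


def perceptual_uncertainty(cognitive_risk: float,
                           environmental_ambiguity: float) -> float:
    """PU = CR × EA, assuming both inputs are scaled to [0, 1]."""
    for name, value in (("cognitive_risk", cognitive_risk),
                        ("environmental_ambiguity", environmental_ambiguity)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return cognitive_risk * environmental_ambiguity


def choose_decoding(pu: float, threshold: float = 0.25) -> DecodingConfig:
    """Fall back to conservative decoding once PU reaches the threshold."""
    return CONSERVATIVE if pu >= threshold else AGGRESSIVE


pu = perceptual_uncertainty(0.5, 0.5)
config = choose_decoding(pu)
```

Because PU is a product, it is only high when the model both lacks domain knowledge and faces ambiguous input; a low score on either factor pulls the total down.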

Proven Gains in Accuracy and Reliability

On a benchmark of FOMC communications (1996–2022):

- Macro-F1: +6.6% over the best baseline

- Weighted-F1: +6.2% over the best baseline

- Transmission path reasoning was the biggest driver—removing it cut Macro-F1 by 7.9%

Reliability Calibration:

- Low-PU predictions: Macro-F1 = 0.7791

- High-PU predictions: Macro-F1 = 0.2473

- Strong statistical correlation between high PU and model error (p < 1e-5)
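For readers who want to run the same kind of reliability check on their own predictions, the sketch below splits records into low-PU and high-PU buckets and computes Macro-F1 for each. The threshold and the toy records are invented for illustration and are not the study's data; the function names are hypothetical.

```python
LABELS = ("hawkish", "dovish", "neutral")


def macro_f1(y_true, y_pred, labels=LABELS):
    """Unweighted mean of per-class F1; a class with no support scores 0."""
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def macro_f1_by_pu(records, threshold):
    """records: iterable of (true_label, predicted_label, pu) tuples."""
    buckets = {"low": ([], []), "high": ([], [])}
    for true_label, pred_label, pu in records:
        key = "high" if pu >= threshold else "low"
        buckets[key][0].append(true_label)
        buckets[key][1].append(pred_label)
    # An empty bucket has no defined score, so report None for it.
    return {k: macro_f1(t, p) if t else None for k, (t, p) in buckets.items()}


# Toy records for illustration only (not from the benchmark).
records = [
    ("hawkish", "hawkish", 0.1),
    ("dovish", "dovish", 0.1),
    ("neutral", "neutral", 0.2),
    ("hawkish", "dovish", 0.8),
    ("dovish", "dovish", 0.9),
    ("neutral", "hawkish", 0.7),
]
result = macro_f1_by_pu(records, threshold=0.5)
```

A large gap between the two buckets, like the one reported above, is what indicates that PU is tracking real model error rather than noise.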

Why This Matters for Finance and Policy

- For traders: PU can act as a risk flag in algorithmic strategies, triggering manual review or smaller position sizes.

- For central banks: The framework can help monitor, in real time, how their words are likely to be interpreted.

- For AI product builders: Shows the power of domain-augmented reasoning over raw zero-shot performance.
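As a rough illustration of the trading use case, the sketch below turns a PU score into a position-size multiplier and a manual-review flag. The linear scaling rule, the `review_threshold`, and the function name are hypothetical choices, not something the study prescribes; it also assumes PU lies in [0, 1].

```python
def position_scale(pu: float, review_threshold: float = 0.5,
                   floor: float = 0.0):
    """Return (size_multiplier, needs_manual_review) for a given PU score.

    Assumes PU is in [0, 1]. The multiplier shrinks linearly as PU rises,
    and predictions at or above the threshold are flagged for review.
    """
    if not 0.0 <= pu <= 1.0:
        raise ValueError(f"pu must be in [0, 1], got {pu}")
    needs_review = pu >= review_threshold
    scale = max(floor, 1.0 - pu)
    return scale, needs_review


confident = position_scale(0.25)
uncertain = position_scale(0.75)
```

In practice the scaling curve and the threshold would be tuned against historical performance rather than fixed by hand.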

By making the model’s reasoning process explicit, financial firms can audit AI predictions—critical for compliance and trust.

Visualizing the Difference

Traditional pipeline: Fedspeak → LLM → Stance label

This framework: Fedspeak → Entity extraction → Transmission path reasoning → PU scoring → Stance label (with confidence)

| Step | Economic Phenomenon | Channel | Path Step | Policy Advice |
|---|---|---|---|---|
| 1 | Strong labor market | Credit | ↑ loan demand → ↑ credit creation | Contractionary |
| 2 | Rising asset valuations | Asset Price | ↑ wealth effects → ↑ spending | Contractionary |

Closing Insight

This isn’t just about predicting the Fed’s stance. It’s about building AI that knows when to speak, when to hedge, and when to defer to humans. In a world where a misplaced word can rattle markets, confidence isn’t just a feeling—it’s a number you can measure.

Cognaptus: Automate the Present, Incubate the Future