When biologists talk about ecosystems, they speak of inheritance, mutation, adaptation, and drift. In the open-source AI world, the same vocabulary fits surprisingly well. A new empirical study of 1.86 million Hugging Face models maps the family trees of machine learning (ML) development and finds that AI evolution follows its own rules — with implications for openness, specialization, and sustainability.

The Ecosystem as a Living Organism

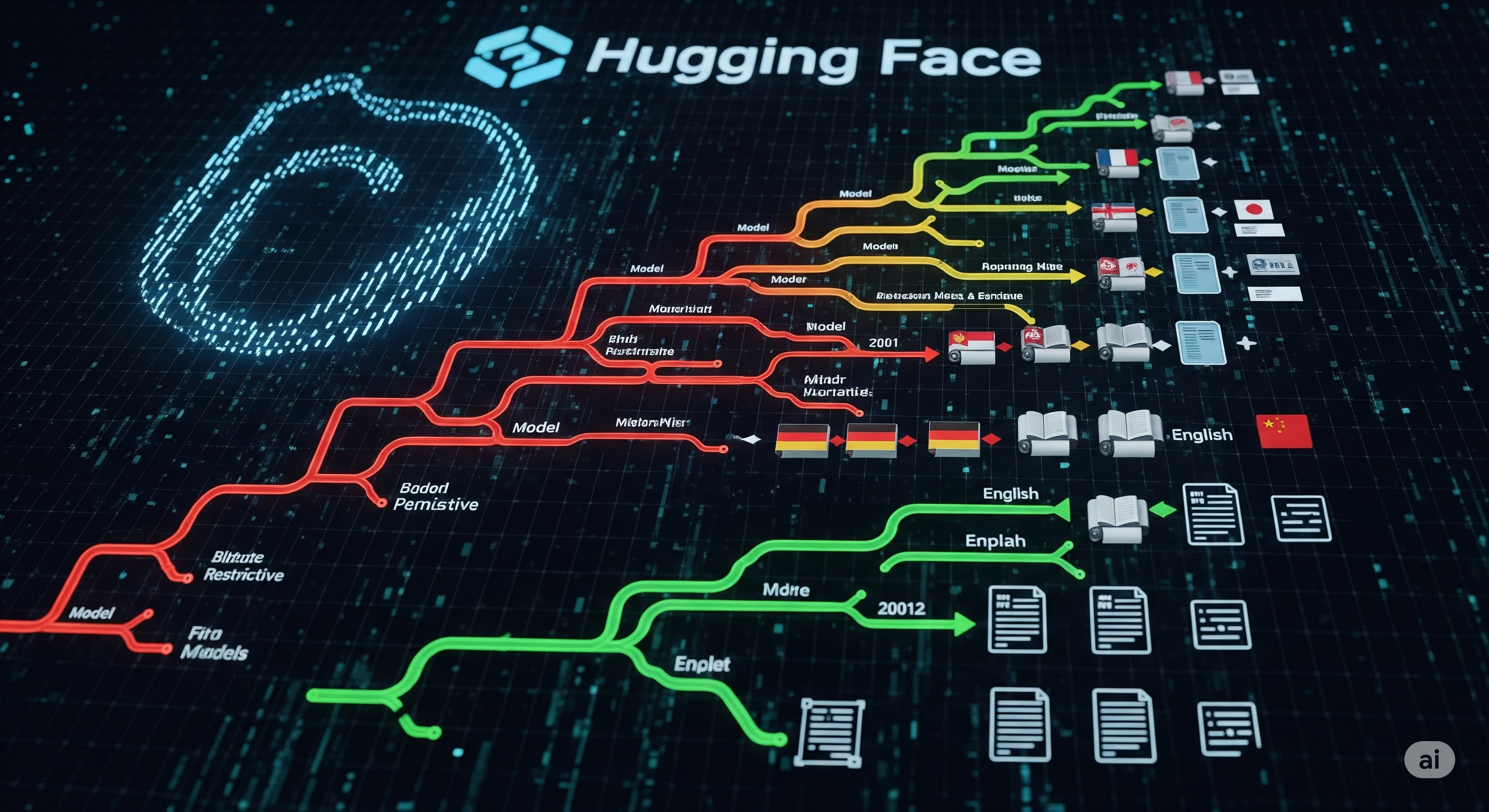

Hugging Face isn’t just a repository; it’s a breeding ground for derivative models. Pretrained models are fine-tuned, quantized, adapted, and sometimes merged, producing sprawling “phylogenies” that resemble biological family trees. The authors’ dataset links each model to its parents, letting them trace “genetic” similarity through metadata and model cards. The result: sibling models often share more traits than parent–child pairs. If fine-tuning changed traits at random, siblings would drift away from their shared parent in different directions and end up less alike than each is to the parent. Instead they move together, a sign that fine-tuning mutations are fast, non-random, and directionally biased.
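To make the sibling-versus-parent comparison concrete, here is a minimal Python sketch, not the study’s actual method. It assumes each model’s traits (license, languages, task, and so on) have already been extracted from its metadata into a set of strings, and it uses Jaccard overlap as a stand-in similarity measure. The trait encoding and the example family are hypothetical.

```python
from itertools import combinations
from statistics import mean


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of traits two models have in common (1.0 = identical trait sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def compare_family(parent: set[str], children: list[set[str]]) -> tuple[float, float]:
    """Return (mean parent-child similarity, mean sibling similarity).

    Needs at least two children, otherwise there are no sibling pairs to average.
    """
    parent_child = mean(jaccard(parent, child) for child in children)
    siblings = mean(jaccard(a, b) for a, b in combinations(children, 2))
    return parent_child, siblings


# Hypothetical trait sets: a multilingual, restrictively licensed base model
# and two fine-tunes that narrow to English and relicense the same way.
parent = {"license:llama2", "lang:en", "lang:fr", "lang:de", "task:text-generation"}
child_a = {"license:apache-2.0", "lang:en", "task:text-generation"}
child_b = {"license:apache-2.0", "lang:en", "task:text-generation", "quantized"}

pc, sib = compare_family(parent, [child_a, child_b])
print(f"parent-child: {pc:.2f}, siblings: {sib:.2f}")
```

In this toy family both children drop the parent’s extra languages and change license in the same direction, so they resemble each other (0.75) far more than either resembles the parent (about 0.31): the directional-mutation signature described above.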

This is not neutral drift. It’s purposeful adaptation.

Three Major Drifts in AI Evolution

- Licenses, from restrictive to permissive: Commercial and restrictive licenses (e.g., Gemma and LLaMA variants) tend to mutate into permissive or copyleft ones (Apache-2.0, MIT, GPL-3.0). This “relaxation” defies the expectation that derivatives keep their parents’ terms, suggesting that market and community norms of openness often outweigh legal enforcement.

- Languages, from multilingual to English-only: Base models often support many languages, but fine-tunes narrow their scope, overwhelmingly toward English. Despite the growth of Chinese-hosted models, there is no symmetrical drift toward Chinese. The pull toward English reflects global market dominance and the developer community’s primary working language.

- Documentation, from rich to minimal: Model cards shrink by about 50% with each generation. Automatically generated templates replace bespoke write-ups, a gain in efficiency but a loss of nuanced transparency.
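Each of these drifts is a claim about direction: changes from A to B outnumber changes from B to A. One way to measure that, sketched minimally here, assumes you already have (parent value, child value) pairs for a single trait along each parent→child edge, then counts the changed pairs and compares the two directions. The edge list is hypothetical, and the normalized `drift_score` is an illustrative choice rather than the study’s statistic; the same code works for license labels.

```python
from collections import Counter


def transition_counts(edges: list[tuple[str, str]]) -> Counter:
    """Count (parent_value, child_value) pairs, ignoring edges where the trait is unchanged."""
    return Counter((parent, child) for parent, child in edges if parent != child)


def drift_score(counts: Counter, a: str, b: str) -> float:
    """Normalized net flow from a to b in [-1, 1]; 0.0 when no a<->b transitions exist."""
    forward, backward = counts[(a, b)], counts[(b, a)]  # missing keys count as 0
    total = forward + backward
    return 0.0 if total == 0 else (forward - backward) / total


# Hypothetical language labels along parent -> child fine-tuning edges.
edges = [
    ("multilingual", "en"),
    ("multilingual", "en"),
    ("multilingual", "en"),
    ("en", "multilingual"),
    ("multilingual", "zh"),
    ("en", "en"),  # unchanged, so not counted
]

counts = transition_counts(edges)
print(counts.most_common(1))
print(drift_score(counts, "multilingual", "en"))
```

A score of 0.5 for multilingual → English means three of the four transitions between those two labels point toward English. The single multilingual → Chinese edge would score 1.0, a reminder that the score needs enough observations before it says anything.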

Tasks Evolve Like a Pipeline

Perhaps the most striking pattern is the order in which tasks mutate. Across generations, models tend to move from low-level, general capabilities (feature extraction, fill-mask) → cross-modal translation (text-to-image, speech-to-text) → classification → human-aligned reasoning tasks (reinforcement learning). This progression mirrors the machine learning lifecycle: pretraining → adaptation → alignment.
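One hedged way to recover such an ordering from data, assuming each parent→child edge is labeled with both models’ tasks, is a net-flow heuristic: tasks that models mostly leave rank early, and tasks they mostly enter rank late. This is a simplification, not the study’s method; a more rigorous approach would search for the ordering that minimizes backward transitions. The transitions below are hypothetical.

```python
from collections import defaultdict


def order_tasks(transitions: list[tuple[str, str]]) -> list[str]:
    """Rank tasks by net flow: (times models leave a task) minus (times they enter it)."""
    net: dict[str, int] = defaultdict(int)
    for parent_task, child_task in transitions:
        if parent_task == child_task:
            continue  # no task mutation on this edge
        net[parent_task] += 1  # a model moved away from this task
        net[child_task] -= 1   # a model moved into this task
    # Tasks that mostly feed others come first; tasks that mostly absorb come last.
    return sorted(net, key=lambda task: net[task], reverse=True)


# Hypothetical (parent task, child task) pairs.
transitions = [
    ("fill-mask", "speech-to-text"),
    ("fill-mask", "text-classification"),
    ("speech-to-text", "text-classification"),
    ("text-classification", "reinforcement-learning"),
    ("speech-to-text", "reinforcement-learning"),
    ("fill-mask", "fill-mask"),
]

print(order_tasks(transitions))
```

Here the net flows are fill-mask +2, speech-to-text +1, text-classification −1, and reinforcement-learning −2, so the recovered order runs from general pretraining tasks toward alignment-style tasks.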

Why This Matters for Business and Policy

- Compliance Risks: The license drift toward permissiveness may be celebrated by open-source advocates, but it could mask large-scale non-compliance with upstream agreements, a latent legal risk.

- Market Concentration: English-language dominance concentrates models on the largest addressable market but may limit growth in emerging linguistic niches.

- Transparency Trade-offs: Automated documentation speeds deployment but could erode trust if end users cannot assess model provenance and limitations.
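For teams concerned about the compliance risk, a first-pass audit could flag every derivative that drops a restrictive parent license. The sketch below is hypothetical throughout: the `Edge` record, the model IDs, and the `RESTRICTIVE_LICENSES` set are illustrative assumptions. A flagged edge is only a prompt to read the actual license terms, not a finding of non-compliance.

```python
from dataclasses import dataclass


@dataclass
class Edge:
    """One parent -> child derivation with each model's declared license."""
    parent: str
    child: str
    parent_license: str
    child_license: str


# Hypothetical: upstream licenses whose terms are assumed to carry over to derivatives.
RESTRICTIVE_LICENSES = {"llama2", "gemma"}


def flag_relicensed(edges: list[Edge]) -> list[Edge]:
    """Return edges where a derivative declares a different license than its restrictive parent."""
    return [
        e for e in edges
        if e.parent_license in RESTRICTIVE_LICENSES and e.child_license != e.parent_license
    ]


# Hypothetical model IDs and licenses.
edges = [
    Edge("org/base-7b", "user/base-7b-chat", "llama2", "apache-2.0"),
    Edge("org/base-7b", "user/base-7b-gguf", "llama2", "llama2"),
    Edge("org/bert", "user/bert-ner", "apache-2.0", "mit"),
]

flagged = flag_relicensed(edges)
for e in flagged:
    print(f"{e.child}: {e.parent_license} -> {e.child_license}")
```

Only the chat fine-tune is flagged: the quantized copy keeps its parent’s license, and the third edge starts from a license outside the assumed restrictive set.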

For AI product strategists, this research is a map of where the gravitational pulls in the open-source ecosystem are strongest. It shows that even without central coordination, certain traits — openness, English focus, minimal documentation — emerge predictably. The question is whether your AI strategy rides these currents or intentionally swims against them.

Cognaptus: Automate the Present, Incubate the Future