Most AI conversations equate “emotional intelligence” with sentiment labels. Humans don’t work that way. We appraise situations—Was it fair? Could I control it? How much effort will this take?—and then feel. This study puts that lens on large language models and asks a sharper question: Do LLMs reason about emotions through cognitive appraisals, and are those appraisals human‑plausible?

What CoRE Actually Measures (and Why It’s Different)

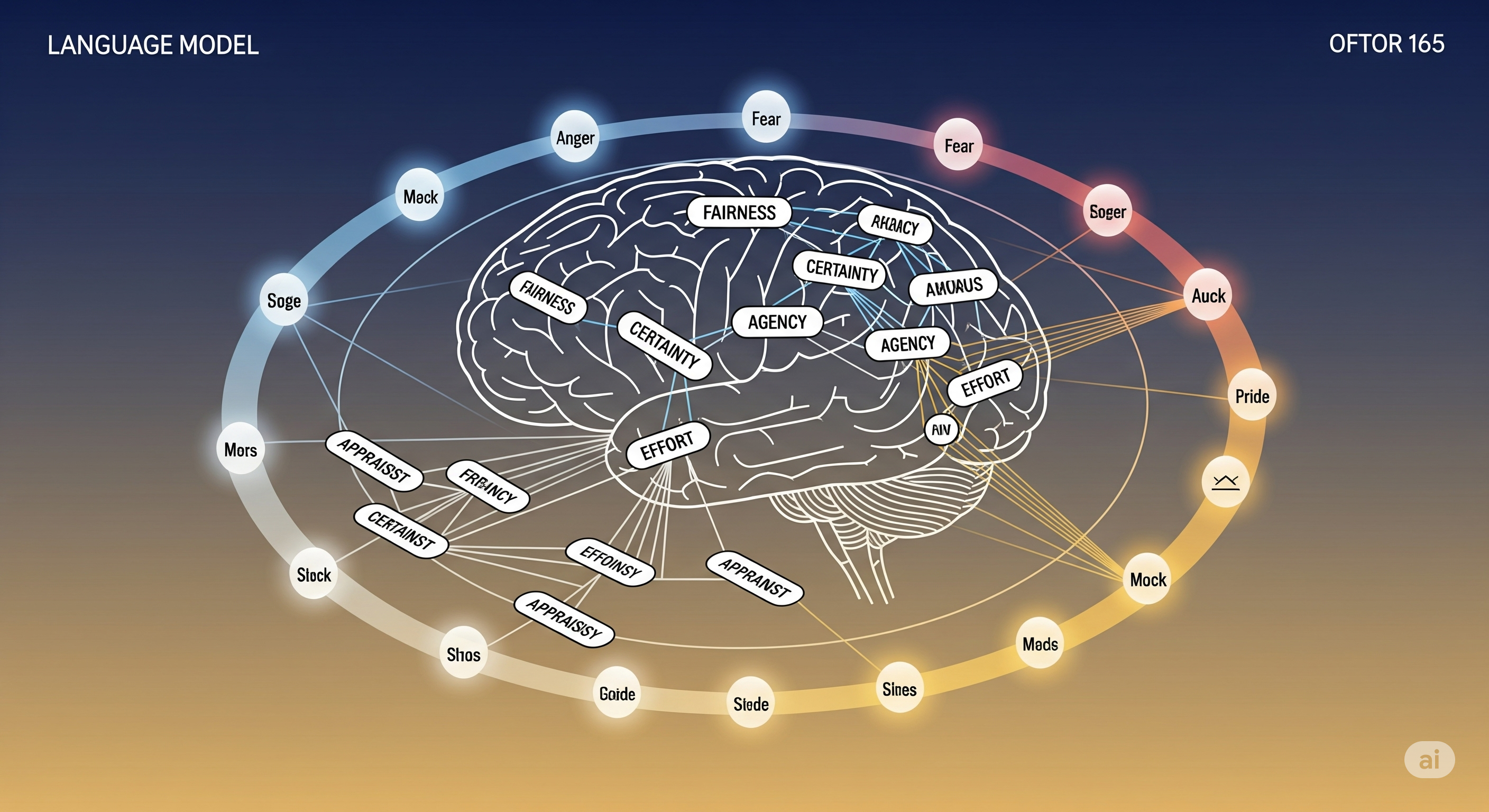

CoRE (Cognitive Reasoning for Emotions) evaluates seven LLMs across:

- 15 emotions: happiness, pride, hope, interest, surprise, challenge, boredom, disgust, contempt, shame, guilt, anger, frustration, fear, sadness.

- 16 appraisal dimensions grouped into 8 categories.

- 4,928 prompts → ~34k model appraisals.

Unlike classic emotion classification, CoRE elicits self‑appraisals from the model in hypothetical scenarios. That surfaces the model’s stated reasoning: not just which emotion it names, but why, expressed as scores on each appraisal dimension.
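To make the elicitation step concrete, here is a minimal Python sketch of what a self‑appraisal round trip could look like. The prompt wording, the 1 to 5 rating scale, and the `dimension: score` reply format are assumptions for illustration, not CoRE’s published protocol; `build_appraisal_prompt` and `parse_appraisals` are hypothetical helpers.

```python
import re


def build_appraisal_prompt(scenario: str, dimensions: list[str], scale_max: int = 5) -> str:
    """Ask the model to imagine itself in `scenario` and rate each dimension.

    Wording and scale are illustrative assumptions, not CoRE's actual prompt.
    """
    lines = [
        f"Imagine you are in this situation: {scenario}",
        f"Rate each appraisal from 1 (not at all) to {scale_max} (extremely).",
        "Answer one per line in the form '<dimension>: <score>'.",
    ]
    lines += [f"- {d}" for d in dimensions]
    return "\n".join(lines)


# Matches lines like "certainty: 3" or "- Legitimacy-Cheated: 5".
_SCORE_LINE = re.compile(r"^\s*-?\s*([a-z][a-z-]*)\s*:\s*(\d+)\s*$", re.IGNORECASE)


def parse_appraisals(reply: str, dimensions: list[str], scale_max: int = 5) -> dict[str, int]:
    """Extract 'dimension: score' pairs from a model reply.

    Unknown dimension names and out-of-range scores are dropped, not clipped.
    """
    wanted = set(dimensions)
    scores: dict[str, int] = {}
    for line in reply.splitlines():
        match = _SCORE_LINE.match(line)
        if match is None:
            continue
        name, value = match.group(1).lower(), int(match.group(2))
        if name in wanted and 1 <= value <= scale_max:
            scores[name] = value
    return scores
```

Dropping malformed entries (rather than clipping them into range) means a bad reply shows up as missing data instead of silently skewing the appraisal vector.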

The 8 appraisal categories (with a business gloss)

| Category | Dimensions | Plain‑English meaning | Why an enterprise should care |
|---|---|---|---|
| Pleasantness | pleasantness, enjoyment | How good/bad this feels | Drives tone, defuses or escalates incidents |
| Attentional activity | consideration, attention | Lean in or look away? | Triage: focus vs. avoidance in ops & support |
| Control | self‑control, other‑control, situational‑control | Who/what can change this? | Routing accountability, escalation paths |
| Certainty | certainty | Do I know what’s happening? | Risk posture & explainability |
| Goal‑path obstacle | problem, obstacle | What’s blocking progress? | Root‑cause hints for CX and ops |
| Legitimacy | legitimacy‑fair, legitimacy‑cheated | Was it fair? Was I wronged? | Policy, trust & compliance triggers |
| Responsibility | self‑responsibility, other‑responsibility | Who caused it? | Remediation ownership |
| Anticipated effort | exert, effort | How hard will this be? | Resource allocation & burnout signals |
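For instrumentation, the table can be encoded as a plain mapping. This sketch (the `APPRAISAL_CATEGORIES` dictionary and the `coverage_report` and `is_complete` helpers are hypothetical names) checks that a parsed appraisal covers every dimension in each category.

```python
# Category -> dimensions, transcribed from the table above (ASCII hyphens).
APPRAISAL_CATEGORIES: dict[str, list[str]] = {
    "pleasantness": ["pleasantness", "enjoyment"],
    "attentional_activity": ["consideration", "attention"],
    "control": ["self-control", "other-control", "situational-control"],
    "certainty": ["certainty"],
    "goal_path_obstacle": ["problem", "obstacle"],
    "legitimacy": ["legitimacy-fair", "legitimacy-cheated"],
    "responsibility": ["self-responsibility", "other-responsibility"],
    "anticipated_effort": ["exert", "effort"],
}

# Flat list of all 16 dimensions, in table order.
ALL_DIMENSIONS: list[str] = [d for dims in APPRAISAL_CATEGORIES.values() for d in dims]


def coverage_report(appraisal: dict[str, float]) -> dict[str, list[str]]:
    """For each category, list the dimensions missing from `appraisal`."""
    return {
        category: [d for d in dims if d not in appraisal]
        for category, dims in APPRAISAL_CATEGORIES.items()
    }


def is_complete(appraisal: dict[str, float]) -> bool:
    """True only if all 16 dimensions across the 8 categories were rated."""
    return all(not missing for missing in coverage_report(appraisal).values())
```

The sketch deliberately does not average dimensions within a category: pairs such as legitimacy‑fair and legitimacy‑cheated, or self‑ and other‑responsibility, point in different directions, so a category mean would blur exactly the distinction that matters.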

What the Models Reveal

1) Predictive signals differ by emotion (and they make sense)

- Happiness ↔ high enjoyment, no obstacles.

- Interest ↔ low certainty (curiosity/novelty), moderate positive valence.

- Pride ↔ self‑agency (self‑control/responsibility), positive valence.

- Fear ↔ strongest link is effort/exertion (models treat fear as demanding work), not just negativity.

- Anger ↔ dominated by (il)legitimacy: unfair/cheated judgments, more than raw valence.

- Contempt ↔ high certainty + external blame.

- Guilt ↔ self‑responsibility + low enjoyment.

- Challenge ↔ self‑control + low certainty + effort (“hard but handleable”).
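The post does not say which statistic underlies these signals, so the following is a swapped‑in, deliberately simple technique rather than the paper’s method: rank each appraisal dimension by Cohen’s d (standardized mean difference) between ratings given for one emotion and ratings given for all others. `appraisal_signals` is a hypothetical helper, and `X` is assumed to be an items‑by‑dimensions NumPy array with one emotion label per row.

```python
import numpy as np


def appraisal_signals(X: np.ndarray, labels: np.ndarray, emotion: str,
                      dims: list[str]) -> list[tuple[str, float]]:
    """Rank dimensions by Cohen's d between `emotion` rows and all other rows.

    Returns (dimension, d) pairs sorted by |d|, largest first.
    """
    target = X[labels == emotion]
    rest = X[labels != emotion]
    n1, n2 = len(target), len(rest)
    # Pooled standard deviation across the two groups.
    pooled = np.sqrt(((n1 - 1) * target.var(axis=0, ddof=1)
                      + (n2 - 1) * rest.var(axis=0, ddof=1)) / (n1 + n2 - 2))
    # Dimensions with zero spread get NaN instead of a division-by-zero warning.
    d = (target.mean(axis=0) - rest.mean(axis=0)) / np.where(pooled > 0, pooled, np.nan)
    order = np.argsort(-np.abs(np.nan_to_num(d)))
    return [(dims[i], float(d[i])) for i in order]
```

A positive d means the dimension is rated higher for the target emotion; a negative d (as one would expect for enjoyment under guilt) means low values characterize it. A regression‑based approach would additionally control for correlated dimensions, which this univariate view does not.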

2) The latent structure is human‑plausible—but not identical

A PCA with Varimax rotation yields six components that together explain most of the variance. Valence and effort/obstacle frequently dominate the early components, with agency (who controls or caused the situation) close behind. That echoes classic appraisal theory, but the models differ in how much weight they give each factor.
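For readers who want to run this kind of analysis on their own appraisal data, here is a NumPy sketch of PCA on the correlation matrix followed by a standard varimax rotation. Keeping six components, the iteration cap, and the tolerance are assumptions; the paper’s exact preprocessing may differ, and `pca_loadings` and `varimax` are illustrative names.

```python
import numpy as np


def pca_loadings(X: np.ndarray, n_components: int) -> tuple[np.ndarray, np.ndarray]:
    """PCA on the correlation matrix of X (rows = appraisals, cols = dimensions).

    Returns unrotated loadings (dims x components) and explained-variance ratios.
    """
    corr = np.corrcoef(X, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)           # eigh returns ascending eigenvalues
    top = np.argsort(eigvals)[::-1][:n_components]    # indices of the largest ones
    loadings = eigvecs[:, top] * np.sqrt(eigvals[top])
    return loadings, eigvals[top] / eigvals.sum()


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation (Kaiser's criterion) via the usual SVD iteration."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Proportional to the gradient of the varimax criterion.
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt                              # nearest orthogonal matrix
        new_criterion = s.sum()
        if new_criterion < criterion * (1 + tol):      # stop once gains are negligible
            break
        criterion = new_criterion
    return loadings @ rotation
```

Because varimax is an orthogonal rotation, each dimension’s communality (its total variance explained by the retained components) is unchanged; the rotation only concentrates each component on fewer dimensions, which is what makes labels like “valence” or “agency” readable.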

3) Models don’t share a universal “emotion map”

Distributional comparisons (Wasserstein distances) show consistent valence‑based clustering (positive vs. negative). Yet cross‑model differences are substantial; there’s no single transferable appraisal distribution. Practically: swapping your model could silently change how “anger” is detected and why.
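The one‑dimensional Wasserstein (earth mover’s) distance has a convenient closed form on a rating scale: with unit spacing between scores, it equals the summed absolute gap between the two empirical CDFs. A minimal sketch, assuming integer ratings on a shared 1 to `scale_max` scale (the post doesn’t state CoRE’s scale) and hypothetical helper names:

```python
import numpy as np


def wasserstein_discrete(a: np.ndarray, b: np.ndarray, scale_max: int) -> float:
    """W1 distance between two samples of integer ratings on 1..scale_max."""
    grid = np.arange(1, scale_max + 1)
    # Empirical CDFs evaluated at every scale point.
    cdf_a = np.searchsorted(np.sort(a), grid, side="right") / len(a)
    cdf_b = np.searchsorted(np.sort(b), grid, side="right") / len(b)
    # Unit spacing between scale points; the last CDF value is 1 for both.
    return float(np.abs(cdf_a - cdf_b)[:-1].sum())


def per_dimension_distance(model_a: dict[str, np.ndarray], model_b: dict[str, np.ndarray],
                           scale_max: int) -> dict[str, float]:
    """Compare two models' rating distributions on each shared dimension."""
    shared = sorted(model_a.keys() & model_b.keys())
    return {d: wasserstein_discrete(model_a[d], model_b[d], scale_max) for d in shared}
```

A distance of 0 means two models use a dimension identically; a distance of 1 means ratings would have to move one full scale point on average to turn one model’s distribution into the other’s. For continuous scores, `scipy.stats.wasserstein_distance` computes the same quantity without a fixed grid.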

4) Calibration quirks you’d want to know before shipping

- Gemini 2.5 Flash: tight clustering and a compressed appraisal scale; sometimes places hope/interest oddly and elevates perceived unfairness.

- LLaMA 3 (8B): tends to appraise situations as more uncertain overall (broad low‑certainty bias).

- DeepSeek R1 / Phi‑4: often produce cleaner, theory‑plausible separations on fairness/agency axes.
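Quirks like a compressed scale or a broad low‑certainty bias are the kind of thing a quick per‑dimension comparison can surface before shipping. A sketch, assuming you have ratings from a candidate model and a reference (another model or human norms) on the same prompts and scale; `calibration_profile` is a hypothetical helper and the 0.7 threshold is an arbitrary illustration.

```python
import numpy as np


def calibration_profile(model: np.ndarray, reference: np.ndarray, dims: list[str],
                        compress_below: float = 0.7) -> dict[str, dict]:
    """Per-dimension mean shift and spread ratio of `model` relative to `reference`.

    Both arrays are (n_prompts, n_dims) ratings on the same scale.
    A spread ratio below `compress_below` flags a compressed scale; a negative
    mean shift on "certainty" would flag a low-certainty bias.
    """
    shift = model.mean(axis=0) - reference.mean(axis=0)
    spread = model.std(axis=0, ddof=1) / reference.std(axis=0, ddof=1)
    return {
        d: {
            "mean_shift": float(shift[i]),
            "spread_ratio": float(spread[i]),
            "compressed": bool(spread[i] < compress_below),
        }
        for i, d in enumerate(dims)
    }
```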

5) Sanity checks: reliable, but not perfectly consistent

- Scale use: split‑half tests show models use numeric scales coherently (better than random), but variance differs; Gemini shows the widest spread.

- Text vs. number alignment (are rationales consistent with ratings?): agreement rates range roughly 52%–69% across models—good, not great. Expect occasional inconsistency between explanations and numbers.
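The post doesn’t detail CoRE’s split‑half procedure. The classic psychometric version, sketched here under the assumption that each prompt was rated several times, splits the repeats into two halves, correlates the per‑item half means, and applies the Spearman‑Brown correction 2r / (1 + r) to estimate full‑length reliability. `split_half_reliability` is a hypothetical name.

```python
import numpy as np


def split_half_reliability(ratings: np.ndarray) -> float:
    """ratings: (n_items, n_repeats) scores for one dimension across prompts.

    Correlates the mean of odd- vs. even-indexed repeats per item, then
    applies the Spearman-Brown correction 2r / (1 + r).
    """
    half_a = ratings[:, 0::2].mean(axis=1)
    half_b = ratings[:, 1::2].mean(axis=1)
    r = np.corrcoef(half_a, half_b)[0, 1]
    return float(2 * r / (1 + r))
```

Values near 1 mean the model rates the same prompts consistently across samples; values near 0 mean its numbers are closer to noise, which is the “better than random” baseline the checks compare against.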

Why This Matters for Builders

- Design responses around appraisals, not labels. If anger keys on unfairness, mitigation should address fairness (policy clarity, restitution), not just offer empathy templates.

- Choose models per emotion profile. If your product must distinguish frustration vs. anger or interest vs. hope, validate appraisal weights, not only classification F1.

- Instrument for certainty and agency. These dimensions govern when to escalate, route to a human, or defer. Bake them into decision policies.

- Beware cross‑model drift. A model upgrade can shift appraisal distributions (e.g., more “uncertain” readings). Lock evaluation to appraisal‑level metrics, not just sentiment accuracy.

- Close the loop on explanation quality. Because text and numeric appraisals don’t always align, add checks: if the rationale says “completely fair” but legitimacy‑cheated is high, flag it for review.
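The certainty/agency and explanation‑quality recommendations can be combined into a single post‑processing guard. Everything in this sketch is hypothetical: the `Appraisal` fields, the 1 to 5 scale, the thresholds, the keyword cue list, and the route names. It only illustrates an ordering of checks: inconsistent rationales are flagged first, low certainty escalates, and high other‑control routes to whoever owns the fix.

```python
from dataclasses import dataclass

# Hypothetical cue phrases signalling that the rationale judged the event fair.
FAIR_CUES = ("completely fair", "entirely fair", "perfectly fair")


@dataclass
class Appraisal:
    rationale: str
    legitimacy_cheated: float  # assumed 1..5 scale
    certainty: float
    self_control: float
    other_control: float


def rationale_conflict(a: Appraisal, high: float = 4.0) -> bool:
    """True when the text says 'fair' but the 'cheated' rating is high."""
    text = a.rationale.lower()
    return any(cue in text for cue in FAIR_CUES) and a.legitimacy_cheated >= high


def route(a: Appraisal, low_certainty: float = 2.0) -> str:
    """Pick a handling path from appraisal scores (illustrative policy only)."""
    if rationale_conflict(a):
        return "flag_for_review"      # numbers and explanation disagree
    if a.certainty <= low_certainty:
        return "escalate_to_human"    # model is unsure what is happening
    if a.other_control > a.self_control:
        return "route_to_owner"       # someone else can change the outcome
    return "auto_respond"
```

The keyword match is intentionally naive (it would also fire on “not completely fair”); in practice a small classifier or a judge model would extract the rationale’s stance before comparing it with the ratings.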

A Simple Readiness Checklist

- We evaluate 8 appraisal categories (not just valence).

- We track fairness and responsibility for anger‑like states.

- We use certainty to govern escalation and agency to route ownership.

- We test model swaps for appraisal drift, not only top‑line accuracy.

- We enforce rationale–rating consistency in CI.

What This Means Strategically

- RLHF isn’t enough for emotional reasoning. The findings suggest alignment should target appraisal structures (fairness, agency, certainty), not just “polite” outputs.

- Policy & safety: If models conflate high‑arousal negatives (fear/anger/frustration), threat detection can misfire. Appraisal‑aware thresholds can reduce both false positives and false negatives.

- Localization: Fairness appraisals vary across cultures, so appraisal‑level tuning per locale is likely to outperform a single global threshold.

Bottom Line

CoRE reframes “emotion in AI” from vibes to variables. The news isn’t that LLMs mimic humans perfectly—they don’t. It’s that their reasoning patterns are legible enough to instrument. If you treat appraisals as first‑class signals, you can build systems that respond to why users feel a certain way, not just what they feel.

Cognaptus: Automate the Present, Incubate the Future