In data-scarce domains, the bottleneck isn’t computing power — it’s labeled data. Semi-supervised learning (SSL) thrives here, using a small set of labeled points to guide a vast sea of unlabeled ones. But what happens when we bring quantum mechanics into the loop? This is exactly where Improved Laplacian Quantum Semi-Supervised Learning (ILQSSL) and Improved Poisson Quantum Semi-Supervised Learning (IPQSSL) enter the stage.

From Graphs to Quantum States

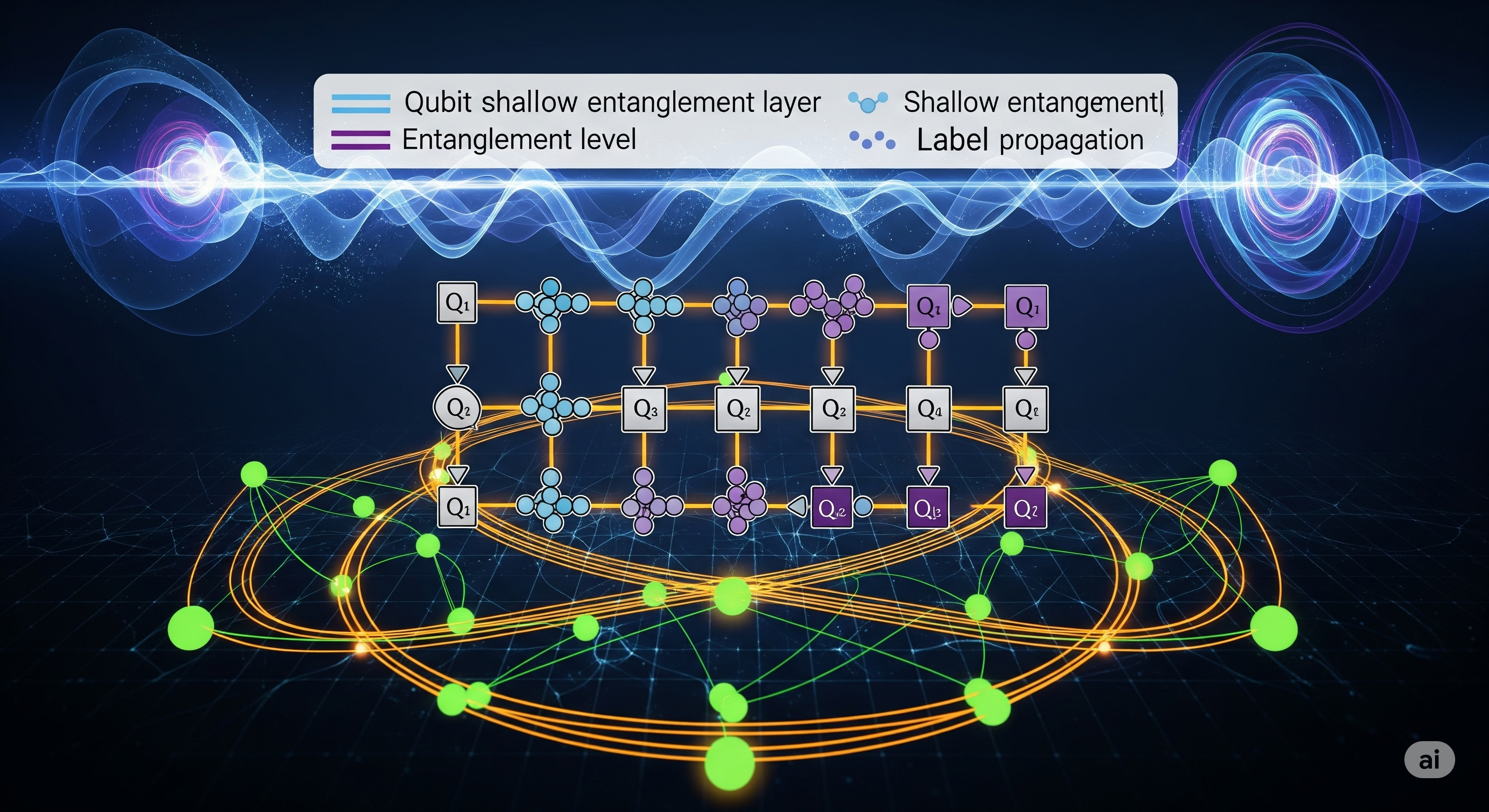

Both ILQSSL and IPQSSL operate in the graph-based SSL paradigm, where data points become nodes and similarity measures define edges. The twist is how they embed this graph structure directly into quantum states using QR decomposition. QR factors a graph-derived matrix into an orthogonal factor Q and an upper-triangular factor R. Because a real orthogonal matrix is also unitary, the orthogonal factor can be mapped into a quantum circuit without violating the unitarity constraints of quantum computing.
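To make the QR step concrete, here is a minimal NumPy sketch. It is not the authors' pipeline: the Gaussian similarity kernel, the unnormalized Laplacian, the toy points, and the helper names `rbf_similarity` and `graph_laplacian` are all assumptions chosen for illustration. It only shows that the Q factor of a graph-derived matrix passes the unitarity check a quantum gate needs.

```python
import numpy as np

def rbf_similarity(X, sigma=1.0):
    """Dense Gaussian-kernel similarity matrix with a zero diagonal (assumed kernel)."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    return W

def graph_laplacian(W):
    """Unnormalized graph Laplacian L = D - W (one common choice, assumed here)."""
    return np.diag(W.sum(axis=1)) - W

# Four hypothetical points; a 4x4 matrix matches the dimension of a 2-qubit system.
X = np.array([[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [1.1, 1.0]])
W = rbf_similarity(X, sigma=0.5)
L = graph_laplacian(W)

# QR decomposition: L = Q @ R, with Q orthogonal and R upper triangular.
Q, R = np.linalg.qr(L)

is_orthogonal = np.allclose(Q.T @ Q, np.eye(4))  # real orthogonal implies unitary
reconstructs = np.allclose(Q @ R, L)

print("Q is orthogonal:", is_orthogonal)
print("Q @ R recovers L:", reconstructs)
```

How Q is then compiled into gates is hardware- and framework-specific, and this sketch does not cover it.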

This embedding allows quantum circuits to perform label propagation, diffusing known labels through the graph while using superposition and entanglement to represent more expressive decision functions than classical propagation methods.
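For readers who want the classical picture first, label propagation can be sketched in a few lines. This is the standard harmonic-function formulation, not the quantum variant described in this post. The toy data, the kernel width, and the function name `harmonic_label_propagation` are assumptions.

```python
import numpy as np

def rbf_similarity(X, sigma=0.5):
    """Gaussian-kernel similarity with a zero diagonal (assumed kernel)."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    return W

def harmonic_label_propagation(W, labeled_idx, labels, n_classes):
    """Classical Laplacian label propagation: solve L_uu F_u = W_ul Y_l."""
    n = W.shape[0]
    labeled_idx = np.asarray(labeled_idx)
    labels = np.asarray(labels)
    unlabeled_idx = np.setdiff1d(np.arange(n), labeled_idx)

    L = np.diag(W.sum(axis=1)) - W           # unnormalized Laplacian
    Y_l = np.eye(n_classes)[labels]          # one-hot rows for the labeled nodes
    L_uu = L[np.ix_(unlabeled_idx, unlabeled_idx)]
    W_ul = W[np.ix_(unlabeled_idx, labeled_idx)]

    # Each unlabeled score becomes the weighted average of its neighbors' scores.
    F_u = np.linalg.solve(L_uu, W_ul @ Y_l)

    pred = np.empty(n, dtype=int)
    pred[labeled_idx] = labels
    pred[unlabeled_idx] = F_u.argmax(axis=1)
    return pred

# Two hypothetical clusters of three points, with one labeled point in each.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [1.0, 1.0], [1.1, 1.0], [1.0, 1.1]])
W = rbf_similarity(X)
pred = harmonic_label_propagation(W, labeled_idx=[0, 3], labels=[0, 1], n_classes=2)
print(pred)
```

With only two labels, every point inherits the label of the labeled point in its own cluster, which is the diffusion behavior the paragraph above describes.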

The Two Quantum Engines

- ILQSSL refines the Laplacian-based label propagation process through targeted hyperparameter tuning, balancing moderate entanglement with circuit depth to avoid noise degradation.

- IPQSSL improves Poisson learning by stabilizing its iterative updates with regularization terms, ensuring faster convergence and better adaptation to class imbalance.

Both integrate into variational quantum circuits, where tunable parameters are optimized to minimize classification error.
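As a reference point for the IPQSSL bullet, the classical Poisson learning iteration it is described as stabilizing can be sketched as follows. This is the plain, unregularized baseline only. The regularization terms used in IPQSSL are not specified in this post, so they are deliberately left out. The toy data, kernel width, and iteration count are assumptions.

```python
import numpy as np

def rbf_similarity(X, sigma=0.5):
    """Gaussian-kernel similarity with a zero diagonal (assumed kernel)."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    return W

def poisson_learning(W, labeled_idx, labels, n_classes, n_iter=200):
    """Classical Poisson learning: iterate U <- U + D^{-1} (B - L U)."""
    n = W.shape[0]
    labeled_idx = np.asarray(labeled_idx)
    labels = np.asarray(labels)
    degrees = W.sum(axis=1)
    L = np.diag(degrees) - W

    # Source term: centered one-hot labels at labeled nodes, zero elsewhere.
    Y_l = np.eye(n_classes)[labels]
    B = np.zeros((n, n_classes))
    B[labeled_idx] = Y_l - Y_l.mean(axis=0)

    U = np.zeros((n, n_classes))
    for _ in range(n_iter):
        U = U + (B - L @ U) / degrees[:, None]
    return U.argmax(axis=1)

# Two hypothetical clusters of three points, with one labeled point in each.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [1.0, 1.0], [1.1, 1.0], [1.0, 1.1]])
W = rbf_similarity(X)
pred = poisson_learning(W, labeled_idx=[0, 3], labels=[0, 1], n_classes=2)
print(pred)
```

Centering the labels around their mean is what lets the method work with very few labels per class. A regularized or quantum variant would change the update rule inside the loop.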

Outperforming the Classical Benchmarks

Across four benchmarks (Iris, Wine, Heart Disease, and German Credit), the reported IPQSSL accuracy exceeds the best classical baseline in every case:

| Dataset | Best Classical Accuracy | IPQSSL Accuracy | Gain (percentage points) |
|---|---|---|---|
| Iris | 91% | 97% | +6 |
| Wine | 72% | 94% | +22 |
| Heart Disease | 53% | 83% | +30 |
| German Credit | 71% | 77% | +6 |

On structured datasets like Iris and Wine, the gains (6 and 22 percentage points) suggest that the quantum models can exploit well-defined feature boundaries. On a complex, noisy dataset like Heart Disease, the margin is the largest of the four at 30 points, pointing to resilience where classical methods falter. German Credit, also noisy, shows a more modest 6-point gain.
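The gains are differences in percentage points, not relative improvements, and the two views rank the datasets slightly differently. A short calculation makes the distinction explicit. It uses only the accuracies from the table above, and the variable names are illustrative.

```python
# Accuracies in percent, copied from the table: (best classical, IPQSSL).
results = {
    "Iris": (91, 97),
    "Wine": (72, 94),
    "Heart Disease": (53, 83),
    "German Credit": (71, 77),
}

# Absolute gain in percentage points.
gain_pp = {name: q - c for name, (c, q) in results.items()}

# Relative gain as a percentage of the classical accuracy.
gain_rel = {name: 100.0 * (q - c) / c for name, (c, q) in results.items()}

for name in results:
    print(f"{name}: +{gain_pp[name]} pp, +{gain_rel[name]:.1f}% relative")
```

Iris and German Credit tie at 6 points, but the relative gain is larger on German Credit because its classical baseline is lower.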

The Circuit Design Trade-off

The authors didn’t stop at accuracy metrics. They examined entanglement entropy and Randomized Benchmarking (RB) across different circuit depths and qubit counts:

- More qubits → Higher entanglement (more expressive power) but increased susceptibility to noise.

- More layers → Gradual fidelity loss without consistent accuracy gains, highlighting the noise–expressivity trade-off in NISQ-era hardware.

- Optimal designs leaned toward shallow-to-moderate depths and moderate qubit counts, tailored to dataset complexity.
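Both diagnostics can be illustrated in a few lines of NumPy. This is a sketch of the standard definitions, not the authors' measurement code. The entropy is the von Neumann entropy of a reduced state, and the fidelity curve uses the usual randomized-benchmarking decay model A·p^m + B. The decay parameter `p` and the constants `A` and `B` are hypothetical values, not numbers from the study.

```python
import numpy as np

def entanglement_entropy(state, n_qubits, n_left):
    """Von Neumann entropy (in bits) of the first n_left qubits of a pure state."""
    psi = np.asarray(state, dtype=complex).reshape(
        2 ** n_left, 2 ** (n_qubits - n_left)
    )
    # Singular values of the reshaped state are its Schmidt coefficients.
    schmidt = np.linalg.svd(psi, compute_uv=False)
    probs = schmidt ** 2
    probs = probs[probs > 1e-12]  # drop zeros so log2 stays finite
    return float(-np.sum(probs * np.log2(probs)))

def rb_survival(m, p, A=0.5, B=0.5):
    """Standard RB decay model: survival probability after m steps (assumed constants)."""
    return A * p ** m + B

product_state = np.array([1.0, 0.0, 0.0, 0.0])            # |00>, no entanglement
bell_state = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)  # (|00> + |11>) / sqrt(2)

s_product = entanglement_entropy(product_state, n_qubits=2, n_left=1)
s_bell = entanglement_entropy(bell_state, n_qubits=2, n_left=1)
print("product-state entropy:", s_product)
print("Bell-state entropy:", s_bell)

# Hypothetical decay parameter p = 0.98: survival drops as depth m grows.
survival = [rb_survival(m, p=0.98) for m in (0, 10, 50)]
print("survival at depths 0, 10, 50:", survival)
```

The entropy runs from 0 bits for a product state to 1 bit for a maximally entangled pair, while the survival probability shrinks geometrically with depth. Together they give a rough picture of the noise-expressivity trade-off listed above.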

Business and Research Implications

For industries constrained by label availability — from clinical diagnostics to fraud detection — these findings signal a future where quantum-enhanced SSL can unlock more value from the same data. But they also serve as a warning: indiscriminately scaling quantum circuits can erode performance.

The practical takeaway? Balance is everything — in circuit design, in hyperparameter tuning, and in deciding when to go quantum. As hardware matures, combining these quantum models with error mitigation and adaptive encoding could push their edge even further.

Cognaptus: Automate the Present, Incubate the Future