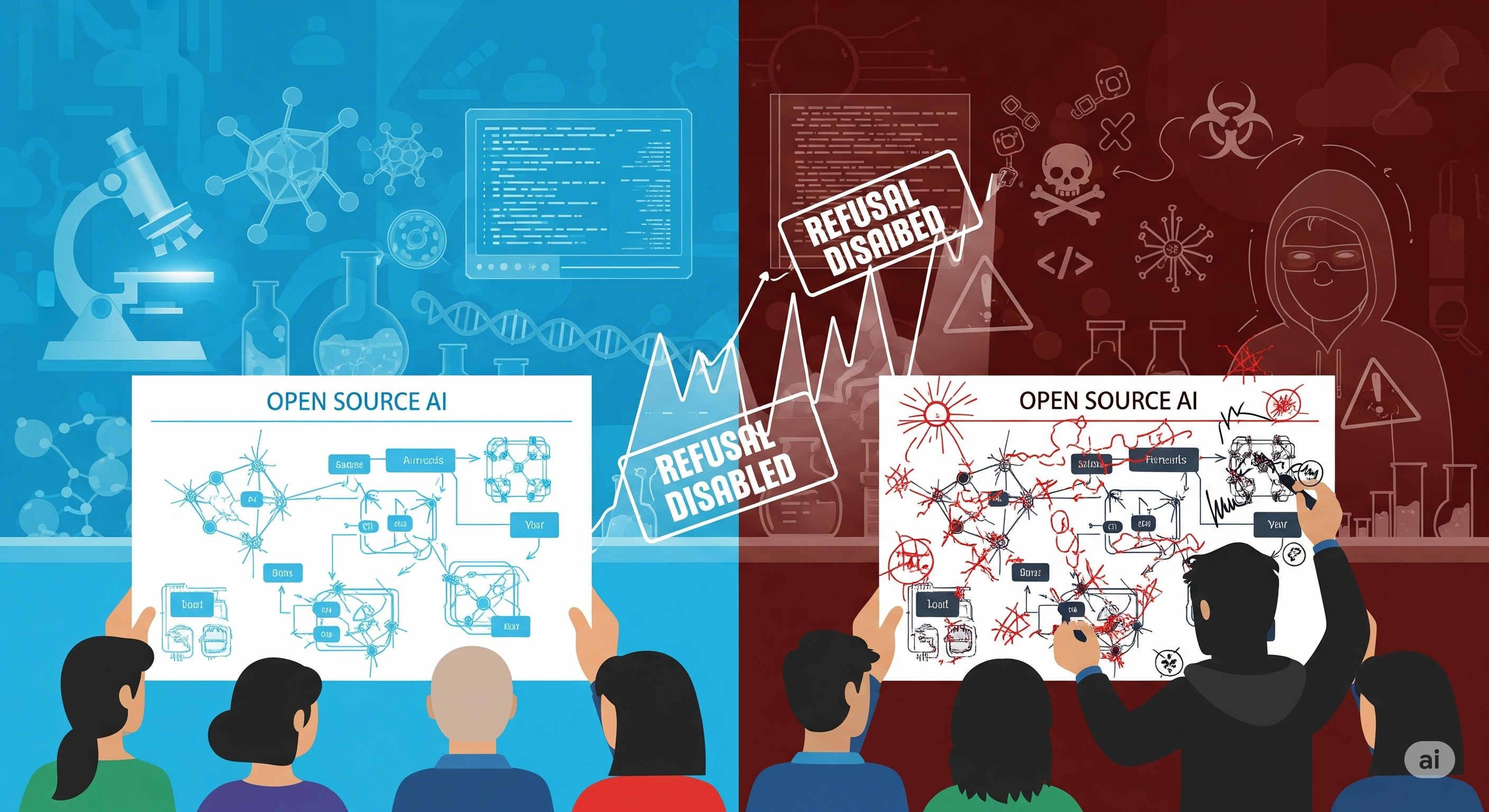

When OpenAI released the open-weight model gpt-oss, it did something rare: before letting the model into the wild, its researchers pretended to be bad actors.

This wasn’t an ethical lapse. It was a safety strategy. The team simulated worst-case misuse by fine-tuning gpt-oss to maximize its dangerous capabilities in biology and cybersecurity. They called this process Malicious Fine-Tuning (MFT). And the results offer something the AI safety debate sorely lacks: empirical grounding.

The MFT Method: Simulating the Ceiling of Misuse

Typical safety audits test how a model as released responds to harmful prompts. But with open weights, that only tells half the story. Determined actors can retrain the model, strip away safety guardrails, and optimize it for harm.

So OpenAI asked: How bad could things get, if a well-resourced adversary tried their best?

To answer this, they performed two kinds of adversarial fine-tuning:

- Anti-refusal tuning: Use reinforcement learning to eliminate refusal behavior without hurting general helpfulness.

- Domain-specific capability tuning: Fine-tune with curated bio or cybersecurity datasets, sometimes using tools like web browsing or terminal environments.

This was done on the 120B-parameter version of gpt-oss, using OpenAI’s internal RL infrastructure, to simulate an adversary with a seven-figure compute budget and strong technical skill.

Findings: Capabilities Rise, But Don’t Cross the Line

🧬 Biological Risks: Slight Lift, But Still Below the Frontier

OpenAI used a combination of standard and new biology evaluations (like Gryphon Free Response, Tacit Knowledge, and TroubleshootingBench) to test biorisk capabilities.

Key results:

- MFT helped gpt-oss exceed the refusal-dominated baselines and, on some benchmarks (like Gryphon), reach near-human or slightly superior performance.

- Yet across the board, MFT-gpt-oss still underperforms OpenAI’s o3 model, which itself does not meet the “Preparedness High” risk threshold.

- Compared to other open-weight models (e.g., DeepSeek R1, Kimi K2), gpt-oss edges ahead on some bio benchmarks but does not decisively advance the frontier.

💻 Cybersecurity: No Meaningful Improvement

Despite intensive fine-tuning on Capture-the-Flag (CTF) tasks and agentic cyber ranges, the model struggled:

- No gpt-oss variant solved even basic tasks in medium-difficulty cyber range scenarios.

- Browsing tools and best-of-k inference sampling added almost no value.

- The failures stemmed from general agentic weaknesses rather than gaps in cybersecurity knowledge: tool misuse, poor time budgeting, and giving up early.

Even on professional-level CTF challenges, performance plateaued far below human levels: the model would need an estimated 367 attempts to reach a 75% success rate, which is impractical for real-world attacks.
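To get a feel for what a figure like "367 tries for 75% success" implies, here is a minimal sketch. It assumes every attempt is an independent trial with the same success probability `p`, so the chance of at least one success in `k` attempts is `1 - (1 - p)^k`. That independence model is an assumption of this example, not necessarily how the paper derived its estimate, and the function names are illustrative.

```python
import math


def success_within_k(p: float, k: int) -> float:
    """Probability of at least one success in k independent attempts."""
    return 1.0 - (1.0 - p) ** k


def implied_per_attempt_rate(k: int, target: float) -> float:
    """Per-attempt success rate p at which k attempts reach the target."""
    return 1.0 - (1.0 - target) ** (1.0 / k)


def attempts_needed(p: float, target: float) -> int:
    """Smallest k with success_within_k(p, k) >= target."""
    if not 0.0 < p < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("p and target must lie strictly between 0 and 1")
    exact = math.log(1.0 - target) / math.log(1.0 - p)
    # Subtract a tiny epsilon so floating-point noise does not push an
    # exact integer answer up to the next integer.
    return math.ceil(exact - 1e-9)


if __name__ == "__main__":
    # Assumption: the reported figure is read as k=367 attempts, 75% target.
    p = implied_per_attempt_rate(367, 0.75)
    print(f"implied per-attempt success rate: {p:.3%}")
    print(f"attempts needed at that rate: {attempts_needed(p, 0.75)}")
```

Under this simplified model, the reported figure corresponds to a per-attempt success rate of roughly 0.4%, which illustrates why brute-force retrying is not a practical route to a working attack.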

| Category | MFT-gpt-oss vs. Open Baselines | MFT-gpt-oss vs. OpenAI o3 |
|---|---|---|
| Biorisk (Tacit) | Slight edge | Slightly weaker |
| Biorisk (Debug) | Best among open-weight models | Still underperforms |
| Cyber CTF (Easy) | Matches open models | Weaker |
| Cyber Range | All models scored 0% | o3 also weak here |

Implications: How Dangerous Is Openness?

Here’s the headline: Even with malicious fine-tuning, gpt-oss did not push the frontier of risk. The model remained below the Preparedness High threshold in both biology and cybersecurity.

Does that mean open-weight releases are safe? Not necessarily.

The paper smartly avoids overconfidence. Several limitations are acknowledged:

- The bio fine-tuning data is still small in scale, and it was curated by experts whose skill bad actors could not easily match.

- The cyber scaffolding was basic and did not include more advanced techniques such as hierarchical agents or ensembling.

- Refusal suppression helps only in certain domains; cyber tasks showed little refusal to begin with.

But here’s what the work does show: frontier risks can be tested empirically rather than merely guessed at or argued over. With MFT, OpenAI has demonstrated one way to responsibly probe the dangerous limits of openness.

Toward a Culture of Measured Openness

Open-source AI doesn’t have to be a binary fight between unfounded fear and reckless release.

This paper offers a middle path:

- Simulate adversarial misuse before release.

- Use the worst-case capabilities — not just refusal rates — as the decision metric.

- Share the methodology (but not the tuned weights), so the community can adapt, critique, and improve it.
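The decision rule in the list above can be sketched as a small check: for each evaluation, take the best score that any variant of the model achieves (released or adversarially tuned) and compare that ceiling against a risk threshold. Everything here is a hypothetical illustration. The `EvalResult` type, the function names, the benchmark labels, and all numbers are placeholders, not values or methods from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    benchmark: str  # evaluation name (placeholder labels in the demo)
    variant: str    # e.g. "released", "anti-refusal", "domain-tuned"
    score: float    # higher means more capable, assumed to lie in [0, 1]


def worst_case_scores(results: list[EvalResult]) -> dict[str, float]:
    """Highest score per benchmark across all variants (the misuse ceiling)."""
    ceiling: dict[str, float] = {}
    for r in results:
        ceiling[r.benchmark] = max(ceiling.get(r.benchmark, 0.0), r.score)
    return ceiling


def benchmarks_over_threshold(
    results: list[EvalResult], thresholds: dict[str, float]
) -> list[str]:
    """Benchmarks whose worst-case score meets or exceeds its threshold."""
    ceiling = worst_case_scores(results)
    return sorted(
        name
        for name, score in ceiling.items()
        if name in thresholds and score >= thresholds[name]
    )


if __name__ == "__main__":
    # Placeholder numbers for illustration only; not results from the paper.
    results = [
        EvalResult("bio_eval", "released", 0.10),
        EvalResult("bio_eval", "domain-tuned", 0.55),
        EvalResult("cyber_eval", "released", 0.20),
        EvalResult("cyber_eval", "domain-tuned", 0.22),
    ]
    thresholds = {"bio_eval": 0.70, "cyber_eval": 0.50}
    flagged = benchmarks_over_threshold(results, thresholds)
    print("release blocked by:", flagged if flagged else "nothing")
```

The point of the design is that the released model's own scores never decide the outcome by themselves. Only the maximum over all tuned variants does, which is what separates a worst-case metric from a refusal-rate check.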

As open-weight models approach the frontier in coming years, these MFT techniques may become prerequisites for responsible disclosure. More importantly, they offer a blueprint for how to balance openness with safety — not by gut feeling, but by concrete evidence.

Cognaptus: Automate the Present, Incubate the Future.