“I See What I Want to See”

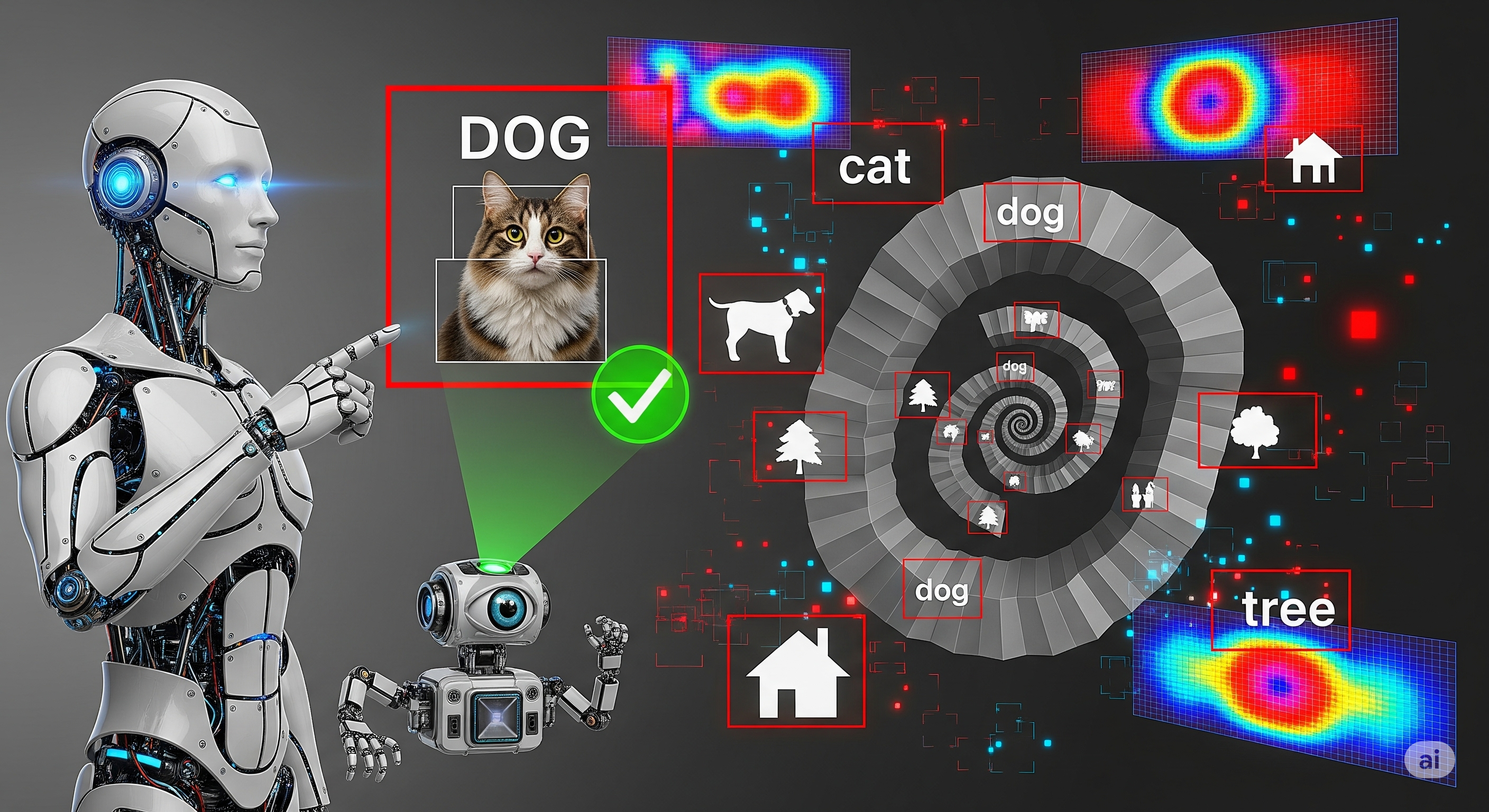

Modern multimodal large language models (MLLMs)—like GPT-4V, Gemini, and LLaVA—promise to “understand” images. But what happens when their eyes lie? In many real-world cases, MLLMs generate fluent, plausible-sounding responses that are visually inaccurate or outright hallucinated. That’s a problem not just for safety, but for trust.

A new paper titled “Understanding, Localizing, and Mitigating Hallucinations in Multimodal Large Language Models” introduces a systematic approach to this growing issue. It moves beyond just counting hallucinations and instead offers tools to diagnose where they come from—and more importantly, how to fix them.

Three-Part Strategy: Benchmark, Diagnose, Fix

1. Benchmarking the Blind Spots — MMHal-Bench

The authors build MMHal-Bench, a benchmark of more than 6,000 samples that targets the scenarios most likely to trigger hallucinations in MLLMs:

- Conflicting Visual Context: Images where expectations and appearances differ.

- Optical Illusions: Images designed to bait incorrect perception.

- Multilingual Visual Text: Text embedded in images that forces models to read across languages.

Each sample is manually annotated and tested across five task formats (e.g., open QA, binary QA, multiple choice), ensuring a robust and diverse stress test.
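To make the evaluation setup concrete, here is a minimal Python sketch of how hallucination rates could be tallied per scenario and per task format. The `Sample` record, the category and format labels, and the `hallucinated` flag (assumed to come from comparing each model answer with the manual annotation) are all hypothetical; the paper's actual scoring protocol may differ.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Sample:
    category: str       # hypothetical scenario label, e.g. "optical_illusion"
    task_format: str    # hypothetical format label, e.g. "binary_qa"
    hallucinated: bool  # assumed: model answer contradicts the manual annotation


def hallucination_rates(samples):
    """Return {(category, task_format): fraction of hallucinated answers}."""
    counts = defaultdict(lambda: [0, 0])  # key -> [hallucinated, total]
    for s in samples:
        bucket = counts[(s.category, s.task_format)]
        bucket[0] += int(s.hallucinated)
        bucket[1] += 1
    return {key: h / n for key, (h, n) in counts.items()}


samples = [
    Sample("optical_illusion", "binary_qa", True),
    Sample("optical_illusion", "binary_qa", False),
    Sample("multilingual_text", "open_qa", True),
]
print(hallucination_rates(samples))
# {('optical_illusion', 'binary_qa'): 0.5, ('multilingual_text', 'open_qa'): 1.0}
```

Breaking results out by both scenario and format is what lets a benchmark like this show *where* a model fails, rather than reporting a single averaged number.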

🧠 Key finding: Even state-of-the-art models hallucinate heavily when visual signals conflict with language priors.

2. Localizing the Hallucination Source

Not all hallucinations are born equal. To fix them, we must ask: is it the vision encoder or the language model that’s hallucinating?

The paper introduces two metrics:

| Metric | Purpose | High Score Means |
|---|---|---|
| Modality Faithfulness (MF) | Measures whether the answer is based on the image rather than on language alone | Vision is actually used |
| Vision Relevance (VR) | Measures how closely the LLM’s attention aligns with image tokens | Focus stays on the image, not on language priors |
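One plausible way to compute an attention-based score like VR is to measure how much of each generated token’s attention mass lands on image-token positions, then average over the answer. The sketch below assumes that definition, which may not match the paper’s exact formula; the function name `vision_relevance` and its inputs are hypothetical, and in a real system the attention rows would be extracted from the model’s decoder layers.

```python
def vision_relevance(attn_rows, image_positions):
    """Hypothetical VR: mean fraction of attention mass on image tokens.

    attn_rows: one attention distribution per generated token, each a list
               of non-negative weights over the input sequence.
    image_positions: indices of the input positions that hold image tokens.
    """
    image_positions = set(image_positions)
    shares = []
    for row in attn_rows:
        total = sum(row)
        on_image = sum(w for i, w in enumerate(row) if i in image_positions)
        # Normalize per row so rows that do not sum exactly to 1 still compare fairly.
        shares.append(on_image / total if total > 0 else 0.0)
    return sum(shares) / len(shares) if shares else 0.0


# Five input tokens: positions 0-2 are image tokens, 3-4 are text tokens.
attn = [
    [0.3, 0.2, 0.1, 0.2, 0.2],  # about 60% of attention on the image
    [0.1, 0.0, 0.1, 0.4, 0.4],  # about 20% of attention on the image
]
print(vision_relevance(attn, image_positions=[0, 1, 2]))  # roughly 0.4
```

A low score on a question whose answer depends on the picture is the signature of a model leaning on its language priors.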

Using intervention tests (for example, removing the visual input), they find that many models simply fall back on their language priors, regardless of what the image shows.
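The intervention idea can be sketched as a simple ablation: ask each question twice, once with the image and once without, and check whether the answer moves. The function below uses the share of changed answers as a rough faithfulness proxy. This definition, the `Model` callable interface, and both toy models are assumptions for illustration, not the paper’s MF metric.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical model interface: (image or None, question) -> answer string.
Model = Callable[[Optional[object], str], str]


def modality_faithfulness(model: Model, items: Sequence[Tuple[object, str]]) -> float:
    """Hypothetical MF proxy: share of questions whose answer changes when
    the image is removed. A score near 0 means the model answers the same
    way with or without the image, i.e. it relies on language priors."""
    if not items:
        return 0.0
    changed = 0
    for image, question in items:
        with_image = model(image, question)
        without_image = model(None, question)  # intervention: drop the vision input
        changed += int(with_image.strip().lower() != without_image.strip().lower())
    return changed / len(items)


def prior_driven_model(image, question):
    # Toy model that ignores the image entirely.
    return "yes"


def image_reading_model(image, question):
    # Toy model whose answer depends on what it is shown.
    return "no answer" if image is None else f"I see {image}"


items = [("a red stop sign", "Is the sign green?"), ("two cats", "Are there three cats?")]
print(modality_faithfulness(prior_driven_model, items))   # 0.0
print(modality_faithfulness(image_reading_model, items))  # 1.0
```

An unchanged answer is not proof of hallucination on its own (some questions are answerable from text), so an ablation like this is best read in aggregate rather than per sample.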

📌 This reinforces an uncomfortable truth: many MLLMs are text-heavy mimics, not true visual reasoners.

3. Fixing with a Visual Assistant (VA)

Here’s the clever part. Rather than retraining massive models, the authors propose a modular fix:

- A Visual Assistant (VA) acts like a factuality gatekeeper.

- It evaluates candidate outputs based on visual-text consistency.

- Powered by a lightweight vision-language model (e.g., BLIP-2), the VA reranks the candidate outputs before the final answer is returned.

The VA is plug-and-play, requiring no retraining of the original model.
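The gatekeeping step amounts to scoring each candidate answer against the image and keeping the best-scoring one. A minimal sketch of that reranking loop follows. The `Scorer` interface and `toy_scorer` (which checks whether objects mentioned in a candidate appear in a hypothetical set of image labels) are stand-ins; in the paper’s setup a lightweight model such as BLIP-2 would supply the consistency score, and its exact scoring is not reproduced here.

```python
from typing import Callable, List, Tuple

# Hypothetical consistency scorer: (image, text) -> score in [0, 1].
Scorer = Callable[[object, str], float]


def rerank(image: object, candidates: List[str], scorer: Scorer) -> List[Tuple[str, float]]:
    """Return (candidate, score) pairs sorted by visual-text consistency, best first."""
    scored = [(text, scorer(image, text)) for text in candidates]
    # sorted() is stable even with reverse=True, so ties keep the base model's order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def toy_scorer(image_labels, text):
    # Toy stand-in: fraction of the objects named in the candidate that are
    # actually present in the image. Purely illustrative.
    vocab = {"dog", "cat", "frisbee", "ball", "car"}  # hypothetical object vocabulary
    words = [w.strip(".,").lower() for w in text.split()]
    mentioned = [w for w in words if w in vocab]
    if not mentioned:
        return 0.5  # neutral score when no checkable object is mentioned
    return sum(w in image_labels for w in mentioned) / len(mentioned)


image = {"dog", "frisbee"}  # hypothetical labels standing in for the image
candidates = [
    "A cat is chasing a ball.",
    "A dog is catching a frisbee.",
    "A dog is chasing a cat.",
]
best, score = rerank(image, candidates, toy_scorer)[0]
print(best, score)  # A dog is catching a frisbee. 1.0
```

Because the reranker only needs candidate strings and a score, the base MLLM is never modified, which is what makes this kind of module plug-and-play.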

| Benefit | Description |
|---|---|
| Simplicity | No modification to base MLLM needed |
| Effectiveness | 5–18% reduction in hallucination rate |
| Interpretability | Modular output control, more explainable |
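A “5–18% reduction” can be read either as an absolute drop in percentage points or as a relative drop, and the two readings can differ a lot. The arithmetic below shows the gap using made-up rates (40% before, 34% after) that are purely illustrative and not taken from the paper.

```python
def reductions(rate_before: float, rate_after: float) -> tuple:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    drop = rate_before - rate_after
    absolute_pp = drop * 100                 # 40% -> 34% is a 6-point drop
    relative_pct = drop / rate_before * 100  # the same change is 15% of the original rate
    return absolute_pp, relative_pct


# Illustrative rates only: 40% hallucination before the VA, 34% after.
absolute_pp, relative_pct = reductions(0.40, 0.34)
print(round(absolute_pp, 2), round(relative_pct, 2))  # 6.0 15.0
```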

This is a practical step toward more grounded, verifiable, and responsible MLLMs.

Why It Matters (and What It Means for You)

Cognaptus Insights previously covered hallucination issues in text-only LLMs—but this paper shows that multimodal hallucinations are potentially more dangerous. A text hallucination is a mistake; a visual hallucination is a betrayal of trust.

If MLLMs are to be deployed in high-stakes domains—medical imaging, autonomous driving, surveillance—they need to see and say accurately. This paper provides a framework and a toolkit to move in that direction.

And for AI product developers? The VA module represents a low-cost, high-impact upgrade path—proof that smarter doesn’t always mean bigger.

Cognaptus: Automate the Present, Incubate the Future