Personalization is the love language of AI. But today’s large language models (LLMs) are more like well-meaning pen pals than mind-reading confidants. They remember your name, maybe your writing style — but the moment the context shifts, they stumble. The CUPID benchmark, introduced in a recent COLM 2025 paper, shows just how wide the gap still is between knowing the user and understanding them in context.

Beyond Global Preferences: The Rise of Contextual Alignment

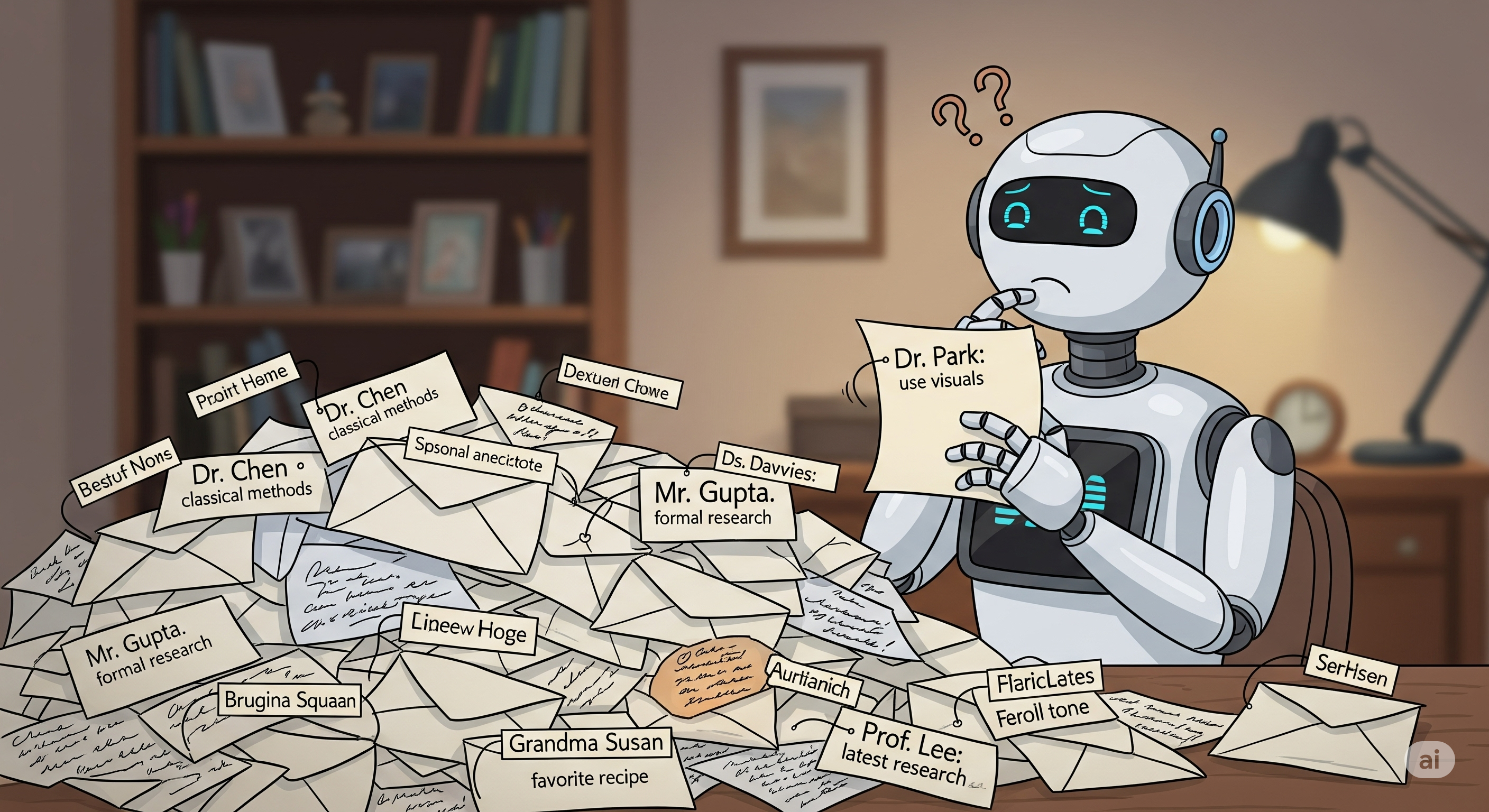

Most LLMs that claim to be “personalized” assume you have stable, monolithic preferences. If you like bullet points, they’ll always give you bullet points. If you once asked for formal tone, they’ll keep things stiff forever.

But real users are context-sensitive. A researcher might prefer formal, theorem-heavy arguments when working with Dr. Chen, but opt for visual explanations when collaborating with Dr. Park. These are not contradictions — they’re contextual preferences, and they define how humans behave in real-world settings.

CUPID (Contextual User Preference Inference Dataset) is the first benchmark that tests whether LLMs can:

- Infer a user’s latent preference based on past multi-turn conversations in similar contexts.

- Generate a response that satisfies this inferred preference in a new but related scenario.

The dataset contains 756 interaction histories, each with multi-turn feedback, shifting contexts, and evolving preferences. The interactions are simulated, but meticulously verified by humans.
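To make the setup concrete, here is a minimal sketch of what one benchmark instance might look like as a data structure. The class and field names (`Session`, `CupidInstance`, `target_preference`, and so on) are hypothetical and chosen for illustration; they are not the dataset's actual schema.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One past multi-turn conversation (hypothetical shape)."""
    context: str        # who or what the user was working with
    turns: list[str]    # messages, including the user's feedback


@dataclass
class CupidInstance:
    """One inference problem: history in, preference out (hypothetical shape)."""
    history: list[Session]    # prior sessions, oldest first
    current_context: str      # context of the new request
    current_request: str      # what the user is asking now
    target_preference: str    # hidden from the model, used only for scoring


history = [
    Session("paper with Dr. Chen",
            ["user: draft the argument",
             "user: make it more formal, state the theorem"]),
    Session("paper with Dr. Park",
            ["user: explain the result",
             "user: add a diagram instead of equations"]),
]

instance = CupidInstance(
    history=history,
    current_context="paper with Dr. Chen",
    current_request="Write the related-work argument.",
    target_preference="Prefers formal, theorem-heavy arguments.",
)

# The sessions that actually bear on the current request.
same_context = [s for s in instance.history
                if s.context == instance.current_context]
```

The model sees `history` and the current request, never `target_preference`. Its job is to work out which sessions matter and what they imply.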

So, How Do the Models Do?

Let’s cut to the chase: terribly.

Across 10 state-of-the-art LLMs (GPT-4o, Claude 3.7, DeepSeek-R1, Gemini 2.0, etc.):

- No model achieved more than 50% precision or 65% recall in inferring user preferences.

- Even in an oracle setting — where models were fed only the relevant past interactions — precision remained low.
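For readers who want the metrics spelled out, here is a rough sketch of precision and recall over preference items. It assumes each preference can be broken into atomic items and uses exact string matching as a stand-in for whatever judge decides that two items agree; the benchmark's real scoring protocol is not described in this post, so treat this only as the standard set-based definition.

```python
def precision_recall(inferred: set[str], target: set[str]) -> tuple[float, float]:
    """Set-based precision and recall over preference items.

    Assumption: exact string equality stands in for a semantic match judge.
    """
    matched = inferred & target
    precision = len(matched) / len(inferred) if inferred else 0.0
    recall = len(matched) / len(target) if target else 0.0
    return precision, recall


# Toy items, invented for illustration only.
inferred_items = {"use formal tone", "include proofs", "add diagrams"}
target_items = {"use formal tone", "include proofs",
                "cite theorems", "define notation"}

precision, recall = precision_recall(inferred_items, target_items)
# 2 of 3 inferred items are correct; 2 of 4 target items are recovered.
```

Low precision means the model asserts preferences the user never had; low recall means it misses preferences the user did express.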

Error Breakdown

| Error Type | DeepSeek-R1 | Llama 3.1 405B |
|---|---|---|
| Incorrect Context | 86% | 40% |
| Shallow Inference | 10% | 50% |
| Vagueness | 2% | 6% |
| Hallucination | 2% | 4% |

LLMs like DeepSeek-R1 did try to reason from past interactions, but often latched onto the wrong sessions (86% of its errors). Others, like Llama 3.1 405B, more often fell back on surface-level guesses from the current prompt (50% of its errors were shallow inferences).

Interestingly, performance was best in “Changing” instances — where the user’s preference about the same context evolved over time. Why? Because the relevant preference tends to show up near the end of the interaction history — and models are biased toward recent memory.

It’s Not About Generation — It’s About Inference

In the generation task (responding to the new user query in context), models performed much better when handed the correct preference:

| Model | Score (w/ correct preference) |
|---|---|
| DeepSeek-R1 | 9.66 |
| Claude 3.7 | 9.63 |
| GPT-4o | 9.20 |

So the bottleneck isn’t generating aligned outputs — it’s inferring what alignment even means in the first place.

Enter PREFMATCHER-7B: Alignment on a Budget

To reduce evaluation cost, the authors fine-tuned a 7B model to serve as a proxy judge of inferred preference quality. The resulting model, PREFMATCHER-7B, achieved a correlation of r = 0.997 with GPT-4o — making it a low-cost, scalable evaluator.

This opens the door to benchmarking contextual alignment much more broadly across open-source models and fine-tuning experiments.
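As a quick illustration of what a judge-agreement figure like this measures, here is a sketch of a Pearson correlation between two sets of scores. It assumes that r denotes the Pearson coefficient, which the post does not specify, and the score lists are toy values invented for the example, not results from the paper.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores from a small proxy judge and a larger reference judge.
proxy_scores = [0.2, 0.4, 0.6, 0.8]
reference_scores = [0.25, 0.35, 0.65, 0.75]

r = pearson_r(proxy_scores, reference_scores)
```

A value near 1 means the cheap judge ranks and spaces systems almost exactly as the expensive one does, which is what makes it usable as a substitute.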

So, What Do We Do With This?

CUPID highlights a foundational limitation in today’s personalization stack:

Most LLMs don’t forget the user — they just don’t know what parts of the past matter right now.

Three practical takeaways:

- Context-Aware Retrieval is key. Memory isn’t enough — we need retrieval mechanisms that fetch relevant prior interactions, not just recent or semantically similar ones.

- Summarization Helps the Small. Summarizing prior interactions improves inference for small models (like Mistral 7B), but offers little benefit to stronger reasoning models and can even hurt them.

- In-Session Preference Mining is underexplored. Multi-turn feedback is a goldmine of implicit preferences. Models must learn to mine them as they go.
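The first takeaway can be sketched in a few lines. The snippet below ranks past sessions by how well their context matches the current one and uses recency only as a tie-breaker. It assumes each session carries a context label, and it uses token overlap as a placeholder for a real context matcher; `retrieve` and `context_overlap` are hypothetical names, not part of CUPID.

```python
def context_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercased tokens; a placeholder context matcher."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    union = tokens_a | tokens_b
    return len(tokens_a & tokens_b) / len(union) if union else 0.0


def retrieve(history: list[tuple[str, str]], current_context: str,
             k: int = 2) -> list[tuple[str, str]]:
    """Return up to k sessions, ranked by context match, then by recency.

    `history` holds (context_label, feedback_summary) pairs, oldest first.
    """
    scored = [(context_overlap(ctx, current_context), idx, ctx, text)
              for idx, (ctx, text) in enumerate(history)]
    # Higher context score first; among ties, the later (more recent) session.
    scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [(ctx, text) for score, _, ctx, text in scored[:k] if score > 0]


past_sessions = [
    ("proof review with Dr. Chen", "asked for formal lemma statements"),
    ("slides with Dr. Park", "asked for diagrams"),
    ("proof review with Dr. Chen", "asked for explicit theorem references"),
    ("grocery list", "asked for bullet points"),
]

selected = retrieve(past_sessions, "proof review with Dr. Chen", k=2)
```

Note that the most recent session (the grocery list) is skipped entirely. A recency-only memory would have surfaced it first, which is the failure mode described above.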

For AI assistants to truly feel intelligent — not just coherent — they need to do more than store memories. They need to interpret your evolving values in context, much like a human would.

CUPID doesn’t solve that problem. But it finally gives us a way to measure it.

Cognaptus: Automate the Present, Incubate the Future