If you think AI models are getting too good at math, you’re not wrong. Benchmarks like GSM8K and MATH have been largely conquered. But when it comes to physics—where reasoning isn’t just about arithmetic, but about assumptions, abstractions, and real-world alignment—the picture is murkier.

A new paper, PhysicsEval: Inference-Time Techniques to Improve the Reasoning Proficiency of Large Language Models on Physics Problems, takes on this gap directly. It introduces PHYSICSEVAL, a benchmark of 19,609 physics problems, and rigorously tests how frontier LLMs fare across topics from thermodynamics to quantum mechanics. Yet the real contribution isn’t just the dataset. It’s the method: multi-agent inference-time critique.

Why Physics Reasoning Is Different

Solving physics problems requires more than equation plugging. It demands:

- Picking the right formula (from many that seem plausible)

- Applying physical intuition to verify units and boundary conditions

- Managing assumptions that may make or break solvability

This moves us out of the territory of GPT-style pattern recognition (System 1 thinking) into the slower, more deliberate System 2 reasoning, as popularized by Kahneman. And here’s where most LLMs stumble.

The Dataset: PHYSICSEVAL

The authors constructed PHYSICSEVAL by scraping real textbook problems and solutions, then refining them with Gemini 2.5 Pro. Each problem includes:

- Difficulty score (1–10)

- Step-by-step solution (avg. 3.9 steps)

- Token length: ~3,800 tokens per solution

- Physics domain tag (19 categories total)
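To make the record format concrete, here is a minimal sketch of how one PHYSICSEVAL entry could be modeled in Python. The class name and field names (`PhysicsProblem`, `problem`, `difficulty`, `domain`, `solution_steps`) are hypothetical, and the sample problem and the "Mechanics" tag are illustrative only; the paper's actual schema may differ. Only the 1 to 10 difficulty range and the existence of a step list and domain tag come from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicsProblem:
    """Hypothetical record layout for one PHYSICSEVAL problem (field names are assumptions)."""

    problem: str   # problem statement taken from the source textbook
    difficulty: int  # difficulty score on the 1-10 scale
    domain: str    # one of the 19 physics domain tags (tag names here are illustrative)
    solution_steps: list[str] = field(default_factory=list)  # step-by-step reference solution

    def __post_init__(self) -> None:
        # Reject scores outside the documented 1-10 range.
        if not 1 <= self.difficulty <= 10:
            raise ValueError(f"difficulty must be in 1..10, got {self.difficulty}")


example = PhysicsProblem(
    problem="A 2 kg block slides down a frictionless 30-degree incline. Find its acceleration.",
    difficulty=2,
    domain="Mechanics",
    solution_steps=[
        "Only gravity acts along the incline, so a = g * sin(theta).",
        "Substitute g = 9.8 m/s^2 and theta = 30 degrees.",
        "a = 9.8 * 0.5 = 4.9 m/s^2.",
    ],
)
print(len(example.solution_steps), example.difficulty)
```

Keeping the solution as a list of steps, rather than one string, is what makes a per-step statistic like the average step count straightforward to compute.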

Compared to benchmarks like PhysReason and UGPhysics, PHYSICSEVAL stands out for its breadth, depth, and difficulty. Here’s a comparison snapshot:

| Benchmark | Size | Levels | Avg. Solution Steps | Notes |
|---|---|---|---|---|
| PhysReason | 1,200 | CEE + Comp | 8.1 | Good step detail |
| UGPhysics | 11,040 | College | 3.1 | Mostly conceptual |
| PHYSICSEVAL | 19,609 | CEE + College + Comp | 3.9 | High difficulty, math-heavy |

The Method: Let Multiple Agents Argue

Rather than fine-tune LLMs, the authors use inference-time techniques to boost accuracy:

1. Baseline

The LLM answers the question directly.

2. Self-Refinement

The LLM rechecks its own solution using a prompt such as “You are a Physics Professor. Check for errors.”

3. Single-Agent Review

A second LLM reviews and gives feedback. The original LLM tries again.

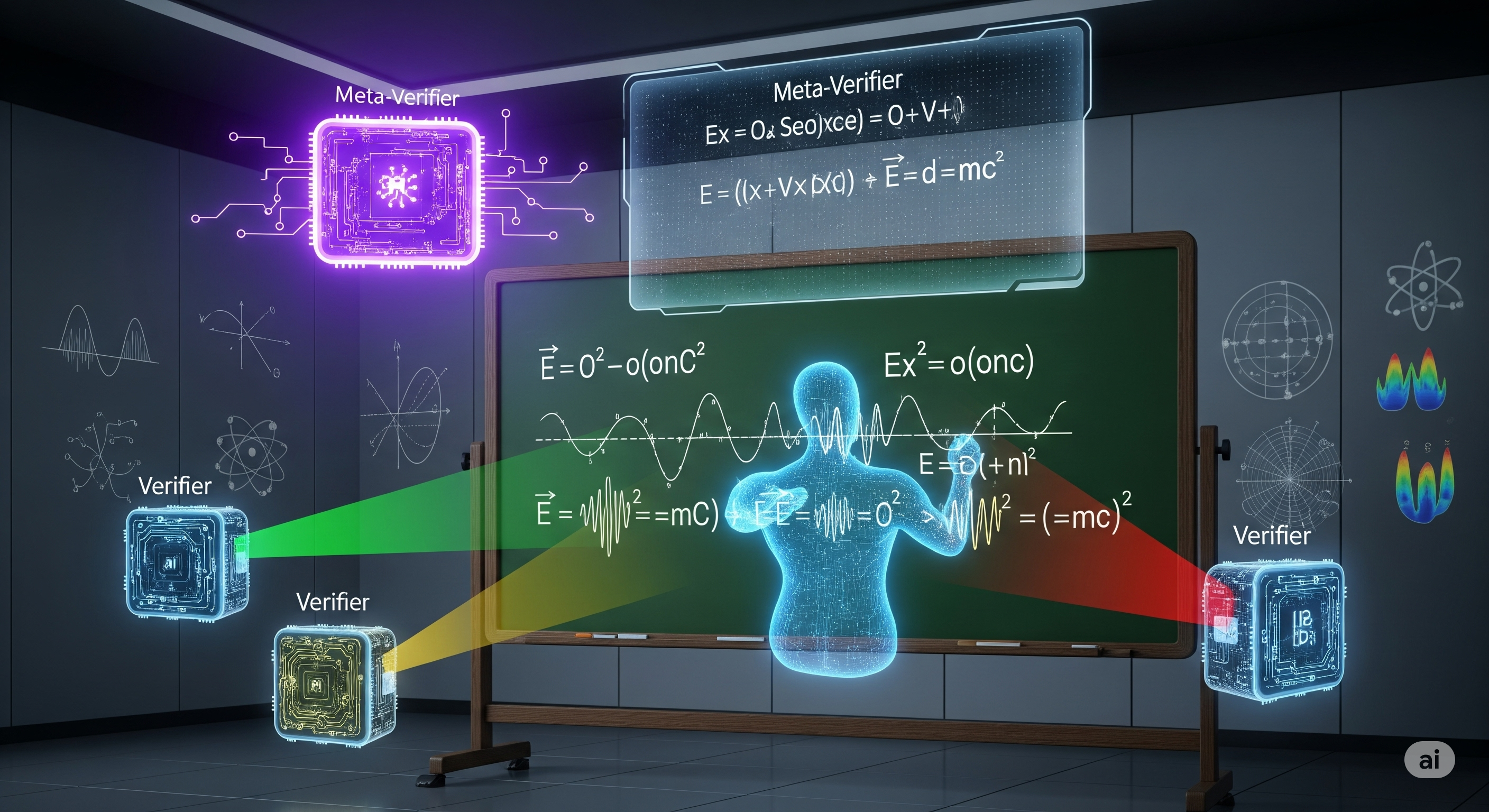

4. Multi-Agent Review (best)

Three different LLMs act as reviewers, each scoring the solution on six rubric criteria (accuracy, logic, completeness, and others). A meta-verifier then filters out invalid critiques and sends the valid ones back to the original LLM.

This approach doesn’t need model retraining and can use open-source verifiers (like DeepSeek-R1, Qwen3-14B) to validate commercial models (like o4-mini or Gemini), reducing API costs.
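The four strategies can be sketched as plain functions over a generic text-in, text-out model interface. This is a minimal illustration, not the authors' code: the `LLM` alias, all function names, the prompt wording (beyond the quoted "Physics Professor" instruction), and the VALID/INVALID reply convention for the meta-verifier are assumptions made here for readability. Only three of the six rubric criteria are named in this article, so `RUBRICS` is deliberately partial.

```python
from typing import Callable, Sequence

# An "LLM" here is any function mapping a prompt string to a response string.
# Wire it to whatever client you use; no specific vendor API is assumed.
LLM = Callable[[str], str]

# The paper uses six rubric criteria; only these three are named in the article.
RUBRICS = ("accuracy", "logic", "completeness")


def baseline(solver: LLM, question: str) -> str:
    # 1. Answer directly, with no feedback loop.
    return solver(f"Solve this physics problem step by step:\n{question}")


def self_refine(solver: LLM, question: str) -> str:
    # 2. The same model re-checks its own draft (no external reference).
    draft = baseline(solver, question)
    return solver(
        "You are a Physics Professor. Check this solution for errors "
        f"and return a corrected version.\nProblem: {question}\nSolution: {draft}"
    )


def critique(reviewer: LLM, question: str, draft: str) -> str:
    # A reviewer scores the draft on the rubric and explains any errors.
    rubric = ", ".join(RUBRICS)
    return reviewer(
        f"Review this physics solution. Score it on {rubric} and explain any errors.\n"
        f"Problem: {question}\nSolution: {draft}"
    )


def revise(solver: LLM, question: str, draft: str, critiques: Sequence[str]) -> str:
    # Shared final step: send the draft plus external feedback back to the original solver.
    feedback = "\n---\n".join(critiques) if critiques else "No valid issues were found."
    return solver(
        f"Problem: {question}\nYour solution: {draft}\nReviewer feedback:\n{feedback}\n"
        "Revise your solution using the feedback."
    )


def single_agent_review(solver: LLM, reviewer: LLM, question: str) -> str:
    # 3. One external reviewer gives feedback; the solver tries again.
    draft = baseline(solver, question)
    return revise(solver, question, draft, [critique(reviewer, question, draft)])


def multi_agent_review(
    solver: LLM, reviewers: Sequence[LLM], meta_verifier: LLM, question: str
) -> str:
    # 4. Several reviewers critique the draft; a meta-verifier keeps only valid critiques.
    draft = baseline(solver, question)
    kept = []
    for reviewer in reviewers:
        note = critique(reviewer, question, draft)
        verdict = meta_verifier(
            "Is this critique of the solution correct? Reply VALID or INVALID.\n"
            f"Problem: {question}\nSolution: {draft}\nCritique: {note}"
        )
        if verdict.strip().upper().startswith("VALID"):
            kept.append(note)
    return revise(solver, question, draft, kept)


# Toy stand-ins so the sketch runs offline.
def toy_solver(prompt: str) -> str:
    return "REVISED" if "Reviewer feedback" in prompt else "DRAFT"


def toy_reviewer(prompt: str) -> str:
    return "Units in step 2 are inconsistent."


def toy_meta_verifier(prompt: str) -> str:
    return "VALID"


print(multi_agent_review(toy_solver, [toy_reviewer] * 3, toy_meta_verifier,
                         "Find the period of a 1 m pendulum."))  # prints REVISED
```

Because every role is just a callable, the solver, the reviewers, and the meta-verifier can each be a different model, which is what lets an open-source reviewer check a commercial solver without any retraining.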

The Results: Performance, Amplified

On average, multi-agent review lifted scores in every difficulty tier by roughly 2 to 3 PPS points, with the largest absolute gain on easy problems and the smallest on hard ones:

| Difficulty | Baseline PPS | Multi-Agent PPS | Gain |
|---|---|---|---|
| Easy | 88.96 | 91.78 | +2.82 |
| Medium | 79.21 | 81.48 | +2.27 |
| Hard | 66.20 | 68.23 | +2.03 |
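Since the table reports absolute points, it can help to check how the gains compare once each tier's starting level is taken into account. This short calculation uses only the numbers in the table. The "gap closed" measure (gain divided by the distance from the baseline to 100) is an illustrative metric introduced here, not one from the paper, and it assumes PPS is on a 0 to 100 scale.

```python
# (difficulty tier, baseline PPS, multi-agent PPS) as reported in the table
RESULTS = [
    ("Easy", 88.96, 91.78),
    ("Medium", 79.21, 81.48),
    ("Hard", 66.20, 68.23),
]


def summarize(rows):
    """Return {tier: (absolute gain, share of remaining gap to 100 closed)}."""
    out = {}
    for tier, base, multi in rows:
        gain = multi - base              # absolute gain in PPS points
        closed = gain / (100.0 - base)   # assumes PPS tops out at 100
        out[tier] = (round(gain, 2), round(closed, 3))
    return out


for tier, (gain, closed) in summarize(RESULTS).items():
    print(f"{tier:<6} gain={gain:+.2f}  gap closed={closed:.1%}")
```

On these figures the multi-agent step closes roughly a quarter of the remaining gap on easy problems but only about 6% on hard ones, so the hard tier is where most of the headroom remains.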

But the real surprise? Self-refinement sometimes hurts performance. Llama 4 Maverick and Gemma 3 showed consistent drops when rechecking their own answers. This hints at a limitation of ungrounded introspection: without external reference, LLMs may reinforce the very errors they made.

The Implication: Verification Trumps Introspection

The study’s strongest message is this: external, diverse critique works better than internal monologue.

Why? Because errors in physics aren’t always syntactic—they’re often conceptual. An LLM might produce a beautifully formatted derivation that uses the wrong physical principle. Another LLM with different priors can flag this.

Even more intriguingly, the system assigns weights to the critiques (e.g., Phi-4 is weighted 0.5, Qwen3 only 0.2) based on empirical reviewer quality. It’s not democracy—it’s weighted consensus.
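One simple way to turn weighted reviewer judgments into a single verdict is a weighted mean. The sketch below assumes each reviewer returns a numeric score (here on an arbitrary 0 to 10 scale) and that weights are fixed per reviewer. Only the Phi-4 (0.5) and Qwen3 (0.2) weights come from the text; the aggregation rule, the score scale, the `weighted_consensus` function, and the third reviewer's name and 0.3 weight are hypothetical.

```python
# Reviewer weights: Phi-4 (0.5) and Qwen3 (0.2) are the values quoted above;
# "reviewer_3" and its 0.3 weight are hypothetical placeholders.
WEIGHTS = {"Phi-4": 0.5, "Qwen3": 0.2, "reviewer_3": 0.3}


def weighted_consensus(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted mean sum(w_i * s_i) / sum(w_i) over reviewers that have a weight."""
    used = [name for name in scores if name in weights]
    total = sum(weights[name] for name in used)
    if total == 0:
        raise ValueError("no weighted reviewers among the scores")
    return sum(weights[name] * scores[name] for name in used) / total


# Two reviewers rate a solution highly; the low-weight reviewer disagrees.
scores = {"Phi-4": 9.0, "Qwen3": 3.0, "reviewer_3": 8.0}
print(weighted_consensus(scores, WEIGHTS))
```

With these inputs the consensus comes out around 7.5, whereas a plain average would give about 6.67: the dissent from the low-weight reviewer moves the result less than it would if every reviewer counted equally. Dividing by the sum of the weights actually used also keeps the result on the same scale when a reviewer fails to respond.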

The Future: Plug-and-Play Intelligence Boost

What excites us at Cognaptus is that this approach is training-free. That means you could:

- Apply this to internal tutoring apps

- Use it to vet AI-generated code or reports in engineering

- Add plug-in reviewers to business logic agents for domain-specific QA

As LLMs get more confident, it’s time their reasoning gets cross-examined. In science—especially physics—accuracy depends on peer review. Why should AI be any different?

Cognaptus: Automate the Present, Incubate the Future