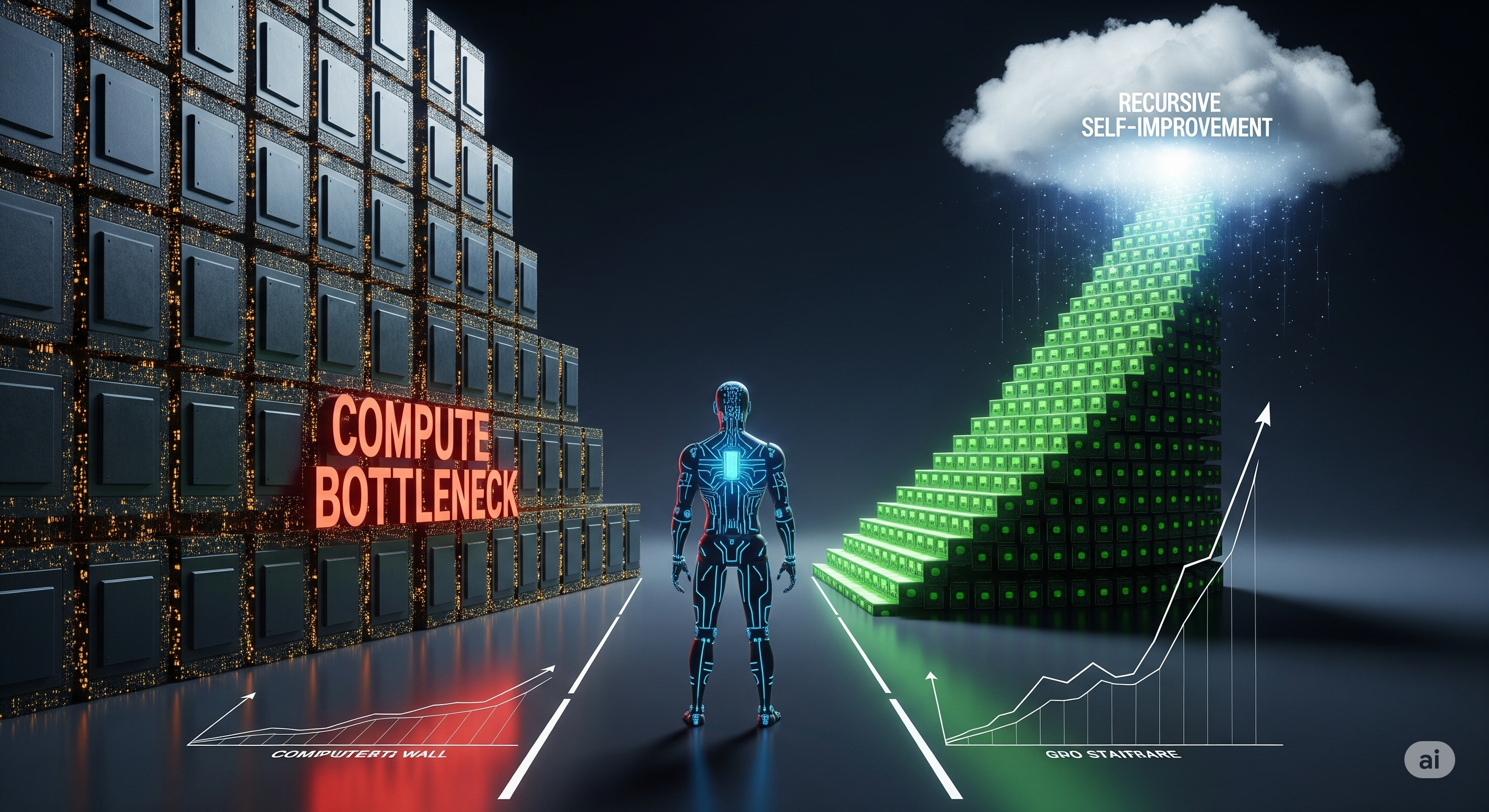

Is artificial intelligence on the brink of recursively improving itself into superintelligence? The theoretical path—recursive self-improvement (RSI)—has become a cornerstone of AGI forecasting. But one inconvenient constraint looms large: compute. Can software alone drive an intelligence explosion, or will we hit a hardware ceiling?

A new paper by Whitfill and Wu (2025) tackles this with rare empirical rigor. Their key contribution is estimating the elasticity of substitution between research compute and cognitive labor across four major AI labs (OpenAI, DeepMind, Anthropic, and DeepSeek) over the past decade. The result: the answer depends on how you define the production function of AI research.

Two Models, Two Futures

Whitfill and Wu explore two economic models to examine how research compute and labor interact:

1. Baseline CES Model:

- Assumes compute and labor produce research effort via a Constant Elasticity of Substitution (CES) function.

- Yields an elasticity estimate of σ = 2.58, suggesting compute and labor are strong substitutes.

Implication: RSI is plausibly explosive. AI can replace human researchers and scale progress without proportionate compute growth.

2. Frontier Experiments Model:

- Adjusts for the growing compute requirements of near-frontier LLM experiments.

- Yields an elasticity estimate of σ = -0.10, effectively zero, which implies compute and labor behave as near-perfect complements.

Implication: RSI is bottlenecked by compute. Without enough GPUs, smarter AIs can’t make more progress.
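To make the contrast concrete, here is a minimal sketch of a textbook CES aggregate, holding compute fixed while labor grows. It is not the paper's estimation code. The share parameter `alpha = 0.5`, the function name `ces_output`, and the input values are illustrative assumptions. Because the standard CES form needs a positive σ, the sketch uses σ = 0.10 as a hypothetical stand-in for the near-zero frontier estimate.

```python
import math

def ces_output(compute, labor, sigma, alpha=0.5):
    """Textbook CES aggregate: (alpha*C^rho + (1-alpha)*L^rho)^(1/rho),
    where rho = (sigma - 1) / sigma. alpha = 0.5 is an illustrative assumption."""
    if sigma <= 0:
        raise ValueError("sigma must be positive for a well-behaved CES function")
    if math.isclose(sigma, 1.0):
        # Cobb-Douglas limit as sigma approaches 1
        return compute**alpha * labor ** (1 - alpha)
    rho = (sigma - 1.0) / sigma
    return (alpha * compute**rho + (1 - alpha) * labor**rho) ** (1.0 / rho)

SIGMA_SUBSTITUTES = 2.58  # baseline estimate quoted in the article
SIGMA_COMPLEMENTS = 0.10  # hypothetical positive stand-in for the near-zero estimate

# Hold compute at 1 and scale cognitive labor (human or AI) by up to a million.
for labor in (1, 10, 100, 1_000, 1_000_000):
    subs = ces_output(1.0, labor, SIGMA_SUBSTITUTES)
    comp = ces_output(1.0, labor, SIGMA_COMPLEMENTS)
    print(f"labor={labor:>9,}  substitutes={subs:>12.2f}  complements={comp:.4f}")
```

Under these assumptions, the substitutes column keeps growing as labor scales, while the complements column flattens out at about 1.08 no matter how much labor is added. That plateau is the compute wall in miniature.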

Theoretical Takeaway: Why Elasticity σ Matters

In their model, the potential for an intelligence explosion hinges on this substitution parameter:

| Elasticity σ | Explosion Condition | Interpretation |
|---|---|---|
| σ < 1 (complements) | Only if ideas get easier to find | Unlikely: progress stalls at compute wall |
| σ = 1 | Needs idea ease + parallelism | Borderline case |
| σ > 1 (substitutes) | RSI feasible if ideas get somewhat easier or parallelizable | Compute need not scale linearly |

Why the Estimates Diverge

The tension boils down to what counts as the key input:

| Model | Key Variable | Price Trend | Implied Elasticity |
|---|---|---|---|
| CES in Compute | Raw compute cost | Falling fast | Substitution |
| Frontier Experiments CES | Cost per frontier-scale experiment | Rising due to model size | Complementarity |

The baseline model captures a world where smarter experimental design offsets brute force. The frontier model reflects a world where only massive-scale experiments matter—and their cost grows rapidly.
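A toy calculation can show how the choice of input changes the estimate. The sketch below relies on the standard CES cost-minimization condition, ln(C/L) = const + σ · ln(w/r), where w is the wage and r is the price of compute, so σ is the slope of a log-log regression. This is not necessarily the authors' exact specification. Every growth rate is a hypothetical number chosen for illustration, and the data are synthetic, not taken from the paper.

```python
def ols_slope(x, y):
    """Slope of a simple least-squares line of y on x (with intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx

# Hypothetical annual log growth rates (assumptions, not estimates from the paper).
WAGE_GROWTH = 0.05             # researcher wages rise slowly
COMPUTE_PRICE_GROWTH = -0.30   # raw compute gets cheaper fast
EXPERIMENT_SIZE_GROWTH = 0.90  # compute needed per frontier experiment balloons
TRUE_SIGMA = 2.58              # used only to generate the synthetic input ratios

years = range(10)
log_wage = [WAGE_GROWTH * t for t in years]
log_compute_price = [COMPUTE_PRICE_GROWTH * t for t in years]

# Synthetic compute-to-labor ratio implied by the first-order condition.
log_compute_per_labor = [
    TRUE_SIGMA * (w - r) for w, r in zip(log_wage, log_compute_price)
]

# Baseline view: regress ln(C/L) on ln(w/r) using the raw compute price.
sigma_baseline = ols_slope(
    [w - r for w, r in zip(log_wage, log_compute_price)],
    log_compute_per_labor,
)

# Frontier view: the input is experiments (compute / experiment size) and the
# price is the cost per experiment (compute price * experiment size).
log_size = [EXPERIMENT_SIZE_GROWTH * t for t in years]
log_experiment_price = [r + s for r, s in zip(log_compute_price, log_size)]
log_experiments_per_labor = [c - s for c, s in zip(log_compute_per_labor, log_size)]
sigma_frontier = ols_slope(
    [w - p for w, p in zip(log_wage, log_experiment_price)],
    log_experiments_per_labor,
)

print(f"baseline sigma estimate: {sigma_baseline:.2f}")
print(f"frontier sigma estimate: {sigma_frontier:.3f}")
```

With these made-up numbers, the same underlying spending pattern yields a large σ when compute is measured in raw units and a σ close to zero when it is measured in frontier-scale experiments. The point is only that redefining the input and its price can flip the conclusion, not that these figures reproduce the paper's results.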

Caution: Small Sample, Big Implications

While the paper is compelling, its conclusions come with caveats:

- Only 4 firms over 10 years—data is sparse.

- Wage and compute cost estimates involve imputation.

- The CES functional form may oversimplify real-world research dynamics.

- The analysis assumes AI did not meaningfully contribute to research labor before 2024 (a shaky assumption).

Still, it’s a first-of-its-kind empirical study of AI research’s production function—a welcome shift from purely speculative forecasting.

What This Means for the Future of AI

- If compute and labor are substitutes, then the intelligence explosion scenario is plausible rather than merely speculative, provided cognitive labor (human or AI) keeps growing and research ideas get somewhat easier to find or parallelize.

- If they are complements, then access to massive compute remains a limiting factor, making policies like export controls potentially decisive.

Either way, the study sharpens the question: is progress in AI research more about brains or GPUs?

Until we know the answer, the feasibility of runaway RSI remains an open—and urgent—question.
