Chain-of-Thought (CoT) prompting has become a go-to technique for improving multi-step reasoning in large language models (LLMs). But is it really helping models think better—or just encouraging them to bluff more convincingly?

A new paper from Leiden University, “How does Chain of Thought Think?”, delivers a mechanistic deep dive into this question. By combining sparse autoencoders (SAEs) with activation patching, the authors dissect whether CoT actually changes what a model internally computes—or merely helps its outputs look better.

Their answer? CoT fundamentally restructures internal reasoning—but only if your model is large enough to care.

📌 From Black Box to Sparse Box: The Setup

The authors apply SAEs to the internal activations of two EleutherAI models:

- Pythia-70M (a small model)

- Pythia-2.8B (a moderately large model)

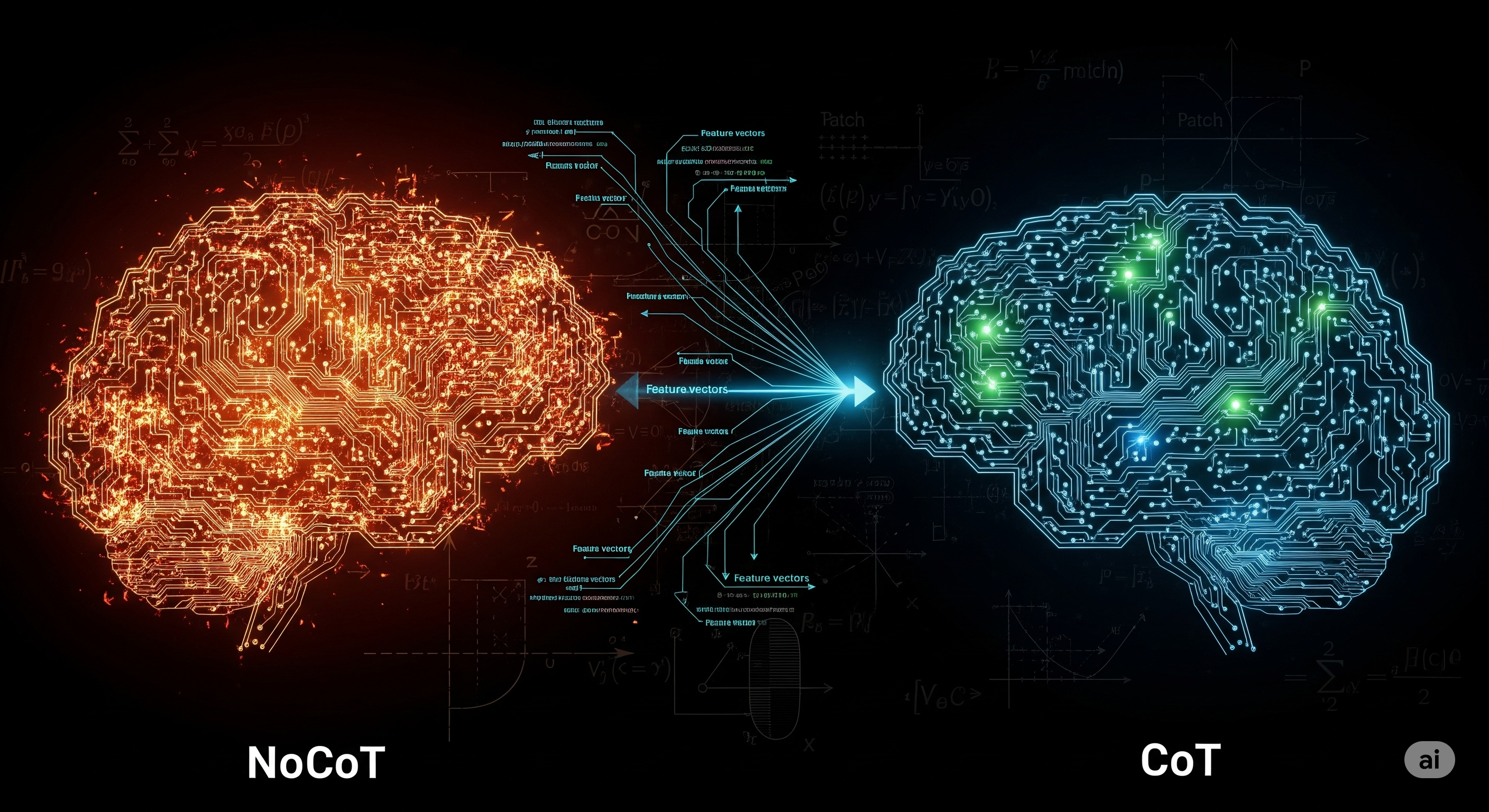

They feed both models the same GSM8K math problems under two prompting modes:

- NoCoT: Just the question

- CoT: Question preceded by step-by-step examples
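Here is a minimal sketch of how the two prompt modes might be assembled. The `Q:`/`A:` template, the `build_prompt` helper, and the worked exemplar are hypothetical. The paper's exact GSM8K prompt format is not given here.

```python
# Hypothetical few-shot exemplar (question, worked answer); not taken from the paper.
COT_EXEMPLARS = [
    (
        "Tom has 3 boxes with 4 apples each. He eats 2 apples. How many apples are left?",
        "Step 1: 3 boxes * 4 apples = 12 apples. Step 2: 12 - 2 = 10. The answer is 10.",
    ),
]


def build_prompt(question: str, mode: str) -> str:
    """Build a prompt: 'nocot' gives the bare question, 'cot' prepends worked exemplars."""
    if mode == "nocot":
        return f"Q: {question}\nA:"
    if mode == "cot":
        shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in COT_EXEMPLARS)
        return f"{shots}\n\nQ: {question}\nA:"
    raise ValueError(f"unknown mode: {mode!r}")


nocot_prompt = build_prompt("A pen costs 2 dollars. How much do 5 pens cost?", "nocot")
cot_prompt = build_prompt("A pen costs 2 dollars. How much do 5 pens cost?", "cot")
```

Both prompts end with the same question text. That makes it straightforward to align the question tokens across the two runs when their activations are compared.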

Then they:

- Train SAEs on hidden activations to extract interpretable, sparse features.

- Perform activation patching: swap CoT features into NoCoT runs to test causal effect.

- Analyze activation sparsity and feature semantics.
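For readers new to SAEs, the encode/decode step fits in a few lines. The sketch uses the common ReLU formulation with an L1 sparsity penalty, written in plain Python. The paper's exact architecture, dictionary size, and penalty weight are assumptions here, and all names and toy weights are hypothetical.

```python
# Minimal sparse-autoencoder (SAE) forward pass and training loss, in plain Python.
# Assumption: a standard ReLU SAE with the decoder bias subtracted before encoding;
# the paper's exact architecture, dictionary size, and L1 coefficient may differ.

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def sae_encode(x, W_enc, b_enc, b_dec):
    """Feature activations f = ReLU(W_enc (x - b_dec) + b_enc)."""
    centered = [a - b for a, b in zip(x, b_dec)]
    pre = [z + b for z, b in zip(matvec(W_enc, centered), b_enc)]
    return [max(0.0, z) for z in pre]

def sae_decode(f, W_dec, b_dec):
    """Reconstruction x_hat = W_dec f + b_dec."""
    return [z + b for z, b in zip(matvec(W_dec, f), b_dec)]

def sae_loss(x, W_enc, b_enc, W_dec, b_dec, l1_coeff=1e-3):
    """Squared reconstruction error plus an L1 penalty that pushes most features to zero."""
    f = sae_encode(x, W_enc, b_enc, b_dec)
    x_hat = sae_decode(f, W_dec, b_dec)
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    return recon + l1_coeff * sum(abs(a) for a in f)

# Toy example: a 2-d hidden state and a 3-feature dictionary (hypothetical weights).
W_enc = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
b_enc = [0.0, 0.0, 0.0]
W_dec = [[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
b_dec = [0.0, 0.0]
x = [1.0, -0.5]

features = sae_encode(x, W_enc, b_enc, b_dec)  # only the first feature fires
loss = sae_loss(x, W_enc, b_enc, W_dec, b_dec)
```

Training would minimize `sae_loss` over many cached activations with a gradient-based optimizer. The L1 term, together with the ReLU, drives most feature activations to exactly zero, which is the sparsity the rest of the analysis relies on.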

🔍 Insight #1: CoT Only Helps If You’re Big Enough

The headline result is a stark scale threshold:

| Effect | Pythia-70M | Pythia-2.8B |
|---|---|---|
| CoT improves feature interpretability | No | Yes (p = 0.004) |
| CoT feature patching boosts output | No | Yes (+1.2 → +4.3 log-prob) |
| CoT induces sparse, modular activations | Mild | Strong |

In other words: CoT doesn’t just need prompting—it needs capacity. In the 70M model, CoT features don’t transfer well and often harm performance. In 2.8B, they cleanly boost output quality and align with more interpretable internal structures.
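The patching experiment behind the second table row can be approximated with a toy model. The idea is to overwrite a subset of NoCoT SAE features with their CoT values, decode back to a hidden state, and compare the log-probability of the correct token before and after. The readout matrix `U`, the weights, and every helper name are hypothetical. A real run would write the patched hidden state back into the transformer's forward pass rather than a single linear layer, and often adds back the SAE's reconstruction error, which this sketch ignores.

```python
import math

# Sketch of SAE feature patching: CoT feature values overwrite selected NoCoT
# features, and we measure the change in the target token's log-probability.
# Assumptions: a toy linear readout U stands in for the rest of the model,
# and the SAE reconstruction error term is ignored.

def patch_features(f_nocot, f_cot, indices):
    """Return a copy of f_nocot with the chosen indices taken from f_cot."""
    patched = list(f_nocot)
    for i in indices:
        patched[i] = f_cot[i]
    return patched

def decode(f, W_dec, b_dec):
    """SAE decoder: hidden state = W_dec f + b_dec."""
    return [sum(w * a for w, a in zip(row, f)) + b for row, b in zip(W_dec, b_dec)]

def log_softmax(logits):
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]

def target_logprob(h, U, target):
    """Log-probability of token `target` under logits = U h."""
    logits = [sum(u * a for u, a in zip(row, h)) for row in U]
    return log_softmax(logits)[target]

def patching_effect(f_nocot, f_cot, indices, W_dec, b_dec, U, target):
    """Log-prob gain on `target` from patching the chosen CoT features into the NoCoT run."""
    base = target_logprob(decode(f_nocot, W_dec, b_dec), U, target)
    patched = patch_features(f_nocot, f_cot, indices)
    return target_logprob(decode(patched, W_dec, b_dec), U, target) - base

# Toy numbers (hypothetical): 3 SAE features, 2-d hidden state, 2-token vocabulary.
W_dec = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
b_dec = [0.0, 0.0]
U = [[1.0, 0.0], [0.0, 1.0]]
f_nocot = [1.0, 0.0, 0.0]
f_cot = [0.2, 2.0, 0.5]

gain = patching_effect(f_nocot, f_cot, [1], W_dec, b_dec, U, target=1)
```

A positive `gain` means the patched CoT features moved the NoCoT run toward the target token. With these toy numbers the gain comes out at 1.0 nats.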

🔍 Insight #2: Random Features > Top Features

Surprisingly, the most helpful CoT features weren’t necessarily the top-K most activated ones.

In Pythia-2.8B, patching in a random sample of CoT features improved outputs more than patching in the top-K features ranked by activation strength.

Why? Because CoT signals are widely distributed across the feature space. The highest-activation features may latch onto local quirks, while a random sample captures more of the distributed reasoning trace.

This insight challenges many interpretability pipelines that fixate on the strongest activations. Sometimes, breadth beats strength.
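The two selection strategies compared in this section are easy to state in code. The sketch assumes a "random" subset is drawn uniformly from features active in the CoT run, which may not match the paper's exact baseline. `score_fn` is a hypothetical callable that maps an index list to a patching score, such as a log-prob gain.

```python
import random

# Two ways to choose which CoT features to patch. Assumption: "random" means a
# uniform sample among features that are active (> 0) in the CoT run; the paper
# may define its random baseline differently.

def top_k_indices(f, k):
    """Indices of the k features with the largest activation, strongest first."""
    return sorted(range(len(f)), key=lambda i: f[i], reverse=True)[:k]

def random_indices(f, k, seed=0):
    """k distinct indices drawn uniformly from the active features."""
    active = [i for i, a in enumerate(f) if a > 0]
    return random.Random(seed).sample(active, min(k, len(active)))

def random_baseline(score_fn, f, k, n_draws=20):
    """Mean score over several random subsets, since any single draw is noisy."""
    return sum(score_fn(random_indices(f, k, seed=s)) for s in range(n_draws)) / n_draws

f_cot = [0.1, 3.0, 0.0, 2.0, 0.5]  # hypothetical CoT feature activations
print(top_k_indices(f_cot, 2))   # [1, 3]
print(random_indices(f_cot, 2))  # two of the active indices 0, 1, 3, 4
```

Averaging over draws matters here. A claim that random subsets beat top-K is only meaningful against the spread of many random samples, not one lucky draw.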

🔍 Insight #3: CoT Makes Thinking Sparse and Strategic

CoT doesn’t just change what the model says—it changes how it thinks. Activation histograms show that CoT:

- Suppresses most neurons, activating only a few key ones.

- Encourages structured sparsity, where some SAE features rely on just a handful of neurons.

- Induces semantic resource allocation, assigning specific neuron subsets to distinct reasoning subgoals.

This kind of internal reorganization is exactly what interpretability researchers hope to see: disentangled, modular, and compositional features that reflect coherent sub-tasks.
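Histograms are hard to compare at a glance, so one option is to reduce each activation vector to a scalar sparsity score. The sketch below uses the fraction of active units and the sparseness measure from Hoyer (2004). Both are illustrative choices, not metrics the paper is known to report.

```python
import math

# Two scalar summaries of activation sparsity. These metrics are our choice for
# illustration; the paper reports activation histograms, not necessarily these numbers.

def active_fraction(acts, eps=1e-6):
    """Fraction of units with |activation| > eps (lower means sparser)."""
    return sum(1 for a in acts if abs(a) > eps) / len(acts)

def hoyer_sparsity(acts):
    """Hoyer sparseness: 0 if all magnitudes are equal, 1 if exactly one unit is non-zero."""
    n = len(acts)
    l1 = sum(abs(a) for a in acts)
    l2 = math.sqrt(sum(a * a for a in acts))
    if n < 2 or l2 == 0.0:
        return 0.0  # undefined in these cases; return 0.0 by convention
    return (math.sqrt(n) - l1 / l2) / (math.sqrt(n) - 1)

dense = [1.0, 1.0, 1.0, 1.0]   # every unit equally active
sparse = [0.0, 0.0, 0.0, 3.0]  # a single unit carries the signal
```

Computed per token for matched CoT and NoCoT runs, a lower active fraction and a higher Hoyer score under CoT would be the numeric counterpart of the histogram shift described above.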

🧠 Faithful Thoughts Need Room to Grow

This study adds strong causal evidence to a growing belief: CoT isn’t just a formatting trick. For sufficiently large LLMs, CoT prompts restructure internal representations, making them not only more effective but more interpretable.

But it also draws a cautionary boundary: faithful reasoning requires scale.

Small models might mimic the style of step-by-step reasoning, but lack the capacity to internalize it in a semantically meaningful way. They echo the form without absorbing the function.

📌 Implications for Practice

| If you’re… | Then consider… |
|---|---|
| Designing interpretability tools | Patch in random feature subsets, not just top-K activations |
| Benchmarking LLM reasoning | Use causal tests, not just output correctness |
| Training instruction-tuned models | Reserve CoT prompting for larger models; the paper compares only 70M and 2.8B, so the exact size threshold is untested |

This paper offers a replicable framework to quantify CoT faithfulness at the feature level. With the release of code and data, it’s a template for future analysis across more tasks and models.

Cognaptus: Automate the Present, Incubate the Future.