If you’ve ever tried to run a powerful AI model on a modest device (say, a drone, a farm robot, or even a Raspberry Pi), you’ve likely hit the wall of hardware limitations. Today’s most accurate models are large and computationally hungry. Enter knowledge grafting, a compression technique built on a refreshingly biological metaphor: rather than just trimming the fat, it transfers the muscle.

Rethinking Compression: Not What to Cut, But What to Keep

Traditional model optimization methods (quantization, pruning, and distillation) all wrestle with the same difficult trade-off: shrinking the model while limiting the damage to performance. They often fall short, especially once compression is pushed past 5–6x.

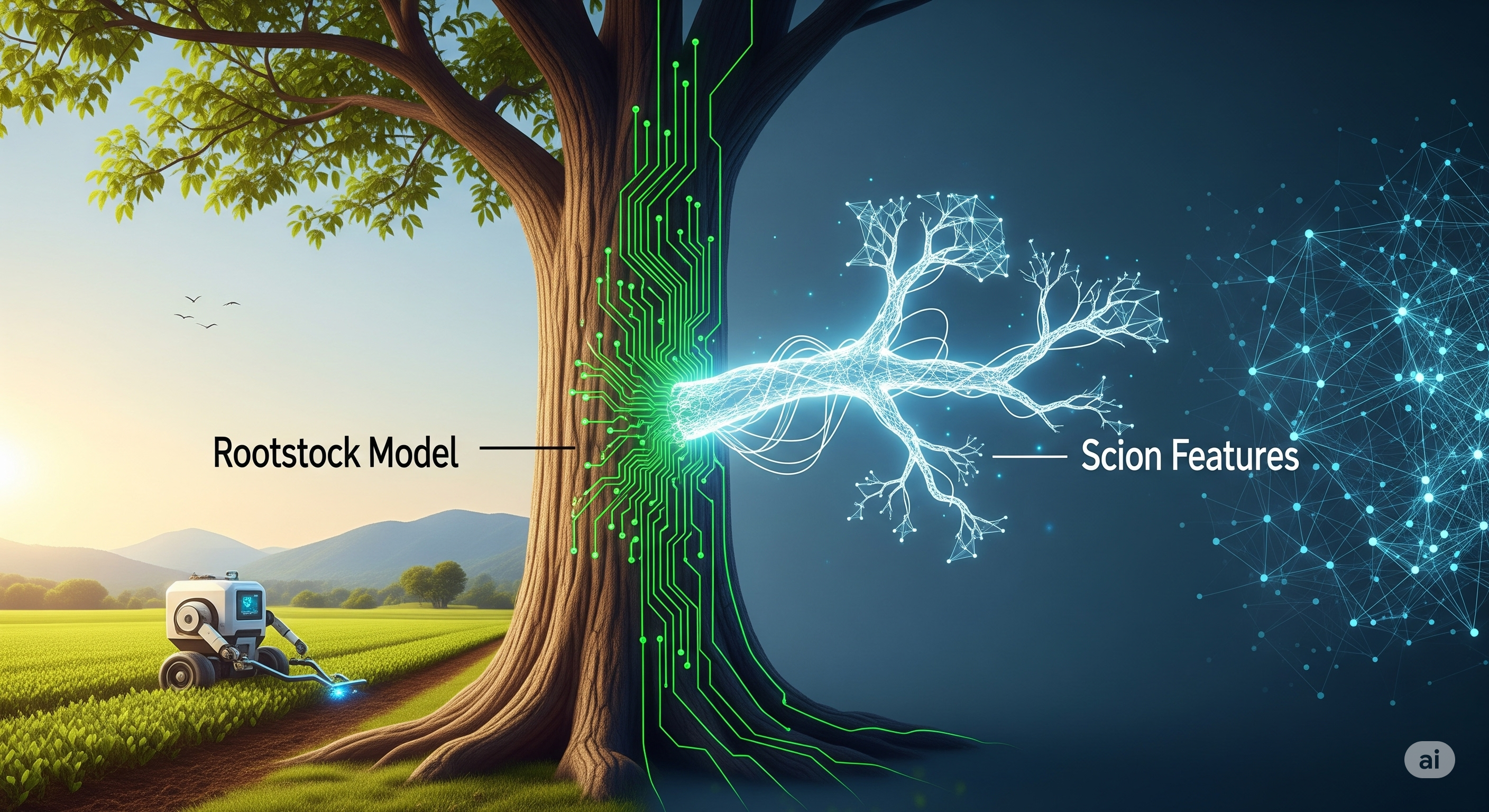

Knowledge grafting flips the script. Instead of asking what to remove, it asks: what’s worth preserving, and how can we transfer it to a smaller body? It borrows from horticulture, where a scion (a branch with desired traits) is grafted onto a rootstock (a robust base). Here, the scion is a set of rich intermediate features from a large donor model, and the rootstock is a lean model purpose-built for efficient computation.

This is not just metaphorical elegance—it’s architectural redesign. The scion’s features are extracted (via global average pooling) and concatenated into the rootstock through new dense layers. No retraining to mimic behavior, no full-layer transfer. It’s direct, modular, and effective.
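To make the mechanics concrete, here is a minimal sketch of the grafting step in plain Python, under some loud assumptions: the function names, the toy feature maps, and the random weights are all hypothetical, and in a real model the new dense layer's weights would presumably be learned rather than sampled. It only shows the data flow described above: pool the scion's feature maps, concatenate them with the rootstock's own features, and pass the result through a new dense layer.

```python
import random

def global_average_pool(feature_maps):
    """Collapse each channel's H x W map to its mean: one value per channel."""
    pooled = []
    for channel in feature_maps:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled

def dense(inputs, weights, biases):
    """Fully connected layer with ReLU: one output per weight row."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def graft(scion_maps, rootstock_features, weights, biases):
    """Pool the scion maps, concatenate with rootstock features, apply the new dense layer."""
    grafted = global_average_pool(scion_maps) + list(rootstock_features)
    return dense(grafted, weights, biases)

# Toy inputs (hypothetical): 2 scion channels of 2x2 maps, 3 rootstock features.
scion_maps = [
    [[1.0, 3.0], [5.0, 7.0]],  # channel 0, mean 4.0
    [[0.0, 2.0], [2.0, 4.0]],  # channel 1, mean 2.0
]
rootstock_features = [0.5, -1.0, 2.0]

# Placeholder weights for the new dense layer: 5 inputs -> 4 outputs.
rng = random.Random(0)
n_in, n_out = 5, 4
weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
biases = [0.0] * n_out

pooled = global_average_pool(scion_maps)
output = graft(scion_maps, rootstock_features, weights, biases)
print(pooled)       # [4.0, 2.0]
print(len(output))  # 4
```

The point of the pooling step is that each scion channel contributes a single number, so the grafted input stays small no matter how large the donor's feature maps are.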

How Much Leaner? Try 8.7x Smaller

The numbers speak loudly:

| Metric | Donor Model (VGG16) | Rootstock Model |
|---|---|---|
| Total Parameters | 16.88 million | 1.93 million |
| Model Size | 64.39 MB | 7.38 MB |
| Validation Accuracy | 87.47% | 89.97% |
| Test Accuracy | n/a | 90.45% |

The rootstock model is 88.54% smaller than its donor, and it also generalizes better on the weed detection task, beating the donor’s validation accuracy by 2.5 percentage points (89.97% vs. 87.47%). State-of-the-art models like EfficientNet V2 and Vision Transformers score higher in accuracy (95–97%), but they are 10–45x larger than the rootstock. That trade-off makes them impractical for edge deployment.
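For readers who want to check the headline figures, the short calculation below derives them from the reported sizes, parameter counts, and validation accuracies. The variable names are ours; the inputs are the values reported for the donor and rootstock models.

```python
# Reported values for the donor (VGG16) and the rootstock model.
donor_mb, rootstock_mb = 64.39, 7.38
donor_params_m, rootstock_params_m = 16.88, 1.93
donor_val_acc, rootstock_val_acc = 87.47, 89.97

size_ratio = donor_mb / rootstock_mb
param_ratio = donor_params_m / rootstock_params_m
size_reduction_pct = (1 - rootstock_mb / donor_mb) * 100
val_gain_points = rootstock_val_acc - donor_val_acc

print(f"{size_ratio:.1f}x smaller on disk")              # 8.7x smaller on disk
print(f"{param_ratio:.1f}x fewer parameters")            # 8.7x fewer parameters
print(f"{size_reduction_pct:.2f}% size reduction")       # 88.54% size reduction
print(f"{val_gain_points:.2f} points validation gain")   # 2.50 points validation gain
```

So "8.7x smaller" and "88.54% smaller" are the same fact stated two ways: a ratio of about 8.7 leaves roughly 11.5% of the original size.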

The Right Cut for the Right Constraints

One of the paper’s key insights is that the grafting strategy can be tuned to fit different deployment goals:

- Size-Constrained Performance Maximization: Best for RAM-limited devices (e.g., microcontrollers).

- Performance-Constrained Size Minimization: Best for bandwidth-limited settings (e.g., remote agriculture).

This dual-objective formulation formalizes knowledge grafting not just as a technique, but as an optimization framework.
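One way to picture the two objectives is as two different selection rules over the same pool of candidate grafts. The sketch below is only an illustration, not the paper's procedure: the `Candidate` type and both function names are hypothetical, and the third candidate is a made-up data point added so that the two rules have something to choose between.

```python
from typing import NamedTuple, Optional, Sequence

class Candidate(NamedTuple):
    name: str
    size_mb: float
    accuracy: float  # validation accuracy, in percent

def max_accuracy_under_size(
    candidates: Sequence[Candidate], max_size_mb: float
) -> Optional[Candidate]:
    """Size-constrained performance maximization: best accuracy that fits the budget."""
    feasible = [c for c in candidates if c.size_mb <= max_size_mb]
    return max(feasible, key=lambda c: c.accuracy, default=None)

def min_size_meeting_accuracy(
    candidates: Sequence[Candidate], min_accuracy: float
) -> Optional[Candidate]:
    """Performance-constrained size minimization: smallest model above the accuracy floor."""
    feasible = [c for c in candidates if c.accuracy >= min_accuracy]
    return min(feasible, key=lambda c: c.size_mb, default=None)

candidates = [
    Candidate("donor_vgg16", 64.39, 87.47),       # reported donor figures
    Candidate("rootstock", 7.38, 89.97),          # reported rootstock figures
    Candidate("tiny_hypothetical", 3.00, 85.00),  # made-up point, for illustration only
]

print(max_accuracy_under_size(candidates, 10.0).name)    # rootstock
print(max_accuracy_under_size(candidates, 5.0).name)     # tiny_hypothetical
print(min_size_meeting_accuracy(candidates, 86.0).name)  # rootstock
print(min_size_meeting_accuracy(candidates, 95.0))       # None
```

Both rules return `None` when no candidate satisfies the constraint, which is the signal to relax the budget or the accuracy floor.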

Better Than the Usual Suspects

| Method | Size Reduction | Accuracy Drop | Retraining Cost | Design Philosophy |
|---|---|---|---|---|
| Quantization | 2–4x | ~1.2% | Minimal | Reduce precision |
| Pruning | 3–5x | ~3.6% | High | Delete unimportant weights |
| Distillation | 5–6x | ~5.1% | Very High | Mimic teacher behavior |
| Transfer Learning | 1–2x | Low | Medium | Reuse layers |
| Knowledge Grafting | 8.7x | -0.15% (gain) | Low | Preserve key features |

Crucially, knowledge grafting avoids heavy retraining, making it especially attractive for real-time or on-device model updates. Its minimal training overhead means faster iteration and quicker deployment.

More Than a Shrink Ray—A Blueprint for Edge AI

The initial implementation uses VGG16 on the DeepWeeds dataset, but the authors outline a roadmap to expand grafting in more ambitious directions:

- Automated Feature Selection via genetic algorithms.

- Architecture-Aware Grafting that adapts to branched or modular models.

- Grafting for LLMs with input-dependent routing (think dynamic experts).

- Cross-Model Knowledge Fusion using attention mechanisms between scion and rootstock.

This positions knowledge grafting not just as a workaround for hardware limits, but as a flexible foundation for modular, explainable, and scalable AI.

Knowledge grafting is more than an optimization trick. It’s a reimagining of how deep learning knowledge can be packaged, transferred, and deployed. By thinking in terms of what to graft instead of what to shrink, we open up a new frontier in AI design, one where lean doesn’t mean limited and small can be surprisingly smart.

Cognaptus: Automate the Present, Incubate the Future.