Fine-tuning is the hammer; steering is the scalpel. In an era where models are increasingly opaque and high-stakes, we need tools that guide behavior without overhauling the entire architecture. That’s precisely what GRAINS (Gradient-based Attribution for Inference-Time Steering) delivers: a powerful, interpretable, and modular way to shift the behavior of LLMs and VLMs by leveraging the most fundamental unit of influence—the token.

The Problem with Global Steering

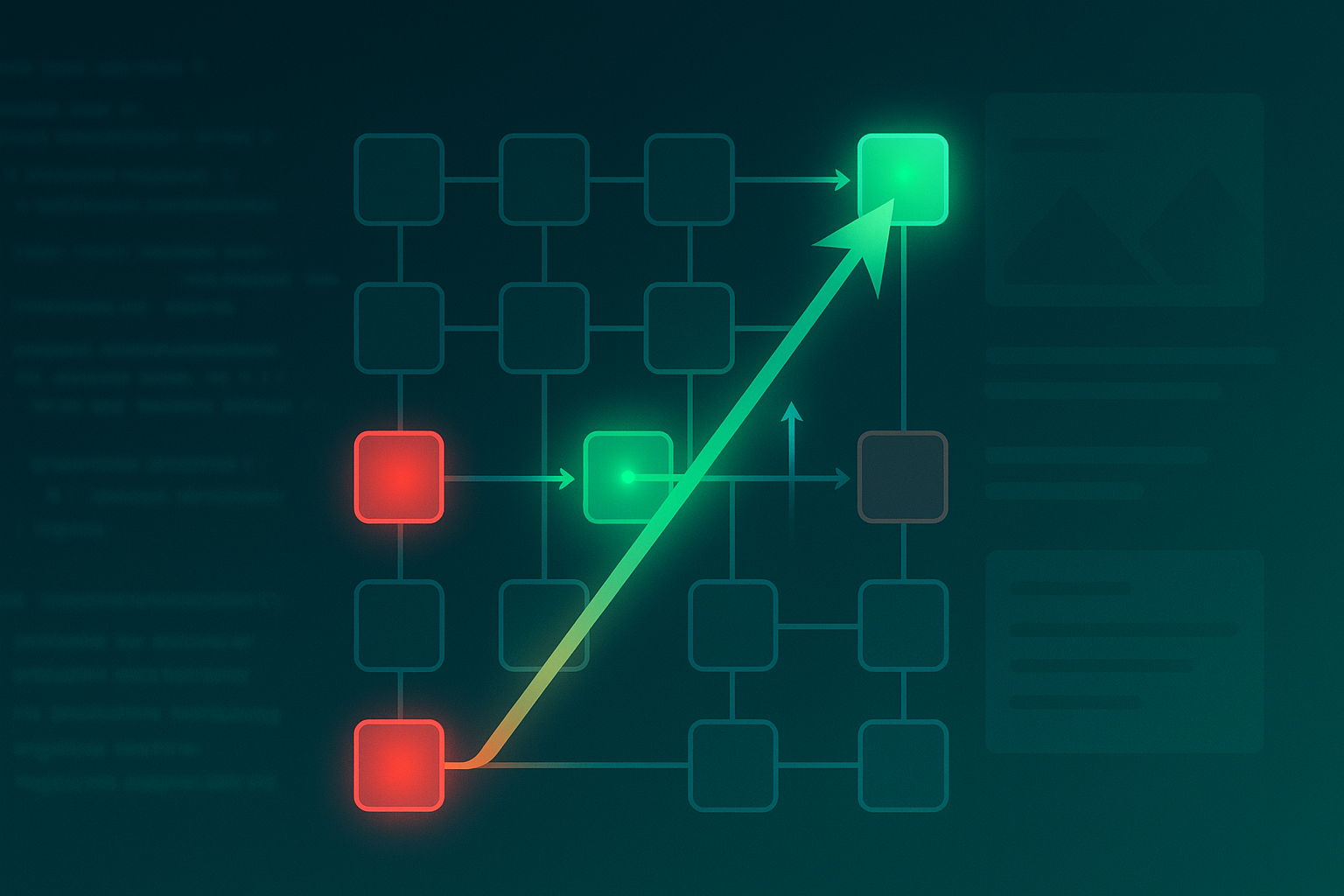

Traditional inference-time steering approaches often rely on global intervention vectors: a blunt, one-size-fits-all shift in hidden activations derived from paired desirable and undesirable examples. But these methods are insensitive to which specific tokens caused bad behavior. It’s like adjusting a recipe because the dish tastes bad—without checking if the salt or the sugar was at fault.
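To make the "global" part concrete, here is a minimal sketch of the kind of mean-difference vector this paragraph describes. It uses NumPy with random arrays standing in for hidden activations. The function names `global_steering_vector` and `apply_global_shift` and the scale `alpha` are hypothetical, chosen for illustration rather than taken from any library or from the GRAINS paper.

```python
import numpy as np

def global_steering_vector(pos_acts, neg_acts):
    """Mean activation of desirable examples minus mean of undesirable ones."""
    pos_acts = np.asarray(pos_acts, dtype=float)
    neg_acts = np.asarray(neg_acts, dtype=float)
    return pos_acts.mean(axis=0) - neg_acts.mean(axis=0)

def apply_global_shift(hidden, vector, alpha=1.0):
    """Add the same vector to every token position (the one-size-fits-all shift)."""
    return np.asarray(hidden, dtype=float) + alpha * vector

# Toy stand-ins for activations: 8 paired examples, hidden size 4 (assumed sizes).
rng = np.random.default_rng(0)
pos = rng.normal(size=(8, 4)) + 1.0
neg = rng.normal(size=(8, 4))
v = global_steering_vector(pos, neg)

hidden = rng.normal(size=(5, 4))           # 5 tokens in one sequence
steered = apply_global_shift(hidden, v, alpha=0.5)
```

Every token receives the identical shift here, whichever tokens actually caused the problem. That is the insensitivity the recipe analogy points at.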

In multimodal settings (like vision-language models), this problem is magnified. Visual and textual tokens contribute unequally, and treating them equally can lead to overcorrections, hallucinations, or broken reasoning chains.

GRAINS: Attribution as a Steering Vector

GRAINS changes the game by introducing a causally informed method that isolates the tokens most responsible for desirable and undesirable behavior, then constructs a directional steering vector based on those differences. Here’s the crux:

- Token Attribution with Integrated Gradients:
  - Instead of using raw output scores, GRAINS uses a preference-based loss (log-likelihood difference between preferred and dispreferred outputs).
  - Integrated Gradients then identifies which input tokens (textual or visual) most strongly support either side.
- Contrastive Steering Vector Construction:
  - GRAINS masks top-k positive and negative tokens, compares resulting activations, and computes per-layer difference vectors.
  - It uses PCA over these deltas across samples to extract robust semantic directions of intervention.
- Layer-wise Inference-Time Adjustment:
  - These steering vectors are added to hidden activations across layers at inference time.
  - Crucially, they are normalized to preserve fluency, avoiding the performance degradation common in projection-based or unsupervised methods.
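The attribution step can be sketched on a toy model. This is not the GRAINS implementation: the tiny tanh network, the all-zeros baseline, the step count, and every name (`preference_score`, `score_gradient`, `integrated_gradients`, `U`, `w_diff`) are assumptions made for illustration. The score plays the role of log p(preferred | x) minus log p(dispreferred | x), and Integrated Gradients assigns one signed number to each input token.

```python
import numpy as np

def preference_score(x, U, w_diff):
    """Toy stand-in for log p(y_pref | x) - log p(y_dispref | x).

    x: (T, d) token embeddings, U: (d, h) feature weights,
    w_diff: (h,) preferred-minus-dispreferred output weights.
    """
    pooled = np.tanh(x @ U).mean(axis=0)   # average token features
    return float(pooled @ w_diff)

def score_gradient(x, U, w_diff):
    """Analytic gradient of preference_score with respect to x, shape (T, d)."""
    z = np.tanh(x @ U)
    return ((1.0 - z**2) * w_diff) @ U.T / x.shape[0]

def integrated_gradients(x, baseline, U, w_diff, steps=128):
    """Midpoint Riemann approximation of Integrated Gradients, one value per token."""
    alphas = (np.arange(steps) + 0.5) / steps
    avg_grad = np.zeros_like(x)
    for a in alphas:
        avg_grad += score_gradient(baseline + a * (x - baseline), U, w_diff)
    avg_grad /= steps
    # Sum over embedding dimensions to get a single attribution per token.
    return ((x - baseline) * avg_grad).sum(axis=1)

rng = np.random.default_rng(0)
T, d, h = 6, 8, 5                          # assumed toy sizes
x = rng.normal(size=(T, d))
U = rng.normal(size=(d, h)) * 0.3
w_diff = rng.normal(size=h)
baseline = np.zeros_like(x)                # assumed all-zeros baseline

token_attr = integrated_gradients(x, baseline, U, w_diff)
k = 2
top_positive = np.argsort(token_attr)[-k:][::-1]   # tokens supporting the preferred output
top_negative = np.argsort(token_attr)[:k]          # tokens supporting the dispreferred output
```

A useful sanity check is the completeness property of Integrated Gradients: the attributions should sum to roughly the score at `x` minus the score at the baseline. In a real model the gradient would come from automatic differentiation rather than a hand-written formula.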

This isn’t steering by brute force. It’s steering by diagnosis.
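The second and third steps in the list above can be sketched in the same spirit. The code assumes the per-sample difference vectors for one layer have already been computed (activations with the top-k positive tokens masked compared against activations with the top-k negative tokens masked) and replaces them with synthetic data. Taking the first principal direction without centering, fixing its sign with the mean delta, and rescaling each token back to its original norm are all illustrative assumptions, as are the names `top_principal_direction`, `steer_and_renormalize`, and `alpha`.

```python
import numpy as np

def top_principal_direction(deltas):
    """First principal direction of the (N, d) difference vectors, as a unit vector."""
    deltas = np.asarray(deltas, dtype=float)
    _, _, vt = np.linalg.svd(deltas, full_matrices=False)
    direction = vt[0]
    # SVD leaves the sign arbitrary; orient the direction along the mean delta.
    if direction @ deltas.mean(axis=0) < 0:
        direction = -direction
    return direction / np.linalg.norm(direction)

def steer_and_renormalize(hidden, direction, alpha=4.0):
    """Add alpha * direction to each token, then restore each token's original norm."""
    hidden = np.asarray(hidden, dtype=float)
    shifted = hidden + alpha * direction
    old_norm = np.linalg.norm(hidden, axis=-1, keepdims=True)
    new_norm = np.linalg.norm(shifted, axis=-1, keepdims=True)
    return shifted * old_norm / np.maximum(new_norm, 1e-12)

# Synthetic deltas for one layer: a shared direction plus noise (toy data).
rng = np.random.default_rng(0)
d, n_samples = 16, 50
true_dir = rng.normal(size=d)
true_dir /= np.linalg.norm(true_dir)
scales = rng.uniform(0.5, 1.5, size=(n_samples, 1))
deltas = scales * true_dir + 0.1 * rng.normal(size=(n_samples, d))

direction = top_principal_direction(deltas)
hidden = rng.normal(size=(5, d))           # 5 tokens at this layer
steered = steer_and_renormalize(hidden, direction)
```

In a full pipeline this would run once per layer, giving one direction per layer, and the adjustment would be applied inside the forward pass. Restoring the norm keeps the size of each hidden state unchanged while rotating it toward the steering direction, which is one plausible reading of "normalized to preserve fluency".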

Performance: Alignment Without Amputation

What makes GRAINS especially compelling is that it outperforms both fine-tuning and other steering methods without harming the model’s general reasoning ability. Some standout results:

| Task | Model | GRAINS Gain |
|---|---|---|
| TruthfulQA (LLM) | LLaMA-3.1-8B | +13.22% accuracy |
| Toxigen (LLM) | LLaMA-3.1-8B | +9.89% accuracy |
| MMHal-Bench (VLM) | LLaVA-1.6-7B | Hallucination rate reduced from 0.624 → 0.514 |
| SPA-VL (VLM) | Qwen2.5-VL-7B | Preference alignment +8.11% |

Yet on general benchmarks like MMLU and MMMU, performance remains nearly untouched: a 0.12% drop with GRAINS, versus 17.78% for competing methods such as CAA (Contrastive Activation Addition).
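As a reading aid for the MMHal-Bench row in the table, the absolute and relative reductions implied by the two reported rates work out as follows. This is plain arithmetic on the figures above, not an additional result:

$$
0.624 - 0.514 = 0.110, \qquad \frac{0.110}{0.624} \approx 0.176 \quad (\text{about } 17.6\%\ \text{relative reduction})
$$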

Why It Matters for the Real World

Inference-time steering with token attribution is more than a technical trick. It offers a path to responsible AI deployment:

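- No retraining required: Ideal for frozen or third-party open-weight models, since the weights stay untouched. Access to hidden activations and gradients is still needed, so fully black-box APIs are out of reach.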

- Multimodal fidelity: Accounts for the causal asymmetry between text and vision.

- Modular and explainable: Each steering vector is interpretable and task-specific.

- Scalable: Runtime cost (e.g., 96 seconds for 50 examples) is negligible compared to hours of fine-tuning.

Imagine applying GRAINS in a medical VQA system to suppress hallucinated diagnoses, or in customer-facing chatbots to minimize toxicity without dampening helpfulness. It’s like giving models a moral compass that’s tunable per interaction, not hard-coded.

Call it What It Is: Interpretability-Driven Alignment

The GRAINS framework reminds us that interpretability and alignment aren’t separate concerns. By treating attribution not as a post-hoc explanation but as a control signal, we gain a principled way to intervene when models go astray. It’s a vision of alignment that is targeted, tunable, and transparent.

Cognaptus: Automate the Present, Incubate the Future.