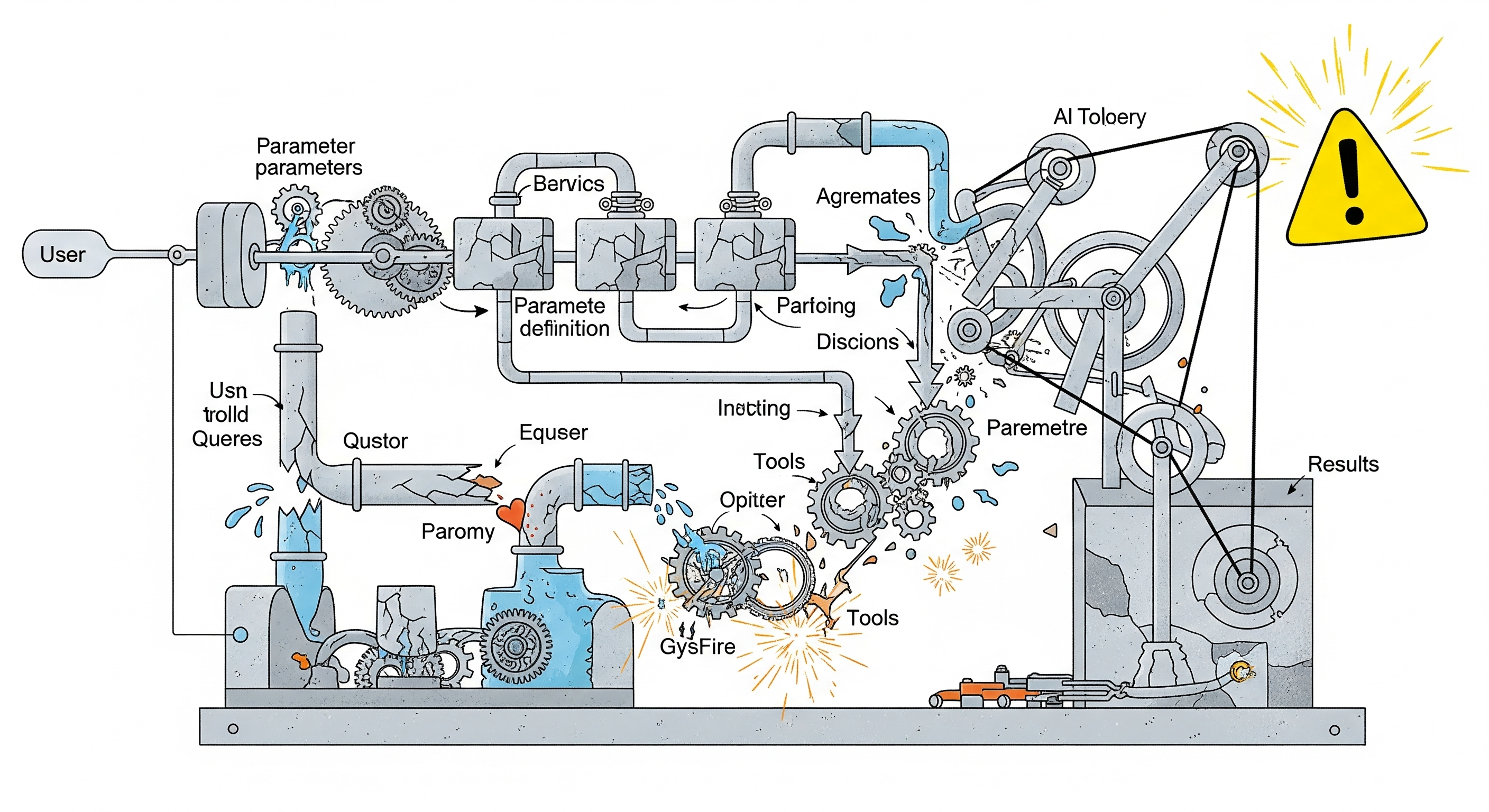

As LLM-powered agents become the backbone of many automation systems, their ability to reliably invoke external tools is now under the spotlight. Despite impressive multi-step reasoning, many such agents crumble in practice, not because they can’t plan, but because they fill in tool parameters incorrectly. One wrong parameter, one mismatched data type, and the whole chain collapses.

A new paper titled “Butterfly Effects in Toolchains” offers the first systematic taxonomy of these failures, exposing how parameter-filling errors propagate through tool-invoking agents. The findings aren’t just technical quirks—they speak to deep flaws in how current LLM systems are evaluated, built, and safeguarded.

Five Types of Failure — One Shared Weak Spot

Using grounded theory and real behavior traces from ToolLLaMA on the ToolBench dataset, the authors identify five distinct parameter failure modes:

| Failure Type | Description |
|---|---|
| Missing Information | Required parameters are left blank, making the tool invocation fail. |
| Redundant Information | Extra, user-irrelevant parameters constrain or distort tool results. |
| Hallucinated Names | Made-up parameter names not recognized by the tool (e.g., `query` instead of `q`). |
| Task Deviation | Parameters are technically valid but semantically misaligned with intent. |
| Specification Mismatch | Parameter formats/types violate tool spec, causing silent or hard failure. |
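Three of these failure modes are structural, so they can in principle be caught before a tool is ever called. The following is a minimal sketch of such a pre-flight check, not the paper's method. The `WEATHER_SPEC` dictionary, the `check_call` helper, and the problem labels are all hypothetical names chosen for illustration. Redundant information and task deviation depend on user intent, so a check like this cannot detect them.

```python
from typing import Any

# Hypothetical tool spec: parameter name -> (expected type, required?)
WEATHER_SPEC: dict[str, tuple[type, bool]] = {
    "q": (str, True),
    "days": (int, False),
}

def check_call(spec: dict[str, tuple[type, bool]], args: dict[str, Any]) -> list[str]:
    """Return the structural parameter problems found in one tool call."""
    problems = []
    # Missing information: a required parameter was not supplied.
    for name, (_expected_type, required) in spec.items():
        if required and name not in args:
            problems.append(f"missing:{name}")
    for name, value in args.items():
        if name not in spec:
            # Hallucinated name: the tool does not define this parameter.
            problems.append(f"unknown_name:{name}")
        elif not isinstance(value, spec[name][0]):
            # Specification mismatch: the value has the wrong type.
            problems.append(f"type_mismatch:{name}")
    return problems

# A call that uses `query` instead of `q` and passes `days` as a string.
problems = check_call(WEATHER_SPEC, {"query": "Paris", "days": "3"})
```

Here `problems` contains one entry for each of the three structural failure types, which makes it easy to log which kind of error entered the chain first.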

What’s striking is how often these errors occur even when models are fine-tuned: over 48% of complex toolchain queries suffer from some parameter failure.

Not All Errors Come From the Same Place

A key insight of the paper is that different failure types stem from different sources:

- Hallucinated names are inherent to the LLM and resistant to external correction.

- Missing information, redundant information, and specification mismatch often arise from poor tool documentation or low-quality tool return data.

- Task deviation is especially sensitive to user query perturbations and the structure of tool return data.

The researchers simulate 15 perturbation scenarios across three failure sources:

- ✍️ User Queries (e.g., removing or complicating parameters)

- 📄 Tool Documentation (e.g., wrong or missing type descriptions)

- 🔁 Tool Return Data (e.g., JSON with unexpected formats or naming conventions)

The results are as messy as real-world automation chains. Even minor changes—like switching from camelCase to snake_case—can subtly misalign expectations and trigger silent downstream bugs.
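One way to reduce this kind of naming drift is to normalize tool return data to a single key style before the agent sees it. The sketch below assumes the return data is already parsed JSON with string keys. The `to_snake` and `normalize_keys` helpers are hypothetical, and the sample payload is made up for illustration.

```python
import re
from typing import Any

def to_snake(key: str) -> str:
    """Convert a camelCase key to snake_case; snake_case keys pass through unchanged."""
    # Insert an underscore before any uppercase letter that follows a lowercase letter or digit.
    return re.sub(r"(?<=[a-z0-9])([A-Z])", r"_\1", key).lower()

def normalize_keys(data: Any) -> Any:
    """Recursively rewrite every dictionary key in parsed JSON to snake_case."""
    if isinstance(data, dict):
        return {to_snake(k): normalize_keys(v) for k, v in data.items()}
    if isinstance(data, list):
        return [normalize_keys(item) for item in data]
    return data

# Made-up tool return payload mixing nesting and camelCase keys.
raw = {"cityName": "Paris", "forecastDays": [{"maxTempC": 21}]}
clean = normalize_keys(raw)
```

Note that two distinct keys such as `cityName` and `city_name` would collide after normalization, so a production version would need to detect and report that case rather than silently overwrite a value.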

A Debugger’s Nightmare: The Transfer Effect

Most troubling is the transfer effect. The team finds that failures aren’t isolated:

Redundant parameters often lead to task deviation. Task deviation then triggers hallucinations. Soon, your model is chasing its own tail.

This compounding makes LLM agent debugging profoundly difficult. Once one error enters the chain, others tend to snowball in opaque ways. It’s a classic butterfly effect—small inconsistencies ripple into full-blown task failure.

So What Can Builders Do?

The authors offer practical and urgent suggestions:

- Enforce stricter tool documentation schemas. Missing or incorrect type declarations are associated with the highest failure rates.

- Standardize tool return formats using consistent keys and naming styles. Ambiguous or inconsistent JSON outputs are fertile ground for failure.

- Expose and template parameter expectations at the user interface layer to reduce hallucinations and deviation.

- Design for failure propagation, not just failure isolation. Consider runtime error feedback loops, parameter inference fallback, and multi-turn self-correction heuristics.
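The runtime error feedback loop in the last suggestion can be sketched as follows. This is one possible shape under stated assumptions, not the authors' implementation: `call_with_feedback`, `fake_model`, and `validate` are hypothetical, and `fake_model` is a stand-in for a real LLM call that receives the previous attempt's errors.

```python
from typing import Any, Callable

def call_with_feedback(
    propose_args: Callable[[list[str]], dict[str, Any]],
    validate: Callable[[dict[str, Any]], list[str]],
    run_tool: Callable[[dict[str, Any]], Any],
    max_attempts: int = 3,
) -> Any:
    """Validate proposed arguments and feed errors back until they pass or attempts run out."""
    feedback: list[str] = []
    for _ in range(max_attempts):
        args = propose_args(feedback)   # the model sees errors from the last attempt
        feedback = validate(args)
        if not feedback:
            return run_tool(args)       # only call the tool with validated arguments
    raise ValueError(f"gave up after {max_attempts} attempts: {feedback}")

def fake_model(feedback: list[str]) -> dict[str, Any]:
    # Stand-in for an LLM: it fixes the parameter name once it is told about the error.
    return {"q": "Paris"} if feedback else {"query": "Paris"}

def validate(args: dict[str, Any]) -> list[str]:
    # Hypothetical tool that accepts exactly one parameter, `q`.
    problems = [] if "q" in args else ["missing:q"]
    problems += [f"unknown_name:{k}" for k in args if k != "q"]
    return problems

result = call_with_feedback(fake_model, validate, lambda a: f"weather for {a['q']}")
```

Capping the number of attempts matters here: given the transfer effect described above, an unbounded retry loop could let one bad parameter cascade into further errors instead of surfacing the failure.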

These steps hint at a broader architectural shift: from focusing on LLM intelligence to building resilient agent infrastructures.

Implications: A Shift from LLM-Centric to Chain-Aware Thinking

This paper belongs in the same league as earlier works like ToolEval and ToolLLM, but it digs deeper into the glue that binds tools together. It calls for a paradigm shift—from viewing LLMs as monolithic solvers to seeing the tool-agent loop as a fragile ecosystem, where:

- Information loss at any point (input, tool docs, return JSON) can derail the outcome.

- LLM hallucinations are only half the problem. The other half is brittle tool design.

Final Thought

Anyone building an LLM agent platform should treat this taxonomy as a minimum checklist. Failure isn’t just about bad outputs—it’s about untraceable failure chains. And those are what break trust in automation.

Cognaptus: Automate the Present, Incubate the Future