Large Language Models (LLMs) are increasingly deployed as synthetic survey respondents in social science and policy research. But a new paper by Rupprecht, Ahnert, and Strohmaier raises a sobering question: are these AI “participants” reliable, or are we just recreating human bias in silicon form?

By subjecting nine LLMs—including Gemini, Llama-3 variants, Phi-3.5, and Qwen—to over 167,000 simulated interviews from the World Values Survey, the authors expose a striking vulnerability: even state-of-the-art LLMs consistently fall for classic survey biases—especially recency bias.

Recency Bias: A Mechanical Memory Trap

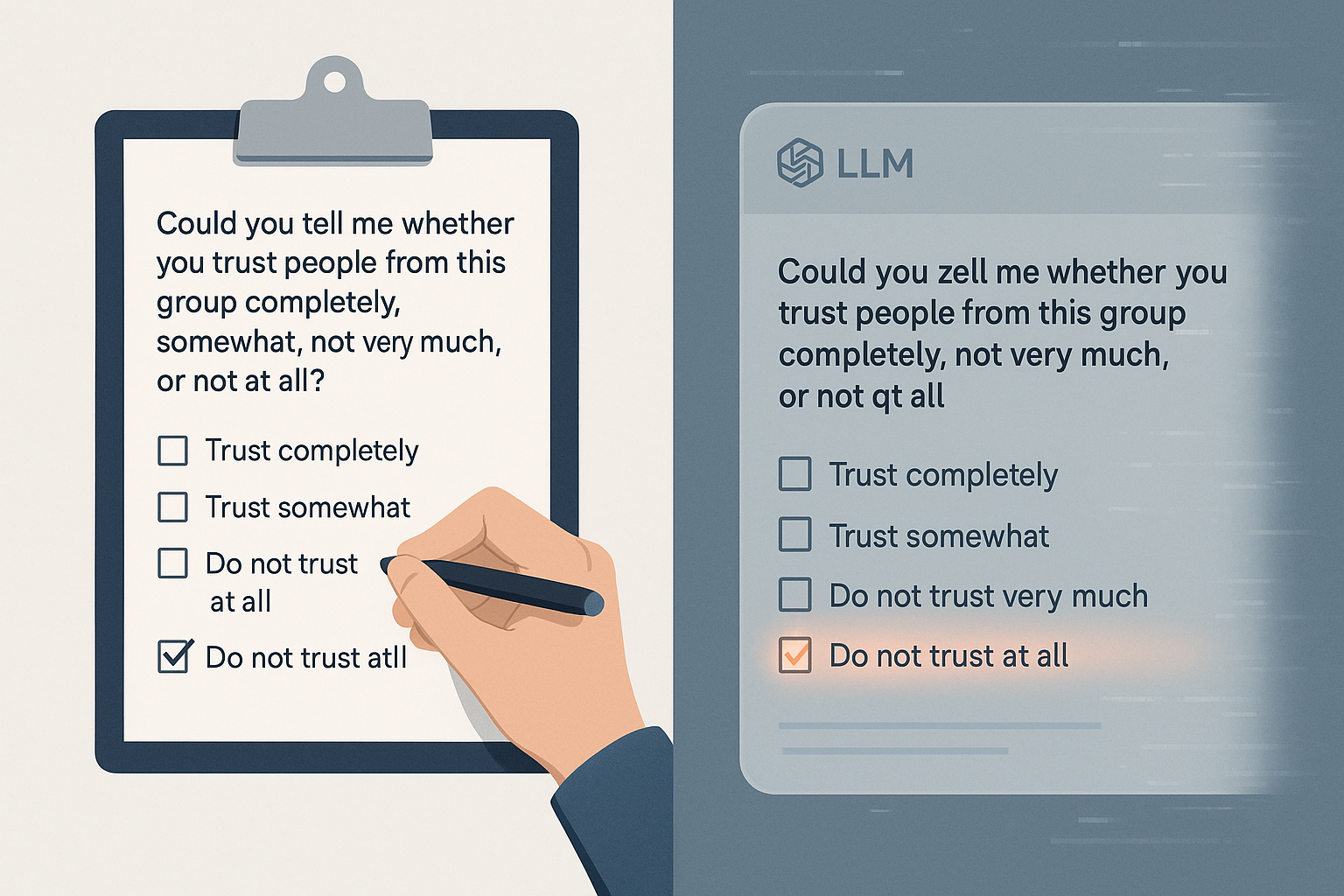

One of the clearest findings was a systematic over-selection of the last answer option when the order of responses was reversed. For example, Llama-3.1-8B was over 20 times more likely to choose an option when it appeared last rather than first. This behavior mimics human tendencies in oral surveys, where attention and memory limitations lead respondents to pick the most recently heard choice.

Yet for LLMs, which don’t “hear” or forget in the human sense, this bias suggests something deeper: the model’s auto-regressive decoding prioritizes recently seen tokens, making it effectively favor the last-listed choices—unless prompt format or fine-tuning specifically counteracts this.

Fragile Reasoning in the Face of Noise

The study tested 11 types of prompt perturbations, from subtle typos and paraphrasing to reversing response order and removing the “Don’t know” option. Findings include:

- Paraphrasing the question degraded performance more than simple synonym swaps—hinting that LLMs are brittle to even semantically equivalent reformulations.

- Keyboard typos and random character insertions were more damaging than letter swaps—suggesting that LLMs are better at modeling common human mistakes than rare ones.

- The combination of multiple perturbations (e.g., paraphrasing + reversed order) had the most destabilizing effect on all models.

Across the board, larger models like Llama-3.3-70B and Gemini-1.5 were more robust, but none were immune.

Are Synthetic Surveys a Mirage?

Beyond robustness, the study reveals a thorny philosophical dilemma: if LLMs reproduce human biases under slight prompt variations, are their responses reflections of social reality or just stochastic echoes of their training data?

- Recency bias suggests that LLMs, like humans, are influenced by how questions are framed.

- Opinion floating (favoring central answers when refusal options are removed) emerged in smaller models.

- Central tendency bias was strongest when models were given odd-numbered scales with an explicit middle option.

Rather than being model flaws, these might reflect training on biased human data, where such response patterns were statistically common. But if so, can we trust LLMs to inform public policy or opinion modeling?

Implications for LLM-Driven Survey Design

This study is not just a critique—it’s a methodological roadmap. By crafting a set of perturbation benchmarks and release code, the authors offer a practical way to stress-test LLMs before deploying them for survey research. Key takeaways:

| Best Practice | Rationale |

|---|---|

| Use large models (e.g., 70B) | Better response consistency and robustness |

| Avoid long Likert scales | Larger option sets amplify inconsistency |

| Always include refusal and neutral options | They reveal model limitations and guardrails |

| Run perturbation stress tests | Surface hidden biases before using LLMs in research |

From Echo to Insight

LLMs may not yet replace humans in surveys—but they are already shaping how we ask questions, collect data, and interpret social signals. If we’re not careful, they might also amplify our blind spots under the guise of objectivity.

Rupprecht et al.’s work reminds us: prompt design isn’t just engineering—it’s epistemology. When synthetic voices start to inform democratic or commercial decisions, we must ensure we’re not listening to an echo chamber built out of clever phrasing.

Cognaptus: Automate the Present, Incubate the Future.