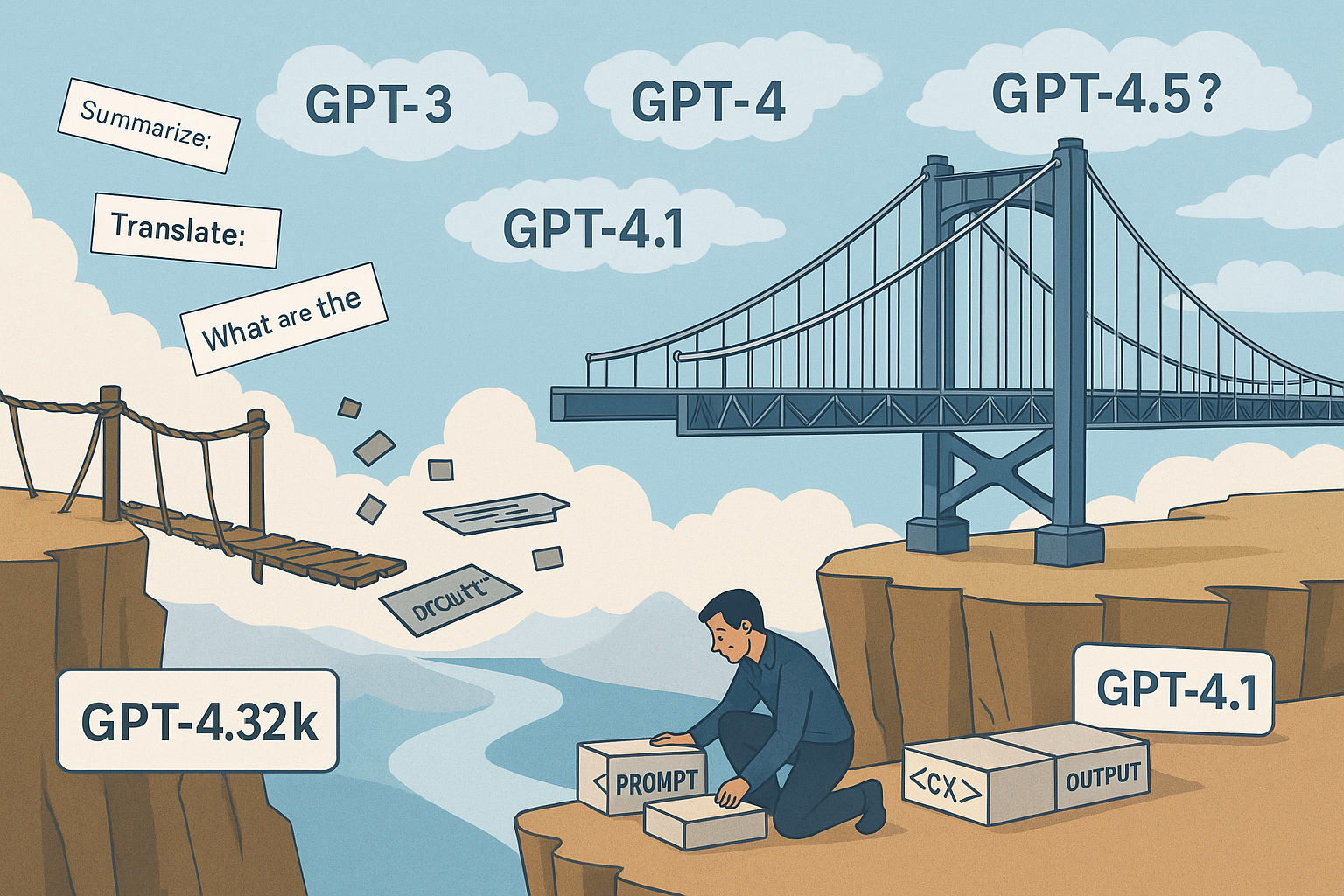

If you’re running a GenAI-powered application today, you’re likely sitting on a ticking time bomb. It isn’t your codebase or infrastructure — it’s your prompts. As Large Language Models (LLMs) evolve at breakneck speed, your carefully tuned prompts degrade silently, causing once-reliable applications to behave erratically. The case of Tursio, an enterprise search tool, makes one thing painfully clear: prompt migration is no longer optional — it’s survival.

The Hidden Cost of Progress

In 2023, Tursio ran reliably on GPT-4-32k. By mid-2025, it had migrated twice: first to GPT-4.5-preview, then to GPT-4.1. Each model came with its own quirks:

- GPT-4.1 was more literal, refusing to infer obvious semantics unless instructed.

- GPT-4.5-preview added noise, returning human-readable messages instead of parseable outputs.

Even with “stable” (unchanged) prompts, regression test pass rates dropped from 100% on GPT-4-32k to 98% on GPT-4.1 and 97.3% on GPT-4.5-preview. This prompted Tursio’s team to develop an in-house framework for prompt migration, anchored on structured prompting and rigorous testing.

What Is Prompt Migration?

Prompt migration is the process of adapting prompts to new model behaviors introduced by successive LLM updates. This isn’t simple prompt tuning — it’s a structured engineering process involving:

- Failure Analysis

- Prompt Refactoring

- Migration Testbed Creation

- Validation and Regression Testing

Tursio’s prompt migration pipeline reduced what was once a months-long trial-and-error process to a repeatable multi-week cycle, guided by prompting guides and model-specific documentation.
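To make the failure-analysis step concrete, here is a minimal Python sketch of how failed test outputs might be sorted into coarse buckets. The bucket names, the `classify_failure` helper, and the sample filter strings are all hypothetical illustrations; Tursio's actual tooling is not described in this much detail.

```python
from collections import Counter


def classify_failure(expected: str, actual: str) -> str:
    """Assign one test result to a coarse, hypothetical bucket."""
    if actual.strip() == expected.strip():
        return "pass"
    if not actual.strip().startswith("filters:"):
        # The model answered with prose instead of the parseable format.
        return "format_noise"
    # The format is right but the extracted filters differ.
    return "wrong_content"


def summarize(results: list[tuple[str, str]]) -> Counter:
    """Count how many (expected, actual) pairs land in each bucket."""
    return Counter(classify_failure(exp, act) for exp, act in results)


# Made-up sample results, for illustration only.
results = [
    ("filters: [region = EU]", "filters: [region = EU]"),
    ("filters: [region = EU]", "Sure! The filter I found is region = EU."),
    ("filters: [year = 2024]", "filters: []"),
]
summary = summarize(results)
print(dict(summary))
```

Separating formatting failures from content failures matters because they call for different fixes: the first points at format constraints in the prompt, the second at missing inference rules.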

Anatomy of a Migrated Prompt

Here’s a before-and-after snapshot for Tursio’s SQL filter extraction task:

| Feature | Old Prompt (GPT-4-32k) | New Prompt (GPT-4.1/4.5) |
|---|---|---|
| Instruction Precision | “Extract filters from input” | “Output must strictly follow: filters: […]” |
| Format Constraints | Implicit formatting via examples | Explicit square brackets, commas, etc. |
| Inference Expectation | Implicit (relies on model guesswork) | Explicit inference rules spelled out |
| Error Handling | None | “Return filters: []” if no filters found |

This shift reflects a deeper change: models today are less forgiving, more literal, and require developers to think like compilers, not conversationalists.
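One way to act on the "think like a compiler" idea is to pair an explicit prompt with a parser that rejects anything outside the declared format. The sketch below is an assumption-laden illustration: the wording of `PROMPT_TEMPLATE`, the `parse_filters` helper, and the example filter syntax are invented here and are not Tursio's actual prompt.

```python
import re

# Hypothetical prompt wording that spells out format and error handling.
PROMPT_TEMPLATE = (
    "Extract the filters from the user query.\n"
    "Output must strictly follow: filters: [<filter>, <filter>, ...]\n"
    "If no filters are found, return exactly: filters: []\n"
    "Query: {query}"
)

# Accept only a single line of the form "filters: [ ... ]".
_OUTPUT_RE = re.compile(r"^filters:\s*\[(.*)\]$")


def parse_filters(output: str) -> list[str]:
    """Parse a model reply, raising ValueError on any format deviation."""
    match = _OUTPUT_RE.match(output.strip())
    if match is None:
        raise ValueError(f"unparseable model output: {output!r}")
    body = match.group(1).strip()
    if not body:
        return []  # the explicit "no filters found" case
    # Naive comma split: assumes filter values contain no commas.
    return [item.strip() for item in body.split(",")]


print(parse_filters("filters: [region = EU, year = 2024]"))
print(parse_filters("filters: []"))
```

A strict parser like this turns the "human-readable message instead of parseable output" failure into an immediate, countable error rather than a silent downstream bug.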

Why Regression Tests Aren’t Enough

Most GenAI teams maintain a regression test suite to catch breaking changes. But Tursio found such suites insufficient once models started interpreting the same prompts differently. Their solution? A migration testbed designed to:

- Categorize queries by complexity (Easy / Moderate / Hard)

- Include natural language variations and failed edge cases

- Benchmark prompt robustness before full rollout

This preemptive testing helps decouple model exploration from application deployment, reducing friction between AI and product teams.
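A testbed of this kind could be sketched as a list of tiered cases plus a per-tier pass rate, as below. Everything here is hypothetical: the `MigrationCase` type, the `pass_rate_by_tier` function, and the `fake_model` stub (which stands in for a real model call) are illustrative names, not part of Tursio's framework.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MigrationCase:
    query: str
    expected: str
    tier: str  # "easy", "moderate", or "hard"


def pass_rate_by_tier(
    cases: list[MigrationCase], run_prompt: Callable[[str], str]
) -> dict[str, float]:
    """Run every case through run_prompt and report the pass rate per tier."""
    passed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for case in cases:
        total[case.tier] += 1
        if run_prompt(case.query).strip() == case.expected:
            passed[case.tier] += 1
    return {tier: passed[tier] / total[tier] for tier in total}


def fake_model(query: str) -> str:
    """Stub standing in for a call to the candidate model."""
    canned = {"sales in EU": "filters: [region = EU]"}
    return canned.get(query, "filters: []")


cases = [
    MigrationCase("sales in EU", "filters: [region = EU]", "easy"),
    MigrationCase("show everything", "filters: []", "easy"),
    MigrationCase("EU sales, not 2024", "filters: [region = EU, year != 2024]", "hard"),
]
rates = pass_rate_by_tier(cases, fake_model)
print(rates)
```

Reporting by tier rather than as a single number shows whether a new model breaks only the hard, inference-heavy queries or the easy ones as well.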

The Bigger Picture: Prompt Lifecycle Management

Tursio’s most valuable insight isn’t just the how — it’s the when. Prompt maintenance is no longer a one-time activity. It needs to be part of your CI/CD pipeline, alongside code and model versioning.

Here’s how Tursio frames it:

Model Release ─> Prompting Guide ─> Migration Testbed ─> Prompt Refactor ─> App Validation ─> Production

This lifecycle view elevates prompting from a hacky craft to a mature software discipline.
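If prompt validation is wired into CI/CD, one simple form it might take is a gate that blocks promotion when a candidate model's pass rate falls below the current baseline. The sketch below assumes a hypothetical `migration_gate` function and a `tolerance` parameter; the source does not specify how Tursio's validation step decides.

```python
def migration_gate(
    baseline_rate: float, candidate_rate: float, tolerance: float = 0.0
) -> bool:
    """Return True if the candidate may proceed to app validation.

    tolerance is a hypothetical allowance for an accepted drop in pass rate.
    """
    return candidate_rate >= baseline_rate - tolerance


def report(model: str, baseline_rate: float, candidate_rate: float) -> str:
    """Build a one-line CI message for a candidate model."""
    verdict = "PASS" if migration_gate(baseline_rate, candidate_rate) else "BLOCK"
    return f"{verdict}: {model} at {candidate_rate:.1%} vs baseline {baseline_rate:.1%}"


# Pass rates quoted earlier in the article, used as sample inputs.
print(report("GPT-4.1", 1.00, 0.98))
print(report("GPT-4.5-preview", 1.00, 0.973))
```

With a zero tolerance, both migrations described above would be blocked until the prompts are refactored, which is the behavior a lifecycle gate is meant to enforce.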

Prompt Engineering vs Prompt Management

Engineering is what you do to make a prompt work. Management is what you do to keep it working as models evolve.

Tursio’s journey highlights a growing skills gap. Most teams have prompt engineers. Few have prompt managers. But as GenAI apps proliferate, this operational layer will become mission-critical.

Final Thoughts: Is Your LLM a Smart Slave or a Dumb Master?

One of Tursio’s engineers posed a provocative question: if newer LLMs require more detailed prompts and infer less on their own, are we really making progress? The paper concludes with a sobering reflection: LLMs are getting smarter on benchmarks but less flexible in practice.

In a world where prompt brittleness can bring down enterprise pipelines, investing in prompt lifecycle infrastructure is no longer a luxury. It’s table stakes.

Cognaptus: Automate the Present, Incubate the Future.