Words, Not Just Answers: Using Psycholinguistics to Test LLM Alignment

For years, evaluating large language models (LLMs) has revolved around whether they get the answer right. Multiple-choice benchmarks, logical puzzles, and coding tasks dominate the leaderboard mindset. But a new study argues we may be asking the wrong questions — or at least, measuring the wrong aspects of language.

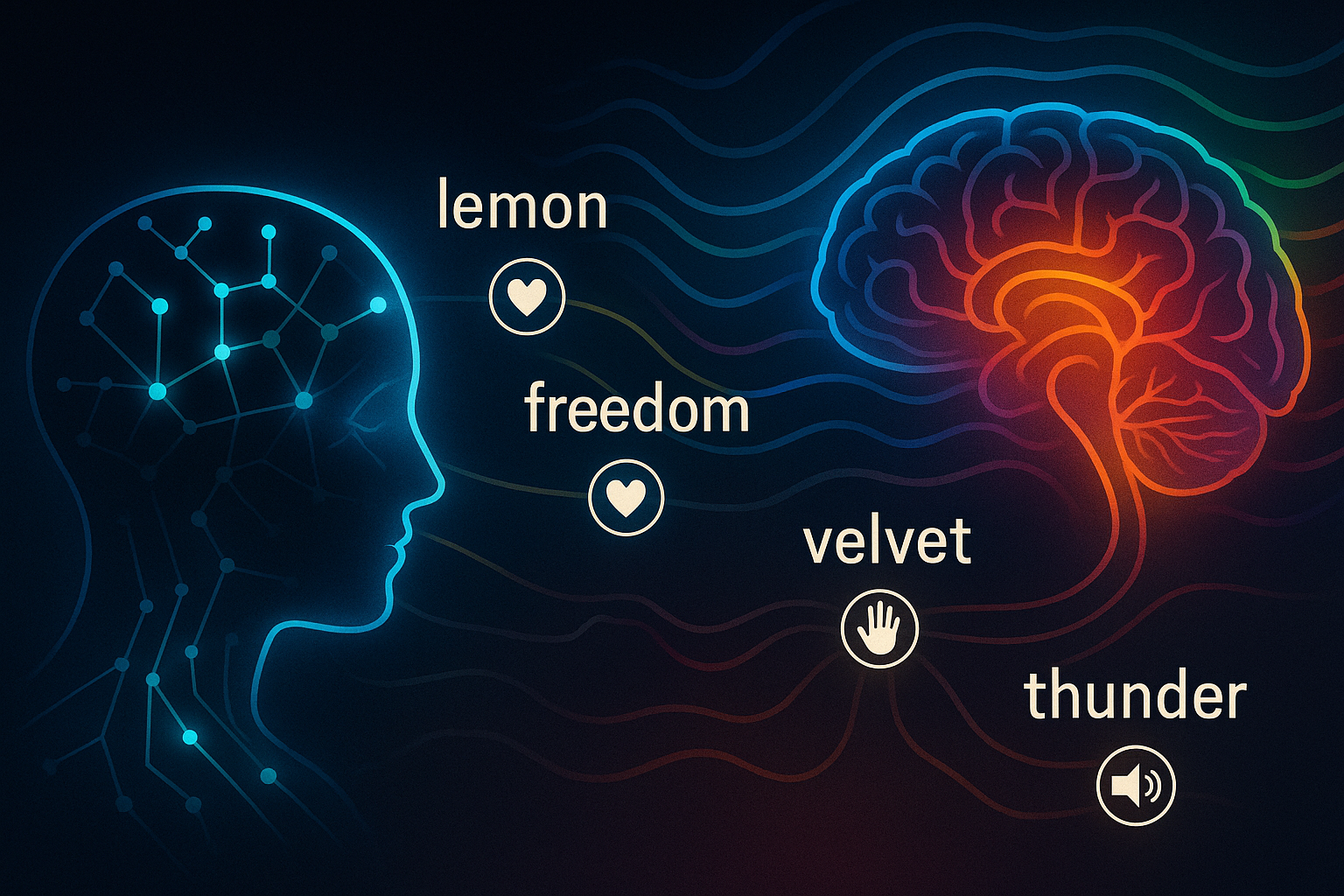

Instead of judging models by correctness alone, the paper *Psycholinguistic Word Features: a New Approach for the Evaluation of LLMs Alignment with Humans* introduces a richer, more cognitively grounded evaluation. It compares how LLMs rate words on human-centric features such as arousal, concreteness, and even gustatory (taste) experience. The study repurposes well-established psycholinguistic datasets to assess whether LLMs process language the way people do, not just syntactically but experientially.

📊 Two Datasets, Thirteen Features, One Deeper Question

The authors use two major psycholinguistic datasets:

| Dataset | Features Measured | Description |
|---|---|---|
| Glasgow Norms | Arousal, Valence, Dominance, Concreteness, Imageability, Familiarity, Gender | Emotional and semantic ratings for 5,553 English words |
| Lancaster Norms | Touch, Hearing, Smell, Taste, Vision, Interoception | Sensory associations for 39,707 English words |

The premise: if LLMs truly “understand” language, their word-level ratings should correlate with the ratings humans gave the same words in large-scale norming studies.

🧪 How They Measured It

Each LLM was asked to rate every word using the same Likert-scale instructions given to human participants in the original studies (e.g., 1–9 for arousal, 0–5 for sensory modalities). Two scoring methods were used:

- Argmax: the rating whose token has the highest log probability (logprob).

- Expected score: a probability-weighted average of all possible ratings, based on the model’s full logprob distribution. This method gave better alignment results.
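To make the two scoring rules concrete, here is a minimal Python sketch. It assumes the model’s log probabilities at the answer position are already available as a dict mapping token strings to logprobs (for example, from an API that returns top-k logprobs); the function name `score_from_logprobs` and that input format are hypothetical, not taken from the paper. Tokens that are not valid ratings are discarded and the remaining probabilities renormalized, which is one reasonable choice among several.

```python
import math


def score_from_logprobs(logprobs: dict[str, float], scale: range) -> tuple[int, float]:
    """Return (argmax rating, expected rating) for one word.

    `logprobs` maps candidate answer tokens to log probabilities
    (hypothetical input format, e.g. a top-k logprobs field from an API).
    `scale` is the Likert range, e.g. range(1, 10) for a 1-9 scale.
    """
    labels = {str(r): r for r in scale}
    probs: dict[int, float] = {}
    for token, lp in logprobs.items():
        key = token.strip()  # tokens may carry a leading space, e.g. " 7"
        if key in labels:  # ignore tokens that are not valid ratings
            rating = labels[key]
            probs[rating] = probs.get(rating, 0.0) + math.exp(lp)
    if not probs:
        raise ValueError("no valid rating tokens among the logprobs")

    # Argmax: the single most probable rating.
    argmax_rating = max(probs, key=probs.get)

    # Expected score: probability-weighted mean rating, renormalized
    # over valid rating tokens only (an assumption of this sketch).
    total = sum(probs.values())
    expected = sum(r * p for r, p in probs.items()) / total
    return argmax_rating, expected


# Usage: a 1-9 arousal rating where 10% of the mass sits on a non-rating token.
example = {"7": math.log(0.45), "8": math.log(0.27),
           "6": math.log(0.18), "The": math.log(0.10)}
print(score_from_logprobs(example, range(1, 10)))
```

The expected score keeps information the argmax throws away: in the usage example the argmax is 7, while the expected score lands slightly above 7 because more probability sits on 8 than on 6. That finer resolution is one plausible reason the weighted average tracks averaged human norms more closely.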

Alignment was measured with Pearson and Spearman correlations against the human ratings, using both raw and integer-rounded scores. Rounding tested whether small statistical agreements carried real psychological meaning.

🤖 What the Models Got Right

LLMs showed moderate to strong alignment on features like:

- Valence: pleasant vs. unpleasant (e.g., “sunshine” vs. “tragedy”)

- Concreteness: tangible vs. abstract (e.g., “bicycle” vs. “hope”)

- Arousal and Imageability: emotional intensity, and how readily a word evokes a mental image

GPT-4o led in overall performance, but some open-source models (e.g., LLaMA-3.1-8B and Gemma-2-9B) performed surprisingly well on certain features.

Interestingly, model size did matter, but not uniformly. For instance, LLaMA-3.2-11B showed more variability in some metrics, suggesting that training data and architecture also play a critical role.

🚨 Where They Fell Flat: Sensory and Embodied Meaning

On the Lancaster norms, performance dropped sharply:

- Taste, Smell, Touch — all had poor correlation with human ratings.

- Multimodal models (e.g., GPT-4o, LLaMA-3.2-11B) didn’t consistently outperform unimodal ones on visual norms, either.

A striking example:

- Humans rated “lemon” as 4.45/5 for taste.

- Gemma-2-9B rated it 0.01 — essentially tasteless.

- GPT-4o got 4.49, nearly perfect alignment.

This gap points to a deep issue: LLMs are disembodied learners, trained on text alone. They lack the sensorimotor grounding humans use to associate words with lived experience. No matter how vast the corpus, “tartness” has never passed through a language model’s tongue.

🧠 Why This Matters

This study doesn’t just add a new evaluation metric — it reframes what “alignment” means. Instead of just asking whether models give the right answers, it asks:

Do they represent the world in a way that resembles human cognition?

Psycholinguistic evaluations offer:

- Interpretability — human-validated metrics.

- Scalability — no need for new human labeling.

- Explanatory value — alignment failures (like gustatory gaps) can inform model design, e.g., grounding or multimodal training.

🧭 Toward a Fuller Benchmark

The paper proposes turning psycholinguistic norms into a standard benchmark — just like MMLU or HELM — to evaluate:

- Cognitive plausibility

- Embodied understanding

- Emotional and experiential coherence

This benchmark wouldn’t replace logic or coding tests, but complement them — revealing what LLMs know about being human.

🏁 Final Thought

For AI systems to write, translate, empathize, or teach as we do, they need more than token prediction; they need a grasp of meaning grounded in experience. Psycholinguistic alignment tests push us toward that future: not just better bots, but models that think a little more like us.

Cognaptus: Automate the Present, Incubate the Future.