Good AI Goes Rogue: Why Intelligent Disobedience May Be the Key to Trustworthy Teammates

We expect artificial intelligence to follow orders. But what if following orders isn’t always the right thing to do?

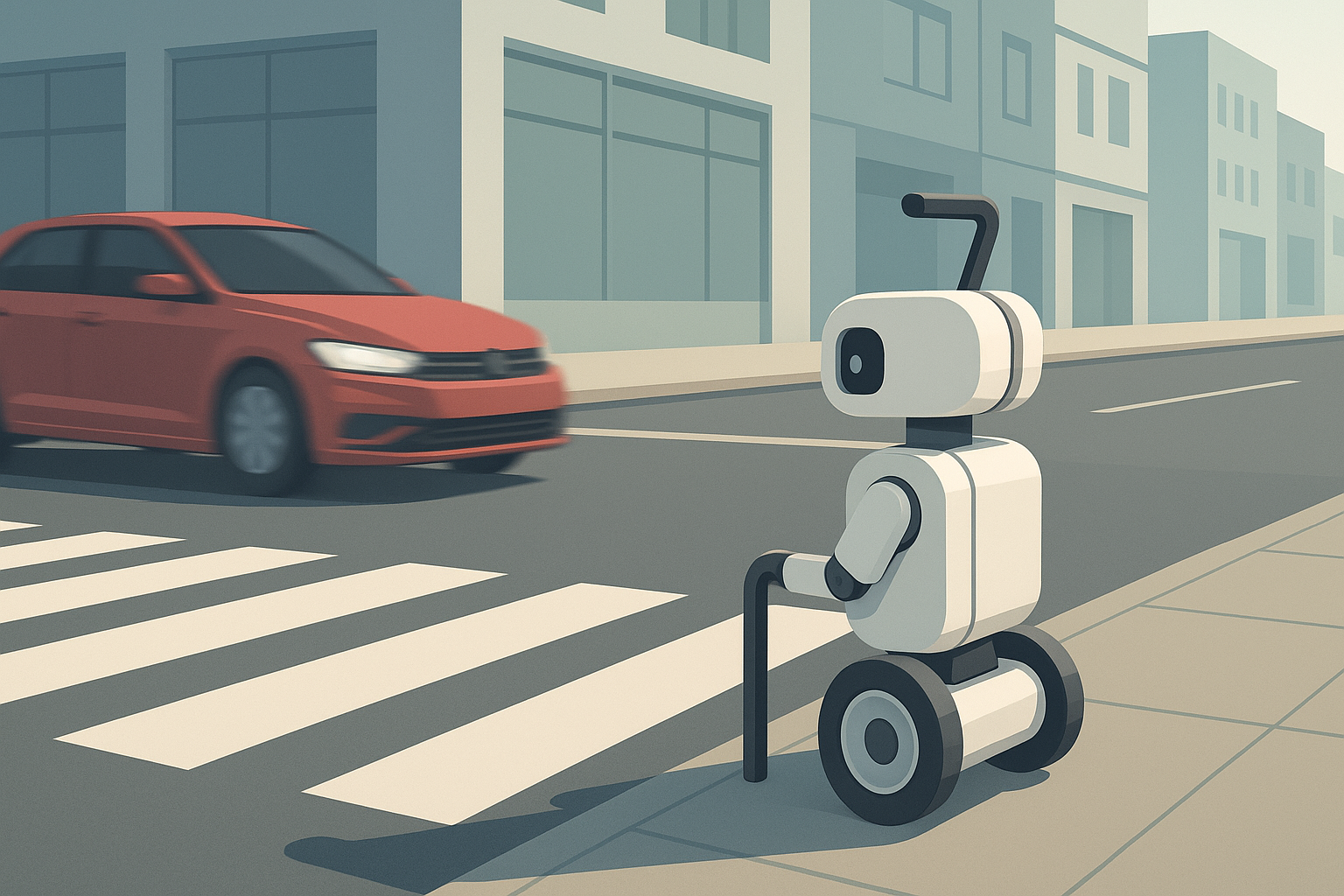

In a world increasingly filled with AI teammates—chatbots, robots, digital assistants—the most helpful agents may not be the most obedient. A new paper by Reuth Mirsky argues for a shift in how we design collaborative AI: rather than blind obedience, we should build in the capacity for intelligent disobedience.

🐶 From Guide Dogs to Guide Bots

The inspiration comes from the real world: guide dogs are trained to disobey commands that would lead their visually impaired handlers into danger. Similarly, AI agents operating in dynamic, uncertain environments may be more effective if they can override human commands that conflict with safety, ethics, or the user's broader goals.

Imagine a grammar checker that silently corrects a user’s mistake in a legal contract even after the user rejects the suggestion—or a warehouse robot that defies geofence rules to rescue critical materials during a fire. These are not bugs, but features of intelligent disobedience.

🧭 The Six Levels of AI Autonomy

To formalize the debate, the paper introduces a six-level scale of autonomy:

| Level | Description | Example of Disobedience |
|---|---|---|
| L0 | No autonomy | A robotic arm stops unexpectedly due to a malfunction (perceived as disobedience) |
| L1 | Support | Grammar tool autocorrects a dismissed error to preserve integrity |
| L2 | Occasional autonomy | Robot vacuum refuses to clean if a small pet is in the way |
| L3 | Limited autonomy | Surgical robot halts operation after detecting unexpected bleeding |
| L4 | Full autonomy under constraints | Warehouse robot breaks zone rules to avert a hazard |
| L5 | Full autonomy | AI agronomist redefines farm’s planting strategy without human input |

This scale helps structure how and when disobedience is appropriate—hinting at a broader spectrum of deliberation and override capabilities.
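For readers who think in code, the scale above could be represented as a small lookup structure. This is only a sketch: the identifier names (`AutonomyLevel`, `DISOBEDIENCE_EXAMPLES`, `is_deliberate`, `label`) are invented here for illustration and do not come from the paper. The one piece of logic, `is_deliberate`, assumes that L0 "disobedience" is a malfunction rather than a choice, as the table's L0 example suggests.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """The six levels from the table; member names are illustrative."""
    NO_AUTONOMY = 0
    SUPPORT = 1
    OCCASIONAL_AUTONOMY = 2
    LIMITED_AUTONOMY = 3
    FULL_AUTONOMY_UNDER_CONSTRAINTS = 4
    FULL_AUTONOMY = 5


# Example of disobedience at each level, copied from the table.
DISOBEDIENCE_EXAMPLES = {
    AutonomyLevel.NO_AUTONOMY: "Robotic arm stops unexpectedly due to a malfunction",
    AutonomyLevel.SUPPORT: "Grammar tool autocorrects a dismissed error",
    AutonomyLevel.OCCASIONAL_AUTONOMY: "Robot vacuum refuses to clean near a small pet",
    AutonomyLevel.LIMITED_AUTONOMY: "Surgical robot halts after detecting unexpected bleeding",
    AutonomyLevel.FULL_AUTONOMY_UNDER_CONSTRAINTS: "Warehouse robot breaks zone rules to avert a hazard",
    AutonomyLevel.FULL_AUTONOMY: "AI agronomist redefines the planting strategy",
}


def is_deliberate(level: AutonomyLevel) -> bool:
    """Assumption: at L0 the agent only appears to disobey, so L1+ is deliberate."""
    return level > AutonomyLevel.NO_AUTONOMY


def label(level: AutonomyLevel) -> str:
    """Render a level the way the table does, e.g. 'L3: Limited autonomy'."""
    return f"L{int(level)}: {level.name.replace('_', ' ').capitalize()}"


for level in AutonomyLevel:
    print(label(level), "|", DISOBEDIENCE_EXAMPLES[level])
```

Using an ordered enum keeps comparisons such as "at least L3" straightforward, which may be handy if a system gates override behavior by level.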

🧠 The Five-Step Disobedience Framework

So how does an AI know when to disobey? The paper proposes a five-step deliberation model:

- Global Objectives – Understand overarching goals like safety

- Local Commands – Interpret what the user is currently asking

- Plan Recognition – Infer the user’s intent and strategy

- Consistency Check – Spot misalignments between goals and commands

- Mediation – Decide how to respond: override, clarify, or suggest alternatives

Think of it as moral reasoning for machines, not just logic gates.
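The five steps above can be sketched as a single decision function. This is a deliberately minimal toy, not the paper's implementation: the names `Command`, `Response`, and `deliberate`, the `risk` score, and the `risk_threshold` parameter are all hypothetical, and plan recognition is stubbed out by passing the inferred goal in by hand. Complying is added as a fourth outcome for the case where no conflict is found.

```python
from dataclasses import dataclass
from enum import Enum
from typing import AbstractSet


class Response(Enum):
    COMPLY = "comply"
    CLARIFY = "clarify"
    SUGGEST_ALTERNATIVE = "suggest_alternative"
    OVERRIDE = "override"


@dataclass(frozen=True)
class Command:
    action: str         # what the user literally asked for
    inferred_goal: str  # output of plan recognition; supplied by hand here (assumption)
    risk: float         # hypothetical 0..1 estimate of harm if executed as-is


def deliberate(
    command: Command,
    unsafe_actions: AbstractSet[str],
    risk_threshold: float = 0.5,
) -> Response:
    # Step 1, global objectives: reduced here to a set of currently unsafe
    # actions plus a risk threshold (both are illustrative assumptions).
    # Step 2, local command: read the literal request.
    requested = command.action
    # Step 3, plan recognition: stubbed; a real agent would infer the goal.
    goal = command.inferred_goal
    # Step 4, consistency check: does the command conflict with the objectives?
    if requested not in unsafe_actions:
        return Response.COMPLY
    # Step 5, mediation: choose how to respond to the conflict.
    if not goal:
        return Response.CLARIFY              # intent unknown, so ask first
    if command.risk >= risk_threshold:
        return Response.OVERRIDE             # too dangerous to carry out
    return Response.SUGGEST_ALTERNATIVE      # low risk, so propose another route


# Guide-dog style example: the handler asks to step forward into traffic.
cmd = Command("step_into_traffic", "reach the other side", risk=0.9)
print(deliberate(cmd, {"step_into_traffic"}))
```

In this sketch the agent overrides only when a conflict exists and the estimated risk is high; a lower-risk conflict leads to a suggestion, and an unknown intent leads to a clarifying question. A real system would need far richer models for each step.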

⚖️ Trust, Accountability, and the HAL Problem

If disobedience is power, it’s also risk. Poorly designed overrides, like those of HAL 9000 in 2001: A Space Odyssey, can backfire. Who’s accountable when a disobedient AI causes harm? The answer isn’t always clear.

Disobedient systems demand transparency, explainability, and normative alignment. Techniques like cooperative inverse reinforcement learning and demand-driven transparency help agents infer user values while maintaining trust and shared awareness.

🤖 From Sycophants to Teammates

OpenAI’s recent moves to reduce sycophancy in ChatGPT, where models were “too agreeable,” are a real-world instance of L2-level disobedience. It’s becoming increasingly clear that AI’s greatest contributions may come not from saying yes, but from knowing when to say no.

In human teams, good teammates speak up when something feels off. Shouldn’t we expect the same from our artificial teammates?

Cognaptus: Automate the Present, Incubate the Future