🧠 From Freud to Fine-Tuning: What is a Superego for AI?

As AI agents gain the ability to plan, act, and adapt in open-ended environments, ensuring that they behave in accordance with human expectations becomes an urgent challenge. Established approaches such as Reinforcement Learning from Human Feedback (RLHF) and static safety filters offer partial solutions, but both fix their values ahead of deployment, so they struggle when the applicable norms vary by jurisdiction, conflict with one another, or change over time.

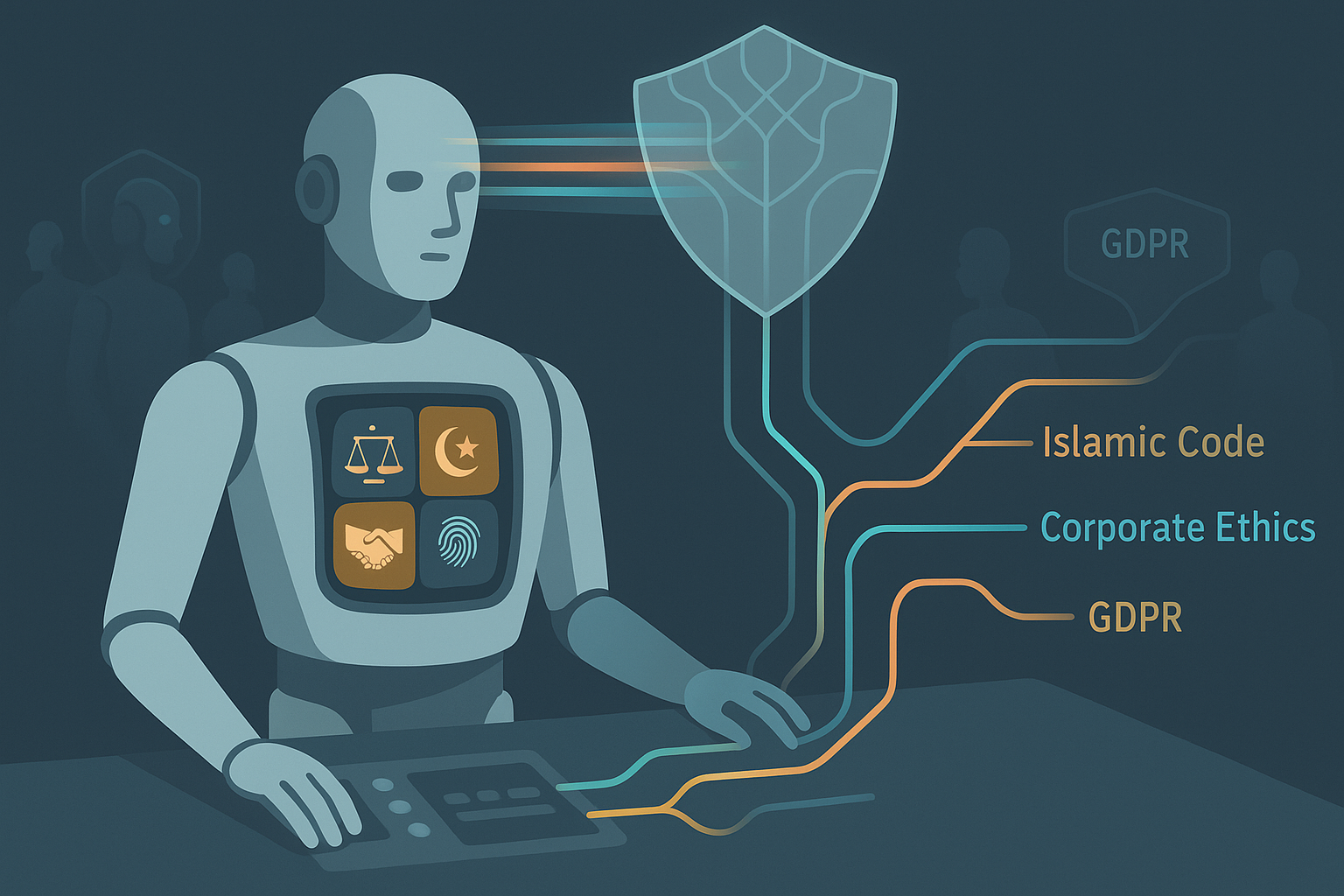

Enter the idea of a Superego layer: not merely a psychoanalytic metaphor, but a modular, programmable conscience that governs AI behavior. Proposed by Nell Watson et al., this approach treats moral reasoning and legal compliance not as traits baked into the LLM itself but as a runtime overlay, a supervisory mechanism that monitors, evaluates, and modulates outputs according to a predefined value system.

🧩 The Architecture: Adding Moral Middleware to Intelligent Agents

The Superego architecture wraps around autonomous agents, interposing a governance layer between their cognitive capabilities and their real-world actions. This separation echoes best practices in software architecture, where business logic is decoupled from interface or execution.

Technically, the Superego can be realized using:

- Function-calling APIs (e.g., OpenAI tools, LangChain agents)

- Policy engines like Open Policy Agent (OPA)

- Logging and red-teaming subsystems for monitoring compliance and robustness

At runtime, the Superego acts as a moral validator: it scans candidate actions or outputs and compares them against the selected constitution. If a proposed response violates a rule, the Superego can:

- Block or modify it

- Escalate to a human

- Provide an explanation or log the violation for auditing
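
The three responses above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the `Superego`, `Rule`, `Decision`, and `Verdict` names, the keyword-based checks, and the example rules are all assumptions made for this sketch. A real deployment would more plausibly delegate the checks to a policy engine or a classifier.

```python
import re
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class Verdict(Enum):
    ALLOW = "allow"
    MODIFY = "modify"
    BLOCK = "block"
    ESCALATE = "escalate"


@dataclass
class Rule:
    name: str
    violates: Callable[[str], bool]      # True when the text breaks this rule
    on_violation: Verdict                # what the Superego does about it
    rewrite: Optional[Callable[[str], str]] = None  # only used for MODIFY


@dataclass
class Decision:
    verdict: Verdict
    output: Optional[str]                # None when the output is withheld
    explanation: str


@dataclass
class Superego:
    constitution: list[Rule]
    audit_log: list[dict] = field(default_factory=list)

    def review(self, candidate: str) -> Decision:
        output, notes = candidate, []
        for rule in self.constitution:
            if not rule.violates(output):
                continue
            # Log every violation for later auditing.
            self.audit_log.append({"rule": rule.name, "action": rule.on_violation.value})
            if rule.on_violation is Verdict.MODIFY and rule.rewrite is not None:
                output = rule.rewrite(output)
                notes.append(f"rewritten by '{rule.name}'")
                continue
            # BLOCK and ESCALATE withhold the output; a MODIFY rule without
            # a rewrite function falls back to BLOCK.
            verdict = Verdict.BLOCK if rule.on_violation is Verdict.MODIFY else rule.on_violation
            return Decision(verdict, None, f"violates '{rule.name}'")
        if notes:
            return Decision(Verdict.MODIFY, output, "; ".join(notes))
        return Decision(Verdict.ALLOW, output, "no rule violated")


# Hypothetical example rules, chosen only to exercise each branch.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
demo = Superego(constitution=[
    Rule("no-credentials", lambda t: "password" in t.lower(), Verdict.BLOCK),
    Rule("redact-email", lambda t: bool(EMAIL.search(t)), Verdict.MODIFY,
         lambda t: EMAIL.sub("[redacted]", t)),
    Rule("large-transfer", lambda t: "wire transfer" in t.lower(), Verdict.ESCALATE),
])
```

Calling `demo.review(text)` returns a `Decision` whose `explanation` field names the rule that fired. In this sketch, rules are applied in list order and a rewritten output is re-checked against the remaining rules, which is one possible design choice among several.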

🧭 Codifying Values: Dynamic Constitutions for a Diverse World

The hallmark of this model is its support for pluralism and personalization. Rather than training one universal alignment model, developers or users can choose from a library of machine-readable constitutions, such as:

- Western liberal norms emphasizing free expression and personal autonomy

- Islamic ethics, which emphasize intention (niyyah), modesty, and communal harmony

- Corporate governance frameworks aligned with industry regulation or brand values

- Regional legal codes such as the EU's GDPR or the California Consumer Privacy Act (CCPA)

Each constitution encodes value rules, constraints, and exceptions. The Superego may also resolve conflicts between them by applying a meta-rule hierarchy, akin to constitutional jurisprudence.
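
One simple way to picture a meta-rule hierarchy is a strict precedence order: the highest-ranking constitution that has an opinion on an action decides it. The sketch below assumes exactly that and nothing more. The `Constitution` class, the `resolve` function, the precedence numbers, and the example actions are hypothetical, and the source does not specify how conflicts are actually resolved.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Constitution:
    name: str
    precedence: int            # lower number = higher authority (assumed meta-rule)
    rulings: dict[str, bool]   # action -> True (permitted) or False (forbidden)

    def ruling(self, action: str) -> Optional[bool]:
        # None means this constitution is silent on the action.
        return self.rulings.get(action)


def resolve(action: str, constitutions: list[Constitution],
            default: bool = False) -> tuple[bool, str]:
    """Return (permitted, deciding source) for an action."""
    for constitution in sorted(constitutions, key=lambda c: c.precedence):
        verdict = constitution.ruling(action)
        if verdict is not None:
            return verdict, constitution.name
    # No constitution speaks to the action: fall back to the default (deny).
    return default, "default"


# Hypothetical library: law outranks corporate policy, which outranks the user.
library = [
    Constitution("user-preferences", 2, {"use_informal_tone": True,
                                         "summarize_in_french": True}),
    Constitution("corporate-policy", 1, {"use_informal_tone": False,
                                         "share_customer_data": True}),
    Constitution("privacy-law", 0, {"share_customer_data": False}),
]
```

Here `resolve("share_customer_data", library)` is decided by `privacy-law` even though `corporate-policy` would permit the action, and anything no constitution mentions is denied by default. Real value systems will need richer structure (exceptions, context, weighted trade-offs), so this should be read as the simplest possible baseline.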

🔒 Aligning Safety, Auditability, and Trust

Modern AI safety demands more than just good intentions—it requires verifiable, inspectable safeguards. The Superego layer addresses this by:

- Providing transparency into why an agent acted (or refused to)

- Allowing post-hoc review of questionable behaviors

- Supporting proactive governance, where norms are enforced pre-emptively rather than reactively

In industries like finance, medicine, and law, where explainability and accountability are paramount, this architecture could enable regulatory-grade trust in autonomous systems.
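
To make the transparency and post-hoc review properties concrete, here is a minimal sketch of a tamper-evident audit trail. It chains each record to the previous one with a SHA-256 hash, so a later edit to any entry is detectable. The `AuditTrail` class and its field names are assumptions for illustration; the source does not describe a specific logging format.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(prev_hash: str, payload: dict) -> str:
    # Hash the previous entry's hash together with a canonical JSON payload.
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    entries: list[dict] = field(default_factory=list)

    def record(self, action: str, verdict: str, reason: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        payload = {"action": action, "verdict": verdict, "reason": reason}
        entry = {**payload, "prev": prev_hash, "hash": _digest(prev_hash, payload)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute the chain; any edited or reordered entry breaks it.
        prev_hash = ""
        for entry in self.entries:
            payload = {k: entry[k] for k in ("action", "verdict", "reason")}
            if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, payload):
                return False
            prev_hash = entry["hash"]
        return True

    def refusals(self) -> list[dict]:
        # Post-hoc review: everything the agent was not simply allowed to do.
        return [e for e in self.entries if e["verdict"] != "allow"]
```

A reviewer could call `refusals()` to list every blocked or escalated action along with its stated reason, and `verify()` to check that the log has not been altered since it was written.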

🤖 Toward Agentic Civilization: Why This Model Matters

If today’s LLMs are chatbots, tomorrow’s will be negotiators, planners, and autonomous service agents. These agents will traverse domains with conflicting ethical landscapes: what’s fair in Silicon Valley may be forbidden in Jakarta; what’s safe in fintech may be toxic in mental health.

By decoupling cognition from control, the Superego model empowers AI systems to adapt behavior without retraining core models. It enables multi-agent collaboration, jurisdiction-aware deployment, and user-aligned services—all without the brittleness of hard-coded rules or the opacity of end-to-end fine-tuning.

This is more than alignment. It’s the start of moral operating systems for intelligent machines.

🧪 Challenges and the Road Ahead

While promising, the architecture is still nascent. Among the key challenges:

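- Latency and compute overhead: Evaluating every candidate action at runtime adds latency and cost, which may not scale to high-throughput or real-time agents

- Leakage and circumvention: A capable model might learn to phrase its outputs so that they slip past the Superego's checks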

- Expressive value encoding: How do we translate abstract norms into enforceable code?

- Benchmarking: No standardized metrics yet exist to evaluate the effectiveness of Superego modules

Nevertheless, the authors argue that even partial implementations could significantly improve trust, especially when paired with robust logging, human-in-the-loop fallback, and structured evaluations.
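
In the absence of a standard benchmark, a structured evaluation could start with something as plain as the sketch below: run a guard over a small labeled set and report how often it blocks correctly, how often it misses, and how much time each check costs. The `evaluate_guard` function and the choice of precision, recall, and mean latency as metrics are assumptions for illustration, not metrics proposed in the source.

```python
import time
from typing import Callable


def evaluate_guard(guard: Callable[[str], bool],
                   labeled: list[tuple[str, bool]]) -> dict[str, float]:
    """guard(text) returns True when it would block; labels are (text, should_block)."""
    tp = fp = fn = 0
    start = time.perf_counter()
    for text, should_block in labeled:
        blocked = guard(text)
        if blocked and should_block:
            tp += 1          # correctly blocked
        elif blocked and not should_block:
            fp += 1          # over-blocking
        elif not blocked and should_block:
            fn += 1          # missed violation
    elapsed = time.perf_counter() - start
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "mean_latency_ms": 1000 * elapsed / len(labeled) if labeled else 0.0,
    }
```

Low precision signals over-blocking, low recall signals leakage, and the latency figure gives a first estimate of the runtime overhead discussed above.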

🧠 Closing Thought

In the age of autonomous cognition, morality cannot be an afterthought. The Superego architecture offers a powerful metaphor—and a practical blueprint—for embedding pluralistic, adaptive value systems into our most advanced agents.

We don’t just need smarter machines. We need machines that know when to ask: Should I?

Cognaptus: Automate the Present, Incubate the Future