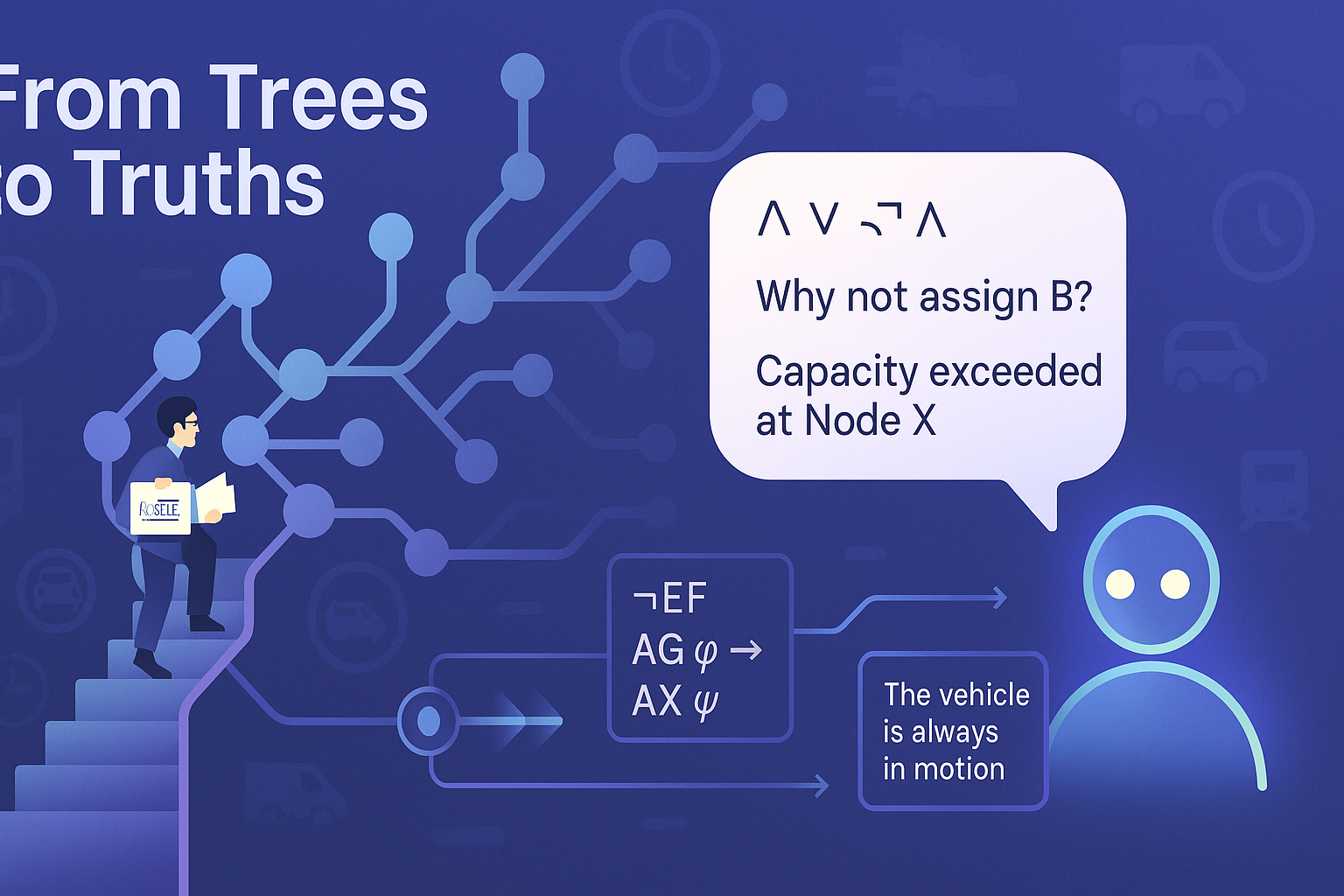

In the quest to make AI more trustworthy, few challenges loom larger than explaining sequential decision-making algorithms like Monte Carlo Tree Search (MCTS). Despite its success in domains from transit scheduling to game playing, MCTS remains a black box to most practitioners, generating decisions from expansive trees of sampled possibilities without accessible rationale. A new framework proposes to change that by fusing LLMs with formal logic to bring transparency and dialogue to this crucial planning tool1.

Why MCTS Needs Explanation

MCTS is prized for its performance under uncertainty, but it’s notoriously opaque. Each decision emerges from navigating thousands of sampled rollouts, making it nearly impossible to trace the “why” behind a specific action. This complexity impedes trust, especially in high-stakes environments like paratransit route planning, where human oversight is non-negotiable.

The Framework: Logic Meets Language

The proposed system combines three ingredients:

- Computation Tree Logic (CTL): A formalism that enables expressing temporal logic over the branching search tree.

- LLM Query Interface: Users pose natural language questions about past MCTS decisions.

- Logic-Backed Response Pipeline: A sequence of modules transforms questions into logical variables and constraints, scores them against the tree, and outputs consistent natural language explanations.

A typical use case might involve a domain expert asking, “Why didn’t the planner assign the vehicle to request B at 10:00 AM?” The system then identifies the relevant node, parses the user intent, converts it into formal logic, checks it against the search tree, and replies with a human-readable rationale referencing real MDP constraints, priorities, or occupancy dynamics.

Background: Three Anchors of Planning Logic

Understanding how this framework functions requires foundational insight into three interconnected domains:

-

Markov Decision Processes (MDPs): At the core of MCTS lies the MDP, a probabilistic model defining state transitions, actions, and rewards. MCTS navigates the policy space by simulating action sequences within this model, forming a decision tree where nodes represent states and branches correspond to actions.

-

Temporal Logic (CTL): Computation Tree Logic is a modal logic used to reason about paths through trees. It allows assertions like “eventually a condition will hold” or “a condition holds on all future paths,” offering a precise way to compare MCTS branches and subtrees beyond surface-level statistics.

-

Prompt-Conditioned LLM Reasoning: By translating natural-language queries into logic-conditioned prompts, LLMs can generate explanations that align with both domain knowledge and sampled data. Instead of hallucinating reasons, the model works with structured, validated inputs that maintain factual consistency.

Query Taxonomy

The system supports 26 natural language query types, categorized across three tiers of reasoning:

| ID | Query Type Example | Logic Tier |

|---|---|---|

| 01 | “What was the capacity at Node X?” | Base-Level |

| 02 | “How many pickups happened in this branch?” | Derived |

| 03 | “Why not assign to Request B?” | Logic Comparison |

| 04 | “Did we ever prioritize short trips?” | Derived |

| 05 | “Was fairness considered here?” | Logic Comparison |

| 06 | “Which vehicle had fewer total delays?” | Logic Comparison |

| 07 | “What was the average wait time in this path?” | Derived |

| 08 | “Was capacity ever exceeded in subtree Y?” | Derived |

| 09 | “Was Request A served on all valid paths?” | Logic Comparison |

| 10 | “Is Node X a terminal state?” | Base-Level |

| 11 | “What’s the longest delay in this branch?” | Derived |

| 12 | “Did vehicle 3 reach request C?” | Base-Level |

| 13 | “Where was the first pickup for vehicle 2?” | Base-Level |

| 14 | “How often was occupancy over 70%?” | Derived |

| 15 | “Which path had the fewest requests served?” | Logic Comparison |

| 16 | “Was request C skipped in this subtree?” | Derived |

| 17 | “When did we exceed the maximum ride time?” | Base-Level |

| 18 | “Compare delays between vehicles A and B” | Logic Comparison |

| 19 | “Did we reassign requests mid-plan?” | Derived |

| 20 | “Was Node Y revisited?” | Base-Level |

| 21 | “Why not delay request D instead?” | Logic Comparison |

| 22 | “What constraints blocked assignment?” | Logic Comparison |

| 23 | “When did fairness override efficiency?” | Logic Comparison |

| 24 | “Did all plans meet the 45-minute threshold?” | Derived |

| 25 | “Was the max capacity always respected?” | Derived |

| 26 | “What’s the deepest branch in the tree?” | Derived |

Evidence-Based Reasoning in Three Tiers

The system classifies all queries into one of three logic-based evidence types:

- Base-Level: Single-node facts, such as capacity at a given decision point.

- Derived: Aggregations, such as average wait times across subtrees.

- Logic Comparison: More abstract claims involving comparisons across branches using CTL.

An LLM generates logic expressions from a few-shot prompt pool matched to the 26 query types. These expressions are then parsed, evaluated, and used to build responses with traceable reasoning.

Domain Knowledge via RAG

For general MCTS or domain-related questions, a small Retrieval-Augmented Generation (RAG) module retrieves background knowledge (e.g., constraints of paratransit systems) from a curated 3,000-word base and embeds relevant facts into the LLM prompt only if similarity scores pass a quality threshold. This helps answer questions like “What does it mean to optimize for fairness in assignments?”

Here are three real examples where the RAG module helped disambiguate or supplement LLM answers:

| Query | Retrieved Fact | Explanation Generated |

|---|---|---|

| “Why not assign request B?” | “Requests beyond 45-minute delay threshold are deprioritized.” | “Assigning request B would have violated the system’s 45-minute max delay rule.” |

| “What’s the fairness criteria?” | “Fairness is defined as minimizing the variance in wait time.” | “The plan aimed to equalize rider experience by minimizing wait time variance.” |

| “Was this a valid vehicle handoff?” | “Handoffs are valid only if no penalty exceeds 10 mins.” | “This handoff was valid since all delay penalties remained within the 10-min window.” |

Real Results: Numbers That Speak

Quantitative evaluation across 620 handcrafted queries demonstrates how much factual grounding matters. Compared with plain GPT-4 or Llama3.1, this framework (using the same LLM backbones) achieved:

- FactCC Improvement: 1.6× better (GPT-4), 2.4× better (Llama3.1)

- BERTScore Boost: Up to 7.9× increase

In other words, it’s not just talking more clearly—it’s talking more truthfully.

| Model | FactCC @1 | BERTScore @1 |

|---|---|---|

| GPT-4 | 42.31% | 40.00% |

| MCTS (GPT) | 72.12% | 88.46% |

| Llama3.1 | 25.77% | 06.15% |

| MCTS (Llama) | 67.88% | 86.54% |

Broader Implications

This approach represents a hybrid logic-data path forward for xAI: combine LLMs’ fluency with the rigor of symbolic reasoning and domain constraints. Unlike typical natural language explanations, which risk sounding plausible but wrong, logic-informed explanations remain anchored to the real dynamics of the underlying MDP.

Such a system could scale beyond MCTS to other complex control systems—or become a building block for safe AI agents that explain before they act.

Relation to Previous Work

You might be thinking this sounds familiar—and you’d be right. Our April 2 article, “Rules of Engagement: Why LLMs Need Logic to Plan”2, covered the theoretical promise of integrating LLMs with logic for planning systems. That article set the philosophical and technical foundation: logic ensures verifiability, and language ensures usability.

So, what’s new here?

Innovations in This Work:

- Post-Hoc Explanation over MCTS Traces: Instead of focusing on planning with logic, this article highlights explaining past plans—a critical leap for auditability.

- Query-to-Logic Module with Prompt Pools: This work introduces a taxonomy of 26 natural-language query types and associates each with prompt logic templates.

- Use of CTL on Actual MCTS Trees: Rather than simulate toy reasoning paths, this system applies logic to real sampled rollouts.

- Quantitative Truthfulness Metrics: We evaluate explanations with FactCC and BERTScore—something the April 2 article did not.

Together, these enhancements translate earlier theory into operational, measured utility.

Final Thoughts

As AI systems increasingly plan, recommend, and decide on our behalf, making their reasoning visible isn’t just a research goal—it’s a social requirement. This work brings us a step closer by making sequential planning interpretable without dumbing it down. It speaks logic, but in our language.

Cognaptus: Automate the Present, Incubate the Future.